Improving Factual Consistency of Abstractive Summarization Via Question Answering



Recommended publications


Fury's Comeback Falls Just Short in Los Angeles

GOBSMACKED! By William Dettloff

Tyson Fury made but two mistakes Saturday night against Deontay Wilder, and those mistakes – along with an indefensible scorecard turned in by Alejandro Rochin – resulted in a mostly derided draw verdict in front of a purported 17,698 at The Staples Center in Los Angeles. Fury – he of the nearly three-year layoff, manic depression, confessed drug and alcohol binges and morbid obesity – boxed Wilder ragged for the majority of the fight, displaying a quickness, dexterity and nimbleness of foot that belied his 6’9”, 256-pound frame. He consistently ducked, rolled with or slipped under Wilder’s attacks, which admittedly were crude at best, and feinted Wilder into knots before stepping forward with short, crisp combinations.

“I’m what you call a pro athlete that loves to box,” Fury said afterward. “I don’t know anyone on the planet that can move like that. That man is a fearsome puncher and I was able to avoid that. The world knows I won the fight,” he said.

Such was Fury’s early dominance that there was perhaps one round out of the first six that the clear-eyed and uncorrupted could reasonably give to Wilder. Rochin gifted him the first four, which at the end contributed to an inexplicable 115-111 card. Judges Robert Tapper and Phil Edwards scored it a more reasonable 114-112 for Fury and 113-113, respectively. RINGSIDE SEAT saw Fury the winner by a 115-111 margin.

Beats and Sky Sports #BeHeard: Brand Integration Into the Fight of the Century

SUCCESS STORY

Beats wanted to establish their brand as the common denominator between music and sport. Partnering with Sky Sports, Beats gave fans an insider view of the biggest boxing event of the century through exclusive content in the build-up and on the night of the fight, dominating all social conversation. The results were knock-out across engagement, awareness and, most importantly, sales.

18% increase in ecommerce sales; 350% increase in Beats website visits; 24.4m views across all AJ #BeHeard content on social media.

Challenge: Beats had identified the power of music within sport as an untapped opportunity, leading to the introduction of the winning formula, ‘Music + Sports = Beats’. The brand wanted to associate with a highly anticipated sporting event to build brand awareness and engagement with fans.

Insight: On 29th April 2017, Anthony Joshua (AJ), The Future Great, took on the fight of his life against Wladimir Klitschko, The Current Great. Rising star of heavyweight boxing and brand ambassador, Anthony Joshua, presented Beats and Sky with a huge opportunity. As the official fight broadcaster, Sky Sports owned the connection between the ring and the fans, offering the perfect partner and platform for Beats.

Idea: Beats by Dre and Sky Sports partnered to share content through their joint investment in Anthony Joshua (AJ). With exclusive behind-the-scenes content filmed in the build-up to the fight and on the night itself, the content would be distributed across linear, digital and social platforms. By partnering with Sky, Beats had the platform to launch a campaign and #BeHeard.

“This Is a Terrific Book from a True Boxing Man and the Best Account of the Making of a Boxing Legend.” Glenn McCrory, Sky Sports

“This is a terrific book from a true boxing man and the best account of the making of a boxing legend.” Glenn McCrory, Sky Sports

Contents: About the author; Acknowledgements; Foreword; Introduction; Fight No 1: Emanuele Leo; Fight No 2: Paul Butlin; Fight No 3: Hrvoje Kisicek; Fight No 4: Dorian Darch; Fight No 5: Hector Avila; Fight No 6: Matt Legg; Fight No 7: Matt Skelton; Fight No 8: Konstantin Airich; Fight No 9: Denis Bakhtov; Fight No 10: Michael Sprott; Fight No 11: Jason Gavern; Fight No 12: Raphael Zumbano Love; Fight No 13: Kevin Johnson; Fight No 14: Gary Cornish; Fight No 15: Dillian Whyte; Fight No 16: Charles Martin; Fight No 17: Dominic Breazeale; Fight No 18: Eric Molina; Fight No 19: Wladimir Klitschko; Fight No 20: Carlos Takam; Anthony Joshua Professional Record; Index

Introduction

GROWING UP, boxing didn’t interest ‘Femi’. ‘Never watched it,’ said Anthony Oluwafemi Olaseni Joshua, to give him his full name. He was too busy climbing things! ‘As a child, I used to get bored a lot,’ Joshua told Sky Sports. ‘I remember being bored, always out. I’m a real street kid. I like to be out exploring, that’s my type of thing. Sitting at home on the computer isn’t really what I was brought up doing. I was really active, climbing trees, poles and in the woods.’ He also ran fast. Joshua reportedly ran 100 metres in 11 seconds when he was 14 years old, had a few training sessions at Callowland Amateur Boxing Club and scored lots of goals on the football pitch. One season, he scored …

WORLD BOXING ASSOCIATION GILBERTO JESUS MENDOZA PRESIDENT OFFICIAL RATINGS AS OF FEBRUARY Based on Results Held from 01st to February 28th, 2019

Ocean Business Plaza Building, Av. Aquilino de la Guardia and 47 St., 14th Floor, Office 1405, Panama City, Panama. Phone: +507 203-7681. www.wbaboxing.com

WORLD BOXING ASSOCIATION. GILBERTO JESUS MENDOZA, PRESIDENT. OFFICIAL RATINGS AS OF FEBRUARY 2019. Based on results held from 01st to February 28th, 2019.

CHAIRMAN: MIGUEL PRADO SANCHEZ - PANAMA
VICE CHAIRMAN: GUSTAVO PADILLA - PANAMA
MEMBERS: GEORGE MARTINEZ - CANADA; JOSE EMILIO GRAGLIA - ARGENTINA; MARIANA BORISOVA - BULGARIA

The WBA President Gilberto Jesus Mendoza has been acting as interim ranking director for the compilation of this ranking. The current director Miguel Prado is on a leave of absence for medical reasons.

HEAVYWEIGHT (Over 200 Lbs / Over 90.71 Kgs): WBA SUPER CHAMPION: ANTHONY JOSHUA GBR; WORLD CHAMPION: MANUEL CHARR LIB; WBA GOLD CHAMPION: JOE JOYCE GBR; WBC: DEONTAY WILDER; IBF: ANTHONY JOSHUA; WBO: ANTHONY JOSHUA; 1. TREVOR BRYAN INTERIM CHAMP USA; 2. JARRELL MILLER USA; 3. FRES OQUENDO PUR; 4. DILLIAN WHYTE JAM; 5. OTTO WALLIN SWE

CRUISERWEIGHT (200 Lbs / 90.71 Kgs): WBA SUPER CHAMPION: OLEKSANDR USYK UKR; WORLD CHAMPION: BEIBUT SHUMENOV KAZ; WBA GOLD CHAMPION: ARSEN GOULAMIRIAN ARM; WBC: OLEKSANDR USYK; IBF: OLEKSANDR USYK; WBO: OLEKSANDR USYK; 1. YUNIEL DORTICOS CUB; 2. ANDREW TABITI USA; 3. RYAD MERHY INT CIV; 4. YURY KASHINSKY RUS; 5. MURAT GASSIEV RUS

LIGHT HEAVYWEIGHT (175 Lbs / 79.38 Kgs): WORLD CHAMPION: DMITRY BIVOL RUS; WBC: OLEKSANDR GVOZDYK; IBF: ARTUR BETERBIEV; WBO: SERGEY KOVALEV; 1. MARCUS BROWNE INTERIM CHAMP USA; 2. SULLIVAN BARRERA CUB; 3. FELIX VALERA LAC DOM; 4. SVEN FORNLING SWE; 5. JOSHUA BUATSI INT GHA

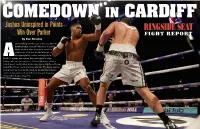

Joshua Uninspired in Points Win Over Parker FIGHT REPORT by Don Stradley

FIGHT REPORT By Don Stradley

Anyone walking into the press conference after the Anthony Joshua – Joseph Parker bout in Cardiff, Wales would’ve been shocked by the mood of the room. It felt less like the aftermath of a heavyweight title contest, and more like a bunch of grumpy men waiting their turn before a judge. Joshua, who won a unanimous 12-round decision, smiled a lot but wasn’t exactly elated. Parker, who looked slightly scuffed up around the left eye, could only shrug and say, “The bigger and better man won.” As for going 12 rounds for the first time in his pro career, Joshua said he felt good. More smiling and shrugging followed this. It was a shrug fest.

By winning, Joshua added another alphabet title to his collection – he has three now – and he said he wants all the belts because it would make him “the most powerful man at the table,” a reference, perhaps, to Deontay Wilder, the American heavyweight who also owns a title belt. Though Joshua and Parker had inspired 78,000 customers to crowd into Principality Stadium, Wilder was on the minds of many.

… was focused. I controlled him behind the jab, and the main thing is I am the unified champion of the world. I thought it was hard, but going the 12 rounds was light work.”

It was a scrappy bout. Parker used his own jab to keep things close during the early part of the fight, but by the middle rounds it appeared Joshua was landing more often. Parker dashed in and out, doing what shorter fighters are supposed …

World Boxing Council

WORLD BOXING COUNCIL / CONSEJO MUNDIAL DE BOXEO
RATINGS COMMITTEE / COMITE DE CLASIFICACIONES
RATINGS AS OF FEBRUARY - 2019 / CLASIFICACIONES DEL MES DE FEBRERO - 2019

WBC Address: Riobamba # 835, Col. Lindavista 07300 – CDMX, México. Telephones: (525) 5119-5274 / 5119-5276 – Fax (525) 5119-5293

HEAVYWEIGHT (+200 - +90.71)

CHAMPION: DEONTAY WILDER (US). WON TITLE: January 17, 2015. LAST DEFENCE: December 1, 2018. LAST COMPULSORY: November 4, 2017.

WBC SILVER CHAMPION: Dillian Whyte (Jamaica/GB); WBC INT. CHAMPION: Filip Hrgovic (Croatia); IBF CHAMPION: Anthony Joshua (GB); WBO CHAMPION: Anthony Joshua (GB)

Contenders: 1 Dillian Whyte (Jamaica/GB) SILVER; 2 Tyson Fury (GB); 3 Luis Ortiz (Cuba); 4 Dominic Breazeale (US); 5 Adam Kownacki (US); 6 Joseph Parker (New Zealand); 7 Alexander Povetkin (Russia); 8 Agit Kabayel (Germany) EBU; 9 Kubrat Pulev (Bulgaria); 10 Oscar Rivas (Colombia/Canada) NABF; 11 Carlos Takam (Cameroon); 12 Tony Yoka (France) * CBP/P; 13 Dereck Chisora (GB); 14 Charles Martin (US); 15 Sergey Kuzmin (Russia); 16 Filip Hrgovic (Croatia) INTL; 17 Joe Joyce (GB) COMM; 18 Andy Ruiz Jr.

Note: all boxers rated within the top 15 are required to register with the WBC Clean Boxing Program at: www.wbcboxing.com

Continental Federations Champions: ABCO: (not listed); ABU: Tshibuabua Kalonga (Congo/Germany); BBBofC: Hughie Fury (GB); CISBB: (not listed); EBU: Agit Kabayel (Germany); FECARBOX: Abigail Soto (Dom. R.); FECONSUR: (not listed); NABF: Oscar Rivas (Colombia/Canada); OPBF: Kyotaro Fujimoto (Japan)

Affiliated Titles Champions: Commonwealth: Joe Joyce (GB); Continental Americas: (not listed); Francophone: Dillon Carman (Canada); Latino: Tyrone Spong (Surinam); Mediterranean: Petar Milas (Croatia); USNBC: (not listed); YOUTH: Daniel Dubois (GB)

NA = Not Available

HEAVYWEIGHT (OVER 200 LBS) CH Anthony Joshua ENG 1 NOT

HEAVYWEIGHT (OVER 200 LBS): CH Anthony Joshua ENG; 1 NOT RATED; 2 Charles Martin USA; 3 Oleksandr Usyk UKR; 4 Michael Hunter USA; 5 Filip Hrgovic HRV; 6 Joseph Parker NZL; 7 Agit Kabayel GER; 8 Tony Yoka FRA; 9 Uaine Fa Jr NZL; 10 Luis Ortiz CUB; 11 Kubrat Pulev BGR; 12 Joseph Joyce ENG; 13 Demsey Mc Kean AUS; 14 Otto Wallin SWE; 15 Zhang Zhilei CHN

CRUISERWEIGHT (200 LBS): CH Mairis Briedis LVA; 1 NOT RATED; 2 NOT RATED; 3 Jai Opetaia AUS; 4 Yury Kashinsky RUS; 5 Krzysztof Wlodarczyk POL; 6 Evgeniy Tishchenko RUS; 7 David Light NZL; 8 Dmitry Kudryashov RUS; 9 Artur Mann KAZ; 10 Tommy Mc Carthy ENG; 11 Fabio Turchi ITA; 12 Richard Riakporhe ENG; 13 Mateusz Masternak POL; 14 Michal Cieslak POL; 15 Bilal Laggoune BEL

LT. HEAVYWEIGHT (175 LBS): CH Artur Beterbiev RUS; 1 Meng Fanlong CHN; 2 NOT RATED; 3 Joshua Buatsi GHA; 4 Lyndon Arthur ENG; 5 Adam Deines GER; 6 Ricards Bolotniks LVA; 7 Umar Salamov UKR; 8 Craig Richards ENG; 9 Mathieu Bauderlique FRA; 10 Nick Hanning GER; 11 Anthony Yarde ENG; 12 Badou Jack SWE; 13 Ali Izmailov RUS; 14 Mansur Elsaev RUS; 15 Blake Caparello AUS

S. MIDDLEWEIGHT (168 LBS): CH Caleb Plant USA; 1 NOT RATED; 2 NOT RATED; 3 Evgeny Shvedenko RUS; 4 Zach Parker ENG; 5 Aidos Yerbossynuly KAZ; 6 Jurgen Brahmer GER; 7 Leon Bunn GER; 8 Daniel Jacobs USA; 9 Caleb Truax USA; 10 Anthony Dirrell USA; 11 Leon Bauer GER; 12 Vladimir Shishkin RUS; 13 Daniele Scardina ITA; 14 Aslambek Idigov RUS; 15 Alantez Fox USA

NENT Group to Show Conor McGregor's Return to UFC

• NENT Group to show Conor McGregor’s return to UFC to challenge UFC Lightweight champion Khabib Nurmagomedov
• NENT Group secures exclusive Nordic rights to Anthony Joshua’s defence of four world heavyweight boxing belts against Alexander Povetkin
• NENT Group shows more than 50,000 hours of the world’s best live sporting action every year

Nordic Entertainment Group (NENT Group) will provide exclusive pan-Nordic coverage of former UFC champion Conor McGregor’s highly anticipated return to the octagon to take on UFC Lightweight champion Khabib Nurmagomedov. NENT Group has also secured the exclusive Nordic media rights to the world heavyweight championship boxing match between Anthony Joshua and Alexander Povetkin. Both fights will be shown live as pay-per-view events on NENT Group’s Viaplay streaming service this autumn.

One of this year’s most eagerly awaited UFC events, the title showdown between McGregor (21-3, 18 KOs) and Nurmagomedov (26-0, 8 KOs) at the T-Mobile Arena in Las Vegas on 6 October marks McGregor’s first contest since August 2017’s ‘Money Fight’ boxing match against Floyd Mayweather Jr. Nurmagomedov is the reigning UFC Lightweight champion and currently holds the longest undefeated streak (26 wins) in MMA history.

NENT Group has also acquired the exclusive Nordic media rights to the world heavyweight championship boxing fight between Anthony Joshua (21-0, 20 KOs) and Alexander Povetkin (34-1, 24 KOs) at London’s Wembley Stadium on 22 September, when the WBA (Super), IBF, WBO and IBO heavyweight belts will all be on the line.

Each fight will be shown as a pay-per-view event on Viaplay and will be priced at DKK 499 in Denmark, EUR 59.95 in Finland, NOK 499 in Norway and SEK 499 in Sweden.

HEAVYWEIGHT (OVER 200 LBS) CH Wladimir Klitschko UKR 1

HEAVYWEIGHT (OVER 200 LBS): CH Wladimir Klitschko UKR; 1 Vyacheslav Glazkov UKR; 2 NOT RATED; 3 Tyson Fury ENG; 4 Charles Martin USA; 5 Erkan Teper GER; 6 Steve Cunningham USA; 7 Artur Szpilka POL; 8 Lucas Browne AUS; 9 Anthony Joshua ENG; 10 Carlos Takam FRA; 11 Bryant Jennings USA; 12 Bermane Stiverne HTI; 13 Mariusz Wach POL; 14 Andy Ruiz Jr MEX; 15 Andrey Fedosov RUS

CRUISERWEIGHT (200 LBS): CH Victor Ramirez ARG; 1 NOT RATED; 2 NOT RATED; 3 Murat Gassiev RUS; 4 Yoan Pablo Hernandez GER; 5 Rakhim Chakhkiev RUS; 6 Micki Nielsen DNK; 7 Marco Huck GER; 8 Tony Bellew ENG; 9 Dmitry Kudryashov RUS; 10 Ola Afolabi USA; 11 Mateusz Masternak POL; 12 Noel Gevor ARM; 13 Ovill Mc Kenzie ENG; 14 Iago Kiladze GEO; 15 Isiah Thomas USA

LT. HEAVYWEIGHT (175 LBS): CH Sergey Kovalev RUS; 1 NOT RATED; 2 Artur Beterbiev RUS; 3 Bernard Hopkins USA; 4 Erik Skoglund SWE; 5 Andrzej Fonfara POL; 6 Sean Monaghan USA; 7 Jean Pascal CAN; 8 Sullivan Barrera USA; 9 Eleider Alvarez CAN; 10 Igor Mikhalkin RUS; 11 Yuniesky Gonzalez CUB; 12 Edwin Rodriguez USA; 13 Nadjib Mohammedi FRA; 14 Karo Murat GER; 15 Marcus Browne USA

S. MIDDLEWEIGHT (168 LBS): CH James De Gale ENG; 1 Jose Uzcategui VEN; 2 NOT RATED; 3 Gilberto Ramirez MEX; 4 Rogelio Medina USA; 5 Rocky Fielding ENG; 6 Patrick Nielsen DNK; 7 Andre Dirrell USA; 8 Jesse Hart USA; 9 Lucian Bute CAN; 10 Callum Smith ENG; 11 J'Leon Love USA; 12 Tyron Zeuge GER; 13 Julius Jackson USA; 14 Zac Dunn AUS; 15 Hadillah Mohoumadi FRA

HEAVYWEIGHT (OVER 200 LBS) CH Andy Ruiz Jr MEX 1 Kubrat Pulev

HEAVYWEIGHT (OVER 200 LBS): CH Andy Ruiz Jr MEX; 1 Kubrat Pulev BGR; 2 NOT RATED; 3 Adam Kownacki USA; 4 Agit Kabayel GER; 5 Anthony Joshua ENG; 6 Tyson Fury ENG; 7 Michael Hunter USA; 8 Gerald Washington USA; 9 Filip Hrgovic HRV; 10 Alexander Povetkin RUS; 11 Joseph Parker NZL; 12 Daniel Dubois ENG; 13 Tom Schwarz GER; 14 Sergey Kuzmin RUS; 15 Otto Wallin SWE

CRUISERWEIGHT (200 LBS): CH Yuniel Dorticos CUB; 1 NOT RATED; 2 NOT RATED; 3 Yury Kashinsky RUS; 4 Kevin Lerena ZAF; 5 Ruslan Fayfer RUS; 6 Firat Arslan GER; 7 Andrew Tabiti USA; 8 Bilal Laggoune BEL; 9 Yves Ngabu BEL; 10 Jai Opetaia AUS; 11 Lyndon Arthur ENG; 12 Noel Gevor ARM; 13 Lawrence Okolie ENG; 14 Evgeniy Tishchenko RUS; 15 Krzysztof Wlodarczyk POL

LT. HEAVYWEIGHT (175 LBS): CH Artur Beterbiev RUS; 1 Meng Fanlong CHN; 2 NOT RATED; 3 Dominic Bosel GER; 4 Joshua Buatsi GHA; 5 Umar Salamov UKR; 6 Sven Fornling SWE; 7 Callum Johnson ENG; 8 Leon Bunn GER; 9 Adam Deines GER; 10 Jesse Hart USA; 11 Maksim Vlasov RUS; 12 Felix Valera DOM; 13 Igor Mikhalkin RUS; 14 Karo Murat GER; 15 Enrico Koelling GER

S. MIDDLEWEIGHT (168 LBS): CH Caleb Plant USA; 1 NOT RATED; 2 NOT RATED; 3 Vincent Feigenbutz GER; 4 Caleb Truax USA; 5 Jose Uzcategui VEN; 6 Fedor Chudinov RUS; 7 Chris Eubank Jr ENG; 8 Zach Parker ENG; 9 Stefan Hartel GER; 10 Evgeny Shvedenko RUS; 11 Jurgen Brahmer GER; 12 Alfredo Angulo MEX; 13 Leon Bauer GER; 14 Zac Dunn AUS; 15 Erik Bazinyan CAN

The Assassinator - ANTHONY JOSHUA by RYAN

The assassinator - ANTHONY JOSHUA BY RYAN

Who is he?

Anthony Oluwafemi Olaseni Joshua (MBE) is a British professional boxer. He is currently a unified world heavyweight champion, having held the IBF title since 2016, and the WBA and IBO titles since 2017.

Born: 15 October 1989 (age 28), Watford
Height: 1.98 m
Weight: 113 kg
Reach: 82 in (208 cm)
Parents: Yeta Odusanya, Robert Joshua
Siblings: Jacob Joshua, Janet Joshua, Loretta Joshua

Joshua made astonishing progress through the amateur ranks.

Lavish lifestyle?

Anthony Joshua lives the life of a millionaire, but he does not spend a penny doing so, as his sponsors pay for everything, such as his private jets, expensive clothing, cars, nutrition and sports gear.

Did you know he still lives in a council house with his mother and son? He does not live with his ex-partner; he hopes to leave all he has to his son. He still lives in the council house so he does not forget where he comes from.

Although he travels all around the world, the athlete still has a very healthy diet and he still does training and conditioning while abroad. Anthony Joshua used to get in a lot of trouble when he was younger, and then he joined Finchley ABC and his boxing coach took him off the streets and …

Past fights and fights to come

AJ vs. Charles Martin, O2 Arena, London, 09/04/16. TIME 1:32, ROUND 02, BY KO
AJ vs. Wladimir Klitschko, Wembley Stadium, London, 29/04/17. TIME 02:25, ROUND 11, BY TKO
Saturday, 31 March
AJ vs. Dominic Breazeale, O2 Arena, London, 25/06/16. TIME 1:01, ROUND 07, BY TKO

Anthony Joshua & Tyson Fury Agree to Two Fights

British World Heavyweight Champions Anthony Joshua and Tyson Fury have agreed to a two-fight deal, says promoter Eddie Hearn. Talks over an historic bout for the undisputed title began in early May and have been finalised today. Joshua, 30, holds the WBA, IBF and WBO belts while 31-year-old Fury is the WBC champion. The winner would bring all the belts together, known as “unifying the division”, for the first time since Britain’s Lennox Lewis in 1999. The successful athlete would become the undisputed World Heavyweight Champion and hold all four belts at the same time.

"It's fair to say that Joshua and Fury are in agreement about the financial terms of the fight," Hearn, who is Joshua's promoter, told Sky Sports News. The actual amounts have not been disclosed, but the total amount of money for the fight is thought to be around £150 million, which would be split 50/50 for the first fight and then 60/40 in favour of the winner of the second fight.

Fury expressed his delight at the news in a social media post. The Manchester-born boxer said he would fight Joshua in 2021, but would first have to overcome the "hurdle in the road", Deontay Wilder, in a third meeting. Fury is contracted to fight the American, who he beat to win the WBC title in February. Joshua, who reclaimed his world titles in December, has to face his mandatory challenger, Bulgaria's Kubrat Pulev, when the sport fully resumes after the coronavirus shutdown.