International Journal of Computer Science in Sport: A Team-Compatibility Decision Support System for the National Football League

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


NFL 100 All-Time Team

FOR IMMEDIATE RELEASE Alex Riethmiller – 310.840.4635 NFL – 11/18/19 NFL RELEASES RUNNING BACK FINALISTS FOR THE ‘NFL 100 ALL-TIME TEAM’ 24 Transformative Rushers Kick Off Highly Anticipated Reveal The ‘NFL 100 All-Time Team’ Premieres Friday, November 22 at 8:00 PM ET on NFL Network

The NFL is proud to announce the 24 running backs that have been named as finalists for the NFL 100 All-Time Team. First announced on tonight’s edition of Monday Night Countdown on ESPN, the NFL 100 All-Time Team running back finalist class accounts for 14 NFL MVP titles and combines for 2,246 touchdowns. Of the 24 finalists at running back, 23 are enshrined in the Pro Football Hall of Fame in Canton, OH, while one is still adding to his legacy on the field as an active player.

The NFL 100 All-Time Team premieres on November 22 and continues for six weeks through Week 17 of the regular season. Rich Eisen, Cris Collinsworth and Bill Belichick will reveal the NFL 100 All-Time Team selections by position in each episode beginning at 8:00 PM ET every Friday night, followed by a live reaction show hosted by Chris Rose immediately afterward, exclusively on NFL Network. Of the 24 running back finalists, Friday’s premiere of the NFL 100 All-Time Team will name 12 individuals as the greatest running backs of all time. The process to select and celebrate the historic team began in early 2018 with the selection of a 26-person blue-ribbon voting panel.

The Disparate Impact of the NFL's Use of the Wonderlic Intelligence Test and the Case for a Football-Specific Test

University of Connecticut, OpenCommons@UConn, Connecticut Law Review, School of Law, 2009. Fourth and Short on Equality: The Disparate Impact of the NFL's Use of the Wonderlic Intelligence Test and the Case for a Football-Specific Test. Note. Christopher Hatch.

Recommended Citation: Hatch, Christopher, "Fourth and Short on Equality: The Disparate Impact of the NFL's Use of the Wonderlic Intelligence Test and the Case for a Football-Specific Test" (2009). Connecticut Law Review. 38. https://opencommons.uconn.edu/law_review/38

CONNECTICUT LAW REVIEW, VOLUME 41, JULY 2009, NUMBER 5

Prior to being selected in the NFL draft, a player must undergo a series of physical and mental evaluations, including the Wonderlic Intelligence Test. The twelve-minute test, which measures “cognitive ability,” has been shown to have a disparate impact on minorities in various employment situations. This Note contends that the NFL’s use of the Wonderlic also has a disparate impact because of its effect on a player’s draft status and ultimately his salary. The test cannot be justified by business necessity because there is no correlation between a player’s Wonderlic score and their on-field performance. As such, this Note calls for the creation of a football-specific intelligence test that would be less likely to have a disparate impact than the Wonderlic, while also being sufficiently job-related and more reliable in predicting a player’s success.

Facts & Figures on 2007 NFL Draft

NATIONAL FOOTBALL LEAGUE 280 Park Avenue, New York, NY 10017 (212) 450-2000 * FAX (212) 681-7573 WWW.NFLMedia.com Joe Browne, Executive Vice President-Communications Greg Aiello, Vice President-Public Relations FOR USE AS DESIRED 4/18/07 FACTS & FIGURES ON 2007 NFL DRAFT WHAT: 72nd Annual National Football League Player Selection Meeting. WHERE: Radio City Music Hall, 1260 6th Avenue, New York City (Between 50th and 51st Streets). WHEN: Noon ET, Saturday, April 28 (Rounds 1-3). 11:00 AM ET, Sunday, April 29 (Rounds 4-7). The first three rounds will conclude on Saturday by approximately 10:00 PM ET. In 2006, the first round consumed four hours and 48 minutes; the second, three hours and 10 minutes; and the third, one hour and 38 minutes. The draft will resume on Sunday at 11:00 AM ET for the final four rounds, ending at approximately 6:00 PM ET. DRAFTING: Representatives of the 32 NFL clubs by telephone communication with their general managers, coaches and scouts. ROUNDS: Seven Rounds – Rounds 1 through 3 on Saturday, April 28; and Rounds 4 through 7 on Sunday, April 29. There will be 255 selections, including 32 compensatory choices that have been awarded to 16 teams which suffered a net loss of certain quality unrestricted free agents last year. The following 32 compensatory choices will supplement the 223 regular choices in the seven rounds – Round 3: San Diego, 33; San Francisco, 34; Indianapolis, 35; Oakland, 36. Round 4: Pittsburgh, 33; Atlanta, 34; Baltimore, 35; San Francisco, 36; Indianapolis, 37; Baltimore, 38. -

2008 Mock Draft

2008 DRAFT GUIDE (www.newerascouting.com)

MOCK DRAFT: Seven full rounds
RANKINGS: Analysis of the top prospects in the country
TEAMS: How each team can improve on draft day
HIGH SCHOOL: Learn about the next crop of hot football players

Top 300 Players (Seniors and declared underclassmen only)

Rank Player Position School
1 Glenn Dorsey DT LSU
2 Jake Long OT Michigan
3 Sedrick Ellis DT USC
4 Matt Ryan QB Boston College
5 Chris Long DE Virginia
6 Antoine Cason CB Arizona
7 Brian Brohm QB Louisville
8 Keith Rivers LB USC
9 Andre Woodson QB Kentucky
10 Dan Connor LB Penn State
11 Martin Rucker TE Missouri
12 Mike Jenkins CB South Florida
13 Limas Sweed WR Texas
14 Quentin Groves DE Auburn
15 Kenny Phillips* S Miami (FL)
16 Shawn Crable LB Michigan
17 Barry Richardson OT Clemson
18 Early Doucet WR LSU
19 Frank Okam DT Texas
20 Tashard Choice RB Georgia Tech
21 Chris Ellis DE Virginia Tech
22 Adarius Bowman WR Oklahoma State
23 Leodis McKelvin CB Troy
24 Jeff Otah OT Pittsburgh
25 Tracy Porter CB Indiana
26 Ali Highsmith LB LSU
27 Matt Forte' RB Tulane
28 Sam Baker OT USC
29 Red Bryant DT Texas A&M
30 Lawrence Jackson DE USC
31 Mike Hart RB Michigan
32 Keenan Burton WR Kentucky
33 Philip Wheeler LB Georgia Tech
34 DeJuan Tribble CB Boston College
35 Quintin Demps S UTEP
36 Vince Hall LB Virginia Tech
37 Colt Brennan QB Hawaii
38 Fred Davis TE USC
39 Shannon Tevaga OG UCLA
40 Dre Moore DT Maryland
41 Dominique Rodgers-Cromartie CB Tennessee State
42 Allen Patrick RB Oklahoma
43 Mario Urrutia* WR Louisville
44 Xavier Adibi LB Virginia Tech
45 Erik Ainge QB Tennessee
46 Peyton Hillis FB Arkansas
47 Marcus Henry WR Kansas
48 Tony Hills, Jr.

All-Gator Bowl Team Based Off Gator Bowl Performance OFFENSE

All-Gator Bowl Team Based Off Gator Bowl Performance OFFENSE Quarterback (Vote for 1) Matt Cavanaugh (Pittsburgh QB 1975 – 1977) Pitt exploded for 566 total yards and a record 30 first downs. Cavanaugh threw for 387 yards and 4 touchdowns to shoulder his team’s offense and lead them to a win over the South Carolina Gamecocks. Chip Ferguson (Florida State QB 1985 – 1988) The Seminoles accounted for 597 yards of total offense in 1985 and 338 came via QB Chip Ferguson’s arm. He added 2 touchdowns as the Seminoles would go on to defeat the Oklahoma State Cowboys 34-23. Kim Hammond (Florida State QB 1965 – 1967) Penn State looked to put the 1967 Gator Bowl away early, and by the looks of the first half they succeeded. Penn State took a comfortable 17-0 lead into halftime. Kim Hammond simply took over in the second half. Hammond completed 37 passes for 362 yards, both records that eclipsed former FSU quarterback Steve Tensi. Hammond scored twice; once on the ground and once through the air. 14 of his completions were to his favorite target, Ron Sellers, who caught for 145 yards. The Seminoles stormed back and nailed a field goal with 15 seconds left to end the game in a 17-17 tie. Graham Harrell (Texas Tech QB 2005 – 2008) Harrell had one of the most successful Gator Bowl performances against the Virginia Cavaliers. He set the bowl record in passing attempts (69), completions (44), and yards gained (407). He threw for 3 touchdowns to lead the Red Raiders to a 38-35 victory over the Cavaliers and was named game MVP. -

2007 NFL Draft's No. 1 Pick to Become FATHEAD®

FOR IMMEDIATE RELEASE CONTACT: Nick Paulenich (734) 386-5921 2007 NFL Draft’s No. 1 Pick to Become FATHEAD® For the First Time, NFL Player Fathead Planned Before Playing Professionally

LIVONIA, Mich., April 9, 2007 – The top pick in this year’s National Football League (NFL) Draft will make Fathead® history. Fathead, the company that produces life-size wall graphics of sports and entertainment stars, will create a wall graphic of the No. 1 overall pick in the 2007 NFL Draft. This will also mark the first time an athlete is named a Fathead before playing a single game as a professional athlete. Current frontrunners for the honor are wide receiver Calvin Johnson, running back Adrian Peterson, quarterbacks Brady Quinn and JaMarcus Russell and offensive tackle Joe Thomas. The 2007 NFL Draft is April 28-29 in New York, N.Y.

There have been only three players to have earned Fathead status as rookies, all from the 2006 NFL Draft Class: New Orleans Saints running back Reggie Bush, Arizona Cardinals quarterback Matt Leinart and Tennessee Titans quarterback Vince Young, the 2006 AP Offensive Rookie of the Year.

“The NFL Draft has become a must-see event on every football fan’s calendar. It only makes sense that we celebrate this event and honor the top selection with a Fathead,” said Brock Weatherup, Chief Executive Officer, Fathead, LLC. “This player will be in great company. We offer 51 past and present NFL players, including more than 40 players who have played in at least one Pro Bowl.” The No.

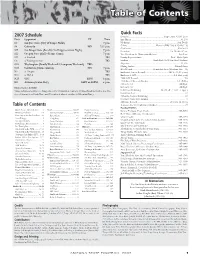

Table of Contents

Table of Contents

Quick Facts
Location: Tempe, Ariz. 85287-2505
Enrollment: 63,278
Nickname: Sun Devils
Colors: Maroon (PMS 208) & Gold (123)
Conference: Pacific-10
President: Dr. Michael Crow
Vice President for University Athletics: Lisa Love
Faculty Representative: Prof. Myles Lynk
Stadium: Frank Kush Field/Sun Devil Stadium
Capacity: 71,706
Playing Surface: Natural Grass
Head Coach: Dennis Erickson (Montana State ‘70)
Erickson’s Career Record: 148-65-1 (18 years)
Erickson at ASU: 0-0 (first year)

2007 Schedule
Date Opponent TV Time
S1 San Jose State (City of Tempe Night) 7 p.m.
S8 Colorado FSN 7:15 p.m.
S15 San Diego State (Faculty/Staff Appreciation Night) 7 p.m.
S22 Oregon State (Hall of Fame Game) 7 p.m.
S29 at Stanford TBD
O6 at Washington State TBD
O13 Washington (Family Weekend/Champions Weekend) TBD
O27 California (Homecoming) FSN 7 p.m.
N3 at Oregon TBD
N10 at UCLA TBD
N22 USC ESPN 6 p.m.

2006

2016 NFL Draft Facts & Figures

Minnesota Vikings 2016 Draft Guide

MINNESOTA VIKINGS PRESEASON
WEEK DAY DATE OPPONENT TIME (CT) TV RADIO
P1 Friday Aug. 12 at Cincinnati Bengals 6:30 p.m. FOX 9 KFAN / KTLK
P2 Thursday Aug. 18 at Seattle Seahawks 9:00 p.m. FOX 9 KFAN / KTLK
P3 Sunday Aug. 28 San Diego Chargers Noon FOX 9 KFAN / KTLK
P4 Thursday Sept. 1 Los Angeles Rams 7:00 p.m. FOX 9 KFAN / KTLK

REGULAR SEASON
WEEK DAY DATE OPPONENT TIME (CT) TV RADIO
1 Sunday Sept. 11 at Tennessee Titans Noon FOX KFAN / KTLK
2 Sunday Sept. 18 Green Bay Packers 7:30 p.m. NBC KFAN / KTLK
3 Sunday Sept. 25 at Carolina Panthers Noon FOX KFAN / KTLK
4 Monday Oct. 3 New York Giants 7:30 p.m. ESPN KFAN / KTLK
5 Sunday Oct. 9 Houston Texans Noon CBS KFAN / KTLK
6 Sunday Oct. 16 BYE WEEK
7 Sunday Oct. 23 at Philadelphia Eagles Noon* FOX KFAN / KTLK
8 Monday Oct. 31 at Chicago Bears 7:30 p.m. ESPN KFAN / KTLK
9 Sunday Nov. 6 Detroit Lions Noon* FOX KFAN / KTLK
10 Sunday Nov. 13 at Washington Redskins Noon* FOX KFAN / KTLK
11 Sunday Nov. 20 Arizona Cardinals Noon* FOX KFAN / KTLK
12 Thursday Nov. 24 at Detroit Lions 11:30 a.m. CBS KFAN / KTLK
13 Thursday Dec. 1 Dallas Cowboys 7:25 p.m. NBC/NFLN KFAN / KTLK
14 Sunday Dec. 11 at Jacksonville Jaguars Noon* FOX KFAN / KTLK
15 Sunday Dec. 18 Indianapolis Colts Noon* CBS KFAN / KTLK
16 Saturday Dec. 24 at Green Bay Packers Noon* FOX KFAN / KTLK
17 Sunday Jan.

Fbl-Guide-16-Nfl.Pdf

WWOLVERINESOLVERINES PPRORO FOOTBALLFOOTBALL HONORSHONORS NFL HISTORY PRO BOWL (1950-2014) ALL-NFL (ALL-PRO) season after which game was played 1933 - Harry Newman 1950 - Al Wistert 1952 - Len Ford 1951 - Len Ford, Elroy Hirsch 1953 - Len Ford 1952 - Len Ford, Elroy Hirsch 1954 - Len Ford, Roger Zatkoff 1953 - Len Ford, Elroy Hirsch 1955 - Len Ford 1954 - Len Ford, Roger Zatkoff 1962 - Ron Kramer 1955 - Roger Zatkoff 1967 - Tom Keating 1956 - Roger Zatkoff 1970 - Rick Volk 1962 - Ron Kramer 1971 - Rick Volk 1963 - John Morrow 1975 - Dan Dierdorf 1964 - Terry Barr 1976 - Dan Dierdorf 1965 - Terry Barr 1977 - Dan Dierdorf 1966 - Tom Keating 1978 - Dan Dierdorf 1967 - Rick Volk, Tom Keating, Tom Mack 1980 - Dan Dierdorf, Mike Kenn 1968 - Tom Mack 1982 - Mike Kenn 1969 - Rick Volk, Tom Mack 1983 - Ali Haji-Sheikh, Mike Kenn 1970 - Tom Mack 1984 - Mike Kenn, Dwight Hicks, Dave Brown 1971 - Rick Volk, Tom Mack 1985 - Dave Brown 1972 - Tom Mack 1987 - Keith Bostic, Anthony Carter 1973 - Tom Mack 1991 - Mike Kenn 1974 - Dan Dierdorf, Tom Mack 1996 - Desmond Howard 1975 - Dan Dierdorf, Tom Mack 1998 - Ty Law 1976 - Dan Dierdorf 1999 - Charles Woodson 1977 - Dan Dierdorf, Tom Mack 2003 - Steve Hutchinson 1978 - Dan Dierdorf, Thom Darden, Tom Mack 2004 - Steve Hutchinson 1980 - Dan Dierdorf, Randy Logan, Mike Kenn 2005 - Steve Hutchinson 1981 - Randy Logan, Dwight Hicks, Mike Kenn 2006 - Steve Hutchinson 1982 - Dwight Hicks, Mike Kenn 2007 - Tom Brady, Steve Hutchinson 1983 - Ali Haji-Sheikh, Dwight Hicks, Mike 2008 - Steve Hutchinson (1st), Charles -

Drafts: 1968 AFL Expansion Draft, Jan.

(Player history, continued) PLAYER HISTORY — DRAFTS 1968 AFL EXPANSION DRAFT JAN. 21 1968 AFL/NFL DRAFT JAN. 30-31 1970 NFL DRAFT JAN. 27-28 PLAYER .................. POS. COLLEGE ........................... AFL TEAM RD. PLAYER ................... POS. COLLEGE ....................... SEL. # RD. PLAYER .................... POS. COLLEGE ....................... SEL. # Dan Archer* ...................... T Oregon ............................. Oakland Raiders 1 Bob Johnson....................... C Tennessee .................................. *2 1 Mike Reid ......................... DT Penn State .................................... 7 Estes Banks* .................. RB Colorado .......................... Oakland Raiders 1 (sent to Miami in trade on 12-26-67) ............................................ *27 2 Ron Carpenter .................. DT North Carolina State ................... 32 Joe Bellino ...................... RB Navy .................................. Boston Patriots 2a Bill Staley ....................... DE/T Utah State ................................. *28 3 Chip Bennett ..................... LB Abilene Christian ......................... 60 Jim Boudreaux ................ DT Louisiana Tech .................. Boston Patriots 2 (sent to Miami in trade on 12-26-67) ............................................ *54 4a Joe Stephens ..................... G Jackson State ............................. 85 Dan Brabham* ................ LB Arkansas .............................Houston Oilers 2b Tom Smiley....................... RB Lamar ....................................... -

PREP PROGRAM AMENITIES Staff and State of the Art Facility Truly Made All the Difference When • React PT and Massage Therapy On-Site Testing Time Came

NEW TCBOOST FACILITY During my time leading up to the NFL Combine, I trained with TCBoost. It was an easy EQUIPMENT/FEATURES decision for me considering their past success with NFL • 60-Yard Olympic-Level Indoor Mondo Sprint Track athletes. Each week I saw significant improvements in my • 60-Yard AstroTurf PureGrass Linear Speed and Acceleration Area explosiveness, mobility, and • 3000 Square Foot Position and Agility Drill Area flexibility. I felt like I was one of the most prepared athletes at • Specialized Inertial Resistance Speed Development Devices the Combine. • Agility Space Equipped with Specialized Cable and Resistance-Band -Dean Lowry Attachments DE, NFL Combine Prep 4th Rd Pick, Green Bay Packers • Sorinex, VersaPulley, VertiMax, and Westside Barbell Equipped Weight Room • Tendo Fitrodyne Weightlifting Analyzer for Power and Velocity Measurements • Portable Video Analysis for Instantaneous Feedback and Coaching SPECIFICATIONS “With many places offering me what sounded like the same • 10,000 Square Foot Facility training approach, I’m very glad I went with the unique experience • 24-Foot Ceilings 20192015 that TCBOOST offers. I don’t think • Easy Access Off I-94 in Both Directions anywhere else could’ve offered me as comprehensive an opportunity NFL COMBINE & DRAFT • 5 Minutes From Lake Cook Road Metra Station (MD-N) to succeed as TCBOOST did. The constant one on one attention Tommy gave me along with his PREP PROGRAM AMENITIES staff and state of the art facility truly made all the difference when • React PT and Massage Therapy -

Player History ALL-TIME ROSTER to Be Eligible for the All-Time Roster, a Player Must Have Been in 18 Breen, Adrian

player history ALL-TIME ROSTER To be eligible for the all-time roster, a player must have been in 18 Breen, Adrian ..................... QB ’87 Morehead State 92 Copeland, John ........... DE/DT ’93-00 Alabama uniform for at least one regular-season or postseason game, or spent a 83 Brennan, Brian .................. WR ’92 Boston College 81 Corbett, Jim ....................... TE ’77-80 Pittsburgh minimum of four games on the roster (including inactive status). A player 60 Brennan, Mike ................... OT ’90-91 Notre Dame 16 Core, Cody ....................... WR ’16-17 Mississippi who qualifies at any point under these guidelines also will be credited for 88 Brewer, Sean ..................... TE ’01-02 San Jose State 76 Cornish, Frank ................... DT ’70 Grambling seasons in which he was on a reserve list but did not play or dress for a 10 Brice, Will ............................ P ’99 Virginia 12 Cosby, Quan .............. WR/PR ’09-10 Texas game. Players who spend time on a reserve list but have never played or 47 Bright, Greg ......................... S ’80-81 Morehead State 88 Coslet, Bruce ..................... TE ’69-76 Pacific dressed for a game do not qualify for the all-time roster. The Practice 65 Brilz, Darrick ........................ C ’94-98 Oregon State 6 Costello, Brad ....................... P ’98-99 Boston University Squad does not qualify. 43 Brim, Mike ......................... CB ’93-95 Virginia Union 46,29 Cothran, Jeff ...................... FB ’94-96 Ohio State 83 Brock, Kevin ...................... TE ’14 Rutgers 70 Cotton, Barney .................... G ’79 Nebraska — A — 50,55 Brooks, Ahmad .................. LB ’06-07 Virginia 52 Cousino, Brad..................... LB ’75 Miami (Ohio) NO. NAME POS. YRS. COLLEGE 82 Brooks, Billy .....................