Storage Node Setup

A storage node (or storage system, depending on your scale) is a very important unit for an HPC cluster.

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Chrooting All Services in Linux

LinuxFocus article number 225, http://linuxfocus.org

Chrooting All Services in Linux, by Mark Nielsen (homepage)

About the author: Mark works as an independent consultant donating time to causes like GNUJobs.com, writing articles, writing free software, and working as a volunteer at eastmont.net.

Abstract: Chrooted system services improve security by limiting the damage that someone who broke into the system can possibly do.

Introduction

What is chroot? Chroot basically redefines the universe for a program. More accurately, it redefines the "ROOT" directory or "/" for a program or login session. Basically, everything outside of the directory you use chroot on doesn't exist as far as a program or shell is concerned.

Why is this useful? If someone breaks into your computer, they won't be able to see all the files on your system. Not being able to see your files limits the commands they can run and also doesn't give them the ability to exploit other files that are insecure. The only drawback is, I believe, that it doesn't stop them from looking at network connections and other stuff. Thus, you want to do a few more things which we won't get into in this article too much:

- Secure your networking ports.
- Have all services run as a service under a non-root account. In addition, have all services chrooted.
- Forward syslogs to another computer.
- Analyze log files.
- Analyze people trying to detect random ports on your computer.
- Limit CPU and memory resources for a service.
- Activate account quotas.

The reason why I consider chroot (with a non-root service) to be a line of defense is that if someone breaks in under a non-root account, and there are no files which they can use to break into root, then the damage they can do is limited to the area they broke into.
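The "redefined root" idea in the excerpt above can be illustrated without root privileges. The following is a minimal sketch, not taken from the article: it only mimics, with string manipulation, how a path named by a chrooted program would map onto the host file system. The helper name `resolve_in_jail` and the directory `/srv/jail` are hypothetical, symlinks are ignored, and relative paths are assumed to start at the jail root; a real service would call chroot(2) (for example via `os.chroot`) as root and then drop privileges.

```python
import posixpath


def resolve_in_jail(jail_root: str, path: str) -> str:
    """Map a path as seen by a chrooted program to the host path it names.

    Illustration only: pure string handling, no symlink resolution, and
    relative paths are assumed to start at the jail's root directory.
    """
    # Inside the jail every path is anchored at "/"; normpath drops any
    # ".." components that would climb above that root.
    inside = posixpath.normpath("/" + path.lstrip("/"))
    # On the host, the jail's "/" is really the jail_root directory.
    return posixpath.normpath(jail_root.rstrip("/") + inside)


if __name__ == "__main__":
    for candidate in ("/etc/passwd", "/../../etc/shadow", "/"):
        print(candidate, "->", resolve_in_jail("/srv/jail", candidate))
```

Under these assumptions, a request for `/../../etc/shadow` still lands inside the jail directory, which is the property the excerpt describes as everything outside the directory not existing for the program.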

The Linux Kernel Module Programming Guide

The Linux Kernel Module Programming Guide Peter Jay Salzman Michael Burian Ori Pomerantz Copyright © 2001 Peter Jay Salzman 2007−05−18 ver 2.6.4 The Linux Kernel Module Programming Guide is a free book; you may reproduce and/or modify it under the terms of the Open Software License, version 1.1. You can obtain a copy of this license at http://opensource.org/licenses/osl.php. This book is distributed in the hope it will be useful, but without any warranty, without even the implied warranty of merchantability or fitness for a particular purpose. The author encourages wide distribution of this book for personal or commercial use, provided the above copyright notice remains intact and the method adheres to the provisions of the Open Software License. In summary, you may copy and distribute this book free of charge or for a profit. No explicit permission is required from the author for reproduction of this book in any medium, physical or electronic. Derivative works and translations of this document must be placed under the Open Software License, and the original copyright notice must remain intact. If you have contributed new material to this book, you must make the material and source code available for your revisions. Please make revisions and updates available directly to the document maintainer, Peter Jay Salzman <[email protected]>. This will allow for the merging of updates and provide consistent revisions to the Linux community. If you publish or distribute this book commercially, donations, royalties, and/or printed copies are greatly appreciated by the author and the Linux Documentation Project (LDP). -

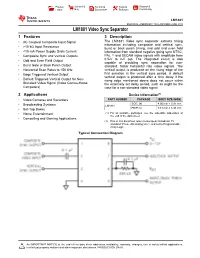

LM1881 Video Sync Separator Datasheet

LM1881 Video Sync Separator

SNLS384G – FEBRUARY 1995 – REVISED JUNE 2015

1 Features

- AC Coupled Composite Input Signal
- >10-kΩ Input Resistance
- <10-mA Power Supply Drain Current
- Composite Sync and Vertical Outputs
- Odd and Even Field Output
- Burst Gate or Back Porch Output
- Horizontal Scan Rates to 150 kHz
- Edge Triggered Vertical Output
- Default Triggered Vertical Output for Non-Standard Video Signal (Video Games-Home Computers)

2 Applications

- Video Cameras and Recorders
- Broadcasting Systems
- Set-Top Boxes
- Home Entertainment
- Computing and Gaming Applications

3 Description

The LM1881 Video sync separator extracts timing information including composite and vertical sync, burst or back porch timing, and odd and even field information from standard negative going sync NTSC, PAL (1) and SECAM video signals with amplitude from 0.5-V to 2-V p-p. The integrated circuit is also capable of providing sync separation for non-standard, faster horizontal rate video signals. The vertical output is produced on the rising edge of the first serration in the vertical sync period. A default vertical output is produced after a time delay if the rising edge mentioned above does not occur within the externally set delay period, such as might be the case for a non-standard video signal.

(1) PAL in this datasheet refers to European broadcast TV standard "Phase Alternating Line", and not to Programmable Array Logic.

Device Information (1)

| Part number | Package | Body size (nom) |
| --- | --- | --- |
| LM1881 | SOIC (8) | 4.90 mm × 3.91 mm |
| LM1881 | PDIP (8) | 9.81 mm × 6.35 mm |

(1) For all available packages, see the orderable addendum at the end of the data sheet.

26 Disk Space Management

26 Disk Space Management

26.1 INTRODUCTION

It has been said that the only thing all UNIX systems have in common is the login message asking users to clean up their files and use less disk space. No matter how much space you have, it isn't enough; as soon as a disk is added, files magically appear to fill it up.

Both users and the system itself are potential sources of disk bloat. Chapter 12, Syslog and Log Files, discusses various sources of logging information and the techniques used to manage them. This chapter focuses on space problems caused by users and the technical and psychological weapons you can deploy against them.

Even if you have the option of adding more disk storage to your system, it's a good idea to follow this chapter's suggestions. Disks are cheap, but administrative effort is not. Disks have to be dumped, maintained, cross-mounted, and monitored; the fewer you need, the better. (Margin note: If you do decide to add a disk, refer to Chapter 9 for help.)

26.2 DEALING WITH DISK HOGS

In the absence of external pressure, there is essentially no reason for a user to ever delete anything. It takes time and effort to clean up unwanted files, and there's always the risk that something thrown away might be wanted again in the future. Even when users have good intentions, it often takes a nudge from the system administrator to goad them into action.

On a PC, disk space eventually runs out and the machine's primary user must clean up to get the system working again.

How UNIX Organizes and Accesses Files on Disk Why File Systems

UNIX File Systems: How UNIX Organizes and Accesses Files on Disk

Why File Systems

- File system is a service which supports an abstract representation of the secondary storage to the OS
- A file system organizes data logically for random access by the OS.
- A virtual file system provides the interface between the data representation by the kernel to the user process and the data presentation to the kernel in memory. The file and directory system cache.
- Because of the performance disparity between disk and CPU/memory, file system performance is the paramount issue for any OS

Main memory vs. Secondary storage

| Main memory | Secondary storage |
| --- | --- |
| Small (MB/GB) | Large (GB/TB) |
| Expensive | Cheap |
| Fast (10^-6/10^-7 sec) | Slow (10^-2/10^-3 sec) |
| Volatile | Persistent |
| Directly accessible by CPU. Interface: (virtual) memory address | Cannot be directly accessed by CPU. Data should be first brought into the main memory |

Secondary storage (disk)

- A number of disks directly attached to the computer
- Network attached disks accessible through a fast network: Storage Area Network (SAN)
- Simple disks (IDE, SATA) have a described disk geometry. Sector size is the minimum read/write unit of data (usually 512 bytes)
  - Access: (#surface, #track, #sector)
- Smart disks (SCSI, SAN, NAS) hide the internal disk layout using a controller type function
  - Access: (#sector)
- Moving arm assembly (Seek) is expensive
  - Sequential access is 100 times faster than random access

Internal disk structure

- Disk structure

User Process Accessing Data

- Given the file name, get to the file's FCB using the file system catalog (Open, Close, Set_Attribute)
- The catalog maps a file name to the FCB
  - Checks permissions
- file_handle=open(file_name):
  - search the catalog and bring FCB into the memory
  - UNIX: in-memory FCB: in-core i-node
- Use the FCB to get to the desired offset within the file data: (CREATE, DELETE, SEEK, TRUNCATE)
- close(file_handle): release FCB from memory

Catalog Organization (Directories)

- In UNIX, special files (not special device files) called directories contain information about other files.
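The open, seek, and close sequence described in the excerpt above can be sketched with Python's thin wrappers over the corresponding UNIX system calls. This is an illustrative example rather than part of the slides: the helper name `read_at` and the temporary file are assumptions, and the comments about what the kernel does simply restate the slide text.

```python
import os
import tempfile


def read_at(path: str, offset: int, length: int) -> bytes:
    """Read `length` bytes starting at byte `offset` of the file at `path`."""
    # open(): the kernel looks the name up in the directory (catalog),
    # checks permissions, and brings the file's control block into memory.
    fd = os.open(path, os.O_RDONLY)
    try:
        # seek: position the file offset that the next read will use.
        os.lseek(fd, offset, os.SEEK_SET)
        return os.read(fd, length)
    finally:
        # close(): release the descriptor so the in-memory state can be freed.
        os.close(fd)


if __name__ == "__main__":
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(b"hello, file system")
        name = tmp.name
    try:
        print(read_at(name, 7, 4))
    finally:
        os.unlink(name)
```

The descriptor returned by `os.open` plays the role of the `file_handle` in the slides; using `try`/`finally` ensures it is released even if the read fails.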

Linux Kernel and Driver Development Training Slides

Linux Kernel and Driver Development Training

© Copyright 2004-2021, Bootlin. Creative Commons BY-SA 3.0 license. Latest update: October 9, 2021. Document updates and sources: https://bootlin.com/doc/training/linux-kernel Corrections, suggestions, contributions and translations are welcome! Send them to [email protected]

embedded Linux and kernel engineering - Kernel, drivers and embedded Linux - Development, consulting, training and support - https://bootlin.com

Rights to copy

© Copyright 2004-2021, Bootlin. License: Creative Commons Attribution - Share Alike 3.0 https://creativecommons.org/licenses/by-sa/3.0/legalcode

You are free:

- to copy, distribute, display, and perform the work
- to make derivative works
- to make commercial use of the work

Under the following conditions:

- Attribution. You must give the original author credit.
- Share Alike. If you alter, transform, or build upon this work, you may distribute the resulting work only under a license identical to this one.
- For any reuse or distribution, you must make clear to others the license terms of this work.
- Any of these conditions can be waived if you get permission from the copyright holder.

Your fair use and other rights are in no way affected by the above. Document sources: https://github.com/bootlin/training-materials/

Hyperlinks in the document

There are many hyperlinks in the document:

- Regular hyperlinks: https://kernel.org/
- Kernel documentation links: dev-tools/kasan
- Links to kernel source files and directories: drivers/input/ include/linux/fb.h
- Links to the declarations, definitions and instances of kernel symbols (functions, types, data, structures): platform_get_irq() GFP_KERNEL struct file_operations

Company at a glance

- Engineering company created in 2004, named "Free Electrons" until Feb.

Installing the Data Broker on a Linux Host : Cloud Sync

Installing the data broker on a Linux host

Cloud Sync, Ben Cammett, April 11, 2021

This PDF was generated from https://docs.netapp.com/us-en/cloudsync/task_installing_linux.html on September 23, 2021. Always check docs.netapp.com for the latest.

Table of Contents

- Installing the data broker on a Linux host
- Linux host requirements
- Networking requirements
- Enabling access to AWS
- Enabling access to Google Cloud
- Enabling access to Microsoft Azure
- Installing the data broker

Installing the data broker on a Linux host

When you create a new data broker, choose the On-Prem Data Broker option to install the data broker software on an on-premises Linux host, or on an existing Linux host in the cloud. Cloud Sync guides you through the installation process, but the requirements and steps are repeated on this page to help you prepare for installation.

Linux host requirements

- Operating system:
  - CentOS 7.0, 7.7, and 8.0
  - Red Hat Enterprise Linux 7.7 and 8.0
  - Ubuntu Server 20.04 LTS
  - SUSE Linux Enterprise Server 15 SP1

  The command yum update all must be run on the host before you install the data broker. A Red Hat Enterprise Linux system must be registered with Red Hat Subscription Management. If it is not registered, the system cannot access repositories to update required 3rd party software during installation.
- RAM: 16 GB
- CPU: 4 cores
- Free disk space: 10 GB
- SELinux: We recommend that you disable SELinux on the host. SELinux enforces a policy that blocks data broker software updates and can block the data broker from contacting endpoints required for normal operation.

Administrivia Confining Code with Legacy Oses Using Chroot Escaping Chroot System Call Interposition Limitations of Syscall Inte

Administrivia

- Guest lecture Thursday
  - Mark Lentczner (Google) on the Belay project
  - Please attend lecture if at all possible
- Last project due Thursday
  - No extensions unless all non-SCPD group members at lecture
  - If staff grants you extension, means only if you attend lecture
  - We will have a more stringent enforcement mechanism
- Final Exam
  - Wednesday March 16, 12:15-3:15pm
  - Open book, covers all 19 lectures (possibly including topics already on the midterm)
- Televised final review session Friday
  - Bring questions on lecture material

Confining code with legacy OSes

- Often want to confine code on legacy OSes
- Analogy: Firewalls (figure: attackers and a "Hopelessly Insecure Server")
  - Your machine runs hopelessly insecure software
  - Can't fix it: no source or too complicated
  - Can reason about network traffic
- Similarly block untrusted code within a machine
  - By limiting what it can interact with

Using chroot

- chroot (char *dir) "changes root directory"
  - Kernel stores root directory of each process
  - File name "/" now refers to dir
  - Accessing ".." in dir now returns dir
- Need root privs to call chroot
  - But subsequently can drop privileges
- Ideally "Chrooted process" wouldn't affect parts of the system outside of

Escaping chroot

- Re-chroot to a lower directory, then chroot ..
  - Each process has one root directory, so chrooting to a new directory can put you above your new root
- Create devices that let you access raw disk
- Send signals to or ptrace non-chrooted processes
- Create setuid program for non-chrooted proc. to run

Troubleshoot

Troubleshoot

XCP, NetApp, September 23, 2021

This PDF was generated from https://docs.netapp.com/us-en/xcp/xcp-troubleshoot-xcp-nfs.html on September 23, 2021. Always check docs.netapp.com for the latest.

Table of Contents

- Troubleshoot
- Troubleshoot XCP NFS errors
- Troubleshoot XCP SMB Errors
- Troubleshoot XCP File Analytics errors

Troubleshoot XCP NFS errors

Review the solutions to troubleshoot your issue.

| XCP issue | Solution |
| --- | --- |
| xcp: ERROR: must run as root | Execute XCP commands as root user. |
| xcp: ERROR: License file /opt/NetApp/xFiles/xcp/license not found. | Download the license from the XCP site and copy it to /opt/NetApp/xFiles/xcp/ |
| xcp: ERROR: This license has expired | Renew or obtain the new XCP license from the XCP site. |
| xcp: ERROR: License unreadable | License file might be corrupted. Obtain the new XCP license from the XCP site. |
| xcp: ERROR: XCP not activated, run 'activate' first | Run the xcp activate command. |
| This copy is not licensed | Obtain the appropriate XCP license file. Copy the XCP license to the /opt/NetApp/xFiles/xcp/ directory on the XCP server. Run the xcp activate command to activate the license. |
| xcp: ERROR: Failed to activate license: Server unreachable | You are trying to activate the online license and your host system is not connected to the internet. Make sure your system is connected to the internet. |
| xcp: ERROR: Failed to activate license: Server xcp.netapp.com unreachable. xcp: HINT: Configure DNS on this host or return to the license page to request a private license. Expected error: Failed to activate license: Server xcp.netapp.com unreachable | Make sure xcp.netapp.com is reachable from your host, or request the offline license. |
| xcp: ERROR: Catalog inaccessible: Cannot mount nfs_server:/export[:subdirectory] | Open the editor on the XCP Linux client host and update the configuration file with the proper catalog location. |

Setting up Your Own Mozilla Sync Server

2021/07/30 17:34 (UTC)

Setting Up Your Own Mozilla Sync Server

Mozilla Sync is a feature which has been supported in Firefox since version 4. It allows you to store your bookmarks, browsing history, browser preferences, and saved passwords on a remote server. It even allows you to work on multiple computers and have the same Firefox configuration on all these computers.

That's a pretty cool feature. You can for instance set up sync on your Android phone's Firefox browser and quickly configure it by entering your account-specific sync key. Moments later you will have your synced desktop browser configuration on your phone.

Mozilla offers public sync servers. If you don't trust your passwords and browsing history in the hands of Mozilla (though your data is supposedly being encrypted with your unique sync key before it gets uploaded to Mozilla), then you can use this HOWTO for setting up your own Sync Server at home (or at work). Luckily, Mozilla is open as always and makes the Open Source Sync Server code available to everyone who wants to play with it. And setting it up is not difficult at all.

Note: Mozilla has re-designed and re-written the original Sync Server (it was called "Mozilla Weave Server" at the time, and was almost impossible to set up). It is now written in Python, and the steps to create your own Sync Server are described on this page. That page is what you should follow. The rest of this HOWTO tries to bring more detail to the process of configuring your Slackware box to become a real Sync Server.

Unix Programmer's Manual

There is no warranty of merchantability nor any warranty of fitness for a particular purpose nor any other warranty, either expressed or implied, as to the accuracy of the enclosed materials or as to their suitability for any particular purpose. Accordingly, Bell Telephone Laboratories assumes no responsibility for their use by the recipient. Further, Bell Laboratories assumes no obligation to furnish any assistance of any kind whatsoever, or to furnish any additional information or documentation.

UNIX PROGRAMMER'S MANUAL

Fifth Edition

K. Thompson, D. M. Ritchie

June, 1974

Copyright © 1972, 1973, 1974 Bell Telephone Laboratories, Incorporated

This manual was set by a Graphic Systems phototypesetter driven by the troff formatting program operating under the UNIX system. The text of the manual was prepared using the ed text editor.

PREFACE to the Fifth Edition

The number of UNIX installations is now above 50, and many more are expected. None of these has exactly the same complement of hardware or software. Therefore, at any particular installation, it is quite possible that this manual will give inappropriate information.

The authors are grateful to L. L. Cherry, L. A. Dimino, R. C. Haight, S. C. Johnson, B. W. Kernighan, M. E. Lesk, and E. N. Pinson for their contributions to the system software, and to L. E. McMahon for software and for his contributions to this manual.

Puremessage for Unix Help Contents Getting Started

PureMessage for Unix help

Contents

Getting Started

- Welcome to PureMessage for Unix
- Deployment Strategies
- Installing PureMessage
- Upgrading PureMessage
- Quick Reference Guide
- Contacting Sophos

Managing PureMessage

- Dashboard Tab
- Policy Tab
- Quarantine Tab