Developing Cooperative Agents for NBA Jam

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Video Games and Enhancing Prosocial Behaviour

Chapter GAMING FOR GOOD: VIDEO GAMES AND ENHANCING PROSOCIAL BEHAVIOUR Holli-Anne Passmore and Mark D. Holder, Department of Psychology, IKBSAS, University of British Columbia, Kelowna, BC, Canada ABSTRACT The number of publications pertaining to video gaming and its effects on subsequent behavior has more than tripled from the past to the current decade. This surge of research parallels the ubiquitousness of video game play in everyday life, and the increasing concern of parents, educators, and the public regarding possible deleterious effects of gaming. Numerous studies have now investigated this concern. Recently, research has also begun to explore the possible benefits of gaming, in particular, increasing prosocial behaviour. This chapter presents a comprehensive review of the research literature examining the effects of video game playing on prosocial behaviour. Within this literature, a variety of theoretical perspectives and research methodologies have been adopted. For example, many researchers invoke the General Learning Model to explain the mechanisms by which video games may influence behaviour. Other researchers refer to frameworks involving moral education, character education, and care-ethics in their examination of the relationship between gaming and prosocial development. Diverse parameters have been explored in these studies. For example, different studies have assessed both the immediate and delayed impacts of gaming, and investigated the effects of different durations of video game playing. Additionally, based on each study’s operational definitions of “aggressive behaviour” and “prosocial behaviour”, a variety of behaviours have been assessed and different measures have been employed.
For instance, studies have used self-report measures of empathy, the character strengths of generosity and kindness, and the level of civic engagement, as well as used word-completion and story completion tasks and tit-for-tat social situation games such as “Prisoners' Dilemma”.

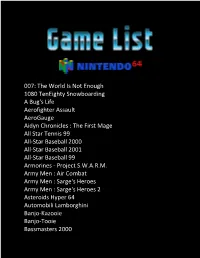

Video Game Archive: Nintendo 64

Video Game Archive: Nintendo 64. An Interactive Qualifying Project submitted to the Faculty of WORCESTER POLYTECHNIC INSTITUTE in partial fulfilment of the requirements for the degree of Bachelor of Science by James R. McAleese, Janelle Knight, Edward Matava, and Matthew Hurlbut-Coke. Date: 22nd March 2021. Report Submitted to: Professor Dean O’Donnell, Worcester Polytechnic Institute. This report represents work of one or more WPI undergraduate students submitted to the faculty as evidence of a degree requirement. WPI routinely publishes these reports on its web site without editorial or peer review. Abstract: This project was an attempt to expand and document the Gordon Library’s Video Game Archive, more specifically the Nintendo 64 (N64) collection. We made the N64 and related accessories and games more accessible to the WPI community and created an exhibition on The History of 3D Games and Twitch Plays Paper Mario, featuring the N64. Table of Contents: Abstract; Table of Contents; Table of Figures; Acknowledgements; Executive Summary; 1 - Introduction; 2 - Background; 2.1 - A Brief History of Nintendo Co., Ltd. Prior to the Release of the N64 in 1996; 2.2 - The Console and its Competitors; Development of the Console

007: The World Is Not Enough 1080 TenEighty Snowboarding A Bug's

007: The World Is Not Enough 1080 TenEighty Snowboarding A Bug's Life Aerofighter Assault AeroGauge Aidyn Chronicles : The First Mage All Star Tennis 99 All-Star Baseball 2000 All-Star Baseball 2001 All-Star Baseball 99 Armorines - Project S.W.A.R.M. Army Men : Air Combat Army Men : Sarge's Heroes Army Men : Sarge's Heroes 2 Asteroids Hyper 64 Automobili Lamborghini Banjo-Kazooie Banjo-Tooie Bassmasters 2000 Batman Beyond : Return of the Joker BattleTanx BattleTanx - Global Assault Battlezone : Rise of the Black Dogs Beetle Adventure Racing! Big Mountain 2000 Bio F.R.E.A.K.S. Blast Corps Blues Brothers 2000 Body Harvest Bomberman 64 Bomberman 64 : The Second Attack! Bomberman Hero Bottom of the 9th Brunswick Circuit Pro Bowling Buck Bumble Bust-A-Move '99 Bust-A-Move 2: Arcade Edition California Speed Carmageddon 64 Castlevania Castlevania : Legacy of Darkness Chameleon Twist Chameleon Twist 2 Charlie Blast's Territory Chopper Attack Clay Fighter : Sculptor's Cut Clay Fighter 63 1-3 Command & Conquer Conker's Bad Fur Day Cruis'n Exotica Cruis'n USA Cruis'n World CyberTiger Daikatana Dark Rift Deadly Arts Destruction Derby 64 Diddy Kong Racing Donald Duck : Goin' Qu@ckers*! Donkey Kong 64 Doom 64 Dr. Mario 64 Dual Heroes Duck Dodgers Starring Daffy Duck Duke Nukem : Zero Hour Duke Nukem 64 Earthworm Jim 3D ECW Hardcore Revolution Elmo's Letter Adventure Elmo's Number Journey Excitebike 64 Extreme-G Extreme-G 2 F-1 World Grand Prix F-Zero X F1 Pole Position 64 FIFA 99 FIFA Soccer 64 FIFA: Road to World Cup 98 Fighter Destiny 2 Fighters -

Spontaneous Emotional Speech Recordings Through a Cooperative Online Video Game

Spontaneous emotional speech recordings through a cooperative online video game. Daniel Palacios-Alonso, Victoria Rodellar-Biarge, Victor Nieto-Lluis, and Pedro Gómez-Vilda, Centro de Tecnología Biomédica and Escuela Técnica Superior de Ingenieros Informáticos, Universidad Politécnica de Madrid, Campus de Montegancedo - Pozuelo de Alarcón - 28223 Madrid - SPAIN. Abstract. Most emotional speech databases are recorded by actors, and some spontaneous databases are not free of charge. To progress in emotional recognition, it is necessary to carry out a big data acquisition task. The current work gives a methodology to capture spontaneous emotions through a cooperative video game. Our methodology is based on three new concepts: novelty, reproducibility and ubiquity. Moreover, we have developed an experiment to capture spontaneous speech and video recordings in a controlled environment in order to obtain high quality samples. Keywords: Spontaneous emotions; Affective Computing; Cooperative Platform; Databases; MOBA Games. 1 Introduction. Capturing emotions is an arduous task, above all when we speak about capturing and identifying spontaneous emotions in voice. Major progress has been made in capturing and identifying gestural or body emotions [1]. However, this progress is not matched in the speech emotion field. Emotion identification is a very complex task because it depends on, among other factors, culture, language, gender and the age of the subject. The consulted literature mentions a few databases and data collections of emotional speech in different languages, but in many cases this information is not open to the community and not available for research.

8 Guilt in DayZ Marcus Carter and Fraser Allison Guilt in DayZ

8 Guilt in DayZ. Marcus Carter and Fraser Allison. © Massachusetts Institute of Technology, All Rights Reserved. “I get a sick feeling in my stomach when I kill someone.” (Player #1431’s response to the question “Do you ever feel bad killing another player in DayZ?”) Death in most games is simply a metaphor for failure (Bartle 2010). Killing another player in a first-person shooter (FPS) game such as Call of Duty (Infinity Ward 2003) is generally considered to be as transgressive as taking an opponent’s pawn in chess. In an early exploratory study of players’ experiences and processing of violence in digital videogames, Christoph Klimmt and his colleagues concluded that “moral management does not apply to multiplayer combat games” (2006, 325). In other words, player killing is not a violation of moral codes or a source of moral concern for players. Subsequent studies of player experiences of guilt and moral concern in violent videogames (Hartmann, Toz, and Brandon 2010; Hartmann and Vorderer 2010; Gollwitzer and Melzer 2012) have consequently focused on the moral experiences associated with single-player games and the engagement with transgressive fictional, virtual narrative content. This is not the case, however, for DayZ (Bohemia Interactive 2017), a zombie-themed FPS survival game in which players experience levels of moral concern and anguish that might be considered extreme for a multiplayer digital game.

Newagearcade.com 5,000 in One Arcade Game List!

Newagearcade.com 5,000 In One arcade game list! 1. AAE|Armor Attack 2. AAE|Asteroids Deluxe 3. AAE|Asteroids 4. AAE|Barrier 5. AAE|Boxing Bugs 6. AAE|Black Widow 7. AAE|Battle Zone 8. AAE|Demon 9. AAE|Eliminator 10. AAE|Gravitar 11. AAE|Lunar Lander 12. AAE|Lunar Battle 13. AAE|Meteorites 14. AAE|Major Havoc 15. AAE|Omega Race 16. AAE|Quantum 17. AAE|Red Baron 18. AAE|Ripoff 19. AAE|Solar Quest 20. AAE|Space Duel 21. AAE|Space Wars 22. AAE|Space Fury 23. AAE|Speed Freak 24. AAE|Star Castle 25. AAE|Star Hawk 26. AAE|Star Trek 27. AAE|Star Wars 28. AAE|Sundance 29. AAE|Tac/Scan 30. AAE|Tailgunner 31. AAE|Tempest 32. AAE|Warrior 33. AAE|Vector Breakout 34. AAE|Vortex 35. AAE|War of the Worlds 36. AAE|Zektor 37. Classic Arcades|'88 Games 38. Classic Arcades|1 on 1 Government (Japan) 39. Classic Arcades|10-Yard Fight (World, set 1) 40. Classic Arcades|1000 Miglia: Great 1000 Miles Rally (94/07/18) 41. Classic Arcades|18 Holes Pro Golf (set 1) 42. Classic Arcades|1941: Counter Attack (World 900227) 43. Classic Arcades|1942 (Revision B) 44. Classic Arcades|1943 Kai: Midway Kaisen (Japan) 45. Classic Arcades|1943: The Battle of Midway (Euro) 46. Classic Arcades|1944: The Loop Master (USA 000620) 47. Classic Arcades|1945k III 48. Classic Arcades|19XX: The War Against Destiny (USA 951207) 49. Classic Arcades|2 On 2 Open Ice Challenge (rev 1.21) 50. Classic Arcades|2020 Super Baseball (set 1) 51. -

Commercialism@School.com: The Third Annual Report on Trends in

DOCUMENT RESUME ED 446 390 EA 030 684 AUTHOR Molnar, Alex; Morales, Jennifer TITLE Commercialism@School.com: The Third Annual Report on Trends in Schoolhouse Commercialism. INSTITUTION Wisconsin Univ., Milwaukee. Center for the Analysis of Commercialism in Education. REPORT NO CACE-00-02 PUB DATE 2000-09-00 NOTE 47p. AVAILABLE FROM Full text: http://www.schoolcommercialism.org. PUB TYPE Reports Descriptive (141) EDRS PRICE MF01/PC02 Plus Postage. DESCRIPTORS Business; *Charter Schools; Elementary Secondary Education; *Internet; *Merchandising; *Privatization; *Public Schools; *Vendors ABSTRACT This report details the seven categories tracked by the Center for the Analysis of Commercialism in Education (CACE) between 1990 and 1999-2000: sponsorship of programs and activities, exclusive agreements, incentive programs, appropriation of space, sponsored educational materials, electronic marketing, and privatization. The 1999-2000 report added an 8th category: fundraising. The total number of press citations found in CACE's database searches increased 23 percent between 1998-99 and 1999-2000. Commercial activities in schools appear to be continuing the upward trend observed throughout the 1990s. Furthermore, the growing interconnection of schoolhouse commercializing suggests that marketers are attempting to create a seamless advertising environment that surrounds children in and out of school. The drive to put technology in schools appears to be opening new channels for commercial activity in schools. In spite of the apparent increase in commercial activity, signs of disenchantment are beginning to emerge. Increasing attempts at the local, state, and federal levels to regulate or ban certain types of commercial activities are another indication of a developing public awareness of potential negative consequences of such activities.

The Effects of Cooperative Gameplay on Aggression and Prosocial Behavior

Intuition: The BYU Undergraduate Journal of Psychology, Volume 14, Issue 1, Article 5, 2019. The Effects of Cooperative Gameplay on Aggression and Prosocial Behavior. Ariqua M. Furse, Brigham Young University. Recommended Citation: Furse, Ariqua M. (2019) "The Effects of Cooperative Gameplay on Aggression and Prosocial Behavior," Intuition: The BYU Undergraduate Journal of Psychology: Vol. 14: Iss. 1, Article 5. Available at: https://scholarsarchive.byu.edu/intuition/vol14/iss1/5 Abstract: Over a quarter of the world’s population spends an average of 5.96 hours a week gaming. The top ten most played games are either exclusively multiplayer or have a multiplayer option, with 70% containing violent content. Despite the prevalence of multiplayer gaming, most video game research has been focused on single player modes. Video game aversion is based on this single player research. There is a lesser awareness of the effects of cooperative video game play. The majority of the literature on the effects of cooperative game play on aggression and prosocial behavior reviewed shows that, when played cooperatively, video games, regardless of content, have little or no effect on aggression or prosocial behavior.

It's in the Game: The Effect of Competition and Cooperation on Anti-Social Behavior in Online Video Games David P. McLean Thes

It’s in the Game: The effect of Competition and Cooperation on Anti-Social Behavior in Online Video Games David P. McLean Thesis submitted to the faculty of the Virginia Polytechnic Institute and State University in partial fulfillment of the requirements for the degree of Master of Arts In Communication James D. Ivory, Chair Beth M. Waggenspack Marcus Cayce Myers 6/15/2016 Blacksburg, VA Keywords: Video Games, Hostility, Online interaction Copyright © 2016 Dave McLean It’s in the Game: The effect of Competition and Helpfulness on Anti-Social Behavior in Online Video Games David P. McLean ABSTRACT Video games have been criticized for the amount of violence present in them and how this violence could affect aggression and anti-social behavior. Much of the literature on video games effects has focused primarily on the content of video games, but recent studies show that competition in video games could be a major influence on aggression. While competing against other players has been shown to increase aggression, there is less research on whether the mere presence of a competitive environment can influence aggression. The existing research has also primarily been performed using surveys and lab experiments. While these two approaches are very useful, they lack the ecological validity of methods like field experiments. This study examined how competitiveness, teamwork, and co-operation affect anti-social behavior in video games. A 2 (competition: high vs low) x 2 (cooperation: vs no cooperation) x 2 (team: teammates vs opponents) online field experiment on hostile speech was performed. In this study, it was found that players experience more hostile language from their teammates than they do opponents. -

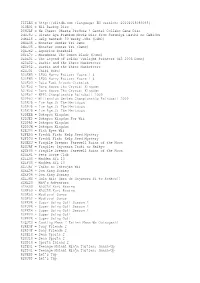

TITLES = (Language: EN Version: 20101018083045

TITLES = http://wiitdb.com (language: EN version: 20101018083045)
010E01 = Wii Backup Disc
DCHJAF = We Cheer: Ohasta Produce ! Gentei Collabo Game Disc
DHHJ8J = Hirano Aya Premium Movie Disc from Suzumiya Haruhi no Gekidou
DHKE18 = Help Wanted: 50 Wacky Jobs (DEMO)
DMHE08 = Monster Hunter Tri Demo
DMHJ08 = Monster Hunter Tri (Demo)
DQAJK2 = Aquarius Baseball
DSFE7U = Muramasa: The Demon Blade (Demo)
DZDE01 = The Legend of Zelda: Twilight Princess (E3 2006 Demo)
R23E52 = Barbie and the Three Musketeers
R23P52 = Barbie and the Three Musketeers
R24J01 = ChibiRobo!
R25EWR = LEGO Harry Potter: Years 1-4
R25PWR = LEGO Harry Potter: Years 1-4
R26E5G = Data East Arcade Classics
R27E54 = Dora Saves the Crystal Kingdom
R27X54 = Dora Saves The Crystal Kingdom
R29E52 = NPPL Championship Paintball 2009
R29P52 = Millennium Series Championship Paintball 2009
R2AE7D = Ice Age 2: The Meltdown
R2AP7D = Ice Age 2: The Meltdown
R2AX7D = Ice Age 2: The Meltdown
R2DEEB = Dokapon Kingdom
R2DJEP = Dokapon Kingdom For Wii
R2DPAP = Dokapon Kingdom
R2DPJW = Dokapon Kingdom
R2EJ99 = Fish Eyes Wii
R2FE5G = Freddi Fish: Kelp Seed Mystery
R2FP70 = Freddi Fish: Kelp Seed Mystery
R2GEXJ = Fragile Dreams: Farewell Ruins of the Moon
R2GJAF = Fragile: Sayonara Tsuki no Haikyo
R2GP99 = Fragile Dreams: Farewell Ruins of the Moon
R2HE41 = Petz Horse Club
R2IE69 = Madden NFL 10
R2IP69 = Madden NFL 10
R2JJAF = Taiko no Tatsujin Wii
R2KE54 = Don King Boxing
R2KP54 = Don King Boxing
R2LJMS = Hula Wii: Hura de Hajimeru Bi to Kenkou!!
R2ME20 = M&M's Adventure
R2NE69 = NASCAR Kart Racing

The Relation Between Gaming and the Development of Emotion Regulation Skills Adam Lobel the Relation Between Gaming and the Deve

GAME ON: The Relation Between Gaming and the Development of Emotion Regulation Skills. Adam Lobel. INVITATION. Defense: 14:30 sharp @ Aula, Comeniuslaan 2, Radboud University, 6525 HP Nijmegen. Party: American BBQ + Soul, Funk, & Rock ‘n Roll, 18:30 @ Thieme Loods, Leemptstraat 34, 6512 EN Nijmegen. Contact paranymphs: Hanneke Scholten (06-2372-1158) and Martin Perescis (06-4711-2810). Game on: The relation between gaming & emotion regulation development. Adam Lobel. The studies described in this thesis were funded by the Behavioural Science Institute, Radboud University Nijmegen. Publication of this thesis was financially supported by the Radboud University Nijmegen. This support is gratefully acknowledged. Crackman by Typodermic Fonts, Inc. Pokemon GB by Jackster Productions. Roboto Condensed Font by Christian Robertson. Cover & bookmark design by David Auden Nash. Nevermind screenshot (Chapter VII title page) courtesy of Erin Reynolds, Flying Mollusk. Printed by Ridderprint with special thanks to Robert Kanters. ISBN: 978-94-6299-514-7 © Adam Lobel, December 2016. For my Mishpacha & For my Savta Rita, who would have been so proud to read the books of her offspring. Game on: The relation between gaming and the development of emotion regulation skills. Doctoral thesis to obtain the degree of doctor from Radboud University Nijmegen, on the authority of the Rector Magnificus prof. dr. J.H.J.M. van Krieken, according to the decision of the Council of Deans, to be defended in public on Wednesday, 18 January 2017, at 14:30 precisely, by Adam Lobel, born on 13 July 1987 in New York, New York, United States. Promotors: prof.

Cooperative Video Game Play and Generosity: Oxytocin Production as

COOPERATIVE VIDEO GAME PLAY AND GENEROSITY: OXYTOCIN PRODUCTION AS A CAUSAL MECHANISM REGARDING PROSOCIAL BEHAVIOR RESULTING FROM COOPERATIVE VIDEO GAME PLAY By Matthew Nelson Grizzard A DISSERTATION Submitted to Michigan State University in partial fulfillment of the requirements for the degree of Communication – Doctor of Philosophy 2013 ABSTRACT Recent research has begun to examine whether contextual features of video game play, such as the cooperative versus competitive nature of interaction between game play participants, can mitigate aggressive responses related to violent video game play, or even lead to prosocial responses such as generosity. This research provided the foundation for the current dissertation that sought to (a) examine the effect of cooperative play on generosity and (b) associate cooperative game play with increased production of oxytocin, a neuromodulating hormone related to bonding, trust, and social interaction. The potential negative effects of video game play have been a central focus of psychological and communicological research, with the majority of studies using competitive, aggressive games as their stimulus materials. By utilizing a non-aggressive game, examining the role of cooperative versus solo play in that game, and assessing changes in oxytocin production and associating those changes with post-game play generosity, the current study provides an opportunity for determining potential prosocial effects of non-aggressive video game play and linking those effects with an endocrinological mechanism. A random assignment (solo versus cooperative play) experiment with an offset control condition was conducted using a guitar-music video game as stimuli.