On Performance Optimization and System Design of Flash Memory Based Solid State Drives in the Storage Hierarchy

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Advanced NAND Flash Memory Single-Chip Storage Solution

SOLUTION SHEET: ADVANCED SOLID STATE MEMORY CONTROLLER Advanced NAND Flash Memory Single-Chip Storage Solution. The growing complexity of flash memory management algorithms has made controller designs complex and has impacted the performance and the diversity of NAND devices that a single controller can support. As the complexity of the controller design increases, there comes a point where hardware acceleration is necessary. We see this trend for partial FTL (Flash Translation Layer) layers, addressing and wear leveling, and command queuing. At the same time, algorithms and flash device characteristics constantly change through the life of the controller. But data center managers expect the controller to constantly make design tradeoffs in order to improve in-system performance. These changes are also impacted by the traffic patterns of the application. The challenge is to provide hardware-accelerated algorithms that can change frequently in the field. The Context: Solid-state storage based on NAND flash technology has become an important new lever for improving data-center performance and predictability. But the solid-state drives also present cost and predictability issues of their own. Surprisingly, these issues can be addressed through design of the flash memory controller. The NAND array has become the dominating factor for the cost of the drive. Increasing the life expectancy of the NAND array obviously reduces the total cost of ownership of the system. Also, the ability to reconfigure the controller to implement improved algorithms or to adapt to a new generation of flash chips extends the system life. Performance predictability and throughput are key concerns for data-center managers. -

SAS Enters the Mainstream Although Adoption of Serial Attached SCSI

SAS enters the mainstream By the InfoStor staff http://www.infostor.com/articles/article_display.cfm?Section=ARTCL&C=Newst&ARTICLE_ID=295373&KEYWORDS=Adaptec&p=23 Although adoption of Serial Attached SCSI (SAS) is still in the infancy stages, the next 12 months bode well for proponents of the relatively new disk drive/array interface. For example, in a recent InfoStor QuickVote reader poll, 27% of the respondents said SAS will account for the majority of their disk drive purchases over the next year, although Serial ATA (SATA) topped the list with 37% of the respondents, followed by Fibre Channel with 32%. Only 4% of the poll respondents cited the aging parallel SCSI interface (see figure). However, surveys of InfoStor’s readers are skewed by the fact that almost half of our readers are in the channel (primarily VARs and systems/storage integrators), and the channel moves faster than end users in terms of adopting (or at least kicking the tires on) new technologies such as serial interfaces. To get a more accurate view of the pace of adoption of serial interfaces such as SAS, consider market research predictions from firms such as Gartner and International Data Corp. (IDC). Yet even in those firms’ predictions, SAS is coming on surprisingly strong, mostly at the expense of its parallel SCSI predecessor. For example, Gartner predicts SAS disk drives will account for 16.4% of all multi-user drive shipments this year and will garner almost 45% of the overall market in 2009 (see figure on p. 18). -

Fragmentation in Large Object Repositories

Fragmentation in Large Object Repositories (Experience Paper). Russell Sears, University of California, Berkeley; Catharine van Ingen, Microsoft Research.

ABSTRACT Fragmentation leads to unpredictable and degraded application performance. While these problems have been studied in detail for desktop filesystem workloads, this study examines newer systems such as scalable object stores and multimedia repositories. Such systems use a get/put interface to store objects. In principle, databases and filesystems can support such applications efficiently, allowing system designers to focus on complexity, deployment cost and manageability. Although theoretical work proves that certain storage policies behave optimally for some workloads, these policies often behave poorly in practice. Most storage benchmarks focus on short-term behavior or do not measure fragmentation. We compare SQL Server to NTFS and find that fragmentation dominates performance when object sizes exceed 256KB-1MB. NTFS

1. INTRODUCTION Application data objects continue to increase in size. Furthermore, the increasing popularity of web services and other network applications means that systems that once managed static archives of “finished” objects now manage frequently modified versions of application data. Rather than updating these objects in place, typical archives either store multiple versions of the objects (the V of WebDAV stands for “versioning” [25]), or simply do wholesale replacement (as in SharePoint Team Services [19]). Similarly, applications such as personal video recorders and media subscription servers continuously allocate and delete large, transient objects. Applications store large objects as some combination of files in the filesystem and as BLOBs (binary large objects) in a database. Only folklore is available regarding the tradeoffs. -

ECE 598 – Advanced Operating Systems Lecture 19

ECE 598 – Advanced Operating Systems Lecture 19 Vince Weaver http://web.eece.maine.edu/~vweaver 7 April 2016 Announcements • Homework #7 was due • Homework #8 will be posted 1 Why use FAT over ext2? • FAT simpler, easy to code • FAT supported on all major OSes • ext2 faster, more robust filename and permissions 2 btrfs • B-tree fs (similar to a binary tree, but with pages full of leaves) • overwrite filesystem (overwrite on modify) vs CoW • Copy on write. When writing to a file, old data is not overwritten. Since old data is not overwritten, crash recovery is better. Eventually old data is garbage collected • Data in extents 3 • Copy-on-write • Forest of trees: – sub-volumes – extent-allocation – checksum tree – chunk device – reloc • On-line defragmentation • On-line volume growth 4 • Built-in RAID • Transparent compression • Snapshots • Checksums on data and meta-data • De-duplication • Cloning – can make an exact snapshot of a file, copy-on-write; different from a link: different inodes but same blocks 5 Embedded • Designed to be small, simple, read-only? • romfs – 32 byte header (magic, size, checksum, name) – Repeating files (pointer to next [0 if none]), info, size, checksum, file name, file data • cramfs 6 ZFS Advanced OS from Sun/Oracle. Similar in idea to btrfs; indirect still, not extent based? 7 ReFS Resilient FS, Microsoft's answer to btrfs and zfs 8 Networked File Systems • Allow a centralized file server to export a filesystem to multiple clients. • Provide file level access, not just raw blocks (NBD) • Clustered filesystems also exist, where multiple servers work in conjunction. -
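The copy-on-write idea in the btrfs slides (a write never overwrites old data, and the old data is garbage collected later) can be illustrated with a toy block store. This is a minimal sketch, not btrfs code: the `CowStore` class, its fields, and its methods are hypothetical names invented for this example, and real filesystems track extents and trees rather than a flat dictionary.

```python
class CowStore:
    """Toy copy-on-write block store (illustrative only, not btrfs)."""

    def __init__(self):
        self.blocks = {}    # physical block number -> data
        self.mapping = {}   # logical block number -> physical block number
        self.next_free = 0  # next unused physical block number

    def write(self, logical, data):
        """Write data to a fresh physical block, then repoint the logical block.

        Returns the physical block that became stale, or None on first write.
        """
        physical = self.next_free
        self.next_free += 1
        self.blocks[physical] = data       # new data lands in a new block
        stale = self.mapping.get(logical)  # the old block is left untouched
        self.mapping[logical] = physical   # the pointer switch happens last
        return stale

    def read(self, logical):
        return self.blocks[self.mapping[logical]]

    def garbage_collect(self):
        """Free every physical block that no logical block points to."""
        live = set(self.mapping.values())
        stale = [p for p in self.blocks if p not in live]
        for p in stale:
            del self.blocks[p]
        return len(stale)


if __name__ == "__main__":
    store = CowStore()
    store.write(0, b"version 1")
    store.write(0, b"version 2")
    print(store.read(0))            # current contents of logical block 0
    print(store.garbage_collect())  # number of stale blocks reclaimed
```

Because the old block survives until the mapping is switched, a crash in the middle of `write` leaves the previous version readable, which is the crash-recovery benefit the slides mention.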

Ext4 File System and Crash Consistency

1 Ext4 file system and crash consistency Changwoo Min 2 Summary of last lectures • Tools: building, exploring, and debugging Linux kernel • Core kernel infrastructure • Process management & scheduling • Interrupt & interrupt handler • Kernel synchronization • Memory management • Virtual file system • Page cache and page fault 3 Today: ext4 file system and crash consistency • File system in Linux kernel • Design considerations of a file system • History of file system • On-disk structure of Ext4 • File operations • Crash consistency 4 File system in Linux kernel User space application (ex: cp) User-space Syscalls: open, read, write, etc. Kernel-space VFS: Virtual File System Filesystems ext4 FAT32 JFFS2 Block layer Hardware Embedded flash Hard disk USB drive 5 What is a file system fundamentally? int main(int argc, char *argv[]) { int fd; char buffer[4096]; struct stat stat_buf; DIR *dir; struct dirent *entry; /* 1. Path name -> inode mapping */ fd = open("/home/lkp/hello.c" , O_RDONLY); /* 2. File offset -> disk block address mapping */ pread(fd, buffer, sizeof(buffer), 0); /* 3. File meta data operation */ fstat(fd, &stat_buf); printf("file size = %lld\n", (long long)stat_buf.st_size); /* 4. Directory operation */ dir = opendir("/home"); entry = readdir(dir); printf("dir = %s\n", entry->d_name); return 0; } 6 Why do we care about the EXT4 file system? • Most widely-deployed file system • Default file system of major Linux distributions • File system used in Google data center • Default file system of Android kernel • Follows the traditional file system design 7 History of file system design 8 UFS (Unix File System) • The original UNIX file system • Designed by Dennis Ritchie and Ken Thompson (1974) • The first Linux file system (ext) and Minix FS have a similar layout 9 UFS (Unix File System) • Performance problem of UFS (and the first Linux file system) • Especially, long seek time between an inode and data block 10 FFS (Fast File System) • The file system of BSD UNIX • Designed by Marshall Kirk McKusick, et al. -

Partitioner Och Filsystem 2

Partitioner och filsystem 2 (Partitions and File Systems 2) File systems FAT Unix-like NTFS What is a file system? • Computers need a method for storing and retrieving data… • Reference model for file systems (Carrier) – File system category • Layout and size information – Content category • Clusters and blocks (data units) – Metadata category • Time information, size, access control • Addresses of allocated data units – File name category • Usually tied to the metadata – Application category • Quota • Journals • The most modern ones closely resemble relational databases Windows • NTFS (New Technology File System) – 6 versions exist; the newest are v3.0 (Windows 2000) and v3.1 (XP, 2003, Vista, 2008, 7), also called 5.0, 5.1, 5.2, 6.0 and 6.1 (after the OS version) – Support for Unicode, security, etc.; much more complex than FAT! – http://en.wikipedia.org/wiki/Ntfs • FAT 12/16/32, VFAT (long file names in Win95) – Still in use but not efficient for larger storage capacities (the cluster size) – Slower access than NTFS • Windows Future Storage (WinFS), a cancelled project; according to rumors it was an SQL database layered on top of an NTFS file system – Read more at: http://www.ntfs.com/ – And: http://en.wikipedia.org/wiki/WinFS FAT12, 16 and 32 • FAT12, found on floppy disks – Limited storage capacity – Designed for MS-DOS 1.0 • FAT16 was designed for larger disks – Older operating systems used it • MS-DOS 3.0, Win95 OSR1, NT 3.5 and NT 4.0 – Maximum disk size 2 GB • FAT32 arrived when disks larger than 2 GB appeared – Some older and all newer operating systems can use FAT32 • Windows 98/Me/2000/XP/2003/Vista/7 and 2008 • Limitations of FAT32 – The largest formattable volume is 32 GB (larger volumes can still be used, < 16 TiB) – Limited features in terms of compression, encryption, security and speed compared with NTFS • http://en.wikipedia.org/wiki/FAT_file_system exFAT • exFAT (Extended File Allocation Table, a.k.a. -

HP Pavilion Data Sheet

Windows®. Life without Walls™. HP recommends Windows 7. HP Pavilion Elite HPE-250f PC Highlights: • Intel® Core™ i7-860 Processor(2) • Genuine Windows® 7 Home Premium 64-bit(1) • Get powerful 64-bit performance with 8GB DDR3 system memory • 1 Terabyte hard drive(4) stores up to 220,000 songs or 176,000 photos(5) • Combination Blu-ray Disc player and SuperMulti DVD burner with LightScribe technology(6) • ATI Radeon™ HD 5770 Graphics Card • Wireless LAN 802.11b/g/n(19) • Front-panel 15-in-1 memory card reader • 24 x 7 toll-free phone support and one-year HP limited warranty provide priceless peace of mind Designed for powerful performance and elegance: Get serious about computing with the HP Pavilion Elite HPE-250f PC, a stylish, high-performance PC for your most demanding digital tasks. Premium performance levels enable an enhanced experience whether you’re working, e-mailing, editing photos or videos, gaming or connecting with friends online(10). The HP Pavilion Elite HPE-250f PC's flexibility and expandability let you add devices and features to support your growing needs. The elegant, chrome-accented design is sure to attract attention in any home décor. Your PC, your way, with HP & Windows® 7(1): Windows® 7 makes everyday tasks simple, and makes new things possible. • HP Advisor PC Discovery gives you access to software and services you need to get the most out of your Windows® 7 HP PC. • HP Support Assistant provides a rich set of troubleshooting tools to solve Windows® 7 HP PC problems faster. -

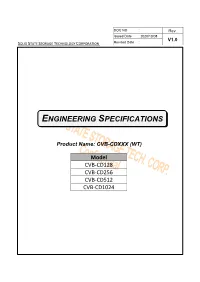

Engineering Specifications

DOC NO : Rev. Issued Date : 2020/10/08 V1.0 SOLID STATE STORAGE TECHNOLOGY CORPORATION 司 Revised Date : ENGINEERING SPECIFICATIONS Product Name: CVB-CDXXX (WT) Models: CVB-CD128, CVB-CD256, CVB-CD512, CVB-CD1024 Author: Ken Liao Version History: 0.1 Draft (2020/07/20); 1.0 First release (2020/10/08) Copyright 2020 SOLID STATE STORAGE TECHNOLOGY CORPORATION Disclaimer: The information in this document is subject to change without prior notice in order to improve reliability, design, and function and does not represent a commitment on the part of the manufacturer. In no event will the manufacturer be liable for direct, indirect, special, incidental, or consequential damages arising out of the use or inability to use the product or documentation, even if advised of the possibility of such damages. This document contains proprietary information protected by copyright. All rights are reserved. No part of this datasheet may be reproduced by any mechanical, electronic, or other means in any form without prior written permission of SOLID STATE STORAGE Technology Corporation. Table of Contents: 1 Introduction; 1.1 Overview -

Using Data Postcompensation and Predistortion to Tolerate Cell-To-Cell

2718 IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS I: REGULAR PAPERS, VOL. 57, NO. 10, OCTOBER 2010 Using Data Postcompensation and Predistortion to Tolerate Cell-to-Cell Interference in MLC NAND Flash Memory Guiqiang Dong, Student Member, IEEE, Shu Li, and Tong Zhang, Senior Member, IEEE

Abstract: With the appealing storage-density advantage, multilevel-per-cell (MLC) NAND Flash memory that stores more than 1 bit in each memory cell now largely dominates the global Flash memory market. However, due to the inherent smaller noise margin, the MLC NAND Flash memory is more subject to various device/circuit variability and noise, particularly as the industry is pushing the limit of technology scaling and a more aggressive use of MLC storage. Cell-to-cell interference has been well recognized as a major noise source responsible for raw-memory-storage reliability degradation. Leveraging the fact that cell-to-cell interference is a deterministic data-dependent process and can even be mathematically described with a simple formula, we present

than 1 bit in each memory cell (or floating-gate MOS transistor) by programming the cell threshold voltage into one of voltage windows, has been widely used to further improve the NAND Flash memory storage density. Because of its obvious advantages in terms of storage density and, hence, cost, MLC NAND Flash memory now largely dominates the global Flash memory market. In current design practice, most MLC NAND Flash memories store 2 bits per cell, while 3- and 4-bit-per-cell NAND Flash memories have been recently reported in the open literature [2]–[6]. -

Enhance Your Customer's Vista System with Datatraveler Readyflash

kingston.com/windowsvista Enhance your customer’s Vista system with DataTraveler ReadyFlash. Complement your Vista™ sales with a Kingston® DataTraveler® ReadyFlash USB Flash drive enhanced for Windows® ReadyBoost™. Ideal for systems with limited memory expansion capabilities, Windows ReadyBoost allows a user to utilize memory outside of system memory module upgrades. For instance, if a notebook has a maximum memory capacity of 1 GB installed, Windows ReadyBoost allows for usage of a Flash memory device, such as DataTraveler ReadyFlash as a more responsive storage medium for ReadyBoost cache. Kingston Part Number: DTR/1GB Description: 1-GB USB Flash drive enhanced for Windows ReadyBoost.

FAQ: What is ReadyBoost? ReadyBoost is a Microsoft Windows Vista feature which may improve system performance by allowing a compatible USB drive to be configured with a ReadyBoost cache. for Windows ReadyBoost with at least 512 MB of available space. Can I enable ReadyBoost with any USB Flash drive? No, Vista will only offer the option of using ReadyBoost on USB Flash drives that have met both a specific performance and a capacity threshold. Will Kingston offer additional Flash memory devices for ReadyBoost? Yes, Kingston will offer additional Flash products that are enhanced for Windows ReadyBoost. Please contact your Kingston representative for further details. Can I use more than one ReadyBoost enabled device at a time? Windows Vista allows for only one device enabled for ReadyBoost at a time. How much memory should I allocate for ReadyBoost? If you have selected to use ReadyBoost, you can

For more information, please contact your dedicated Kingston representative: Craig McQueenie – Central (416) 315-9097; Daniel Ouimet – Quebec (514) 244-4901; Patrick Jan – West (604) 961-8038; Royal Taylor – Central (416) 617-6714 -

Toshiba Notebooks

Toshiba Notebooks, June 28, 2005
• SATELLITE: Stylish, feature-packed value. Offering outstanding quality combined with high performance and attractive prices, these notebooks are ideal when impressive design, multimedia performance, mobility and reliability are needed, anywhere, anytime.
• QOSMIO: The art of smart entertainment on the move. Born from the convergence of the AV and PC worlds, Qosmio allows you to create your own personal universe. Designed to be the best mobile hub for smart entertainment, Qosmio integrates advanced technologies to make your life simpler and more entertaining.
• SATELLITE PRO: The perfect companions for SMBs. From the entry-level Satellite Pro, which offers great-value power, mobility and performance to the stylish, feature-packed widescreen model, Toshiba's Satellite Pro range is sure to provide an all-in-one notebook guaranteed to suit your business needs.
• TECRA: First-class scalability, power and connectivity for corporate computing. The Tecra range brings the benefits of seamless wireless connectivity and exceptional mobile performance to business computing, with state-of-the-art features, comprehensive expansion and complete mobility.
• PORTÉGÉ: Ultimate mobility: Redefining ultra-portable wireless computing. The Portégé series offers the ultimate in portability, from the ultra-thin Portégé R200 to the impressive, stylish Portégé M300 and the innovative Tablet PC Portégé M200.
• LIBRETTO: The return of the mini-notebook. The innovatively designed libretto U100 heralds powerful, reliable portability in celebration of 20 years of leadership in mobile computing.
Product specification and prices are subject to change without prior notice. Errors and omissions excepted. For further information on Toshiba options & services visit Toshiba Europe GmbH Tel. -

Azor: Using Two-Level Block Selection to Improve SSD-Based I/O Caches

Azor: Using Two-level Block Selection to Improve SSD-based I/O caches Yannis Klonatos∗†, Thanos Makatos∗, Manolis Marazakis∗, Michail D. Flouris∗, and Angelos Bilas∗† ∗Foundation for Research and Technology - Hellas (FORTH), Institute of Computer Science (ICS) 100 N. Plastira Ave., Vassilika Vouton, Heraklion, GR-70013, Greece †Department of Computer Science, University of Crete, P.O. Box 2208, Heraklion, GR 71409, Greece. {klonatos, mcatos, maraz, flouris, bilas}@ics.forth.gr

Abstract: Flash-based solid state drives (SSDs) exhibit potential for solving I/O bottlenecks by offering superior performance over hard disks for several workloads. In this work we design Azor, an SSD-based I/O cache that operates at the block-level and is transparent to existing applications, such as databases. Our design provides various choices for associativity, write policies and cache line size, while maintaining a high degree of I/O concurrency. Our main contribution is that we explore differentiation of HDD blocks according to their expected importance on system performance. We design and analyze a two-level block selection scheme that dynamically differentiates HDD blocks, and selectively places them in the limited space of the SSD cache. We implement Azor in the Linux kernel and evaluate its effectiveness experimentally using a server-type platform and large problem sizes with three I/O intensive workloads: TPC-H, SPECsfs2008, and Hammerora.

approaches that are application-specific and require application knowledge, intervention, and tuning, Azor transparently and dynamically places data blocks in the SSD cache as they flow in the I/O path between main memory and HDDs. In this work, we investigate the following problems:

TABLE I: HDD and SSD performance metrics.

| Metric | SSD | HDD |
|---|---|---|
| Price/capacity ($/GB) | $3 | $0.3 |
| Response time (ms) | 0.17 | 12.6 |
| Throughput (R/W) (MB/s) | 277/202 | 100/90 |
| IOPS (R/W) | 30,000/3,500 | 150/150 |
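The figures in Table I of the Azor excerpt are easier to compare as SSD-to-HDD ratios. The sketch below only does arithmetic on the values quoted in that table; the dictionary layout, key names, and the `ssd_to_hdd_ratio` helper are assumptions made for illustration and do not come from the paper.

```python
# Values copied from Table I of the Azor excerpt.
# The key names and structure are illustrative, not from the paper.
TABLE_I = {
    "price_per_gb_usd": {"ssd": 3.0, "hdd": 0.3},
    "response_time_ms": {"ssd": 0.17, "hdd": 12.6},
    "read_throughput_mb_s": {"ssd": 277, "hdd": 100},
    "write_throughput_mb_s": {"ssd": 202, "hdd": 90},
    "read_iops": {"ssd": 30_000, "hdd": 150},
    "write_iops": {"ssd": 3_500, "hdd": 150},
}


def ssd_to_hdd_ratio(metric: str) -> float:
    """Return the SSD value divided by the HDD value for one table row."""
    row = TABLE_I[metric]
    return row["ssd"] / row["hdd"]


if __name__ == "__main__":
    for metric in TABLE_I:
        print(f"{metric}: SSD/HDD = {ssd_to_hdd_ratio(metric):.2f}")
```

Read this way, the table shows the trade-off that motivates using the SSD as a cache in front of HDDs: roughly ten times the cost per gigabyte, in exchange for a much lower response time and far higher IOPS.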