Download the Data on Their Computer and Then Upload Them to the Neurosuites Server

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Interfacing Apache HTTP Server 2.4 with External Applications

Interfacing Apache HTTP Server 2.4 with External Applications Jeff Trawick Interfacing Apache HTTP Server 2.4 with External Applications Jeff Trawick November 6, 2012 Who am I? Interfacing Apache HTTP Server 2.4 with External Applications Met Unix (in the form of Xenix) in 1985 Jeff Trawick Joined IBM in 1990 to work on network software for mainframes Moved to a different organization in 2000 to work on Apache httpd Later spent about 4 years at Sun/Oracle Got tired of being tired of being an employee of too-huge corporation so formed my own too-small company Currently working part-time, coding on other projects, and taking classes Overview Interfacing Apache HTTP Server 2.4 with External Applications Jeff Trawick Huge problem space, so simplify Perspective: \General purpose" web servers, not minimal application containers which implement HTTP \Applications:" Code that runs dynamically on the server during request processing to process input and generate output Possible web server interactions Interfacing Apache HTTP Server 2.4 with External Applications Jeff Trawick Native code plugin modules (uhh, assuming server is native code) Non-native code + language interpreter inside server (Lua, Perl, etc.) Arbitrary processes on the other side of a standard wire protocol like HTTP (proxy), CGI, FastCGI, etc. (Java and \all of the above") or private protocol Some hybrid such as mod fcgid mod fcgid as example hybrid Interfacing Apache HTTP Server 2.4 with External Applications Jeff Trawick Supports applications which implement a standard wire protocol, no restriction on implementation mechanism Has extensive support for managing the application[+interpreter] processes so that the management of the application processes is well-integrated with the web server Contrast with mod proxy fcgi (pure FastCGI, no process management) or mod php (no processes/threads other than those of web server). -

Python for Bioinformatics, Second Edition

PYTHON FOR BIOINFORMATICS SECOND EDITION CHAPMAN & HALL/CRC Mathematical and Computational Biology Series Aims and scope: This series aims to capture new developments and summarize what is known over the entire spectrum of mathematical and computational biology and medicine. It seeks to encourage the integration of mathematical, statistical, and computational methods into biology by publishing a broad range of textbooks, reference works, and handbooks. The titles included in the series are meant to appeal to students, researchers, and professionals in the mathematical, statistical and computational sciences, fundamental biology and bioengineering, as well as interdisciplinary researchers involved in the field. The inclusion of concrete examples and applications, and programming techniques and examples, is highly encouraged. Series Editors N. F. Britton Department of Mathematical Sciences University of Bath Xihong Lin Department of Biostatistics Harvard University Nicola Mulder University of Cape Town South Africa Maria Victoria Schneider European Bioinformatics Institute Mona Singh Department of Computer Science Princeton University Anna Tramontano Department of Physics University of Rome La Sapienza Proposals for the series should be submitted to one of the series editors above or directly to: CRC Press, Taylor & Francis Group 3 Park Square, Milton Park Abingdon, Oxfordshire OX14 4RN UK Published Titles An Introduction to Systems Biology: Statistical Methods for QTL Mapping Design Principles of Biological Circuits Zehua Chen Uri Alon -

Mastering Flask Web Development Second Edition

Mastering Flask Web Development Second Edition Build enterprise-grade, scalable Python web applications Daniel Gaspar Jack Stouffer BIRMINGHAM - MUMBAI Mastering Flask Web Development Second Edition Copyright © 2018 Packt Publishing All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing or its dealers and distributors, will be held liable for any damages caused or alleged to have been caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. Commissioning Editor: Amarabha Banerjee Acquisition Editor: Devanshi Doshi Content Development Editor: Onkar Wani Technical Editor: Diksha Wakode Copy Editor: Safis Editing Project Coordinator: Sheejal Shah Proofreader: Safis Editing Indexer: Rekha Nair Graphics: Alishon Mendonsa Production Coordinator: Aparna Bhagat First published: September 2015 Second Edition: October 2018 Production reference: 1301018 Published by Packt Publishing Ltd. Livery Place 35 Livery Street Birmingham B3 2PB, UK. ISBN 978-1-78899-540-5 www.packtpub.com mapt.io Mapt is an online digital library that gives you full access to over 5,000 books and videos, as well as industry leading tools to help you plan your personal development and advance your career. -

Uwsgi Documentation Release 2.0

uWSGI Documentation Release 2.0 uWSGI Jun 17, 2020 Contents 1 Included components (updated to latest stable release)3 2 Quickstarts 5 3 Table of Contents 33 4 Tutorials 303 5 Articles 343 6 uWSGI Subsystems 375 7 Scaling with uWSGI 457 8 Securing uWSGI 485 9 Keeping an eye on your apps 503 10 Async and loop engines 511 11 Web Server support 525 12 Language support 541 13 Other plugins 629 14 Broken/deprecated features 633 15 Release Notes 643 16 Contact 741 17 Commercial support 743 18 Donate 745 19 Sponsors 747 20 Indices and tables 749 i Python Module Index 751 Index 753 ii uWSGI Documentation, Release 2.0 The uWSGI project aims at developing a full stack for building hosting services. Application servers (for various programming languages and protocols), proxies, process managers and monitors are all implemented using a common api and a common configuration style. Thanks to its pluggable architecture it can be extended to support more platforms and languages. Currently, you can write plugins in C, C++ and Objective-C. The “WSGI” part in the name is a tribute to the namesake Python standard, as it has been the first developed plugin for the project. Versatility, performance, low-resource usage and reliability are the strengths of the project (and the only rules fol- lowed). Contents 1 uWSGI Documentation, Release 2.0 2 Contents CHAPTER 1 Included components (updated to latest stable release) The Core (implements configuration, processes management, sockets creation, monitoring, logging, shared memory areas, ipc, cluster membership and the uWSGI Subscription Server) Request plugins (implement application server interfaces for various languages and platforms: WSGI, PSGI, Rack, Lua WSAPI, CGI, PHP, Go . -

An Analysis of Python's Topics, Trends, and Technologies Through Mining Stack Overflow Discussions

1 An Analysis of Python’s Topics, Trends, and Technologies Through Mining Stack Overflow Discussions Hamed Tahmooresi, Abbas Heydarnoori, and Alireza Aghamohammadi F Abstract—Python is a popular, widely used, and general-purpose pro- (LDA) [3] to categorize discussions in specific areas such gramming language. In spite of its ever-growing community, researchers as web programming [4], mobile application development [5], have not performed much analysis on Python’s topics, trends, and tech- security [6], and blockchain [7]. However, none of them has nologies which provides insights for developers about Python commu- focused on Python. In this study, we shed light on the nity trends and main issues. In this article, we examine the main topics Python’s main areas of discussion through mining 2 461 876 related to this language being discussed by developers on one of the most popular Q&A websites, Stack Overflow, as well as temporal trends posts, from August 2008 to January 2019, using LDA based through mining 2 461 876 posts. To be more useful for the software topic modeling . We also analyze the temporal trends of engineers, we study what Python provides as the alternative to popular the extracted topics to find out how the developers’ interest technologies offered by common programming languages like Java. is changing over the time. After explaining the topics and Our results indicate that discussions about Python standard features, trends of Python, we investigate the technologies offered web programming, and scientific programming 1 are the most popular by Python, which are not properly revealed by the topic areas in the Python community. -

Master Thesis

MASTER THESIS TITLE: Analysis and evaluation of high performance web servers MASTER DEGREE: Master in Science in Telecommunication Engineering & Management AUTHOR: Albert Hidalgo Barea DIRECTOR: Rubén González Blanco SUPERVISOR: Roc Meseguer Pallarès DATE: July 13 th 2011 Title: Analysis and evaluation of high performance web servers Author: Albert Hidalgo Barea Director: Rubén González Blanco Supervisor: Roc Meseguer Pallarès Date: July 13 th 2011 Overview Web servers are a very important tool when providing users with requested content on the Internet. Usage of the Internet is growing day-by-day, making those software applications essential. In the first part of the thesis, the web server world will be introduced to the reader, by giving a brief explanation of some of the available technologies as well as different dynamic protocols. Also, as there are different web servers available in the market, during this report it will be chosen the best performing ones. So, it will be presented a comparative chart between all of them in order to show the most important features of each one. Defining the scenario and the test cases is mandatory. For this reason, it is described the used hardware and software used to perform those benchmarks. The hardware is maintained equal during the whole test process, in order to let web server’s performance gaps to their internal architecture. Operating system and benchmarking tools are also described and given some examples. Furthermore, test cases are chosen to show some strengths and weakness of each web server, enabling us to compare the relative performance between them. Finally, the last part of the report consists on presenting the obtained results during the benchmark process, as well as presenting some lessons learned during the curse of the whole thesis, summing-up with some conclusions. -

Deploying Python Applications with Httpd

Introduction Generalities Brass Tacks Configuration/deployment example For Further Study Deploying Python Applications with httpd Jeff Trawick http://emptyhammock.com/ [email protected] April 14, 2015 ApacheCon US 2015 Introduction Generalities Brass Tacks Configuration/deployment example For Further Study Get these slides... http://emptyhammock.com/projects/info/slides.html Introduction Generalities Brass Tacks Configuration/deployment example For Further Study Revisions Get a fresh copy of the slide deck before using any recipes. If I find errors before this deck is marked as superseded on the web page, I'll update the .pdf and note important changes here. (And please e-mail me with any problems you see.) Introduction Generalities Brass Tacks Configuration/deployment example For Further Study Who am I? • My day jobs over the last 25 years have included work on several products which were primarily based on or otherwise included Apache HTTP Server as well as lower-level networking products and web applications. My primary gig now is with a Durham, North Carolina company which specializes in Django application development. • I've been an httpd committer since 2000. A general functional area of Apache HTTP Server that I have helped maintain over the years (along with many others) is the interface with applications running in different processes, communicating with the server using CGI, FastCGI, or SCGI protocols. Introduction Generalities Brass Tacks Configuration/deployment example For Further Study mod wsgi vs. mod proxy-based solution I won't cover mod wsgi in this talk. I currently use it for a couple of applications but am migrating away from it, primarily for these reasons: • mod proxy supports more separation between web server and application • Supports moving applications around or running applications in different modes for debugging without changing web server • Supports drastic changes to the web front-end without affecting application • No collision between software stack in web server vs. -

Comparative Study on Python Web Frameworks: Flask and Django

Devndra Ghimire Comparative study on Python web frameworks: Flask and Django Metropolia University of Applied Sciences Bachelor of Engineering Media Engineering Bachelor’s Thesis 5 May 2020 Abstract Devndra Ghimire Author(s) Comparative study on Python web frameworks: Flask and Title Django. Number of Pages 37 pages + 0 appendices Date 5 May 2010 Degree Bachelor of Engineering Degree Programme Media Engineering Specialisation option Software Engineering Instructor(s) Kari Salo, Senior Lecturer The purpose of the thesis was to the study the various features, advantages, and the limita- tion of two web development frameworks for Python programming language. It aims to com- pare the usage of Django and Flask frameworks from a novice point of view. The theoretical part of the thesis presents the various types of programming languages and web technolo- gies. In the practical part, however, the study is divided into two parts, each part observing the respective web application framework. In order to perform the comparison, a social network and eCommerce like application was built for Flask and Django respectively. The comparison was started by developing the social network application first with Flask and finished with the e-commerce application using Django. Python programing language, SQLite database for the backend and HTML, JavaS- cript, and Ajax were used for the frontend technology. At the end of the project, both appli- cations were deployed to the cloud platform called Heroku. After the comparison, it was found that the most significant advantages of Flask were that it provides simplicity, flexibility, fine-grained control and quick and easy to learn. On the other hand, Django was easy to work with because of its extensive features and support for librar- ies. -

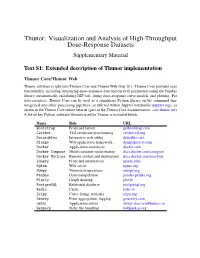

Visualization and Analysis of High-Throughput Dose-Response Datasets Supplementary Material

Thunor: Visualization and Analysis of High-Throughput Dose-Response Datasets Supplementary Material Text S1: Extended description of Thunor implementation Thunor Core/Thunor Web Thunor software is split into Thunor Core and Thunor Web (Fig. S1). Thunor Core provides core functionality, including structuring dose–response data and curve fit parameters using the Pandas library, automatically calculating DIP rate, fitting dose–response curve models, and plotting. For data scientists, Thunor Core can be used as a standalone Python library on the command line, integrated into other processing pipelines, or utilized within Jupyter notebooks (jupyter.org), as shown in the Thunor Core online tutorial (part of the Thunor Core documentation, core.thunor.net). A list of key Python software libraries used by Thunor is included below. Name Role URL Bootstrap Front end layout getbootstrap.com Certbot TLS certificate provisioning certbot.eff.org Datatables Interactive web tables datatables.net Django Web application framework djangoproject.com Docker Application containers docker.com Docker Compose Multi-container orchestration docs.docker.com/compose Docker Machine Remote control and deployment docs.docker.com/machine jQuery Front end interactivity jquery.com Nginx Web server nginx.org Numpy Numerical operations numpy.org Pandas Data manipulation pandas.pydata.org Plotly Graph drawing plot.ly PostgreSQL Relational database postgresql.org Redis Cache redis.io Scipy Curve fitting, statistics scipy.org Sentry Error aggregation, logging getsentry.com uWSGI Application server uwsgi-docs.readthedocs.io Webpack Static file bundling webpack.js.org Thunor Core Thunor Core is a Python package, which can be used interactively (Python prompt or within Jupyter Notebooks) or integrated into other data processing pipelines. -

Green Cluster of Low-Power Embedded Hardware Server Accelerators

GREEN CLUSTER OF LOW-POWER EMBEDDED HARDWARE SERVER ACCELERATORS NAVID MOHAGHEGH A DISSERTATION SUBMITTED TO THE FACULTY OF GRADUATE STUDIES IN FULFILMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTERS OF APPLIED SCIENCE AND ENGINEERING GRADUATE PROGRAM IN COMPUTER SCIENCE AND ENGINEERING YORK UNIVERSITY, TORONTO, ONTARIO NOVEMBER 2011 Library and Archives Bibliotheque et Canada Archives Canada Published Heritage Direction du 1+1 Branch Patrimoine de I'edition 395 Wellington Street 395, rue Wellington Ottawa ON K1A0N4 Ottawa ON K1A 0N4 Canada Canada Your file Votre reference ISBN: 978-0-494-88639-7 Our file Notre reference ISBN: 978-0-494-88639-7 NOTICE: AVIS: The author has granted a non L'auteur a accorde une licence non exclusive exclusive license allowing Library and permettant a la Bibliotheque et Archives Archives Canada to reproduce, Canada de reproduire, publier, archiver, publish, archive, preserve, conserve, sauvegarder, conserver, transmettre au public communicate to the public by par telecommunication ou par I'lnternet, preter, telecommunication or on the Internet, distribuer et vendre des theses partout dans le loan, distrbute and sell theses monde, a des fins commerciales ou autres, sur worldwide, for commercial or non support microforme, papier, electronique et/ou commercial purposes, in microform, autres formats. paper, electronic and/or any other formats. The author retains copyright L'auteur conserve la propriete du droit d'auteur ownership and moral rights in this et des droits moraux qui protege cette these. Ni thesis. Neither the thesis nor la these ni des extraits substantiels de celle-ci substantial extracts from it may be ne doivent etre imprimes ou autrement printed or otherwise reproduced reproduits sans son autorisation. -

Weblate Image Guide Weblate Stack Components

Weblate Image Guide Version 2.16 Websoft9 Weblate is a pre-configured, ready to run image for running Weblate on Alibaba Cloud. Weblate is a free web-based translation tool with tight version control integration. It features simple and clean user interface, propagation of translations across components within a project, quality checks and automatic linking to source files. Weblate Stack Components Application Software(Weblate2.16) Weblate project directory: /data/wwwroot/weblate Weblate application directory: /data/wwwroot/weblate/weblate Weblate configuration file: /data/wwwroot/weblate/weblate/settings.py Application Server(Python2.7,virtualenv 15.0.1,django 1.11.4,uwsgi 2.0.12,nginx 1.10.3) Weblate for python Virtualenv directory: /data/wwwroot/weblate/env Nginx configuration directory: /etc/nginx/ Nginx for Weblate configuration file: /etc/nginx/sites-enabled/weblate uwsgi configuration directory: /etc/uwsgi uwsgi for weblate configuration file: /etc/uwsgi/apps-enabled/weblate.ini Database(MYSQL5.7) MySQL directory: /var/lib/mysql The default database name for Weblate: weblate Verify the Image After the installation of Image,please verify it Login on Alibaba Cloud console,get you Internet IP Address Open you Chrome or Firefox on your local PC,visit the http://Internet IP Address/ If verify successfully,you can enter the Start Installation page of this Image If no response from browser,please check the Security Group settings (https://www.alibabacloud.com/help/doc-detail/25471.htm) to ensure that port 80 is been allowed Start to use Weblate Using Chrome or Firefox to visit: http://Internet IP Address/ to start using this application.Following is the steps: 1. -

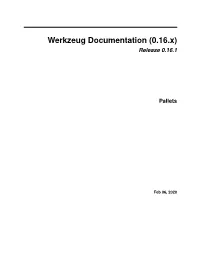

Werkzeug Documentation (0.16.X) Release 0.16.1

Werkzeug Documentation (0.16.x) Release 0.16.1 Pallets Feb 06, 2020 Contents 1 Getting Started 3 1.1 Installation................................................3 1.2 Transition to Werkzeug 1.0........................................5 1.3 Werkzeug Tutorial............................................6 1.4 API Levels................................................ 14 1.5 Quickstart................................................ 15 2 Serving and Testing 21 2.1 Serving WSGI Applications....................................... 21 2.2 Test Utilities............................................... 26 2.3 Debugging Applications......................................... 32 3 Reference 37 3.1 Request / Response Objects....................................... 37 3.2 URL Routing............................................... 54 3.3 WSGI Helpers.............................................. 67 3.4 Filesystem Utilities............................................ 74 3.5 HTTP Utilities.............................................. 74 3.6 Data Structures.............................................. 83 3.7 Utilities.................................................. 99 3.8 URL Helpers............................................... 106 3.9 Context Locals.............................................. 112 3.10 Middleware................................................ 115 3.11 HTTP Exceptions............................................ 121 4 Deployment 127 4.1 Application Deployment......................................... 127 5 Contributed Modules 133 5.1 Contributed