Ensuring Application Uptime in the Cloud: The Importance of Uptime



Recommended publications

-

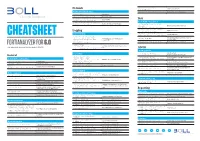

FortiAnalyzer

Network diagnose fortilogd Device message rate msgrate-device Network Troubleshooting diagnose fortilogd msgrate-type Message rate for each log type execute ping [host] Ping utility execute traceroute [host] Traceroute utility diag sniffer packet <interface> Packet sniffer Disk <filter> <level> <timestamp> Disk / RAID / Virtual Disk config system fortiview settings Resolve IP address to hostname set resolve-ip enable config system locallog disk setting What happens with oldest logs set diskfull nolog / overwrite Logging diagnose system raid [option] RAID information status, hwinfo, alarms Log Forwarding diagnose system disk [option] Disk information info, health, errors, attributes CHEATSHEET config system log-forward edit log-aggregation <id> For virtual machines: provides a list of Forwarding logs to FortiAnalyzer / execute lvm info available disks aggregation-client set mode Syslog / CEF <realtime, execute lvm extend <disk nr.> For virtual machines: Add disk FORTIANALYZER FOR 6.0 aggregation, disable> config system Configure the FortiAnalyzer that receives © BOLL Engineering AG, FortiAnalyzer Cheat Sheet Version 1.1 / 08.02.2019 log-forward-service logs ADOM set accept-aggregation enable ADOM operation Log Backup config system global ADOM settings set adom-status [en/dis] Enable or disable ADOM mode General execute backup logs config system global Set ADOM mode to normal or advanced / Default device information <device name | all> for VDOMs) <ftp | sftp | scp> <server ip> Backup logs to external storage set adom-mode [normal/advanced] -

Introduction to UNIX at MSI June 23, 2015 Presented by Nancy Rowe

The Minnesota Supercomputing Institute for Advanced Computational Research
www.msi.umn.edu/tutorial/
© 2015 Regents of the University of Minnesota. All rights reserved.

Overview
• UNIX Overview
• Logging into MSI

Frequently Asked Questions
msi.umn.edu > Resources > FAQ
Website will be updated soon

What’s the difference between Linux and UNIX?
The terms can be used interchangeably

UNIX
• UNIX is the operating system of choice for engineering and scientific workstations
• Originally developed in the late 1960s
• Unix is flexible, secure and based on open standards
• Programs are often designed “to do one simple thing right”
• Unix provides ways for interconnecting these simple programs to work together and perform more complex tasks

Getting Started
• MSI account
• Service Units required to access MSI HPC systems
• Open a terminal while sitting at the machine
• A shell provides an interface for the user to interact with the operating system
• BASH is the default shell at MSI

Bastion Host
• login.msi.umn.edu
• Connect to bastion host before connecting to HPC systems
• Cannot run software on bastion host (login.msi.umn.edu)
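The point above about interconnecting simple programs can be illustrated with a pipeline. This is only a minimal sketch: the word list is made-up sample data, and the variable name `top_word` is hypothetical, not something from the MSI tutorial.

```shell
#!/bin/sh
# Each program does one simple thing; the pipe (|) feeds the output
# of one program into the input of the next.
# The four input words are invented sample data.
top_word=$(printf 'pear\napple\npear\nfig\n' \
  | sort \
  | uniq -c \
  | sort -rn \
  | head -n 1 \
  | awk '{print $2}')

echo "Most frequent word: $top_word"
```

Here `sort` groups identical lines, `uniq -c` counts them, `sort -rn` orders the counts from highest to lowest, `head` keeps the first line, and `awk` prints just the word.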

Chapter 10 SHELL Substitution and I/O Operations

10.1 Command Substitution

Command substitution is the mechanism by which the shell runs a given set of commands and then substitutes their output in place of the commands.

Syntax: Command substitution is performed when a command is written as:

    `command`

When performing command substitution, make sure that you use the backquote, not the single-quote character.

Example: Command substitution is generally used to assign the output of a command to a variable. Each of the following examples demonstrates command substitution:

    #!/bin/bash
    DATE=`date`
    echo "Date is $DATE"
    USERS=`who | wc -l`
    echo "Logged in user are $USERS"
    UP=`date ; uptime`
    echo "Uptime is $UP"

This will produce the following result:

    Date is Thu Jul 2 03:59:57 MST 2009
    Logged in user are 1
    Uptime is Thu Jul 2 03:59:57 MST 2009
    03:59:57 up 20 days, 14:03, 1 user, load avg: 0.13, 0.07, 0.15

10.2 Shell Input/Output Redirections

Most Unix system commands take input from your terminal and send the resulting output back to your terminal. A command normally reads its input from a place called standard input, which happens to be your terminal by default. Similarly, a command normally writes its output to standard output, which is also your terminal by default.

Output Redirection: The output from a command normally intended for standard output can easily be diverted to a file instead. This capability is known as output redirection. If the notation > file is appended to any command that normally writes its output to standard output, the output of that command is written to file instead of your terminal.

Consider the following who command, which redirects the complete output of the command to the file users.
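As a small sketch of the two mechanisms described above, the script below combines command substitution with output redirection. It uses the `$(...)` form, which bash accepts as an equivalent of backquotes. The temporary file and the variable names `outfile`, `count`, and `saved` are assumptions made for illustration and do not come from the chapter.

```shell
#!/bin/bash
# Hypothetical scratch file used only for this illustration.
outfile="$(mktemp)"

# Command substitution: the output of the pipeline replaces $(...).
count=$(printf 'alice\nbob\n' | wc -l)
count=$((count))   # strip padding that some wc implementations add

echo "Line count is $count"

# Output redirection: '>' writes standard output to a file
# instead of the terminal.
echo "Line count is $count" > "$outfile"

# Read the file back with another command substitution, then clean up.
saved=$(cat "$outfile")
rm -f "$outfile"
```

The `$(...)` form nests more easily than backquotes, which is why it is often preferred in new scripts; both forms behave the same way in this example.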

The Linux Command Line Presentation to Linux Users of Victoria

Beginners Workshop, August 18, 2012
http://levlafayette.com

What Is The Command Line?

1.1 A text-based user interface that provides an environment to access the shell, which interfaces with the kernel, which is the lowest abstraction layer to system resources (e.g., processors, I/O). Examples would include CP/M, MS-DOS, and various UNIX command line interfaces.

1.2 Linux is the kernel; GNU is a typical suite of commands, utilities, and applications. The Linux kernel may be accessed by many different shells, e.g., the original UNIX shell (sh), the TENEX C shell (tcsh), the Korn shell (ksh), and, explored in this presentation, the Bourne-Again Shell (bash).

1.3 The command line interface can be contrasted with the graphical user interface (GUI). A GUI typically consists of a window, icon, menu, pointing-device (WIMP) suite, which is popular among casual users. Examples include MS-Windows and the X Window System.

1.4 A critical difference worth noting is that in UNIX-derived systems (such as Linux and Mac OS), the GUI is an application launched from the command-line interface, whereas with operating systems like contemporary versions of MS-Windows, the GUI is core and the command prompt is a native MS-Windows application.

Why Use The Command Line?

2.1 The command line uses significantly fewer resources to carry out the same task; it requires less processor power, less memory, less hard-disk space, etc. Thus, it is preferred on systems where performance is considered critical, e.g., supercomputers and embedded systems.

Bash Guide for Beginners

Machtelt Garrels
Garrels BVBA
<tille wants no spam _at_ garrels dot be>
Version 1.11, last updated 20081227 Edition

Table of Contents

Introduction
1. Why this guide?
2. Who should read this book?
3. New versions, translations and availability
4. Revision History
5. Contributions
6. Feedback
7. Copyright information
8. What do you need?
9. Conventions used in this

MultiPing Version 3 Product Manual

MultiPing version 3 Product Manual
(c) 2002, 2014 Nessoft, LLC

All rights reserved. No parts of this work may be reproduced in any form or by any means - graphic, electronic, or mechanical, including photocopying, recording, taping, or information storage and retrieval systems - without the written permission of the publisher.

Products that are referred to in this document may be either trademarks and/or registered trademarks of the respective owners. The publisher and the author make no claim to these trademarks.

While every precaution has been taken in the preparation of this document, the publisher and the author assume no responsibility for errors or omissions, or for damages resulting from the use of information contained in this document or from the use of programs and source code that may accompany it. In no event shall the publisher and the author be liable for any loss of profit or any other commercial damage caused or alleged to have been caused directly or indirectly by this document.

Printed: August 2014

Special thanks to: Publisher All the people who contributed to this documentation and to the Nessoft, LLC PingPlotter software. Managing Editor Special recognition to: Pete Ness Pierre Schwab Technical Editor Tom Larrow Jeff Murri Jenna Clara Andrea, D.D., Nina Mele Cam M. Larry M.

Table of Contents
Part I Welcome
Part II Support / Help
Part III Licensing
Part IV Getting Started
1 Things to do with MultiPing

Command Reference Guide for Cisco Prime Infrastructure 3.9

Command Reference Guide for Cisco Prime Infrastructure 3.9 First Published: 2020-12-17 Americas Headquarters Cisco Systems, Inc. 170 West Tasman Drive San Jose, CA 95134-1706 USA http://www.cisco.com Tel: 408 526-4000 800 553-NETS (6387) Fax: 408 527-0883 THE SPECIFICATIONS AND INFORMATION REGARDING THE PRODUCTS IN THIS MANUAL ARE SUBJECT TO CHANGE WITHOUT NOTICE. ALL STATEMENTS, INFORMATION, AND RECOMMENDATIONS IN THIS MANUAL ARE BELIEVED TO BE ACCURATE BUT ARE PRESENTED WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED. USERS MUST TAKE FULL RESPONSIBILITY FOR THEIR APPLICATION OF ANY PRODUCTS. THE SOFTWARE LICENSE AND LIMITED WARRANTY FOR THE ACCOMPANYING PRODUCT ARE SET FORTH IN THE INFORMATION PACKET THAT SHIPPED WITH THE PRODUCT AND ARE INCORPORATED HEREIN BY THIS REFERENCE. IF YOU ARE UNABLE TO LOCATE THE SOFTWARE LICENSE OR LIMITED WARRANTY, CONTACT YOUR CISCO REPRESENTATIVE FOR A COPY. The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB's public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California. NOTWITHSTANDING ANY OTHER WARRANTY HEREIN, ALL DOCUMENT FILES AND SOFTWARE OF THESE SUPPLIERS ARE PROVIDED “AS IS" WITH ALL FAULTS. CISCO AND THE ABOVE-NAMED SUPPLIERS DISCLAIM ALL WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING, WITHOUT LIMITATION, THOSE OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THIS MANUAL, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES. -

OAM Tutorial V5

Operations, Maintenance and Administration (OAM) Tutorial
83rd IETF, Paris, France, March 2012
Tissa Senevirathne and Sam K Aldrin

What is OAM
Means different things to different people and organizations. Worse, sometimes it means different things to different people within the same organization.
IETF standardized the meaning of OAM within the IETF
◦ June 2011, RFC 6291

IETF definition of OAM (RFC 6291)
Operations: Operational activities to keep the network up and running, e.g. monitoring, finding faults.
Administration: Involves keeping track of network resources, e.g. bookkeeping (available ports, BW).
Maintenance: Involves repair and upgrades, e.g. software upgrades, configurations, corrective and preventive measures.

Scope of the Tutorial
Today’s presentation mainly focuses on the IETF-defined Operations aspects of OAM. A summary of applicable Administrative and Maintenance IETF standards is presented.

Important Terminologies
Before we dive deeper, it is important to understand some of the terminologies and their meanings.
What are they?
◦ Various organizations (IEEE, ITU-T, IETF) all have their version
◦ We will discuss here a selected set of definitions from RFC 5860, RFC 6371 and draft-ietf-opsawg-oam-overview-05
A good understanding of these terminologies will help us to appreciate modern OAM protocols better.

Important Terminologies
Maintenance Point (MP)
◦ A functional entity defined within a node that either initiates or reacts to an OAM message
Maintenance Entity (ME)
◦ Point-to-point relationship between two MPs

FreeBSD Command Reference

FreeBSD command reference

Command structure

Each line you type at the Unix shell consists of a command optionally followed by some arguments, e.g.

    ls   -l    /etc/passwd
    |    |     |
    cmd  arg1  arg2

Almost all commands are just programs in the filesystem, e.g. "ls" is actually /bin/ls. A few are built in to the shell. All commands and filenames are case-sensitive. Unless told otherwise, the command will run in the "foreground" - that is, you won't be returned to the shell prompt until it has finished. You can press Ctrl + C to terminate it.

Colour code

| Command | Description |
| --- | --- |
| command [args...] | Command which shows information |
| command [args...] | Command which modifies your current session or system settings, but changes will be lost when you exit your shell or reboot |
| command [args...] | Command which permanently affects the state of your system |

Getting out of trouble

| Command | Description |
| --- | --- |
| ^C (Ctrl-C) | Terminate the current command |
| ^U (Ctrl-U) | Clear to start of line |
| reset, stty sane | Reset terminal settings. If in xterm, try Ctrl+Middle mouse button and select "Do Full Reset" |
| exit, logout | Exit from the shell |
| ESC :q! ENTER | Quit from vi without saving |

Finding documentation

| Command | Description |
| --- | --- |
| man cmd, man 5 cmd, man -a cmd | Show manual page for command "cmd". If a page with the same name exists in multiple sections, you can give the section number, or -a to show pages from all sections. |
| man -k str | Search for string "str" in the manual index |
| man hier | Description of directory structure |
| cd /usr/share/doc; ls, cd /usr/share/examples; ls | Browse system documentation and examples. Note especially /usr/share/doc/en/books/handbook/index.html |
| cd /usr/local/share/doc; ls, cd /usr/local/share/examples | Browse package documentation and examples |
| On the web: www.freebsd.org | Includes handbook, searchable mailing list archives |

System status

Alt-F1 ..
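The distinction drawn under "Command structure" between programs in the filesystem and shell built-ins can be checked from the shell itself. The following is a minimal sketch using the POSIX `command -v` utility; the variable names `ls_path` and `cd_kind` are hypothetical, and the exact path printed for `ls` depends on the system.

```shell
#!/bin/sh
# For an external program, 'command -v' prints its full path.
ls_path=$(command -v ls)

# For a shell built-in, 'command -v' prints just the name.
cd_kind=$(command -v cd)

echo "ls resolves to: $ls_path"
echo "cd resolves to: $cd_kind"
```

A path beginning with `/` indicates a program on disk, while a bare name such as `cd` indicates a command built in to the shell.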

The Linux Command Line

Second Internet Edition
William E. Shotts, Jr.
A LinuxCommand.org Book

Copyright ©2008-2013, William E. Shotts, Jr.

This work is licensed under the Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 United States License. To view a copy of this license, visit the link above or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA.

Linux® is the registered trademark of Linus Torvalds. All other trademarks belong to their respective owners.

This book is part of the LinuxCommand.org project, a site for Linux education and advocacy devoted to helping users of legacy operating systems migrate into the future. You may contact the LinuxCommand.org project at http://linuxcommand.org.

This book is also available in printed form, published by No Starch Press, and may be purchased wherever fine books are sold. No Starch Press also offers this book in electronic formats for most popular e-readers: http://nostarch.com/tlcl.htm

Release History

| Version | Date | Description |
| --- | --- | --- |
| 13.07 | July 6, 2013 | Second Internet Edition. |
| 09.12 | December 14, 2009 | First Internet Edition. |
| 09.11 | November 19, 2009 | Fourth draft with almost all reviewer feedback incorporated and edited through chapter 37. |
| 09.10 | October 3, 2009 | Third draft with revised table formatting, partial application of reviewers' feedback and edited through chapter 18. |
| 09.08 | August 12, 2009 | Second draft incorporating the first editing pass. |
| 09.07 | July 18, 2009 | Completed first draft. |

Table of Contents

Introduction

Troubleshooting

This section contains the following topics:
• Troubleshooting

Troubleshooting

You can troubleshoot a Cisco Crosswork Data Gateway instance directly from the VM. Cisco Crosswork Data Gateway provides logs of errors, requests to the server, and changes made to the VM, and reports any process failures or outages.

To access the Troubleshooting menu, select 5 Troubleshooting from the Main Menu and click OK, as shown in the following figure:

Cisco Crosswork Data Gateway opens the Troubleshooting menu, which provides the following options to troubleshoot your Cisco Crosswork Data Gateway instance:

Note: The following figure shows the Troubleshooting Menu corresponding to the dg-admin user. A few of these options are not available to the dg-oper user. See Table 1.

This section contains the following topics:
• Ping a Host
• Traceroute to a Host
• Check NTP Status
• Check System Uptime
• Run show-tech
• Reboot Cisco Crosswork Data Gateway VM
• Test SSH Connection

Ping a Host

To aid troubleshooting, Cisco Crosswork Data Gateway provides a Ping utility that can be used to check reachability to any IP address.

Step 1 From the Troubleshooting menu, select 1 Ping a Host and click OK.
Step 2 Enter the ping destination.
Step 3 Click OK. Cisco Crosswork Data Gateway displays the result of the ping operation.

Traceroute to a Host

Cisco Crosswork Data Gateway provides the Traceroute to a Host option to help troubleshoot latency issues.

NetScaler’s SSH

Use PuTTY (www.putty.org) to connect to NetScaler’s SSH. Log in with your NetScaler username and password. You will see some commands starting with ‘#’; these are shell commands. To enter NetScaler’s shell mode (FreeBSD), type “shell”; to exit the shell mode, type “exit”.

| CLI Command | Description |
| --- | --- |
| `set cli mode -color ON` | Adds color to CLI |
| `set cli prompt %u@%h-%T` | Adds username, hostname and current time |

NetScaler Information

| CLI Command | Description |
| --- | --- |
| `show version` | NetScaler firmware version |
| `show hostname` | NetScaler hostname |
| `show hardware` | Hardware details including serial number |
| `show interface -summary` | NetScaler network interfaces |
| `stat ssl` | SSL summary |
| `show node` | High Availability (HA) node configuration |
| `# sysctl -a netscaler \| more` | Detailed NetScaler info: description, model, platform, CPUs, etc. |

NetScaler Configuration

| CLI Command | Description |
| --- | --- |
| `show ip` | NetScaler IPs: NSIP, SNIP, VIP, MIP |
| `show feature` | NetScaler features: available and configured |
| `show ns mode` | NetScaler modes: available and configured |
| `show info` | NetScaler firmware, NSIP, config data, features and modes |
| `show license` | License and licensed features |
| `show run \| more` | Running NetScaler configuration |

NetScaler Status

| CLI Command | Description |
| --- | --- |
| `shell top` | NetScaler “Task Manager” |
| `# uptime` | Appliance uptime |

Networking

| CLI Command | Description |
| --- | --- |
| `show route` | Configured routing table |
| `show ip` | Configured IPs: NSIP, SNIP, MIP, VIP |
| `show arp` | ARP table |
| `show vlan` | Configured VLANs |

Networking Tools

| CLI Command | Description |
| --- | --- |
| `# ping` | Ping |
| `# traceroute` | Traceroute |
| `# telnet` | Telnet |

Load Balancing

| CLI Command | Description |
| --- | --- |
| `show lb vserver \| more` | Load balancing virtual servers list and configuration |
| `show lb vserver <vserver_name>` | Load balancing virtual |