Bigartm Documentation Release 1.0 Konstantin Vorontsov

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Open Source Used in Influx1.8 Influx 1.9

Open Source Used In Influx1.8 Influx 1.9 Cisco Systems, Inc. www.cisco.com Cisco has more than 200 offices worldwide. Addresses, phone numbers, and fax numbers are listed on the Cisco website at www.cisco.com/go/offices. Text Part Number: 78EE117C99-1178791953 Open Source Used In Influx1.8 Influx 1.9 1 This document contains licenses and notices for open source software used in this product. With respect to the free/open source software listed in this document, if you have any questions or wish to receive a copy of any source code to which you may be entitled under the applicable free/open source license(s) (such as the GNU Lesser/General Public License), please contact us at [email protected]. In your requests please include the following reference number 78EE117C99-1178791953 Contents 1.1 golang-protobuf-extensions v1.0.1 1.1.1 Available under license 1.2 prometheus-client v0.2.0 1.2.1 Available under license 1.3 gopkg.in-asn1-ber v1.0.0-20170511165959-379148ca0225 1.3.1 Available under license 1.4 influxdata-raft-boltdb v0.0.0-20210323121340-465fcd3eb4d8 1.4.1 Available under license 1.5 fwd v1.1.1 1.5.1 Available under license 1.6 jaeger-client-go v2.23.0+incompatible 1.6.1 Available under license 1.7 golang-genproto v0.0.0-20210122163508-8081c04a3579 1.7.1 Available under license 1.8 influxdata-roaring v0.4.13-0.20180809181101-fc520f41fab6 1.8.1 Available under license 1.9 influxdata-flux v0.113.0 1.9.1 Available under license 1.10 apache-arrow-go-arrow v0.0.0-20200923215132-ac86123a3f01 1.10.1 Available under -

License Agreement

End User License Agreement If you have another valid, signed agreement with Licensor or a Licensor authorized reseller which applies to the specific products or services you are downloading, accessing, or otherwise receiving, that other agreement controls; otherwise, by using, downloading, installing, copying, or accessing Software, Maintenance, or Consulting Services, or by clicking on "I accept" on or adjacent to the screen where these Master Terms may be displayed, you hereby agree to be bound by and accept these Master Terms. These Master Terms also apply to any Maintenance or Consulting Services you later acquire from Licensor relating to the Software. You may place orders under these Master Terms by submitting separate Order Form(s). Capitalized terms used in the Agreement and not otherwise defined herein are defined at https://terms.tibco.com/posts/845635-definitions. 1. Applicability. These Master Terms represent one component of the Agreement for Licensor's products, services, and partner programs and apply to the commercial arrangements between Licensor and Customer (or Partner) listed below. Additional terms referenced below shall apply. a. Products: i. Subscription, Perpetual, or Term license Software ii. Cloud Service (Subject to the Cloud Service Terms found at https://terms.tibco.com/?types%5B%5D=post&feed=recent#cloud-services) iii. Equipment (Subject to the Equipment Terms found at https://terms.tibco.com/?types%5B%5D=post&feed=recent#equipment-terms) b. Services: i. Maintenance (Subject to the Maintenance terms found at https://terms.tibco.com/?types%5B%5D=post&feed=recent#october-maintenance) ii. Consulting Services (Subject to the Consulting terms found at https://terms.tibco.com/?types%5B%5D=post&feed=recent#supplemental-terms) iii. -

Download the Basketballplayer.Ngql File Here

Nebula Graph Database Manual v1.2.1 Min Wu, Amber Zhang, XiaoDan Huang 2021 Vesoft Inc. Table of contents Table of contents 1. Overview 4 1.1 About This Manual 4 1.2 Welcome to Nebula Graph 1.2.1 Documentation 5 1.3 Concepts 10 1.4 Quick Start 18 1.5 Design and Architecture 32 2. Query Language 43 2.1 Reader 43 2.2 Data Types 44 2.3 Functions and Operators 47 2.4 Language Structure 62 2.5 Statement Syntax 76 3. Build Develop and Administration 128 3.1 Build 128 3.2 Installation 134 3.3 Configuration 141 3.4 Account Management Statement 161 3.5 Batch Data Management 173 3.6 Monitoring and Statistics 192 3.7 Development and API 199 4. Data Migration 200 4.1 Nebula Exchange 200 5. Nebula Graph Studio 224 5.1 Change Log 224 5.2 About Nebula Graph Studio 228 5.3 Deploy and connect 232 5.4 Quick start 237 5.5 Operation guide 248 6. Contributions 272 6.1 Contribute to Documentation 272 6.2 Cpp Coding Style 273 6.3 How to Contribute 274 6.4 Pull Request and Commit Message Guidelines 277 7. Appendix 278 7.1 Comparison Between Cypher and nGQL 278 - 2/304 - 2021 Vesoft Inc. Table of contents 7.2 Comparison Between Gremlin and nGQL 283 7.3 Comparison Between SQL and nGQL 298 7.4 Vertex Identifier and Partition 303 - 3/304 - 2021 Vesoft Inc. 1. Overview 1. Overview 1.1 About This Manual This is the Nebula Graph User Manual. -

Mysql NDB Cluster 7.6 Community Release License Information User

Licensing Information User Manual MySQL NDB Cluster 7.6.12 (and later) Table of Contents Licensing Information .......................................................................................................................... 2 Licenses for Third-Party Components .................................................................................................. 9 ANTLR 3 .................................................................................................................................... 9 argparse ................................................................................................................................... 10 Boost Library ............................................................................................................................ 11 Corosync .................................................................................................................................. 11 Cyrus SASL ............................................................................................................................. 12 dtoa.c ....................................................................................................................................... 13 Editline Library (libedit) ............................................................................................................. 13 Facebook Fast Checksum Patch ............................................................................................... 15 Facebook Patches ................................................................................................................... -

Brackets Third Party Page

Brackets Third Party Page (http://www.adobe.com/go/thirdparty) "Cowboy" Ben Alman Copyright © 2010-2012 "Cowboy" Ben Alman Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions: The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software. THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE. The Android Open Source Project Copyright (C) 2008 The Android Open Source Project All rights reserved. Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met: Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer. Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution. -

Project Skeleton for Scientific Software

Computational Photonics Group Department of Electrical and Computer Engineering Technical University of Munich bertha: Project Skeleton for Scientific Software Michael Riesch , Tien Dat Nguyen , and Christian Jirauschek Department of Electrical and Computer Engineering, Technical University of Munich, Arcisstr. 21, 80333 Munich, Germany [email protected] Received: 10 December 2019 / Accepted: 04 March 2020 / Published: 23 March 2020 * Abstract — Science depends heavily on reliable and easy-to-use software packages, such as mathematical libraries or data analysis tools. Developing such packages requires a lot of effort, which is too often avoided due to the lack of funding or recognition. In order to reduce the efforts required to create sustainable software packages, we present a project skeleton that ensures the best software engineering practices from the start of a project, or serves as reference for existing projects. 1 Introduction In a recent essay in Nature [1], a familiar dilemma in science was addressed. On the one hand, science relies heavily on open-source software packages, such as libraries for mathematical operations, implementations of numerical methods, or data analysis tools. As a consequence, those software packages need to work reliably and should be easy to use. On the other hand, scientific software is notoriously underfunded and the required efforts are achieved as side projects or by the scientists working in their spare time. Indeed, a lot of effort has to be invested beyond the work on the actual implementation – which is typically a formidable challenge on its own. This becomes apparent from literature on software engineering in general (such as the influential “Pragmatic Programmer” [2]), and in scientific contexts in particular (e.g., [3–6]). -

Product End User License Agreement

End User License Agreement If you have another valid, signed agreement with Licensor or a Licensor authorized reseller which applies to the specific products or services you are downloading, accessing, or otherwise receiving, that other agreement controls; otherwise, by using, downloading, installing, copying, or accessing Software, Maintenance, or Consulting Services, or by clicking on "I accept" on or adjacent to the screen where these Master Terms may be displayed, you hereby agree to be bound by and accept these Master Terms. These Master Terms also apply to any Maintenance or Consulting Services you later acquire from Licensor relating to the Software. You may place orders under these Master Terms by submitting separate Order Form(s). Capitalized terms used in the Agreement and not otherwise defined herein are defined at https://terms.tibco.com/posts/845635-definitions. 1. Applicability. These Master Terms represent one component of the Agreement for Licensor's products, services, and partner programs and apply to the commercial arrangements between Licensor and Customer (or Partner) listed below. Additional terms referenced below shall apply. a. Products: i. Subscription, Perpetual, or Term license Software ii. Cloud Service (Subject to the Cloud Service terms) iii. Equipment (Subject to the Equipment terms) b. Services: i. Maintenance (Subject to the Maintenance terms) ii. Consulting Services (Subject to the Consulting terms) iii. Education and Training (Subject to the Training Restrictions and Limitations) c. Partners: i. Partners (Subject to the Partner terms) ii. Distribution, Reseller, and VAR Partner (Subject to the Partner Terms and Distributor/Reseller/VAR terms) iii. Developer and Solution/Technology Partner (Subject to the Partner Terms and Developer and Technology Partner terms) d. -

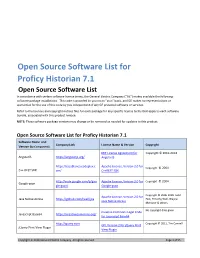

Open Source Software List for Proficy Historian

Open Source Software List for Proficy Historian 7.1 Open Source Software List In accordance with certain software license terms, the General Electric Company (“GE”) makes available the following software package installations. This code is provided to you on an “as is” basis, and GE makes no representations or warranties for the use of this code by you independent of any GE provided software or services. Refer to the licenses and copyright notices files for each package for any specific license terms that apply to each software bundle, associated with this product release. NOTE: These software package versions may change or be removed as needed for updates to this product. Open Source Software List for Proficy Historian 7.1 Software Name and Company Link License Name & Version Copyright Version (by Component) MIT License Agreement for Copyright © 2010-2014 AngularJS https://angularjs.org/ AngularJS https://casablanca.codeplex.c Apache License, Version 2.0 for Copyright © 2004 C++ REST SDK om/ C++REST SDK http://code.google.com/p/goo Apache License, Version 2.0 for Copyright © 2004 Google-gson gle-gson/ Google-gson Copyright © 2006-2009 Todd Apache License, Version 2.0 for Java Native Access https://github.com/twall/jna Fast, Timothy Wall, Wayne Java Native Access Meissner & others No copyright date given Creative Commons Legal Code Javascript Base64 https://creativecommons.org/ for Javascript Base64 Copyright © 2011, Tim Connell http://jquery.com GPL Version 2 for jQuery Print jQuery Print View Plugin View Plugin Copyright © 2018 General -

Biodynamo: a New Platform for Large-Scale Biological Simulation

Lukas Johannes Breitwieser, BSc BioDynaMo: A New Platform for Large-Scale Biological Simulation MASTER’S THESIS to achieve the university degree of Diplom-Ingenieur Master’s degree programme Software Engineering and Management submitted to Graz University of Technology Supervisor Ass.Prof. Dipl.-Ing. Dr.techn. Christian Steger Institute for Technical Informatics Advisor Ass.Prof. Dipl.-Ing. Dr.techn. Christian Steger Dr. Fons Rademakers (CERN) November 23, 2016 AFFIDAVIT I declare that I have authored this thesis independently, that I have not used other than the declared sources/resources, and that I have explicitly indicated all material which has been quoted either literally or by content from the sources used. The text document uploaded to TUGRAZonline is identical to the present master’s thesis. Date Signature This thesis is dedicated to my parents Monika and Fritz Breitwieser Without their endless love, support and encouragement I would not be where I am today. iii Acknowledgment This thesis would not have been possible without the support of a number of people. I want to thank my supervisor Christian Steger for his guidance, support, and input throughout the creation of this document. Furthermore, I would like to express my deep gratitude towards the entire CERN openlab team, Alberto Di Meglio, Marco Manca and Fons Rademakers for giving me the opportunity to work on this intriguing project. Furthermore, I would like to thank them for vivid discussions, their valuable feedback, scientific freedom and possibility to grow. My gratitude is extended to Roman Bauer from Newcastle University (UK) for his helpful insights about computational biology detailed explanations and valuable feedback. -

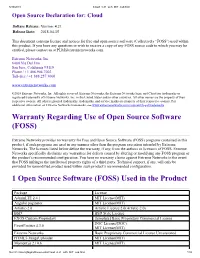

Warranty Regarding Use of Open Source Software (FOSS) 1 Open

5/30/2018 Cloud_4.21_with_MIT_osd.html Open Source Declaration for: Cloud Software Release: Version: 4.21 Release Date: 2018-04-15 This document contains license and notices for free and open source software (collectively “FOSS”) used within this product. If you have any questions or wish to receive a copy of any FOSS source code to which you may be entitled, please contact us at [email protected]. Extreme Networks, Inc. 6480 Via Del Oro San Jose, California 95119 Phone / +1 408.904.7002 Toll-free / +1 888.257.3000 www.extremenetworks.com ©2018 Extreme Networks, Inc. All rights reserved. Extreme Networks, the Extreme Networks logo, and Cloud are trademarks or registered trademarks of Extreme Networks, Inc. in the United States and/or other countries. All other names are the property of their respective owners. All other registered trademarks, trademarks, and service marks are property of their respective owners. For additional information on Extreme Networks trademarks, see www.extremenetworks.com/company/legal/trademarks Warranty Regarding Use of Open Source Software (FOSS) Extreme Networks provides no warranty for Free and Open Source Software (FOSS) programs contained in this product, if such programs are used in any manner other than the program execution intended by Extreme Networks. The licenses listed below define the warranty, if any, from the authors or licensors of FOSS. Extreme Networks specifically disclaims any warranties for defects caused by altering or modifying any FOSS program or the product's recommended configuration. You have no warranty claims against Extreme Networks in the event that FOSS infringes the intellectual property rights of a third party. -

André Filipe Faria Dos Santos

ACKNOWLEDGEMENTS I express my gratitude to my supervisors, Professor Alcino Cunha and Nuno Macedo. They maintained a constant presence and guidance throughout the project, by reviewing my work, helping me stay on the right track, and pointing out possible routes and resources of relevance. Without their support I would have faced many more obstacles and dead ends along the way. I also thank my colleagues and members of HASLab who contributed to an inspirational work environment, reviewed parts of my work, or have otherwise been helpful. Last but not least, I thank my parents and Sofia for their personal support. They always listened to me, even though at times they did not fully understand what I talked about. ABSTRACT Software static analysis is a key component of formal software verification. It consists of inspecting code, using automated tools, to determine a set of relevant properties without executing the program. An example of such properties is the compliance with certain coding standards - sets of rules, subsets of the programming languages, defined to achieve high-quality software, minimizing risks and maintenance costs. Some coding standards, such as MISRA C++ or HIC++, are highly adopted nowadays, in safety-critical systems with high reliability requirements.