Important Concepts Not on the AP Statistics Formula Sheet Part I

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


AP Statistics 2021 Free-Response Questions

2021 AP® Statistics Free-Response Questions. © 2021 College Board. College Board, Advanced Placement, AP, AP Central, and the acorn logo are registered trademarks of College Board. Visit College Board on the web: collegeboard.org. AP Central is the official online home for the AP Program: apcentral.collegeboard.org.

Formulas for AP Statistics

I. Descriptive Statistics

$$\bar{x} = \frac{1}{n}\sum x_i = \frac{\sum x_i}{n} \qquad s_x = \sqrt{\frac{1}{n-1}\sum (x_i - \bar{x})^2} = \sqrt{\frac{\sum (x_i - \bar{x})^2}{n-1}}$$

$$\hat{y} = a + bx \qquad \bar{y} = a + b\bar{x}$$

$$r = \frac{1}{n-1}\sum \left(\frac{x_i - \bar{x}}{s_x}\right)\left(\frac{y_i - \bar{y}}{s_y}\right) \qquad b = r\,\frac{s_y}{s_x}$$

II. Probability and Distributions

$$P(A \cup B) = P(A) + P(B) - P(A \cap B) \qquad P(A \mid B) = \frac{P(A \cap B)}{P(B)}$$

Discrete random variable, $X$: mean $\mu_X = E(X) = \sum x_i P(x_i)$, standard deviation $\sigma_X = \sqrt{\sum (x_i - \mu_X)^2 P(x_i)}$.

If $X$ has a binomial distribution with parameters $n$ and $p$, then $P(X = x) = \binom{n}{x} p^x (1-p)^{n-x}$, where $x = 0, 1, 2, 3, \ldots, n$; mean $\mu_X = np$, standard deviation $\sigma_X = \sqrt{np(1-p)}$.

If $X$ has a geometric distribution with parameter $p$, then $P(X = x) = (1-p)^{x-1} p$, where $x = 1, 2, 3, \ldots$; mean $\mu_X = \frac{1}{p}$, standard deviation $\sigma_X = \frac{\sqrt{1-p}}{p}$.

III. Sampling Distributions and Inferential Statistics

Standardized test statistic: $\dfrac{\text{statistic} - \text{parameter}}{\text{standard error of the statistic}}$

Confidence interval: $\text{statistic} \pm (\text{critical value})(\text{standard error of statistic})$

Chi-square statistic: $\chi^2 = \sum \dfrac{(\text{observed} - \text{expected})^2}{\text{expected}}$

Sampling distributions for proportions (columns: Random Variable, Parameters of Sampling Distribution, Standard Error* of Sample Statistic). For one population, $\hat{p}$: $\mu_{\hat{p}} = p$, $\sigma_{\hat{p}} = \sqrt{\frac{p(1-p)}{n}}$, standard error $\sqrt{\frac{\hat{p}(1-\hat{p})}{n}}$ -
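The descriptive-statistics and distribution formulas in this excerpt can be checked numerically. The following is a minimal Python sketch, not part of the College Board material; the function names are hypothetical and chosen only for illustration.

```python
import math

def mean(xs):
    # x-bar = (sum of x_i) / n
    return sum(xs) / len(xs)

def sample_sd(xs):
    # s_x = sqrt( sum (x_i - x-bar)^2 / (n - 1) )
    m = mean(xs)
    return math.sqrt(sum((x - m) ** 2 for x in xs) / (len(xs) - 1))

def correlation(xs, ys):
    # r = 1/(n-1) * sum of products of standardized x and y values
    mx, my = mean(xs), mean(ys)
    sx, sy = sample_sd(xs), sample_sd(ys)
    n = len(xs)
    return sum(((x - mx) / sx) * ((y - my) / sy) for x, y in zip(xs, ys)) / (n - 1)

def least_squares(xs, ys):
    # slope b = r * s_y / s_x; intercept a from y-bar = a + b * x-bar
    b = correlation(xs, ys) * sample_sd(ys) / sample_sd(xs)
    a = mean(ys) - b * mean(xs)
    return a, b

def binomial_pmf(n, p, x):
    # P(X = x) = C(n, x) * p^x * (1 - p)^(n - x), for x = 0, 1, ..., n
    return math.comb(n, x) * p ** x * (1 - p) ** (n - x)

def geometric_pmf(p, x):
    # P(X = x) = (1 - p)^(x - 1) * p, for x = 1, 2, 3, ...
    return (1 - p) ** (x - 1) * p

if __name__ == "__main__":
    xs, ys = [1, 2, 3, 4], [2, 4, 6, 8]  # made-up data with an exact linear relation
    print(correlation(xs, ys), least_squares(xs, ys))
    print(binomial_pmf(4, 0.5, 2), geometric_pmf(0.5, 3))
```

Computing the slope as `r * s_y / s_x` mirrors the formula sheet directly rather than using the more common sum-of-products form; the two are algebraically equivalent.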

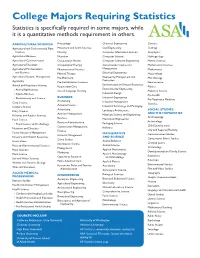

College Majors Requiring Statistics

College Majors Requiring Statistics Statistics is specifically required in some majors, while it is a quantitative methods requirement in others. AGRICULTURAL SCIENCES Kinesiology Chemical Engineering Genetics Agricultural and Environmental Plant Movement and Sport Sciences Civil Engineering Geology Sciences Nursing Computer Information Systems Geophysics Agricultural Business Nutrition Computer Science Hydrogeology Agricultural Communication Occupational Health Computer Software Engineering Marine Sciences Agricultural Education Occupational Therapy Construction Science and Mathematical Sciences Agricultural Mechanization Pharmaceutical Sciences Management Mathematics and Business Physical Therapy Electrical Engineering Meteorology Agricultural Systems Management Pre-Pharmacy Engineering Management and Microbiology Protection Agronomy Pre-Rehabilitation Sciences Neuroscience Environmental and Natural Resources Animal and Veterinary Sciences Respiratory Care Physics Environmental Engineering • Animal Agribusiness Speech Language Hearing Planetary Science Industrial Design • Equine Business Pre-Health • Preveterinary and Science BUSINESS Industrial Engineering Accounting Pre-Veterinary Medicine Crop Science Industrial Management Actuarial Science Statistics Culinary Science Industrial Technology and Packaging Advertising SOCIAL STUDIES Dairy Science Landscape Architecture Aviation Management AND THE HUMANITIES Fisheries and Aquatic Sciences Materials Science and Engineering Business Anthropology Food Science Mechanical Engineering Business -

Graduate School of Education ❖ Department of Learning And

Graduate School of Education, Department of Learning and Instruction, Teacher Education Institute: Math Education. For those who hold a valid NYS Initial Teacher Certificate in Mathematics (grades 7-12) and are seeking the Master of Education degree as well as recommendation for the Professional Teacher Certificate in Mathematics (grades 7-12) and the Professional Extension in Mathematics (grades 5-6). Name: ___________________________________________ Person Number: _____________________________________ III. Professional Certification/Master of Education (Grades 7-12, 5-6 extension). Columns: Date, Credit, Grade. EDUCATION ELECTIVES (6 credits): LAI 514 Adolescent Writing Across Curriculum (formerly Language, Cognition, and Writing), 3 credits. NOTE: If you have taken LAI 414 as a UB undergraduate student, please choose a course from one of the Mathematics Education Electives below in place of LAI 514. LAI 552 Middle Childhood-Adolescent Literacy Methods, 3 credits. MATHEMATICS ELECTIVES (select 12 credits from the following): LAI 544 Teaching of AP Calculus (may also use as Math Ed elective), 3; LAI 545 Problem Solving & Posing in Mathematics (may also use as Math Ed elective), 3; LAI 643 School Math Advanced Standpoint 1 *, 3; LAI 644 School Math Advanced Standpoint 2 *, 3; LAI 645 School Math Advanced Standpoint 3 *, 3; LAI 647 School Math Advanced Standpoint 4 *, 3; LAI xxx Teaching & Learning of AP Statistics (may also use as Math Ed elective), 3; any graduate level course (500 or above) in the Department of Mathematics, 3-6; other electives with advisor approval, 3. MATHEMATICS -

2020-21 Eagle Ridge Academy School of Rhetoric • Course Catalog

2020-21 Eagle Ridge Academy School of Rhetoric • Course Catalog. TABLE OF CONTENTS: Welcome; How to Use This Guide; College Readiness, Admissions, & Your Four-Year High School Course Plan; College Preparation & Credit Opportunities; Additional Course & Scheduling Information. Course & Credit Requirements for Graduation: Credits Required for Graduation; Sample Four-Year Plan. Resources: Course Offerings by Year (2020-2024). Course Guide: Art History; Electives; Fine Arts; Humanities -

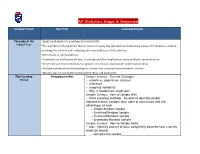

AP Statistics Scope & Sequence

AP Statistics Scope & Sequence. Columns: Grading Period, Unit Title, Learning Targets.

Throughout the School Year: *Apply mathematics to problems in everyday life *Use a problem-solving model that incorporates analyzing information, formulating a plan, determining a solution, justifying the solution and evaluating the reasonableness of the solution *Select tools to solve problems *Communicate mathematical ideas, reasoning and their implications using multiple representations *Create and use representations to organize, record and communicate mathematical ideas *Analyze mathematical relationships to connect and communicate mathematical ideas *Display, explain and justify mathematical ideas and arguments

First Grading Period, Designing Studies. Sample Surveys: General Concepts -- sample vs. population (census) -- inference -- sampling variability -- Why is randomness important? Sample Surveys: How to Sample Well -- Valid sampling methods: be able to describe proper implementation (random digit table or calculator) and the advantages of each -- Simple Random Sample -- Stratified Random Sample -- Clustered Random Sample -- Systematic Random Sample. Sample Surveys: How to Sample Badly -- bias: identify sources of bias; completely describe how a survey might be biased -- convenience sample -- voluntary response sample -- undercoverage -- nonresponse bias -- response bias. Experiments: Studies vs. Experiments -- observational study vs. experiment -- scope and nature of the conclusions that can be made from a study description. Experiments: Terminology -- experimental -

Massachusetts Mathematics Curriculum Framework — 2017

Massachusetts Mathematics Curriculum Framework – 2017, Grades Pre-Kindergarten to 12. This document was prepared by the Massachusetts Department of Elementary and Secondary Education. Board of Elementary and Secondary Education Members: Mr. Paul Sagan, Chair, Cambridge; Mr. James Morton, Vice Chair, Boston; Ms. Katherine Craven, Brookline; Dr. Edward Doherty, Hyde Park; Dr. Roland Fryer, Cambridge; Ms. Margaret McKenna, Boston; Mr. Michael Moriarty, Holyoke; Dr. Pendred Noyce, Boston; Mr. James Peyser, Secretary of Education, Milton; Ms. Mary Ann Stewart, Lexington; Mr. Nathan Moore, Chair, Student Advisory Council, Scituate. Mitchell D. Chester, Ed.D., Commissioner and Secretary to the Board. The Massachusetts Department of Elementary and Secondary Education, an affirmative action employer, is committed to ensuring that all of its programs and facilities are accessible to all members of the public. We do not discriminate on the basis of age, color, disability, national origin, race, religion, sex, or sexual orientation. Inquiries regarding the Department’s compliance with Title IX and other civil rights laws may be directed to the Human Resources Director, 75 Pleasant St., Malden, MA, 02148, 781-338-6105. © 2017 Massachusetts Department of Elementary and Secondary Education. Permission is hereby granted to copy any or all parts of this document for non-commercial educational purposes. Please credit the “Massachusetts Department of Elementary and Secondary Education.” Massachusetts Department of Elementary and Secondary Education, 75 Pleasant Street, Malden, MA 02148-4906. Phone 781-338-3000. TTY: N.E.T. Relay 800-439-2370. www.doe.mass.edu. Dear Colleagues, I am pleased to present to you the Massachusetts Curriculum Framework for Mathematics adopted by the Board of Elementary and Secondary Education in March 2017. -

Department of Teaching & Learning Parent/Student Course Information Advanced Placement Statistics

Department of Teaching & Learning Parent/Student Course Information: Advanced Placement Statistics (MA 3192). One credit, one year, Grades 11-12. Counselors are available to assist parents and students with course selections and career planning. Parents may arrange to meet with the counselor by calling the school's guidance department. COURSE DESCRIPTION: Students study the major concepts and tools for collecting, analyzing and drawing conclusions from data. This course is taught at the college level and the topics meet the requirements set forth in the syllabus of the College Board. Inferential and diagnostic methods are applied to data, and probability is used to describe confidence intervals. PREREQUISITE: Algebra II or Algebra II/Trigonometry. REQUIRED TEXTBOOK: The Practice of Statistics, 5th Edition, Daren S. Starnes, Josh Tabor, Daniel S. Yates, and David S. Moore, Bedford, Freeman and Worth (2015). RECOMMENDED CALCULATOR: TI-83 Plus, TI-84 Plus, TI-84 Plus C or TI-84 Plus CE. Virginia Beach Instructional Objectives, AP Statistics (MA3192). Columns: VBO#, Objective. Exploring Data: Describing Patterns and Departures From Patterns. MA.APSTAT.1.1: The student will be able to compare and contrast methods of data collection including census, sample survey, experiment and observational study and determine an appropriate method of data collection based on purpose, cost, ethics, legalities and other factors. MA.APSTAT.1.2: The student will be able to interpret, compare and contrast graphical displays of distributions of univariate data including dotplot, stemplot, histogram and cumulative frequency plot. MA.APSTAT.1.3: The student will be able to analyze measures of central tendency and variation of distributions of univariate data and compute and interpret measures of position including percentiles, quartiles and z-scores. -

AP Statistics Is a College Level Course Covering Material Traditionally Taught in an Introductory Statistics College Class

AP Statistics is a college-level course covering material traditionally taught in an introductory statistics college class. It will probably be unlike any other math course you have taken because AP Statistics is more like an ENGLISH CLASS with a few math calculations. You will need to be able to work with equations and concepts that you learned in Algebra 1 & 2. You will also need to be able to analyze and interpret problems in context. There is more reading and writing in AP Statistics than in any other math class. This packet will help you prepare and review for the course as well as see a preview of what is coming.

• Complete the packet for review. Round to the hundredths place when needed.
• A TI-84 Plus CE calculator is recommended for this packet and class.
• This packet is due the first day of school.
• This packet will be graded. If you are unsure of how to solve a problem, please use your resources, such as the internet, peers, etc.
• All work must be shown to receive credit. NEVER leave anything blank! Partial credit is given.
• Please make sure the work and answers are legible. If you cannot fit the work in the space provided, I recommend completing the packet on a separate piece of paper.
• Knowing and understanding these concepts is KEY to your success in AP Statistics and to preparing for the AP exam.

See you soon! Ms. Vinagre

Some websites for reference: https://www.khanacademy.org/ http://www.stattrek.com

I. -

AP Statistics

AP Statistics: Is it for me?

What is Advanced Placement (AP)? • AP is college level curriculum, the same as at a college or university, taught at a high school in order to make students college-ready and eliminate the need to take course basics.

College-eligible vs. College-ready
• College-eligible: Is meeting the minimum requirements in order to be accepted by a college or university • Passing TAKS • High School Diploma • Acceptable SAT/ACT scores
• College-ready: Is having the necessary skills desired by a college or university in order to be successful in college • Problem-Solving Skills • Ability to research • Reasoning and Logic • Ability to Interpret Information • Precision and Accuracy

How hard is AP? • Level of intensity: • AP: 4-Year University Level Curriculum • Dual Credit: Junior or Community College Level Curriculum • Pre-AP: High School Level Material taught for high achieving students with a greater depth of understanding, available at Junior High and High Schools • Regular: Material taught on the appropriate grade level for the majority of students

Who Should Take AP? • Counts as a 4th year of Math just like Pre-Calculus, Computer Science and Calculus • Top 25% should take AP over Dual Credit to be ready to go straight to a 4-yr college • Most college major degree plans require at least one Statistics class

Who should take AP Statistics? • Students interested in any of the following: • Engineering: Chemical, Civil, Structural, etc. • Science: Biology, Chemistry, Physics, etc. • Social Sciences: Psychology, Sociology, Anthropology, etc. • Medicine: Doctors, Nurses, Veterinarians, etc. • Healthcare: Hospitals, Pharmacies, Hospice, etc. • Political Studies • Business: Accounting, Finance, Management, etc. -

AP Statistics Midterm Exam

AP Statistics Name:____________________________________ Review for Midterm Date:_____________________________________

Format: Two test grades in the second marking. Four free response questions and twenty multiple-choice questions. The free response and multiple-choice portions are weighted equally. These are some main topics you should know:

Unit 1 Probability • Binomial • Geometric • Discrete Probability Distribution: mean/sd • Combinations • P(A or B), P(A and B) • Normal Distributions (z score) • Invnorm problems • Central Limit Theorem • Mean and proportions problems for samples • CLT

Unit 2 Describing Data • Dot plot/bar chart/scatter plot • Stem Plot: regular and comparative • Box Plot: skeletal and modified. 5 # summary, markers for outliers • Histogram: frequency table and percentile • Calculate mean and standard deviation. Know what happens to each when data is increased by 15%. Know what happens to each when each data point is increased by 15 • 4 measures of center • 4 measures of variability • 2 measures of relative location • Skewed right/skewed left. Examples. Mean vs median

Unit 3 Correlation and Regression • Lin Reg, $r$, $r^2$, $S_E$, residuals, good fit, transformations

Unit 4 Experimental Design • Experiments vs Observations • 5 sampling techniques • Definition of SRS • 3 types of bias • Design an experiment • Key concepts of experimental design

Unit 5, Part 1: Confidence Intervals • Confidence Intervals for means (T score) • Confidence Intervals for proportions • Sample size

Some Vocabulary Terms • Skewed Right • Skewed Left • Bell shaped • Symmetric • Uniform • Normal curve • Mean/SD/Med • Variability • Range/IQR • Variance • Quartile • Gaps/clusters • Observational Study • Experiment • Treatment • Response Var • Census • Voluntary resp. • Random sample • Control group • Placebo • Stratified • Bias • Randomization • Blinding • Double blind • Confounding

Multiple Choice 1. -
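The Unit 2 review item about increasing data by 15% versus increasing each data point by 15 can be explored with a short Python sketch. The data values below are made up for illustration and are not from the review sheet; `summarize` is a hypothetical helper.

```python
import statistics

def summarize(data):
    # Return (mean, sample standard deviation) for a list of numbers.
    return statistics.mean(data), statistics.stdev(data)

scores = [10, 20, 30, 40]             # hypothetical data set

scaled = [x * 1.15 for x in scores]   # every value increased by 15%
shifted = [x + 15 for x in scores]    # every value increased by 15

print("original:", summarize(scores))
print("scaled  :", summarize(scaled))   # mean and SD are both multiplied by 1.15
print("shifted :", summarize(shifted))  # mean rises by 15, SD is unchanged
```

Multiplying by a constant rescales both center and spread, while adding a constant moves only the center, which is the distinction the review item asks about.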

AP Statistics Course?

Who Should Take an AP Statistics Course? AP Stat is an introductory course in statistics. You’ll learn how to collect, organize, analyze, and interpret data. At Pike, AP courses also improve your GPA because they are weighted. So, who needs statistics? Apparently more people than need calculus. More of us will use statistics than will use calculus, trigonometry, or college algebra; that makes AP Statistics your opportunity to learn how to produce and use data, to recognize bad data, and to make decisions with data. Statistics allows you to drive data, rather than being driven by it. You can take this class as long as you have completed Algebra 1 & 2 and Geometry. You can take this class concurrently with Finite Mathematics, Pre-Calculus and with Calculus. Everyone uses statistics, college-bound or not. Those going to college will probably take some class, some time, that uses statistics. Why not be prepared?

AP Statistics. Kathleen Hernandez, Teacher, PIKE HIGH SCHOOL. For more information contact: Kathleen Hernandez, Phone: 317-387-2753.

HOW TO SUCCEED IN AP Statistics: If you don’t do your homework, AP Stat is hard. But it’s not like a regular math class: we don’t have problem after problem in which the answer is “4” or “x + 3.” As a bonus, you don’t have to memorize formulas and we use a graphing calculator every day.

Why take AP Statistics: It is a full-year class for a course that in college is taught in one semester.

COURSE OBJECTIVES: The main objective of the course is to give students an understanding of the main concepts of statistics and to provide them with the skills needed to work with data and draw appropriate -

Advanced Placement Credit Guide Swanson School Of

ADVANCED PLACEMENT CREDIT GUIDE, SWANSON SCHOOL OF ENGINEERING

| EXAM CODE | DESCRIPTION | SCORE | PITT COURSE CREDITS ISSUED | # PITT CREDITS |
|---|---|---|---|---|
| ARH | Art History | 3, 4, 5 | HA&A 0000 | 3 |
| BY | Biology | 4 | BIOSC 0050, 0150 | 4 |
| BY | Biology | 5 | BIOSC 0050, 0150, 0060, 0160 | 8 |
| MAB | Calculus AB | 4, 5 | MATH 0220 | 4 |
| MBC | Calculus BC | 4, 5 | MATH 0220, 0230 | 8 |
| CH | Chemistry | 3, 4 | CHEM 0110 | 4 |
| CH | Chemistry | 5 | CHEM 0110, 0120 | 8 |
| CHIN | Chinese Language | 4, 5 | CHIN 0001, 0002 | 10 |
| GPC | Comparative Government and Politics | 4, 5 | PS 0300 | 3 |
| CSA | Computer Science A | 4, 5 | CS 0401 | 4 |
| CSAB | Computer Science AB | 4, 5 | CS 0401 | 4 |
| EMA | Economics – Macroeconomics | 4, 5 | ECON 0110 | 3 |
| EMI | Economics – Microeconomics | 4, 5 | ECON 0100 | 3 |
| ENGC | English Language and Composition | 4, 5 | ENGLIT 0000 | 3 |
| ELC | English Literature and Composition | 4, 5 | ENGLIT 0000 | 3 |
| EH | European History | 4, 5 | HIST 0100 or HIST 0101 | 3 |
| FRA | French Language | 4 | FR 0055 | 3 |
| FRA | French Language | 5 | FR 0055, 0056 | 6 |
| FRI | French Literature | 4 | FR 0021 | 3 |
| FRI | French Literature | 5 | FR 0021, 0055 | 6 |
| GM | German Language | 4 | GER 1490 | 3 |
| GM | German Language | 5 | GER 1490 | 5 |
| ITAL | Italian Language | 4 | ITAL 0004 | 3 |
| ITAL | Italian Language | 5 | ITAL 0004, and either 0055 or 0061 (subject to faculty interview) | 6 |
| JPN | Japanese Culture and Language | 4 | JPNSE 1901 | 3 |
| JPN | Japanese Culture and Language | 5 | JPNSE 1901 | 5 |
| LTL | Latin – Literature | 4, 5 | LATN 0220 | 3 |
| LTV | Latin – Vergil | 4, 5 | LATN 0220 | 3 |
| MSL | Music – Listening and Literature | 3, 4, 5 | MUSIC 0211 | 3 |
| MST | Music Theory | 3, 4, 5 | MUSIC 0100 | 3 |
| PHCM | Physics C-Mechanics | 5 | PHYS 0174 | 4 |
| PY | Psychology | 4, 5 | PSY 0010 | 3 |
| SPL | Spanish Language | 4, 5 | See Dept. | |