Defending Secrets, Sharing Data: New Locks and Keys for Electronic Information

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

An Independent Look at the Arc of .NET

Past, present and future of C# and .NET Kathleen Dollard Director of Engineering, ROICode [email protected] Coding: 2 Advanced: 2 “In the beginning there was…” Take a look back at over 15 years of .NET and C# evolution and look into the future driven by enormous underlying changes. Those changes are driven by a shift in perception of how .NET fits into the Microsoft ecosystem. You’ll leave understanding how to leverage the .NET Full Framework, .NET Core 1.0, and .NET Standard at the right time. Changes in .NET paralleled changes in the languages: we’ll reflect on how far C# and Visual Basic have come and how they’ve weathered major changes in how we think about code. Looking to the future, you’ll see both the impact of functional approaches and areas where C# probably won’t go. The story would not be complete without cruising through adjacent libraries – the venerable ASP.NET and rock-star Entity Framework that’s recovered so well from its troubled childhood. You’ll leave this talk with a better understanding of the tool you’re using today, and how it’s changing to keep you relevant in a constantly morphing world.

DELL EMC VMAX ALL FLASH STORAGE for MICROSOFT HYPER-V DEPLOYMENT July 2017

DELL EMC VMAX ALL FLASH STORAGE FOR MICROSOFT HYPER-V DEPLOYMENT July 2017 Abstract This white paper examines deployment of the Microsoft Windows Server Hyper-V virtualization solution on Dell EMC VMAX All Flash arrays, with focus on storage efficiency, availability, scalability, and best practices. H16434R This document is not intended for audiences in China, Hong Kong, Taiwan, and Macao. WHITE PAPER Copyright The information in this publication is provided as is. Dell Inc. makes no representations or warranties of any kind with respect to the information in this publication, and specifically disclaims implied warranties of merchantability or fitness for a particular purpose. Use, copying, and distribution of any software described in this publication requires an applicable software license. Copyright © 2017 Dell Inc. or its subsidiaries. All Rights Reserved. Dell, EMC, Dell EMC and other trademarks are trademarks of Dell Inc. or its subsidiaries. Intel, the Intel logo, the Intel Inside logo and Xeon are trademarks of Intel Corporation in the U.S. and/or other countries. Other trademarks may be the property of their respective owners. Published in the USA 07/17 White Paper H16434R. Dell Inc. believes the information in this document is accurate as of its publication date. The information is subject to change without notice. Contents Chapter 1 Executive Summary Summary

Water Balance of a Feedlot

Water Balance of a Feedlot A Thesis Submitted to the College of Graduate Studies In Partial Fulfillment of the Requirements for the Degree of Master of Science in the Department of Agricultural and Bioresource Engineering University of Saskatchewan Saskatoon, SK By Lisa N. White February 2006 © Copyright Lisa Nicole White, 2006. All rights reserved. PERMISSION TO USE In presenting this thesis as partial fulfillment of a Postgraduate degree at the University of Saskatchewan, the author has agreed that the Libraries of the University of Saskatchewan, may make this thesis freely available for inspection. Moreover, the author has agreed that permission for extensive copying of this thesis for scholarly purposes may be granted by the professor or professors who supervised my thesis work recorded herein, or in absence, by the head of the Department or the Dean of the College in which my thesis was done. It is understood that due recognition will be given to the author of this thesis and to the University of Saskatchewan in any use of the material in this thesis. Copying or publication or any other use of my thesis for financial gain without written approval of the author and the University of Saskatchewan is prohibited. Requests for permission to copy or make any other use of the material in this thesis, in whole or in part, should be addressed to: Head of Department Agricultural and Bioresource Engineering University of Saskatchewan 57 Campus Drive, Saskatoon, SK Canada, S7N 5A9 ABSTRACT The overall purpose of this study was to define the water balance of feedlot pens in a Saskatchewan cattle feeding operation for a one year period.

Device Configuration – Surface Pro 4 | Microtower

Device Configuration – Surface Pro 4 | Microtower Technology Department Babylon School District 50 Railroad Avenue Babylon, NY 11702 www.babylon.k12.ny.us (631) 893-7983 TECHNOLOGY DEPARTMENT: DEVICE CONFIGURATION Surface Pro 4 | Microtower Device Configuration: You will need to configure at least two devices in order to synchronize all of your documents/email from your Surface Pro 4 to your microtower attached to your Smartboard/projector/LED (if you teach in multiple rooms, you will need to configure more). Once you have completed this simple three-step process, you can work on either device and they will sync to the cloud and then back down to your other device. Step 1: Adding your Work or School Account a. Go to Start > Settings b. From the Settings screen choose Accounts c. Choose Access Work or School Account from the left side menu d. Choose the Connect button e. Add your school email address (ex. [email protected]) f. If you are prompted, choose Work or School Account g. When prompted, put in your Password h. You have now added your account to Windows 10. Step 2: Add your Email to Outlook a. Open Outlook from your Start menu (Microsoft Office folder) b. This will open the Welcome to Outlook 2016 screen > Click Next c.

The Return of Coppersmith's Attack: Practical Factorization of Widely Used RSA Moduli

Session H1: Crypto Attacks CCS’17, October 30-November 3, 2017, Dallas, TX, USA The Return of Coppersmith’s Attack: Practical Factorization of Widely Used RSA Moduli Matus Nemec (Masaryk University, Ca’ Foscari University of Venice, [email protected]), Marek Sys∗ (Masaryk University, [email protected]), Petr Svenda (Masaryk University, [email protected]), Dusan Klinec (EnigmaBridge, Masaryk University, [email protected]), Vashek Matyas (Masaryk University, [email protected]) ABSTRACT We report on our discovery of an algorithmic flaw in the construction of primes for RSA key generation in a widely-used library of a major manufacturer of cryptographic hardware. The primes generated by the library suffer from a significant loss of entropy. We propose a practical factorization method for various key lengths including 1024 and 2048 bits. Our method requires no additional information except for the value of the public modulus and does not depend on a weak or a faulty random number generator. We devised an extension of Coppersmith’s factorization attack utilizing an alternative form of the primes in question. 1 INTRODUCTION RSA [69] is a widespread algorithm for asymmetric cryptography used for digital signatures and message encryption. RSA security is based on the integer factorization problem, which is believed to be computationally infeasible or at least extremely difficult for sufficiently large security parameters – the size of the private primes and the resulting public modulus N. As of 2017, the most common length of the modulus N is 2048 bits, with shorter key lengths such as 1024 bits still used in practice (although not recommended anymore) and longer lengths like 4096 bits becoming increasingly
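The excerpt's point that RSA security rests on the difficulty of factoring the public modulus N can be illustrated with a toy sketch. The code below is not the Coppersmith-based method the paper describes; it uses plain trial division, which only works because the primes here are tiny. The function names and the textbook values p = 61, q = 53, e = 17 are assumptions chosen for illustration.

```python
from math import isqrt


def factor_semiprime(n: int) -> tuple[int, int]:
    """Factor n = p * q by trial division (feasible only for toy sizes)."""
    for candidate in range(2, isqrt(n) + 1):
        if n % candidate == 0:
            return candidate, n // candidate
    raise ValueError("n has no non-trivial factors")


def recover_private_exponent(n: int, e: int) -> int:
    """Derive the private exponent d from the public key once n is factored."""
    p, q = factor_semiprime(n)
    phi = (p - 1) * (q - 1)
    # Modular inverse of e modulo phi (three-argument pow, Python 3.8+).
    return pow(e, -1, phi)


# Toy key: real moduli are 1024 bits or more, far beyond trial division.
p, q, e = 61, 53, 17
n = p * q

message = 65
ciphertext = pow(message, e, n)        # encrypt with the public key (n, e)

d = recover_private_exponent(n, e)     # attacker only needs n and e
recovered = pow(ciphertext, d, n)      # decrypt with the recovered key
```

Anyone who can factor N can rebuild the private key from public values alone, which is why a structural weakness in how the primes are generated is so damaging.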

Surface Pro 3 Fact Sheet May 2014

Surface Pro 3 Fact sheet May 2014 Meet Surface Pro 3, the tablet that can replace your laptop. Wrapped in magnesium and loaded with a 12-inch ClearType Full HD display, 4th-generation Intel® Core™ processor and up to 8 GB of RAM in a sleek frame (just 0.36 inches thin and 1.76 pounds) with up to nine hours of Web-browsing battery life, Surface Pro 3 has all the power, performance and mobility of a laptop in an incredibly lightweight, versatile form. The thinnest and lightest member of the Surface Pro family, Surface Pro 3 features a large and beautiful 2160x1440 2K color-calibrated screen and 3:2 aspect ratio with multitouch input, so you can swipe, pinch and drag whenever you need. The improved optional Surface Pro Type Cover and more adjustable, continuous kickstand will transform your device experience from tablet to laptop in a snap. Surface Pro Type Cover features a double-fold hinge enabling you to magnetically lock it to the display’s lower bezel, keeping everything steady so you can work just as comfortably on your lap as you do at your desk. With a full-size USB 3.0 port, microSD card reader and Mini DisplayPort, you can quickly transfer files and easily connect peripherals like external displays. And with the optional Surface Ethernet Adapter, you can instantly connect your Surface to a wired Ethernet network with transfer rates of up to 1 Gbps. The custom Surface Pen, crafted with a solid, polished aluminum finish, was designed to look and feel like an actual fountain pen to give you a natural writing experience.

The Industrial Role of Scrap Copper at Jamestown Carter C

THE JOURNAL OF THE JAMESTOWN REDISCOVERY CENTER VOL. 2 JAN. 2004 Carter C. Hudgins “Old World Industries and New World Hope: The Industrial Role of Scrap Copper at Jamestown” Available from https://www.historicjamestowne.org Old World Industries and New World Hope: The Industrial Role of Scrap Copper at Jamestown Carter C. Hudgins 1. Introduction Jamestown scholars have long believed that during the first ten years of the Virginia Colony (1607-1617), settlers attempted to elude starvation by trading European copper with local Powhatans in exchange for foodstuffs. Contemporary reports, such as those written by John Smith, document this bartering, and recent archaeological discoveries of over 7,000 pieces of scrap sheet copper within James Fort seem to substantiate the existence of this commerce (Figure 1). Although much of this waste metal was undoubtedly associated with the exchange of goods between the English and Powhatans, this study suggests that significant amounts of Jamestown’s scrap copper were also related to contemporary English copper industries and an anticipation of metallurgical resources in the New World. During the late 16th century, the Society of Mines Royal and the Society of Minerals and Battery Works were formed to mine English metals, produce copper-alloy products, and Figure 1. A sample of scrap waste selected from copper found at Jamestown. 2. Jamestown’s Scrap Copper Examinations of the scrap copper found in Jamestown Rediscovery’s five largest Fort-Period features (Pit 1, Pit 3, Structure 165, SE Bulwark Trench, and Feature JR 731) reveal that a significant percentage of the copper bears manufacturing evidence that can be credited to coppersmithing industries in England. Several examples of copper that possess the markings of English industry are shown in Figures 2, 3 and 4, and relate to the making of copper wire, buckles and kettles respectively.

T-Mobile and MetroPCS Continue to Expand Consumer Choice, Will Offer New Windows Phone 8.1 on Nokia’s Upcoming Lumia 635

T-Mobile and MetroPCS Continue to Expand Consumer Choice, Will Offer New Windows Phone 8.1 on Nokia’s Upcoming Lumia 635 BELLEVUE, Wash. – April 2, 2014 – Immediately on the heels of Microsoft’s Windows Phone 8.1 unveiling today, T-Mobile US, Inc. (NYSE: TMUS) has announced the company will offer up its Redmond neighbor’s latest mobile OS as part of its ongoing commitment to deliver greater freedom and choice for American wireless consumers – starting with Nokia’s new Lumia 635 coming this summer. The Lumia 635 will be the first device sold in the United States powered out of the box by the very latest Windows Phone 8.1 operating system, introduced earlier today at Microsoft’s 2014 Build developers conference in San Francisco. T-Mobile US today also announced that, come summer, T-Mobile and MetroPCS will be the best places to get the very first smartphone with the new Windows Phone OS for a low upfront cost and with zero service contract, zero overages (while on its wicked-fast network), zero hidden device costs, and zero upgrade wait. And only T-Mobile and MetroPCS customers can experience the next-gen Lumia 635 on America’s fastest nationwide 4G LTE network. “The Un-carrier’s all about removing crazy restrictions and delivering total wireless freedom and flexibility,” said Jason Young, senior vice president of Marketing at T-Mobile. “With Windows Phone, we can offer customers another great choice in mobile platforms. And we’re excited to bring to both T-Mobile and MetroPCS customers the combination of next-gen software, great features and fresh design that Nokia’s latest Windows Phone has to offer.” The Lumia 635 will build on all the qualities and benefits that made its predecessor – the Lumia 521 – so popular among American wireless customers.

Coppersmith Media

Contact: Todd Messelt Media Line Communications 612.222.8585 [email protected] Twin Cities-based CopperSmith® emerges as leading e-retailer of upscale home products, including in-house brand of handmade, custom-order, solid copper range hoods, sinks, bathtubs and more Specialty manufacturer and e-retailer of high-end housewares credits steady growth to a direct-to-consumer business model, offering live product support, free design assistance, and drop-shipping services to homeowners, interior designers and home builders. GOLDEN VALLEY, MINN. – In 2009, Ryan Grambart dove head-first into launching CopperSmith® (www.worldcoppersmith.com), galvanized by the allure of a semi-precious metal that has attracted artists, designers and architects for more than 10,000 years. Since its humble beginnings as a purveyor of copper gutter systems for Twin Cities-area homes, Grambart’s fledgling company has emerged as a leading online retailer and specialty in-house manufacturer of upscale, premium-quality products for discerning homeowners, while offering wholesale B2B programs and drop-shipping services to contractors and interior designers seeking made-to-order range hoods, kitchen sinks, farmhouse sinks, bathroom sinks, tables, light fixtures, mirrors, towel bars and rings, cabinet knobs and pulls, flooring tiles and registers, cookware and more. “We got our start manufacturing, installing and retailing a small line of specialty products we believed in,” says Grambart. “We’re not just another e-commerce company posting a zillion SKUs hoping for some quick and easy sales. We have a thriving installation business that serves local building contractors and provides front-line market research and hands-on working knowledge of the products we sell nationally.” Direct-to-Consumer Approach CopperSmith® was founded on the principle that our quality of life is profoundly affected by the products we surround ourselves with.

Secure Communications One Time Pad Cipher

Cipher Machines & Cryptology © 2010 – D. Rijmenants http://users.telenet.be/d.rijmenants THE COMPLETE GUIDE TO SECURE COMMUNICATIONS WITH THE ONE TIME PAD CIPHER DIRK RIJMENANTS Abstract: This paper provides standard instructions on how to protect short text messages with one-time pad encryption. The encryption is performed with nothing more than a pencil and paper, but provides absolute message security. If properly applied, it is mathematically impossible for any eavesdropper to decrypt or break the message without the proper key. Keywords: cryptography, one-time pad, encryption, message security, conversion table, steganography, codebook, message verification code, covert communications, Jargon code, Morse cut numbers. version 012-2011 Contents I. Introduction II. The One-time Pad III. Message Preparation IV. Encryption V. Decryption VI. The Optional Codebook VII. Security Rules and Advice VIII. Appendices I. Introduction One-time pad encryption is a basic yet solid method to protect short text messages. This paper explains how to use one-time pads, how to set up secure one-time pad communications and how to deal with its various security issues. It is easy to learn to work with one-time pads: the system is transparent, and you do not need special equipment or any knowledge about cryptographic techniques or math. If properly used, the system provides truly unbreakable encryption and it will be impossible for any eavesdropper to decrypt or break a one-time pad encrypted message by any type of cryptanalytic attack without the proper key, even with infinite computational power (see section VII.b). However, to ensure the security of the message, it is of paramount importance to carefully read and strictly follow the security rules and advice (see section VII).
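A pencil-and-paper one-time pad of the kind the excerpt describes can be sketched in a few lines. The excerpt does not give the procedure itself, so everything below is an assumption made for illustration: the hypothetical A=01 … Z=26 conversion table, the choice to subtract the key digit by digit modulo 10 when encrypting (and add it back when decrypting), and all function names.

```python
import secrets


def text_to_digits(text: str) -> list[int]:
    """Hypothetical conversion table: A=01 ... Z=26, other characters skipped."""
    digits = []
    for ch in text.upper():
        if "A" <= ch <= "Z":
            value = ord(ch) - ord("A") + 1
            digits += [value // 10, value % 10]
    return digits


def generate_pad(length: int) -> list[int]:
    """Draw key digits from the OS random source; a pad must never be reused."""
    return [secrets.randbelow(10) for _ in range(length)]


def encrypt(plain_digits: list[int], pad: list[int]) -> list[int]:
    """Subtract each key digit from the plaintext digit, modulo 10 (no borrow)."""
    if len(pad) < len(plain_digits):
        raise ValueError("pad must be at least as long as the message")
    return [(p - k) % 10 for p, k in zip(plain_digits, pad)]


def decrypt(cipher_digits: list[int], pad: list[int]) -> list[int]:
    """Add each key digit back to the ciphertext digit, modulo 10 (no carry)."""
    if len(pad) < len(cipher_digits):
        raise ValueError("pad must be at least as long as the message")
    return [(c + k) % 10 for c, k in zip(cipher_digits, pad)]


plain = text_to_digits("HI")
pad = generate_pad(len(plain))
cipher = encrypt(plain, pad)
restored = decrypt(cipher, pad)
```

Because every key digit is uniformly random and used once, each ciphertext digit is equally likely to correspond to any plaintext digit, which is the basis of the "unbreakable" claim. The guarantee disappears if a pad is reused or is shorter than the message.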

Motion to Approve the Purchase of a Microsoft Surface Pro 4 Tablet for the Delano

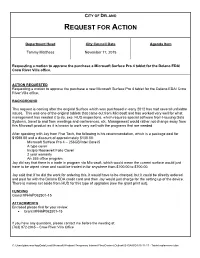

CITY OF DELANO REQUEST FOR ACTION Department Head: Tammy Matthees. City Council Date: November 17, 2015. Agenda Item: Requesting a motion to approve the purchase of a Microsoft Surface Pro 4 tablet for the Delano EDA/Crow River Villa office. ACTION REQUESTED Requesting a motion to approve the purchase of a new Microsoft Surface Pro 4 tablet for the Delano EDA/Crow River Villa office. BACKGROUND This request is coming after the original Surface, which was purchased in early 2012, has had several unfixable issues. This was one of the original tablets that came out from Microsoft and has worked very well for what management has needed it to do, e.g. HUD inspections (which require special software from Housing Data Systems), travel to and from meetings and conferences, etc. Management would rather not change away from this Microsoft product as it is known to work very well with the programs that are needed. After speaking with Jay from Five Tech, the following is his recommendation, which is a package deal for $1598.00 and a discount of approximately $130.00: a Microsoft Surface Pro 4 (256GB/Intel Core i5); a Type Cover; an Incipio Roosevelt Folio Cover; a 2-year warranty; an Office 365 program. Jay did say that there is a trade-in program via Microsoft, which would mean the current Surface would just have to be wiped clean and could be traded in for anywhere from $100.00 to $700.00. Jay said that if he did the work for ordering this, it would have to be charged, but it could be directly ordered and paid for with the Delano EDA credit card and then Jay would just charge for the setting up of the device.

[Mailto:[email protected]] Sent: Friday, July 28, 2017 2:56 PM Subject: Class of 2021 - Technology Information

From: White, Gordon [mailto:[email protected]] Sent: Friday, July 28, 2017 2:56 PM Subject: Class of 2021 - Technology Information Hello Class of 2021, We are excited to have you arrive for orientation and introduce you to our technology environment. Until then, here are a few important items. 1. If you have not logged into both COMET and the Larner College of Medicine (LCOM) email system, please look to previous emails for instructions and do that first. If you do not have those, please reply to this email and let me know. 2. Your @med.uvm.edu email address is your professional email address within academic medicine. Please do not redirect mail from this address to your personal account. It is against policy, and will disrupt the integrated communication between you, the administration and the faculty. If you have redirected the @med.uvm.edu address to a personal account, please reverse these changes. If you would like to see the official policy about redirecting LCOM mail, you can find it in the computer use policy: http://www.med.uvm.edu/studenthandbook/94000 3. Many of you have asked how to configure your personal computer mail clients. We ask that you only use Outlook Web Access when connecting to the mail server from a non-school computer. Links to Outlook Web access are available from COMET. https://comet.med.uvm.edu 4. If you would like to configure your mobile device for email and calendar access, please download the Outlook for Mobile app from the App Store or the Play Store.