A Survey of Process Migration Mechanisms

Recommended publications

The Design of the EMPS Multiprocessor Executive for Distributed Computing

The design of the EMPS multiprocessor executive for distributed computing. Citation for published version (APA): van Dijk, G. J. W. (1993). The design of the EMPS multiprocessor executive for distributed computing. Technische Universiteit Eindhoven. https://doi.org/10.6100/IR393185. Document status and date: Published 01/01/1993. Document version: Publisher’s PDF, also known as Version of Record (includes final page, issue and volume numbers).

Sprite File System There Are Three Important Aspects of the Sprite File System: the Scale of the System, Location-Transparency, and Distributed State

Naming, State Management, and User-Level Extensions in the Sprite Distributed File System. Copyright 1990 Brent Ballinger Welch.

CHAPTER 1: Introduction

This dissertation concerns network computing environments. Advances in network and microprocessor technology have caused a shift from stand-alone timesharing systems to networks of powerful personal computers. Operating systems designed for stand-alone timesharing hosts do not adapt easily to a distributed environment. Resources like disk storage, printers, and tape drives are not concentrated at a single point. Instead, they are scattered around the network under the control of different hosts. New operating system mechanisms are needed to handle this sort of distribution so that users and application programs need not worry about the distributed nature of the underlying system.

This dissertation explores the approach of centering a distributed computing environment around a shared network file system. The file system is chosen as a starting point because it is a heavily used service in stand-alone systems, and the read/write paradigm of the file system is a familiar one that can be applied to many system resources. The file system described in this dissertation provides a distributed name space for system resources, and it provides remote access facilities so all resources are available throughout the network. Resources accessible via the file system include disk storage, other types of peripheral devices, and user-implemented service applications. The resulting system is one where resources are named and accessed via the shared file system, and the underlying distribution of the system among a collection of hosts is not important to users.

Distributed Virtual Machines: a System Architecture for Network Computing

Distributed Virtual Machines: A System Architecture for Network Computing. Emin Gün Sirer, Robert Grimm, Arthur J. Gregory, Nathan Anderson, Brian N. Bershad. {egs,rgrimm,artjg,nra,bershad}@cs.washington.edu, http://kimera.cs.washington.edu. Dept. of Computer Science & Engineering, University of Washington, Seattle, WA 98195-2350.

Abstract. Modern virtual machines, such as Java and Inferno, are emerging as network computing platforms. While these virtual machines provide higher-level abstractions and more sophisticated services than their predecessors from twenty years ago, their architecture has essentially remained unchanged. State of the art virtual machines are still monolithic, that is, they are comprised of closely-coupled service components, which are thus replicated over all computers in an organization. This crude replication of services forms one of the weakest points in today’s networked systems, as it creates widely acknowledged and well-publicized problems of security, manageability and performance. We have designed and implemented a new system architecture for network computing based on distributed virtual machines. In our system, virtual machine services that perform rule checking and code transformation are factored out of clients and are located in enterprise-wide network servers. The services operate by intercepting application code and modifying it on the fly to provide additional service functionality. This architecture reduces client resource demands and the size of the trusted computing base, establishes physical isolation between virtual machine services and creates a single point of administration. We demonstrate that such a distributed virtual machine architecture can provide substantially better integrity and manageability than a monolithic architecture, scales well with increasing numbers of clients, and does not entail high overhead.

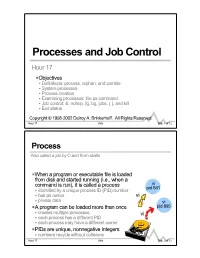

Processes and Job Control

Processes and Job Control (Hour 17, Unix, 12 slides). Copyright © 1998-2002 Delroy A. Brinkerhoff. All Rights Reserved.

Objectives:
- Definitions: process, orphan, and zombie
- System processes
- Process creation
- Examining processes: the ps command
- Job control: &, nohup, fg, bg, jobs, ( ), and kill
- Exit status

Process (also called a job by the C and Korn shells):
- When a program or executable file is loaded from disk and started running (i.e., when a command is run), it is called a process. A process is identified by a unique process ID (PID) number, has an owner, and has private data.
- A program can be loaded more than once, which creates multiple processes. Each process has a different PID and may have a different owner.
- PIDs are unique, nonnegative integers; numbers recycle without collisions.
- (Diagram: two running instances of vi, one with PID 641 and one with PID 895.)

System Processes (processes created during system boot):
- 0: system kernel. It is "hand crafted" at boot, called swap in older versions (swaps the CPU between processes) and sched in newer versions (schedules processes), and it creates process 1.
- 1: init (the parent of all processes except process 0). It is the general process spawner, begins building the locale-related environment, and sets or changes the system run-level.
- 2: page daemon (pageout on most systems).
- 3: file system flusher (fsflush).

Process Life Cycle (overview of creating new processes):
- (Diagram: init, PID 1, forks a copy of itself with PID 467, which then execs getty, still PID 467.)
- fork creates two identical processes (parent and child).
- exec replaces the process’s instructions
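The fork-then-exec life cycle outlined in these slides can be sketched in Python, whose `os` module wraps the same POSIX calls. This is a minimal illustration and not taken from the slides: the function name `spawn_and_wait`, the choice of `/bin/sh` as the program to run, and the exit code 127 for a failed exec are assumptions made for the example.

```python
import os


def spawn_and_wait(argv):
    """Fork a child, exec argv[0] in it, and return the child's exit status."""
    pid = os.fork()
    if pid == 0:
        # Child: replace this process image with the requested program.
        try:
            os.execv(argv[0], argv)
        except OSError:
            # exec failed (for example, no such file); leave without
            # running any of the parent's cleanup handlers.
            os._exit(127)
    # Parent: block until the child terminates, then decode its status.
    _, status = os.waitpid(pid, 0)
    if os.WIFEXITED(status):
        return os.WEXITSTATUS(status)
    # Otherwise the child was killed by a signal; report it as a negative
    # number (a convention chosen for this sketch).
    return -os.WTERMSIG(status)


if __name__ == "__main__":
    # The child becomes /bin/sh, which exits with status 3.
    print(spawn_and_wait(["/bin/sh", "-c", "exit 3"]))
```

The child keeps the PID it received from fork even after exec replaces its program, which matches the init-to-getty diagram in the slides where both carry PID 467.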

Lecture 4: September 13 4.1 Process State

CMPSCI 377 Operating Systems, Fall 2012. Lecture 4: September 13. Lecturer: Prashant Shenoy. TA: Sean Barker & Demetre Lavigne.

4.1 Process State

4.1.1 Process

A process is a dynamic instance of a computer program that is being sequentially executed by a computer system that has the ability to run several computer programs concurrently. A computer program itself is just a passive collection of instructions, while a process is the actual execution of those instructions. Several processes may be associated with the same program; for example, opening up several windows of the same program typically means more than one process is being executed. The state of a process consists of: the code for the running program (text segment), its static data, its heap and the heap pointer (HP) where dynamic data is kept, the program counter (PC), the stack and the stack pointer (SP), the values of the CPU registers, the set of OS resources in use (list of open files etc.), and the current process execution state (new, ready, running etc.). Some state may be stored in registers, such as the program counter.

4.1.2 Process Execution States

Processes go through various process states which determine how the process is handled by the operating system kernel. The specific implementations of these states vary in different operating systems, and the names of these states are not standardised, but the general high-level functionality is the same. When a process is first started/created, it is in the new state. It needs to wait for the process scheduler (of the operating system) to set its status to "ready" and load it into main memory from a secondary storage device (such as a hard disk or a CD-ROM).
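The execution states described in these notes can be modelled as a small state machine. The sketch below is illustrative only: the excerpt names just new, ready, and running, so the waiting and terminated states and the transition table follow the common textbook five-state model and are assumptions, as are the names `State`, `TRANSITIONS`, and `advance`.

```python
from enum import Enum


class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"        # assumed: not named in the excerpt
    TERMINATED = "terminated"  # assumed: not named in the excerpt


# Allowed transitions in the assumed five-state model.
TRANSITIONS = {
    State.NEW: {State.READY},                 # admitted by the scheduler
    State.READY: {State.RUNNING},             # dispatched onto a CPU
    State.RUNNING: {State.READY,              # preempted
                    State.WAITING,            # blocks on I/O or an event
                    State.TERMINATED},        # exits
    State.WAITING: {State.READY},             # the awaited event completed
    State.TERMINATED: set(),                  # no way back
}


def advance(current, target):
    """Return target if the move is legal in the model, else raise ValueError."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"illegal transition: {current.value} -> {target.value}")
    return target


if __name__ == "__main__":
    state = State.NEW
    for nxt in (State.READY, State.RUNNING, State.WAITING, State.READY):
        state = advance(state, nxt)
        print(state.value)
```

Keeping the legal moves in one table makes the rule in the notes explicit: a new process cannot run until the scheduler has first moved it to ready.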

Workstation Operating Systems Mac OS 9

15-410 “Now that we've covered the 1970's...” Plan 9. Nov. 25, 2019. Dave Eckhardt. (L11_P9, 15-412, F'19)

Overview:
- “The land that time forgot”
- What style of computing? The death of timesharing; the “Unix workstation problem”
- Design principles
- Name spaces
- File servers
- The TCP file system...
- Runtime environment

The Land That Time Forgot. The “multi-core revolution” already happened once:
- 1982: VAX-11/782 (dual-core)
- 1984: Sequent Balance 8000 (12 x NS32032)
- 1985: Encore MultiMax (20 x NS32032)
- 1990: Omron Luna88k workstation (4 x Motorola 88100)
- 1991: KSR1 (1088 x KSR1)
- 1991: “MCS” paper on multi-processor locking algorithms
- 1995: BeBox workstation (2 x PowerPC 603)

Wow! Why was 1995-2004 ruled by single-core machines? What operating systems did those multi-core machines run?

Why was 1995-2004 ruled by single-core machines? In 1995 Intel + Microsoft made it feasible to buy a fast processor that fit on one chip, a fast I/O bus, multiple megabytes of RAM, and an OS with memory protection. Everybody could afford a “workstation”, so everybody bought one. Massive economies of scale existed in the single-processor “Wintel” universe.

Computer Demos—What Makes Them Tick?

AALTO UNIVERSITY, School of Science and Technology, Faculty of Information and Natural Sciences, Department of Media Technology. Markku Reunanen: Computer Demos—What Makes Them Tick? Licentiate Thesis. Helsinki, April 23, 2010. Supervisor: Professor Tapio Takala.

ABSTRACT OF LICENTIATE THESIS

- Author: Markku Reunanen
- Date: April 23, 2010
- Pages: 134
- Title of thesis: Computer Demos—What Makes Them Tick?
- Professorship: Contents Production
- Professorship code: T013Z
- Supervisor: Professor Tapio Takala
- Instructor: -

This licentiate thesis deals with a worldwide community of hobbyists called the demoscene. The activities of the community in question revolve around real-time multimedia demonstrations known as demos. The historical frame of the study spans from the late 1970s, and the advent of affordable home computers, up to 2009. So far little academic research has been conducted on the topic and the number of other publications is almost equally low. The work done by other researchers is discussed and additional connections are made to other related fields of study such as computer history and media research. The material of the study consists principally of demos, contemporary disk magazines and online sources such as community websites and archives. A general overview of the demoscene and its practices is provided to the reader as a foundation for understanding the more in-depth topics. One chapter is dedicated to the analysis of the artifacts produced by the community and another to the discussion of the computer hardware in relation to the creative aspirations of the community members.

Distributed Operating Systems

Distributed Operating Systems. ANDREW S. TANENBAUM and ROBBERT VAN RENESSE, Department of Mathematics and Computer Science, Vrije Universiteit, Amsterdam, The Netherlands.

Distributed operating systems have many aspects in common with centralized ones, but they also differ in certain ways. This paper is intended as an introduction to distributed operating systems, and especially to current university research about them. After a discussion of what constitutes a distributed operating system and how it is distinguished from a computer network, various key design issues are discussed. Then several examples of current research projects are examined in some detail, namely, the Cambridge Distributed Computing System, Amoeba, V, and Eden.

Categories and Subject Descriptors: C.2.4 [Computer-Communications Networks]: Distributed Systems - network operating system; D.4.3 [Operating Systems]: File Systems Management - distributed file systems; D.4.5 [Operating Systems]: Reliability - fault tolerance; D.4.6 [Operating Systems]: Security and Protection - access controls; D.4.7 [Operating Systems]: Organization and Design - distributed systems

General Terms: Algorithms, Design, Experimentation, Reliability, Security

Additional Key Words and Phrases: File server

INTRODUCTION

Everyone agrees that distributed systems are going to be very important in the future. Unfortunately, not everyone agrees on what they mean by the term “distributed system.” In this paper we present a viewpoint widely held within academia about what is and is not a distributed system, we

[...] more convenient to use than the bare machine. Examples of well-known centralized (i.e., not distributed) operating systems are CP/M, MS-DOS, and UNIX. A distributed operating system is one that looks to its users like an ordinary centralized operating system but runs on multiple, independent central processing units (CPUs).

Open Source Democracy: How Online Communication Is Changing Offline Politics

Political structures need to change. They will emerge from people acting and communicating in the present, not talking about a fictional future.

Open Source Democracy: How online communication is changing offline politics. Douglas Rushkoff.

About Demos. Demos is a greenhouse for new ideas which can improve the quality of our lives. As an independent think tank, we aim to create an open resource of knowledge and learning that operates beyond traditional party politics. We connect researchers, thinkers and practitioners to an international network of people changing politics. Our ideas regularly influence government policy, but we also work with companies, NGOs, colleges and professional bodies. Demos knowledge is organised around five themes, which combine to create new perspectives. The themes are democracy, learning, enterprise, quality of life and global change. But we also understand that thinking by itself is not enough. Demos has helped to initiate a number of practical projects which are delivering real social benefit through the redesign of public services. For Demos, the process is as important as the final product. We bring together people from a wide range of backgrounds to cross-fertilise ideas and experience. By working with Demos, our partners help us to develop sharper insight into the way ideas shape society. www.demos.co.uk

© Douglas Rushkoff 2003. Open access. Some rights reserved. As the publisher of this work, Demos has an open access policy which enables anyone to access our content electronically without charge.

System Calls & Signals

CS345 OPERATING SYSTEMS. System calls & Signals. Panagiotis Papadopoulos.

SYSTEM CALL

When a program invokes a system call, it is interrupted and the system switches to Kernel space. The Kernel then saves the process execution context (so that it can resume the program later) and determines what is being requested. The Kernel carefully checks that the request is valid and that the process invoking the system call has enough privilege. For instance, some system calls can only be called by a user with superuser privilege (often referred to as root). If everything is good, the Kernel processes the request in Kernel Mode and can access the device drivers in charge of controlling the hardware (e.g. reading a character typed on the keyboard). The Kernel can read and modify the data of the calling process, as it has access to memory in User Space (e.g. it can copy the keyboard character into a buffer that the calling process has access to). When the Kernel is done processing the request, it restores the process execution context that was saved when the system call was invoked, and control returns to the calling program, which continues executing.

SYSTEM CALLS: FORK()

THE FORK() SYSTEM CALL (1/2)

• A process calling fork() spawns a child process.
• The child is an almost identical clone of the parent: Program Text (segment .text), Stack (ss), PCB (e.g. registers), Data (segment .data).

#include <sys/types.h> #include <unistd.h> pid_t fork(void);

THE FORK() SYSTEM CALL (2/2)

• fork() is one of those system calls that is called once but returns twice! Consider a piece of program
• After fork() both the parent and the child are ..
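The "called once, returns twice" behaviour can be observed directly. The following is a minimal sketch in Python, whose `os.fork` wraps the same system call as the C prototype above; the helper name `fork_views` and the use of a pipe to carry the child's view back to the parent are assumptions made for this illustration, not part of the slides.

```python
import os


def fork_views():
    """Return (value fork() gave the parent,
               value fork() gave the child,
               the child's own PID as reported by the child)."""
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child: fork() returned 0 here. Send that value and our real PID
        # back to the parent through the pipe, then exit immediately.
        os.close(read_fd)
        os.write(write_fd, f"{pid} {os.getpid()}".encode())
        os.close(write_fd)
        os._exit(0)
    # Parent: fork() returned the child's PID here.
    os.close(write_fd)
    with os.fdopen(read_fd) as pipe:
        child_saw, child_pid = map(int, pipe.read().split())
    os.waitpid(pid, 0)  # reap the child so it does not linger as a zombie
    return pid, child_saw, child_pid


if __name__ == "__main__":
    parent_saw, child_saw, child_pid = fork_views()
    print("parent saw:", parent_saw)
    print("child saw:", child_saw, "and its own PID is", child_pid)
```

The single call yields two different return values, one per process: the child sees 0, while the parent sees a positive number equal to the PID the child reports for itself.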

Troubleshoot Zombie/Defunct Processes in UC Servers

Troubleshoot Zombie/Defunct Processes in UC Servers

Contents: Introduction, Prerequisites, Requirements, Components Used, Background Information, Check Zombies using UCOS Admin CLI, Troubleshoot/Clear the Zombies, Manually Restart the Appropriate Service, Reboot the Server, Kill the Parent Process, Verify.

Introduction

This document describes how to work with zombie processes seen on CUCM, IMnP, and other Cisco UC products when logged in using Admin CLI.

Prerequisites

Requirements

Cisco recommends that you have knowledge of using Admin CLI of the UC servers:
● Cisco Unified Communications Manager (CUCM)
● Cisco Unified Instant Messaging and Presence Server (IMnP)
● Cisco Unity Connection Server (CUC)

Components Used

This document is not restricted to specific software and hardware versions. The information in this document was created from the devices in a specific lab environment. All of the devices used in this document started with a cleared (default) configuration. If your network is live, ensure that you understand the potential impact of any command.

Background Information

The Unified Communications servers are essentially Linux-based applications. When a process dies on Linux, it is not removed from memory all at once: its process descriptor (with its PID) stays in memory, which takes only a tiny amount of memory. The process becomes a defunct (zombie) process, and its parent is notified that the child process has died. The parent process is then supposed to read the dead process's exit status so that it can be completely removed from memory. Once this is done using the wait() system call, the zombie process is eliminated from the process table. This is known as reaping the zombie process.
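The reaping sequence described above can be reproduced on a generic Linux host. The sketch below is a minimal illustration, not a Cisco procedure: it assumes a mounted `/proc` file system (the state letter is read from `/proc/<pid>/stat`), and the helper names `proc_state` and `zombie_demo` and the two-second polling limit are invented for the example.

```python
import os
import time


def proc_state(pid):
    """Return the one-letter state from /proc/<pid>/stat, or None if the
    entry does not exist (Linux-only; assumes /proc is mounted)."""
    try:
        with open(f"/proc/{pid}/stat") as f:
            data = f.read()
    except OSError:
        return None
    # The state field is the first token after the parenthesised command name.
    return data.rsplit(")", 1)[1].split()[0]


def zombie_demo():
    """Create a short-lived child; return its state before and after reaping."""
    pid = os.fork()
    if pid == 0:
        os._exit(0)  # child dies immediately
    # The parent has not called wait() yet, so the dead child should show
    # up as a zombie ("Z"). Poll briefly to give the kernel time to update.
    deadline = time.time() + 2.0
    before = proc_state(pid)
    while before != "Z" and time.time() < deadline:
        time.sleep(0.01)
        before = proc_state(pid)
    os.waitpid(pid, 0)        # read the exit status: this reaps the zombie
    after = proc_state(pid)   # the process-table entry should now be gone
    return before, after


if __name__ == "__main__":
    print(zombie_demo())
```

A zombie cannot be killed, because it is already dead; it disappears only when its parent waits on it or when the parent itself exits and the orphan is adopted and reaped by another process.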

Operating Systems Homework Assignment 2

Operating Systems Homework Assignment 2

Preparation

For this homework assignment, you need to get familiar with a Linux system (Ubuntu, Fedora, CentOS, etc.). There are two options to run Linux on your desktop (or laptop) computer:

1. Run a copy of a virtual machine freely available online in your own PC environment using VirtualBox. Create your own Linux virtual machine (Linux as a guest operating system) in Windows. You may also consider installing MS Windows as a guest OS on a Linux host. OS X can host Linux and/or Windows as guest operating systems by installing VirtualBox.
2. Or install a Linux system (Fedora, CentOS Server, Ubuntu, Ubuntu Server) on any hardware system that you control.

Tasks to Be Performed

• Write the programs listed (questions Q1 to Q6), run the program examples, and try to answer the questions.

Reference for the Assignment

Some useful shell commands for process control:

| Command | Description |
|---|---|
| & | When placed at the end of a command, execute that command in the background. |
| CTRL + "Z" | Interrupt and stop the currently running program. The program remains stopped and waiting in the background for you to resume it. |
| fg [%jobnum] | Bring a command in the background to the foreground or resume an interrupted program. If the job number is omitted, the most recently interrupted or background job is put in the foreground. |
| bg [%jobnum] | Place an interrupted command into the background. If the job number is omitted, the most recently interrupted job is put in the background. |
| jobs | List all background jobs. |