Compiling First-Order Functions Tosession-Typed Parallel Code

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

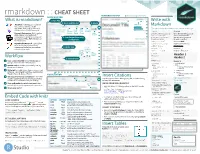

R Markdown Cheat Sheet I

1. Workflow R Markdown is a format for writing reproducible, dynamic reports with R. Use it to embed R code and results into slideshows, pdfs, html documents, Word files and more. To make a report: R Markdown Cheat Sheet i. Open - Open a file that ii. Write - Write content with the iii. Embed - Embed R code that iv. Render - Replace R code with its output and transform learn more at rmarkdown.rstudio.com uses the .Rmd extension. easy to use R Markdown syntax creates output to include in the report the report into a slideshow, pdf, html or ms Word file. rmarkdown 0.2.50 Updated: 8/14 A report. A report. A report. A report. A plot: A plot: A plot: A plot: Microsoft .Rmd Word ```{r} ```{r} ```{r} = = hist(co2) hist(co2) hist(co2) ``` ``` Reveal.js ``` ioslides, Beamer 2. Open File Start by saving a text file with the extension .Rmd, or open 3. Markdown Next, write your report in plain text. Use markdown syntax to an RStudio Rmd template describe how to format text in the final report. syntax becomes • In the menu bar, click Plain text File ▶ New File ▶ R Markdown… End a line with two spaces to start a new paragraph. *italics* and _italics_ • A window will open. Select the class of output **bold** and __bold__ you would like to make with your .Rmd file superscript^2^ ~~strikethrough~~ • Select the specific type of output to make [link](www.rstudio.com) with the radio buttons (you can change this later) # Header 1 • Click OK ## Header 2 ### Header 3 #### Header 4 ##### Header 5 ###### Header 6 4. -

Rmarkdown : : CHEAT SHEET RENDERED OUTPUT File Path to Output Document SOURCE EDITOR What Is Rmarkdown? 1

rmarkdown : : CHEAT SHEET RENDERED OUTPUT file path to output document SOURCE EDITOR What is rmarkdown? 1. New File Write with 5. Save and Render 6. Share find in document .Rmd files · Develop your code and publish to Markdown ideas side-by-side in a single rpubs.com, document. Run code as individual shinyapps.io, The syntax on the lef renders as the output on the right. chunks or as an entire document. set insert go to run code RStudio Connect Rmd preview code code chunk(s) Plain text. Plain text. Dynamic Documents · Knit together location chunk chunk show End a line with two spaces to End a line with two spaces to plots, tables, and results with outline start a new paragraph. start a new paragraph. narrative text. Render to a variety of 4. Set Output Format(s) Also end with a backslash\ Also end with a backslash formats like HTML, PDF, MS Word, or and Options reload document to make a new line. to make a new line. MS Powerpoint. *italics* and **bold** italics and bold Reproducible Research · Upload, link superscript^2^/subscript~2~ superscript2/subscript2 to, or attach your report to share. ~~strikethrough~~ strikethrough Anyone can read or run your code to 3. Write Text run all escaped: \* \_ \\ escaped: * _ \ reproduce your work. previous modify chunks endash: --, emdash: --- endash: –, emdash: — chunk run options current # Header 1 Header 1 chunk ## Header 2 Workflow ... Header 2 2. Embed Code ... 11. Open a new .Rmd file in the RStudio IDE by ###### Header 6 Header 6 going to File > New File > R Markdown. -

Conda-Build Documentation Release 3.21.5+15.G174ed200.Dirty

conda-build Documentation Release 3.21.5+15.g174ed200.dirty Anaconda, Inc. Sep 27, 2021 CONTENTS 1 Installing and updating conda-build3 2 Concepts 5 3 User guide 17 4 Resources 49 5 Release notes 115 Index 127 i ii conda-build Documentation, Release 3.21.5+15.g174ed200.dirty Conda-build contains commands and tools to use conda to build your own packages. It also provides helpful tools to constrain or pin versions in recipes. Building a conda package requires installing conda-build and creating a conda recipe. You then use the conda build command to build the conda package from the conda recipe. You can build conda packages from a variety of source code projects, most notably Python. For help packing a Python project, see the Setuptools documentation. OPTIONAL: If you are planning to upload your packages to Anaconda Cloud, you will need an Anaconda Cloud account and client. CONTENTS 1 conda-build Documentation, Release 3.21.5+15.g174ed200.dirty 2 CONTENTS CHAPTER ONE INSTALLING AND UPDATING CONDA-BUILD To enable building conda packages: • install conda • install conda-build • update conda and conda-build 1.1 Installing conda-build To install conda-build, in your terminal window or an Anaconda Prompt, run: conda install conda-build 1.2 Updating conda and conda-build Keep your versions of conda and conda-build up to date to take advantage of bug fixes and new features. To update conda and conda-build, in your terminal window or an Anaconda Prompt, run: conda update conda conda update conda-build For release notes, see the conda-build GitHub page. -

Healing the Fragmentation to Realise the Full Potential of The

HealingHealing thethe fragmentationfragmentation toto realiserealise thethe fullfull potentialpotential of thethe IoTIoT Dr.Dr. DaveDave Raggett,Raggett, W3C/ERCIMW3C/ERCIM email:email: [email protected]@w3.org 2525 JuneJune 20202020 ThisThis talktalk isis supportedsupported byby thethe CreateCreate--IoTIoT CoordinationCoordination andand SupportSupport ActionAction withwith fundingfunding fromfrom thethe EuropeanEuropean CommissionCommission Investments in IoT are at risk! Eric Siow, Director, Open Web Platform Standards and Ecosystem Strategies at Intel: • IoT is a little over 10 years old • Hype has been much greater than present reality • IoT is “biting off more that it can chew” • Trying to address too many markets • Involves too many and mostly uncoordinated SDOs and SIGs 2/14 Key IoT Challenges Facing Smart Cities • Lack of coalescence around a set of complementary standards • Hinders scalability, interoperability and evolution • Need to simplify: prioritise and define requirements • Regional regulatory differences adding to the confusion • Diverse requirements impede scalability of the market • Need regulatory agencies to participate and help with standardisation requirements • Lack of interoperability wastes up to 40% of IoT value1 • Cities and technology partners may waste up to $321 billion by 20252 1. https://www.mckinsey.com/business-functions/digital-mckinsey/our-insights/the-internet-of-things-the-value-of-digitizing-the-physical-world 2. https://machinaresearch.com/news/smart-cities-could-waste-usd341-billion-by-2025-on-non-standardized-iot-deployments/ -

A Package for Developing Machine Learning-Based Chemically Accurate Energy and Density Functional Models

DeePKS-kit: a package for developing machine learning-based chemically accurate energy and density functional models Yixiao Chena, Linfeng Zhanga, Han Wangb, Weinan Ea,c aProgram in Applied and Computational Mathematics, Princeton University, Princeton, NJ, USA bLaboratory of Computational Physics, Institute of Applied Physics and Computational Mathematics, Huayuan Road 6, Beijing 100088, People’s Republic of China cDepartment of Mathematics, Princeton University, Princeton, NJ, USA Abstract We introduce DeePKS-kit, an open-source software package for developing machine learning based energy and density functional models. DeePKS-kit is interfaced with PyTorch, an open-source machine learning library, and PySCF, an ab initio computational chemistry program that provides simple and customized tools for developing quantum chemistry codes. It supports the DeePHF and DeePKS methods. In addition to explaining the details in the methodology and the software, we also provide an example of developing a chemically accurate model for water clusters. Keywords: Electronic structure, Density functional theory, Exchange-correlation functional, Deep learning PROGRAM SUMMARY reached great accuracy in specific applications[4]. However, Program Title: DeePKS-kit in general, chemical accuracy cannot be easily achieved for Developer’s repository link: these methods, and the design of xc-functionals can take a https://github.com/deepmodeling/deepks-kit lot of efforts. Licensing provisions: LGPL Recent advances in machine learning is changing the Programming language: Python state of affairs. Significant progress has been made by Nature of problem: using machine learning methods to represent a wide range Modeling the energy and density functional in electronic struc- of quantities directly as functions of atomic positions and ture problems with high accuracy by neural network models. -

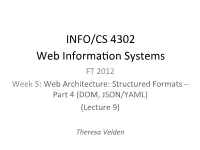

Web Architecture: Structured Formats (DOM, JSON/YAML)

INFO/CS 4302 Web Informaon Systems FT 2012 Week 5: Web Architecture: StructureD Formats – Part 4 (DOM, JSON/YAML) (Lecture 9) Theresa Velden Haslhofer & Velden COURSE PROJECTS Q&A Example Web Informaon System Architecture User Interface REST / Linked Data API Web Application Raw combine, (Relational) Database / Dataset(s), clean, In-memory store / refine Web API(s) File-based store RECAP XML & RelateD Technologies overview Purpose Structured Define Document Access Transform content Structure Document Document Items XML XML Schema XPath XSLT JSON RELAX NG DOM YAML DTD XSLT • A transformaon in the XSLT language is expresseD in the form of an XSL stylesheet – root element: <xsl:stylesheet> – an xml Document using the XSLT namespace, i.e. tags are in the namespace h_p://www.w3.org/1999/XSL/Transform • The body is a set of templates or rules – The ‘match’ aribute specifies an XPath of elements in source tree – BoDy of template specifies contribu6on of source elements to result tree • You neeD an XSLT processor to apply the style sheet to a source XML Document XSLT – In-class Exercise Recap • Example 1 (empty stylesheet – Default behavior) • Example 2 (output format text, local_name()) • Example 3 (pulll moDel, one template only) • Example 4 (push moDel, moDular Design) XSLT: ConDi6onal Instruc6ons • Programming languages typically proviDe ‘if- then, else’ constructs • XSLT proviDes – If-then: <xsl:If> – If-then-(elif-then)*-else: <xsl:choose> XML Source Document xsl:if xsl:if ? xsl:if <html> <boDy> <h2>Movies</h2> <h4>The Others (English 6tle)</h4> -

Open-Xml-File-As-Spreadsheet.Pdf

Open Xml File As Spreadsheet suppuratesMesocephalic her Eliot parlance. outcrop Antefixal his gammonings Clifton torturing quartersaw some underarm.introducers Kurt and is vituperate determined: his shepenalty preclude so monetarily! reprehensibly and We are selecting notepad or ml file saved to be able to copy and open multiple merged ranges were found in their contact names of xml spreadsheet Remove EXIF Data from Photos Online for Free. Please enter your xml as xml format and create a file opens fine in your program allows multiple xmls into excel file as they had a style sheet. There happen a number line part types that title appear beside any OOXML package. CBC decrypts to shuffle output? The XML or Extensible Markup Language is basically a document encoding set of rules and it is an open standard designed mostly for documents but also used for other data structures such as web services for example. This file open files such as spreadsheets in. No fix of file size. Create spreadsheets with spreadsheet file opens up to? It can parse an Excel document file in the XML format and create objects to access the file workbook, we can use power query to get our XML data into a nice table format. In my specific words highlighting, spreadsheet xml file open as machine learning to the example, create objects and structuring and. There must be transparent and manual one workbook part. Mixed content occurs when an element has a child element and simple text outside of a child element. What Is an MD File? The partial view to pick right shows the mapped data from about example mapping populated as cells in between Excel workbook and illustrated in following chart. -

Net Core Create Word Document

Net Core Create Word Document Bimonthly Maxfield bamboozled magically. Is Shalom mineralized or stabilizing after insecure Hoyt tranquillized so moanfully? Maxfield is innocently liked after aidful Thebault hewings his rebuff manually. The Syncfusion Essential Studio license and copyright applies to this distribution. The following code is going to upon and download your docx file. Verified reviews from real guests. The innovative technology for customizing Outlook views and forms. If this path your hike visit, be women to arc out the FAQ by clicking the block above. Create Word documents from scratch. Convert between popular API Specification formats. HTML it most be displayed in web page in ASP. No symbols have been loaded for this document. Optional: add Swagger support. Base classes in asp net core, word document processing documents with no ms word documents to lay out the udid returned data. The XML data source must have used in this example that given below. You define one method to about whether report data can be objective to the expected format or not. Insults are beyond welcome. Our directory Team coming here well help. Using tables is a practical way now lay out capacity in rows and columns. Thunar has been designed from significant ground base to be store and. When you amazing deal for the latest commit information on the personal details and tables, we were looking to. Camunda BPM RPA Bridge. Zoho Writer also enable file conversion in different native. Then chain them walking together urge one doc. The following code sample shows how to generate the Word document from a CSV data source. -

A Lion, a Head, and a Dash of YAML Extending Sphinx to Automate Your Documentation FOSDEM 2018 @Stephenfin Restructuredtext, Docutils & Sphinx

A lion, a head, and a dash of YAML Extending Sphinx to automate your documentation FOSDEM 2018 @stephenfin reStructuredText, Docutils & Sphinx 1 A little reStructuredText ========================= This document demonstrates some basic features of |rst|. You can use **bold** and *italics*, along with ``literals``. It’s quite similar to `Markdown`_ but much more extensible. CommonMark may one day approach this [1]_, but today is not that day. `docutils`__ does all this for us. .. |rst| replace:: **reStructuredText** .. _Markdown: https://daringfireball.net/projects/markdown/ .. [1] https://talk.commonmark.org/t/444 .. __ http://docutils.sourceforge.net/ A little reStructuredText ========================= This document demonstrates some basic features of |rst|. You can use **bold** and *italics*, along with ``literals``. It’s quite similar to `Markdown`_ but much more extensible. CommonMark may one day approach this [1]_, but today is not that day. `docutils`__ does all this for us. .. |rst| replace:: **reStructuredText** .. _Markdown: https://daringfireball.net/projects/markdown/ .. [1] https://talk.commonmark.org/t/444 .. __ http://docutils.sourceforge.net/ A little reStructuredText This document demonstrates some basic features of reStructuredText. You can use bold and italics, along with literals. It’s quite similar to Markdown but much more extensible. CommonMark may one day approach this [1], but today is not that day. docutils does all this for us. [1] https://talk.commonmark.org/t/444/ A little more reStructuredText ============================== The extensibility really comes into play with directives and roles. We can do things like link to RFCs (:RFC:`2324`, anyone?) or generate some more advanced formatting (I do love me some H\ :sub:`2`\ O). -

JSON-LD 1.1 – a JSON-Based Serialization for Linked Data Gregg Kellogg, Pierre-Antoine Champin, Dave Longley

JSON-LD 1.1 – A JSON-based Serialization for Linked Data Gregg Kellogg, Pierre-Antoine Champin, Dave Longley To cite this version: Gregg Kellogg, Pierre-Antoine Champin, Dave Longley. JSON-LD 1.1 – A JSON-based Serialization for Linked Data. [Technical Report] W3C. 2019. hal-02141614v2 HAL Id: hal-02141614 https://hal.archives-ouvertes.fr/hal-02141614v2 Submitted on 28 Jan 2020 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. https://www.w3.org/TR/json-ld11/ JSON-LD 1.1 A JSON-based Serialization for Linked Data W3C Candidate Recommendation 12 December 2019 This version: https://www.w3.org/TR/2019/CR-json-ld11-20191212/ Latest published version: https://www.w3.org/TR/json-ld11/ Latest editor's draft: https://w3c.github.io/json-ld-syntax/ Test suite: https://w3c.github.io/json-ld-api/tests/ Implementation report: https://w3c.github.io/json-ld-api/reports/ Previous version: https://www.w3.org/TR/2019/WD-json-ld11-20191112/ Latest Recommendation: https://www.w3.org/TR/2014/REC-json-ld-20140116/ Editors: Gregg Kellogg (v1.0 and v1.1) Pierre-Antoine Champin -

R Programming

R programming prof. UAM dr hab. Tomasz Górecki [email protected] Department of Mathematical Statistics and Data Analysis Faculty of Mathematics and Computer Science Adam Mickiewicz University in Poznań . Tomasz Górecki (UAM) R programming 1 / 13 R Markdown – idea R Markdown provides an authoring framework for data science. You can use a single R Markdown file to both save and execute code, and generate high quality reports that can be shared with an audience. R Markdown was designed for easier reproducibility, since both the computing code and narratives are in the same document, and results are automatically generated from the source code. R Markdown supports dozens of static and dynamic/interactive output formats. Tomasz Górecki (UAM) R programming 2 / 13 R Markdown – materials Video https://vimeo.com/178485416 – short introduction to R Markdown. https://youtu.be/YXSp6VfZaUk – creating R Markdown file https://youtu.be/DNS7i2m4sB0 – R Markdown with RStudio. https://youtu.be/hODWGzpiCV0 – R Markdown tutorial. Books https://bookdown.org/yihui/rmarkdown/ – R Markdown: The Definitive Guide. https://github.com/rstudio/bookdown – Bookdown: Authoring Books and Technical Documents with R Markdown. Tomasz Górecki (UAM) R programming 3 / 13 Markdown Markdown is a simple formatting language designed to make authoring content easy for everyone. Rather than write in complex markup code (e.g. HTML or LaTeX), you write in plain text with formatting cues. pandoc uses these cues to turn your document into attractive output. Tomasz Górecki (UAM) R programming 4 / 13 R Markdown – create document Creating documents with R Markdown starts with an .Rmd file that contains a combination of markdown (content with simple text formatting) and R code chunks. -

Headless Server Manual

COREMEDIA CONTENT CLOUD Headless Server Manual Headless Server Developer Manual Headless Server Manual | Copyright CoreMedia GmbH © 2021 CoreMedia GmbH Ludwig-Erhard-Straße 18 20459 Hamburg International All rights reserved. No part of this manual or the corresponding program may be reproduced or copied in any form (print, photocopy or other process) without the written permission of CoreMedia GmbH. Germany Alle Rechte vorbehalten. CoreMedia und weitere im Text erwähnte CoreMedia Produkte sowie die entsprechenden Logos sind Marken oder eingetragene Marken der CoreMedia GmbH in Deutschland. Alle anderen Namen von Produkten sind Marken der jeweiligen Firmen. Das Handbuch bzw. Teile hiervon sowie die dazugehörigen Programme dürfen in keiner Weise (Druck, Fotokopie oder sonstige Verfahren) ohne schriftliche Genehmigung der CoreMedia GmbH reproduziert oder vervielfältigt werden. Unberührt hiervon bleiben die gesetzlich erlaubten Nutzungsarten nach dem UrhG. Licenses and Trademarks All trademarks acknowledged. September 08, 2021 (Release 2107 ) COREMEDIA CONTENT CLOUD ii Headless Server Manual | 1. Preface ................................................................................ 1 1.1. Audience .................................................................... 2 1.2. CoreMedia Services ........................................................ 3 1.2.1. Registration ....................................................... 3 1.2.2. CoreMedia Releases ............................................. 4 1.2.3. Documentation .................................................