Practical Dynamic Software Updating for C∗

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Laboratory 2 ARP; Zebra Routing Daemon Part1. Introduction

Facultatea de Electronică şi Telecomunicaţii Communications Network Laboratory 1 Laboratory 2 ARP; Zebra routing daemon Part1. Introduction ARP Address Resolution Protocol, ARP, is used by a system, which wants to send data an IP address on the local network, and it doesn’t know the destination MAC address. Systems keep an ARP look-up table where they store information about the association between the IP and MAC addresses. If the MAC address is not in the ARP table, then ARP protocol is used it knowing the destination IP addresss. ARP operation for communications inside the local network: • System checks its ARP table for the MAC address associated with the IP address. • If the MAC address is not in the ARP table, an ARP request is broadcasted in the local network, requesting the MAC address for the specified IP address. • The machine with the requested IP address will reply with an ARP packet containing its MAC address. • Thepacket is sent to the learned MAC address. ARP operation for communication between hosts located in different networks • System determines that the IP address does not belong to the local network and decides to send the packet to the gateway. It has to determine the MAC address of the gateway. • It broadcast an ARP request asking for the MAC address of the IP address belonging to the gateway. It knows the gateway’s IP address from the static route specifying the default gateway. • The gateway will reply with its MAC address. • The packet is sent to the gateway. • The gateway will be in charge with sending the packet to the next hop towards the destination. -

Zebra 2.0 and Lagopus: Newly-Designed Routing Stack On

Zebra 2.0 and Lagopus: newly-designed routing stack on high-performance packet forwarder Kunihiro Ishiguro∗, Yoshihiro Nakajimay, Masaru Okiz, Hirokazu Takahashiy ∗ Hash-Set, Tokyo, Japan y Nippon Telegraph and Telephone Corporation, Tokyo, Japan z Internet Initiative Japan Inc, Tokyo, Japan e-mail: [email protected], [email protected], [email protected], [email protected] Abstract First GNU Zebra architecture and its issues Zebra 2.0 is the new version of open source networking When we designed the first GNU Zebra, the biggest ambition software which is implemented from scratch. Zebra 2.0 was to make multi-process networking software work. The is designed to supports BGP/OSPF/LDP/RSVP-TE and co- first GNU Zebra is made from a collection of several dae- working with Lagopus as fast packet forwarder with Open- mons that work together to build the routing table. There may Flow API. In this new version of Zebra, it adapts new archi- be several protocol-specific routing daemons and Zebra’s ker- tecture which is mixture of thread model and task completion nel routing manager. Figure 1 shows the architecture of the model to achieve maximum scalability with multi-core CPUs. first GNU Zebra. RIB (Routing Information Base) / FIB (For- Zebra has separate independent configuration manager that warding Information Base) and the interface manager are sep- supports commit/rollback and validation functionality. The configuration manager understand YANG based configuration arated into an isolated process called ’zebra’. All of protocol model so we can easily add a new configuration written in handling is also separated to it’s own process such as ’ripd’, YANG. -

Pipenightdreams Osgcal-Doc Mumudvb Mpg123-Alsa Tbb

pipenightdreams osgcal-doc mumudvb mpg123-alsa tbb-examples libgammu4-dbg gcc-4.1-doc snort-rules-default davical cutmp3 libevolution5.0-cil aspell-am python-gobject-doc openoffice.org-l10n-mn libc6-xen xserver-xorg trophy-data t38modem pioneers-console libnb-platform10-java libgtkglext1-ruby libboost-wave1.39-dev drgenius bfbtester libchromexvmcpro1 isdnutils-xtools ubuntuone-client openoffice.org2-math openoffice.org-l10n-lt lsb-cxx-ia32 kdeartwork-emoticons-kde4 wmpuzzle trafshow python-plplot lx-gdb link-monitor-applet libscm-dev liblog-agent-logger-perl libccrtp-doc libclass-throwable-perl kde-i18n-csb jack-jconv hamradio-menus coinor-libvol-doc msx-emulator bitbake nabi language-pack-gnome-zh libpaperg popularity-contest xracer-tools xfont-nexus opendrim-lmp-baseserver libvorbisfile-ruby liblinebreak-doc libgfcui-2.0-0c2a-dbg libblacs-mpi-dev dict-freedict-spa-eng blender-ogrexml aspell-da x11-apps openoffice.org-l10n-lv openoffice.org-l10n-nl pnmtopng libodbcinstq1 libhsqldb-java-doc libmono-addins-gui0.2-cil sg3-utils linux-backports-modules-alsa-2.6.31-19-generic yorick-yeti-gsl python-pymssql plasma-widget-cpuload mcpp gpsim-lcd cl-csv libhtml-clean-perl asterisk-dbg apt-dater-dbg libgnome-mag1-dev language-pack-gnome-yo python-crypto svn-autoreleasedeb sugar-terminal-activity mii-diag maria-doc libplexus-component-api-java-doc libhugs-hgl-bundled libchipcard-libgwenhywfar47-plugins libghc6-random-dev freefem3d ezmlm cakephp-scripts aspell-ar ara-byte not+sparc openoffice.org-l10n-nn linux-backports-modules-karmic-generic-pae -

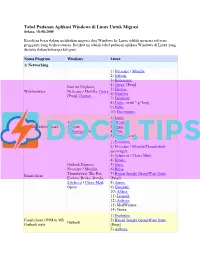

List Software Pengganti Windows Ke Linux

Tabel Padanan Aplikasi Windows di Linux Untuk Migrasi Selasa, 18-08-2009 Kesulitan besar dalam melakukan migrasi dari Windows ke Linux adalah mencari software pengganti yang berkesesuaian. Berikut ini adalah tabel padanan aplikasi Windows di Linux yang disusun dalam beberapa kategori. Nama Program Windows Linux 1) Networking. 1) Netscape / Mozilla. 2) Galeon. 3) Konqueror. 4) Opera. [Prop] Internet Explorer, 5) Firefox. Web browser Netscape / Mozilla, Opera 6) Nautilus. [Prop], Firefox, ... 7) Epiphany. 8) Links. (with "-g" key). 9) Dillo. 10) Encompass. 1) Links. 1) Links 2) ELinks. Console web browser 2) Lynx 3) Lynx. 3) Xemacs + w3. 4) w3m. 5) Xemacs + w3. 1) Evolution. 2) Netscape / Mozilla/Thunderbird messenger. 3) Sylpheed / Claws Mail. 4) Kmail. Outlook Express, 5) Gnus. Netscape / Mozilla, 6) Balsa. Thunderbird, The Bat, 7) Bynari Insight GroupWare Suite. Email client Eudora, Becky, Datula, [Prop] Sylpheed / Claws Mail, 8) Arrow. Opera 9) Gnumail. 10) Althea. 11) Liamail. 12) Aethera. 13) MailWarrior. 14) Opera. 1) Evolution. Email client / PIM in MS 2) Bynari Insight GroupWare Suite. Outlook Outlook style [Prop] 3) Aethera. 4) Sylpheed. 5) Claws Mail 1) Sylpheed. 2) Claws Mail Email client in The Bat The Bat 3) Kmail. style 4) Gnus. 5) Balsa. 1) Pine. [NF] 2) Mutt. Mutt [de], Pine, Pegasus, Console email client 3) Gnus. Emacs 4) Elm. 5) Emacs. 1) Knode. 2) Pan. 1) Agent [Prop] 3) NewsReader. 2) Free Agent 4) Netscape / Mozilla Thunderbird. 3) Xnews 5) Opera [Prop] 4) Outlook 6) Sylpheed / Claws Mail. 5) Netscape / Mozilla Console: News reader 6) Opera [Prop] 7) Pine. [NF] 7) Sylpheed / Claws Mail 8) Mutt. -

OSPF Link-State Database in GNU Zebra

Ecole Nationale Superieure´ des Tel´ ecommunica´ tions Dep´ artement Informatique et Resea´ ux Student's Project OSPF Link-State Database in GNU Zebra Gerhard Munz¨ [email protected] Tutor: Jean-Louis Rougier 1 July 2002 Abstract OSPF (Open Shortest Path First) is a widely used link-state routing protocol for intra-domain routing, a so-called Interior Gateway Protocol (IGP). As each OSPF router takes his routing decisions on the basis of its link-state database reflecting the network topology, timeliness of this database is essential for network stability and fast convergence in case of topology changes. Present development of OSPF aims at accelerating the exchange of link-state information, which will cause an increasing number of OSPF messages received and sent by the routers. A generator of Link State Advertisements (LSAs) is needed to examine the behavior of OSPF routers under such conditions. Within this project, the code of the free routing software GNU Zebra was exam- ined with respect to link-state related functionality, such as originating and refresh- ing LSAs. The results can be used to build a Zebra based LSA generator. Moreover, a patch is presented that inserts fake AS-external-LSAs into the link-state database. 1 Contents 1 Introduction 2 2 GNU Zebra 3 2.1 General Description . 3 2.2 Implementation Features . 3 2.3 Installation and Configuration . 3 3 Zebra's OSPF daemon 4 3.1 Source Files . 4 3.2 Router-LSAs and Network-LSAs . 5 3.3 AS-external-LSAs . 6 3.4 Summary-LSAs and ASBR-summary-LSAs . 8 3.5 LSA Refresh Mechanisms . -

Securing BGP Using External Security Monitors ∗

Securing BGP Using External Security Monitors ∗ Patrick Reynolds, Oliver Kennedy, Emin Gun¨ Sirer, Fred B. Schneider freynolds,okennedy,egs,[email protected] Abstract External security monitor Safety specification Security modifications to legacy network protocols are ex- Trusted platform pensive and disruptive. This paper outlines an approach, based on external security monitors, for securing legacy protocols by deploying additional hosts that locally mon- Legacy host itor the inputs and outputs of each host executing the pro- Unmodified network protocol tocol, check the behavior of the host against a safety spec- ification, and communicate using an overlay to alert other Figure 1: An external security monitor monitoring the hosts about invalid behavior and to initiate remedial ac- traffic for a legacy host on two network links. tions. Trusted computing hardware provides the basis for trust in external security monitors. This paper applies this approach to secure the Border Gateway Protocol, yield- disruptive, and thus more likely to get deployed. ing an external security monitor called N-BGP. N-BGP We propose external security monitors (ESMs) as a can accurately monitor a BGP router using commodity means of identifying malicious, misconfigured, or com- trusted computing hardware. Deploying N-BGP at a ran- promised hosts, thereby providing a way to secure legacy dom 10% of BGP routers is sufficient to guarantee the se- network protocols. An ESM is an additional trusted host curity of 80% of Internet routes where both endpoints are or process dedicated to ensuring that one host executing a monitored by N-BGP. Overall, external security monitors protocol complies with a safety specification. -

Kupní Smlouva

KUPNÍ SMLOUVA č. prodávajícího. č. kupujícího: Smluvní strany 1. Prodávající: OCC s.r.o. - Lidická 198/68, Bolevec, 323 00 Pizen Sídlo: zástupce jednatele) Jednající: Pavel Kraus a Jan Toman (zplnomo y IČ: 27970922 DIČ: CZ2797092; Kontakt: 7 , 1^11/ . .... , . ixroickvm soudem v Plzni, oddíl C 19349 Zapsán v obchodním rejstříku vedenem Krajským Bankovní spojení: Raiffeisenbank a.s., č. účtu: 2588 a 2. Kupující: Československé armády 408, 502 00 Hradec Králové Sídlo: Jednající: MUDr. Zdeněk Fink, primátor města IČ: 00268810 Bankovní spojení: ..... , , v . rnV.. nodle § 2079 a násl. z. č. 89/2012 Sb., uzavírají mze uvedeného dne, mesice a toku PUVJ 3 občanský zákoník v platném znění, tuto kupní smlouvu: « ,nriáMk\j PC a dalšího IT vybavení Modernizace infrastruktury ZS - dodav y I. Úvodní ustanovení 1. Statutární orgány (příp. další osoby oprávněné k podpisu smlouvy) uvedené v záhlaví smlouvy prohlašují, že jsou oprávněny v souladu s obecně závaznými právními předpisy a vnitřními předpisy příslušné smluvní strany podepsat bez dalšího tuto kupní smlouvu. 2. Prodávající prohlašuje, že má všechna podnikatelská oprávnění potřebná k realizaci této smlouvy. 3. Tato smlouva je uzavřena na základě výsledků zadávacího řízení na veřejnou zakázku zadanou v otevřeném nadlimitním řízení č.j. MMHK/030710/2018 ze dne 15. 2. 2018 pod názvem „Modernizace infrastruktury ZŠ - dodávky PC a dalšího IT vybavení". V rámci uvedeného zadávacího řízení byla nabídka prodávajícího vybrána jako nejvýhodnější usnesením č. RM/2018/724 na 10. zasedání Rady města Hradec Králové dne 19. 6. 2018. 4. Kupující bude na tento projekt žádat o spolufinancování z Evropské unie prostřednictvím Integrovaného regionálního operačního programu, SC 2.4: Zvýšení kvality a dostupnosti infrastruktury pro vzdělávání a celoživotního učení, výzva č. -

The Table of Equivalents / Replacements / Analogs of Windows Software in Linux

The table of equivalents / replacements / analogs of Windows software in Linux. Last update: 16.07.2003, 31.01.2005, 27.05.2005, 04.12.2006 You can always find the last version of this table on the official site: http://www.linuxrsp.ru/win-lin-soft/. This page on other languages: Russian, Italian, Spanish, French, German, Hungarian, Chinese. One of the biggest difficulties in migrating from Windows to Linux is the lack of knowledge about comparable software. Newbies usually search for Linux analogs of Windows software, and advanced Linux-users cannot answer their questions since they often don't know too much about Windows :). This list of Linux equivalents / replacements / analogs of Windows software is based on our own experience and on the information obtained from the visitors of this page (thanks!). This table is not static since new application names can be added to both left and the right sides. Also, the right column for a particular class of applications may not be filled immediately. In future, we plan to migrate this table to the PHP/MySQL engine, so visitors could add the program themselves, vote for analogs, add comments, etc. If you want to add a program to the table, send mail to winlintable[a]linuxrsp.ru with the name of the program, the OS, the description (the purpose of the program, etc), and a link to the official site of the program (if you know it). All comments, remarks, corrections, offers and bugreports are welcome - send them to winlintable[a]linuxrsp.ru. Notes: 1) By default all Linux programs in this table are free as in freedom. -

Lab 2: Introduction to Free Range Routing (FRR)

BORDER GATEWAY PROTOCOL Lab 2: Introduction to Free Range Routing (FRR) Document Version: 02-18-2020 Award 1829698 “CyberTraining CIP: Cyberinfrastructure Expertise on High-throughput Networks for Big Science Data Transfers” Lab 2: Introduction to Free Range Routing (FRR) Contents Overview ............................................................................................................................. 3 Objectives............................................................................................................................ 3 Lab settings ......................................................................................................................... 3 Lab roadmap ....................................................................................................................... 3 1 Introduction to FRR ..................................................................................................... 3 1.1 FRR architecture ................................................................................................... 4 1.2 FRR and Mininet integration ................................................................................ 5 2 Lab topology................................................................................................................ 6 2.1 Lab settings........................................................................................................... 6 2.2 Open the topology .............................................................................................. -

Evaluation of Virtual Routing Appliances As Routers Virtual Environment

Rochester Institute of Technology RIT Scholar Works Theses 2008 Evaluation of virtual routing appliances as routers virtual environment Ahmed Al-Amoudi Follow this and additional works at: https://scholarworks.rit.edu/theses Recommended Citation Al-Amoudi, Ahmed, "Evaluation of virtual routing appliances as routers virtual environment" (2008). Thesis. Rochester Institute of Technology. Accessed from This Thesis is brought to you for free and open access by RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected]. Rochester Institute of Technology B. Thomas Golisano College of Computing and Information Sciences Master of Science in Networking, Security and System Administration ~ Thesis Report Approval Form ~ Student Name: Ahmed Al-amoudi Thesis Title: Evaluation of Virtual Routing Appliances as routers virtual environment ~ MS Thesis Committee ~ Name Signature Date Prof. Charles Border Chair Prof. Luther Troell Committee Member Prof. Pete Lutz Committee Member 1 Evaluation of Virtual Routing Appliances as routers in a virtual environment By Ahmed Al-amoudi Project submitted in partial fulfillment of the requirements for the Degree of Master of Science in Networking, Security, and System Administration Rochester Institute of Technology B. Thomas Golisano College of Computing and Information Sciences August 20, 2008 2 Abstract : A virtual routing appliance is a system for the rapid, automated management and employment of virtual networks. Virtual routing appliances utilize virtual machines to enable virtual infrastructure, and they have been used commonly in order to implement experimental networks and devoted subnets over a virtual network. Existing research in this area such as cluster-based virtual routers, and Xen routers require the use of physical resources to establish connectivity and to guarantee efficient resource utilization. -

Les Logiciels Sous Linux Pour Remplacer Les Logiciels Équivalents

Les logiciels sous Linux pour remplacer les logiciels équivalents sous Windows La table des équivalences / remplacements / analogies des logiciels sous Linux équivalents aux produits commerciaux sous Windows Dernière mise à jour : 13.07.2003. Site d'origine de ce projet : http://linuxshop.ru/linuxbegin/win-lin-soft-en/ This page on other languages: Russian version,English version,Italian version, Spanish version, French version, German version 27.09.2003 : Mises à jour (ajout de la sous-section "Parity Archive Volume Set" ou gestion des fichiers . par pour sauvegardes et restauration orientée archives Usenet dans "travailler avec les fichiers") 13.07.2003 : Mises à jour + corrections mineures. 30.06.2003 : Mises à jour + corrections mineures. 29.06.2003 : Traduction française par OM Conseil + quelques ajouts et modifications. 19.04.2003 : Beaucoup de corrections et d'ajouts. Merci à tous ceux qui ont contribué :-) 15.03.2003 : Nouvelle section : Les Emulateurs 07.03.2003 : 1ère édition en langue Russe de cette table. Cette page est sous licence GNU FDL L'une des tâches les plus difficiles lorsque l'on veut migrer de Windows à Linux est l'absence de logiciels comparables. Les utilisateurs débutants recherchent des logiciels Linux identiques à ceux qu'ils utilisent sous Windows et les utilisateurs avancés sous Linux ne peuvent les aider puisque bien souvent ils ne connaissent pas bien les solutions existantes sous Windows :-) Cette liste d'équivalence est basée sur les connaissances de ses auteurs d'origine Valery V. Kachurov, Nesov Artem, ainsi que de ma propre expérience sur les deux systèmes et de celles des lecteurs de cette page (ou des traductions existantes) qui y participent en envoyant leurs commentaires et idées par email. -

Experimental Results About Multiprotocol Routing and Route Redistribution

Experimental results about Multiprotocol Routing and Route Redistribution Eugen PETAC, Member IEEE , Bogdan MUSAT network by departments, VLANs (Virtual LAN) were Abstract — This paper considers an experimental platform used, because they allow for logical grouping, no matter of and some results about the problem of multi protocol routing physical location. All computers within a department were and route redistribution. Our particular lab platform, with connected to the same VLAN, like this: IT – VLAN ID 10, Zebra/ Quagga routing software packages, is analyzed. The particularity of this case study is that we can link normal Design – VLAN ID 20, Human Resources – VLAN ID 30, computers running Zebra / Quagga together with Cisco Public Relations – VLAN ID 40. dedicated equipments. Interrupting various links between The connection between branches and headquarters is routers for studying convergence time as well as shutting based on a Frame Relay network, with the two ends of down various routers to study network flexibility led to each connection being Ethernet based. Because of didactic results according to routing protocols specifications. reasons, the Frame Relay network does not appear on network schematic, the link between Zebra2 and Keywords — IGRP, OSPF, Quagga, RIP, route redistribution, routing, VLAN, Zebra Cisco2513 being a direct one. The Iasi branch has a single LAN and the connection with headquarters is done through a serial link. Headquarters in Bucharest also has a LAN, I. INTRODUCTION but it also has an Internet connection. This Internet connection is used also by branches. HIS paper studies a lab platform which is used to Each branch can choose its own routing protocol.