Mining Relational and Nonrelational Data with IBM Intelligent Miner For

Total pages: 16

File type: PDF, Size: 1020Kb

Recommended publications

Netezza EOL: IBM IAS Is a Flawed Upgrade Path

Author: Marc Staimer. WHITE PAPER. Netezza EOL: IBM IAS Is a Flawed Upgrade Path. Executive Summary: It is a common technology vendor fallacy to compare their systems with their competitors by focusing on the features, functions, and specifications they have, but the other guy doesn’t. Vendors ignore the opposite while touting hardware specs that mean little. It doesn’t matter if those features, functions, and specifications provide anything meaningfully empirical to the business applications that rely on the system. It only matters if it demonstrates an advantage on paper. This is called specsmanship. It’s similar to starting an argument with proof points. The specs, features, and functions are proof points that the system can solve specific operational problems. They are the “what” that solves the problem or problems. They mean nothing by themselves. Specsmanship is defined by Wikipedia as: “inappropriate use of specifications or measurement results to establish supposed superiority of one entity over another, generally when no such superiority exists.” It’s the frequent ineffective sales process utilized by IBM. A textbook example of this is IBM’s attempt to move their Netezza users to the IBM Integrated Analytics System (IIAS). IBM is compelling their users to move away from Netezza. In the fall of 2017, IBM announced to the Enzee community (Netezza users) that they can no longer purchase or upgrade PureData System for Analytics (the most recent IBM name for its Netezza appliances), and it will end-of-life all support next year. -

SAP Database Administration with IBM

André Faustmann, Michael Greulich, André Siegling, Benjamin Wegner, and Ronny Zimmerman. SAP® Database Administration with IBM® DB2®. Bonn, Boston. Contents at a Glance: 1 Introduction; 2 SAP System Landscapes; 3 Basics and Architecture of the IBM DB2 for LUW Database; 4 Lifecycle; 5 Administration Tools Inside and Outside the SAP System; 6 Backup, Restore, and Recovery; 7 Monitoring DB2 SAP Systems with SAP Solution Manager; 8 SAP NetWeaver Business Warehouse and IBM DB2 for LUW; 9 Common Problems and Their Solutions for DB2 Administrators. Contents: Foreword; Acknowledgments; 1 Introduction; 1.1 Who This Book Is For; 1.2 Focus of This Book -

IBM DB2 for z/OS: The Database for Gaining a Competitive Advantage!

Why You Should Read This Book. Tom Ramey, Director, DB2 for z/OS, IBM Silicon Valley Laboratory: “This book is a ‘must read’ for Enterprise customers and contains a wealth of valuable information! “It is clear that there is a technology paradigm shift underway, and this is opening enormous opportunities for companies in all industries. Adoption of Cloud, Mobile, and Analytics promises to revolutionize the way we do business and will add value to a company’s business processes across all functions from sales, marketing, procurement, manufacturing and finance. “IT will play a significant role enabling this shift. Read this book and find out how to integrate the heart of your infrastructure, DB2 for z/OS, with new technologies in order to maximize your investment and drive new value for your customers.” Located at IBM’s Silicon Valley Laboratory, Tom is the director of IBM’s premier relational database management system. His responsibilities include Architecture, Development, Service, and Customer Support for DB2. He leads development labs in the United States, Germany, and China. Tom works closely with IBM’s largest clients to ensure that DB2 for z/OS continues as the leading solution for modern applications, encompassing OLTP to mobile to analytics. At the same time he continues an uncompromising focus on meeting the needs of the most demanding operational environments on Earth, through DB2’s industry-leading performance, availability, scaling, and security capabilities. IBM DB2 for z/OS: The Database for Gaining a Competitive Advantage! Shantan Kethireddy, Jane Man, Surekha Parekh, Pallavi Priyadarshini, and Maryela Weihrauch. MC Press Online, LLC, Boise, ID 83703 USA. First Edition. First Printing: October 2015. © Copyright 2015 IBM. -

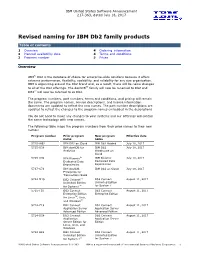

Revised Naming for IBM Db2 Family Products

IBM United States Software Announcement 217-363, dated July 18, 2017. Revised naming for IBM Db2 family products. Table of contents: Overview; Planned availability date; Program number; Ordering information; Terms and conditions; Prices. Overview: IBM(R) Db2 is the database of choice for enterprise-wide solutions because it offers extreme performance, flexibility, scalability, and reliability for any size organization. IBM is organizing around the Db2 brand and, as a result, there will be name changes to all of the Db2 offerings. The dashDB(TM) family will now be renamed to Db2 and DB2(R) will now be referred to as Db2. The program numbers, part numbers, terms and conditions, and pricing will remain the same. The program names, service descriptions, and license information documents are updated to reflect the new names. The part number descriptions are updated to reflect the changes to the program names embedded in the descriptions. You do not need to make any changes to your systems and our offerings will contain the same technology with new names. The following table maps the program numbers from their prior names to their new names (program number: prior program name, new program name, effective date). 5725-W92: IBM DB2 on Cloud, renamed IBM Db2 Hosted, July 18, 2017. 5725-U38: IBM dashDB for Analytics, renamed IBM Db2 Warehouse on Cloud, July 18, 2017. 5725-U32: IBM Bluemix(R) Dedicated Data Repositories, renamed IBM Bluemix Dedicated Data Repositories, July 18, 2017. 5737-C74: IBM dashDB Enterprise for Transactions SaaS, renamed IBM Db2 on Cloud, July 18, 2017. 5724-M15: DB2 Connect(TM) -

Developing Embedded SQL Applications

IBM DB2 10.1 for Linux, UNIX, and Windows. Developing Embedded SQL Applications. SC27-3874-00. Note: Before using this information and the product it supports, read the general information under Appendix B, “Notices,” on page 209. Edition Notice: This document contains proprietary information of IBM. It is provided under a license agreement and is protected by copyright law. The information contained in this publication does not include any product warranties, and any statements provided in this manual should not be interpreted as such. You can order IBM publications online or through your local IBM representative. To order publications online, go to the IBM Publications Center at http://www.ibm.com/shop/publications/order. To find your local IBM representative, go to the IBM Directory of Worldwide Contacts at http://www.ibm.com/planetwide/. To order DB2 publications from DB2 Marketing and Sales in the United States or Canada, call 1-800-IBM-4YOU (426-4968). When you send information to IBM, you grant IBM a nonexclusive right to use or distribute the information in any way it believes appropriate without incurring any obligation to you. © Copyright IBM Corporation 1993, 2012. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Chapter 1. Introduction to embedded SQL; Include files for COBOL embedded SQL applications; Embedding SQL statements -

Proc SQL Vs. Pass-Thru SQL on DB2® Or How I Found a Day

NESUG 15 Beginning Tutorials. Proc SQL vs. Pass-Thru SQL on DB2® Or How I Found a Day. Jeffrey S. Cohen, American Education Services/PHEAA. Abstract: Many people use Proc SQL to query DB2 databases yet ignore the Pass-thru ability of this Proc. This paper will compare the Proc SQL of SAS® and Pass-thru SQL on the same 30 million-row table to highlight the time and resource savings that Pass-through SQL can give. Overview: One of the most useful features of SAS software is its ability to read data in almost any form or storage medium from multiple data sets. When accessing data from a Database Management System (DBMS) such as DB2, the SQL procedure is required. Like many SAS procedures there are a variety of options available, some greatly impacting performance. We will examine three techniques to use when joining three tables with Proc SQL and the results on performance. [...] When extracting data from multiple tables, you must employ Join conditions to avoid a Cartesian product, where each row from table one will be joined with each row in table two. A Join condition will tell the DBMS how to match the rows of the two tables. Consider the above example, only now we want the color of the person's car as well: Select A.Name, A.Age, A.City, B.Color From Table_Demographics A Inner Join Table_Cars B on A.Name = B.Name Where A.Age < 30 and (A.City = 'Syracuse' or A.State = 'Maine') Notice that when selecting multiple columns a comma must separate them. Also notice that the table Table_Demographics is given the pseudonym of A and the table Table_Cars is given the -
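
The join condition described in the excerpt above can be tried on a small scale. What follows is a minimal sketch, not the paper's SAS code: it uses Python's built-in `sqlite3` module in place of SAS Proc SQL and DB2, the sample rows are invented, and the helper names `build_demo_db`, `cartesian_count`, and `joined_rows` are hypothetical. The `State` column is assumed only because the excerpt's WHERE clause refers to `A.State`.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with invented sample rows (illustration only)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE Table_Demographics (Name TEXT, Age INTEGER, City TEXT, State TEXT);
        CREATE TABLE Table_Cars (Name TEXT, Color TEXT);
        INSERT INTO Table_Demographics VALUES
            ('Ann', 25, 'Syracuse', 'New York'),
            ('Bob', 28, 'Portland', 'Maine'),
            ('Cy',  45, 'Syracuse', 'New York');
        INSERT INTO Table_Cars VALUES
            ('Ann', 'Red'), ('Bob', 'Blue'), ('Cy', 'Green');
        """
    )
    return conn


def cartesian_count(conn):
    """Count rows when no join condition is given: every row pairs with every row."""
    query = "SELECT COUNT(*) FROM Table_Demographics A, Table_Cars B"
    return conn.execute(query).fetchone()[0]


def joined_rows(conn):
    """Run the excerpt's query; the ON clause matches each person to their own car."""
    query = """
        SELECT A.Name, A.Age, A.City, B.Color
        FROM Table_Demographics A
        INNER JOIN Table_Cars B ON A.Name = B.Name
        WHERE A.Age < 30 AND (A.City = 'Syracuse' OR A.State = 'Maine')
        ORDER BY A.Name
    """
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    conn = build_demo_db()
    print("rows without a join condition:", cartesian_count(conn))
    for row in joined_rows(conn):
        print(row)
```

With three rows in each table, the query without a join condition pairs every person with every car, while the join condition keeps only matching names before the WHERE filter is applied. The row counts here are tiny; the excerpt's concern is the same effect on a 30 million-row table.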

Part 2: The Next Generation iSeries... Simplicity in an On Demand World

IBM eServer™ iSeries™ GP03 Technical Overview - Part 2 The Next Generation iSeries... Simplicity in an On Demand World January, May 2003 Announcements © 2000-2003 IBM Corporation IBM eServer iSeries The Customer Challenge: Solving the Cost³ Equation 2. Cost of Ongoing Operations ... Ongoing cost of skills to deploy and manage solutions Enterprise IT management Made Simple OS/400 V5R2 Highlights Performance at your fingertips Flexible Capacity Upgrade on Demand i825, i870 and i890 Dynamic logical partitioning for award-winning 64-bit Linux® Intuitive iSeries Navigator workload management tools Adaptive storage virtualization for high availability Mainframe-class functionality with switched disk cluster management Self-optimizing, multiple IBM DB2® UDB images for business unit consolidation Extensive Windows server management now supports Microsoft® Cluster Service Flexible, secure management of e-business infrastructure Industry's first IBM Autonomous Computing Initiative inspired Enterprise Identity Mapping facilitates true single signon High performance Apache Web serving with secure sockets and caching accelerators Simple and pervasive operations with wireless-optimized Web-ready micro-drivers Notes: Enterprise IT Management Made Simple OS/400 V5R2 builds on the mainframe-class management functions of OS/400 V5R1, such as dynamic logical partitioning with built-in graphical management tools such as iSeries Navigator. OS/400 V5R2 continues to focus on enterprise-class management tools with new self-managing technologies from IBM's Autonomic Computing project. V5R2 also extends many of the virtualization technologies available on the iSeries, to further assist clustering and business continuity solutions. For example, switched disk cluster services are extended with V5R2 to support database objects. -

Toad for IBM DB2 Release Notes

Toad for IBM DB2 7.1.x Release Notes. Monday, November 18, 2019. Contents: About Toad for IBM DB2; New Features; System Requirements; Resolved Issues and Enhancements; Known Issues; Third Party Known Issues; Getting Started; Toad for DB2 Editions; Product Licensing; Globalization; About Us; Copyright. About Toad for IBM DB2: With Toad, developers and database administrators can use a familiar, proven tool to manage databases. Toad empowers developers and administrators, of varying skill levels, to rapidly create and execute queries, automate database object management, and develop SQL more efficiently. Toad also provides utilities to compare, extract and search for objects, manage projects, import/export data and administer the database, while increasing your productivity and delivering access to an active user community. Toad for IBM DB2 7.1.2 is a patch release with new features, security enhancements and resolved issues. New Features: In Toad for IBM DB2 7.1 the following new features were implemented: Security and Installation Enhancements. For this release of Toad for IBM DB2 we have provided internal security adjustments. We have revised a list of third party components in use according to their known and potential vulnerabilities. Toad uses only reliable and safe components by third party vendors. See the list of third party components in the Toad for IBM DB2 help file, section About Us | Third Party Components. Our Toad installers are now based on the WiX Toolset, which improves user experience and performance of the installation process. See Resolved Issues for more enhancements and resolved issues in this release of Toad for IBM DB2. -

DB2 Database

IBM TRIRIGA Wiki: DB2 database. Updated December 4, 2019 by Jay.Manaloto. Tags: db2, db2_database, db2_performance, db2_tuning, performance, reserve_performance, system_performance. Best Practices for System Performance. 5 Database Server Tuning and Maintenance (continued): 5.3 IBM DB2 Database (was 5.6); 5.3.1 IBM DB2 Database Server Tuning (was 5.2); a. DB2 Automatic Buffer Pool Size and Auto Extends (was 5.5.5); b. DB2 Diagnostic Log (was 5.5.6); 5.3.2 IBM DB2 Application Platform Indexes (was 5.4.2.a); 5.3.3 Reserve Indexes for DB2 (was 5.4.2.d); 5.3.4 Lease Indexes for DB2; 5.3.5 Internal Lease Benchmark Findings; a. Performance Benchmark Index Objectives; b. Performance Environments; b.1 Data-Load Hardware Environment; b.2 Multi-User Benchmark Test Environment; b.3 Software Environment; b.4 Key Configurations; b.4.1 IBM DB2 Database Server; b.4.2 IBM WebSphere Application Server; b.4.3 Operating System; b.4.4 IBM TRIRIGA Platform; c. -

A Survey: Security Perspectives of ORACLE and IBM-DB2 Databases

International Journal of Scientific and Research Publications, Volume 3, Issue 1, January 2013, ISSN 2250-3153. A Survey: Security Perspectives of ORACLE and IBM-DB2 Databases. Lavanya Pamulaparty*, T. Praveen Kumar**, P. Vijaya Babu Varma**. * Associate Professor and Head of CSE, Methodist College of Engineering & Technology. ** Assistant Professor, Dept. of CSE, Methodist College of Engineering & Technology. *** Assistant Professor, Dept. of CSE, Methodist College of Engineering & Technology. Abstract- As storage of data plays an integral part of databases, security issues become major concerns. Relational databases hold a significant portion of data stored in software, therefore today’s database purchase decisions revolve around how secure the product is. This paper provides a categorical feature comparison between Oracle9i Database (Oracle) and IBM DB2 Universal Database (DB2), in addition to examining features provided in the SecureWay product line from Tivoli, an IBM subsidiary [7]. It explores the impact of IBM’s and Oracle’s security models on users seeking to protect their critical information systems and contrasts IBM’s strategy of building security outside of the DB2 database against Oracle’s strategy of securing information in the database server [6]. In addition to the [...] independent assurance of the security built into DB2. Whereas Oracle has undergone multiple evaluations of its database, IBM has failed to have independent experts formally evaluate DB2, making it difficult to qualify their assertions about their security implementations. Oracle’s business model is to secure products out-of-the-box, and IBM’s is to make customers pay to secure the products they purchase. This divergence in approach demonstrates the value of security to these database competitors and the resulting security built-in to their customers’ deployments [7]. II. -

z/OS Basics Preface

Contents. Preface: How this course is organized; How each topic is organized. Part 1. Introduction to z/OS and the mainframe environment. Chapter 1. Introduction to the new mainframe: 1.1 The new mainframe; 1.2 The S/360: A turning point in mainframe history; 1.3 An evolving architecture; 1.4 Mainframes in our midst; 1.5 What is a mainframe?; 1.6 Who uses mainframe computers?; 1.7 Factors contributing to mainframe use; 1.8 Typical mainframe workloads; 1.9 Roles in the mainframe world; 1.10 z/OS and other mainframe operating systems; 1.11 Summary. Chapter 2. z/OS overview: 2.1 What is an operating system?; 2.2 Overview of z/OS facilities; 2.3 What is z/OS?; 2.4 Virtual storage and other mainframe concepts; 2.5 What is workload management?; 2.6 I/O and data management; 2.7 Supervising the execution of work in the system; 2.8 Defining characteristics of z/OS; 2.9 Licensed programs for z/OS; 2.10 Middleware for z/OS; 2.11 A brief comparison of z/OS and UNIX; 2.12 Summary. Chapter 3. TSO/E, ISPF, and UNIX: Interactive facilities of z/OS: 3.1 How do we interact with z/OS?; 3.2 TSO overview; 3.3 ISPF overview; 3.4 z/OS UNIX interactive interfaces; 3.5 Summary