Delaunay Refinement Algorithms Steven Elliot

Total Pages: 16

File Type: pdf, Size: 1020 Kb


Recommended publications


A Fast Algorithm for Generating Constrained Delaunay Triangulations

0045-7949/93 $6.00 + 0.00 © 1993 Pergamon Press Ltd. A FAST ALGORITHM FOR GENERATING CONSTRAINED DELAUNAY TRIANGULATIONS. S. W. SLOAN, Department of Civil Engineering and Surveying, University of Newcastle, Shortland, NSW 2308, Australia (Received 4 March 1992)

Abstract: A fast algorithm for generating constrained two-dimensional Delaunay triangulations is described. The scheme permits certain edges to be specified in the final triangulation, such as those that correspond to domain boundaries or natural interfaces, and is suitable for mesh generation and contour plotting applications. Detailed timing statistics indicate that its CPU time requirement is roughly proportional to the number of points in the data set. Subject to the conditions imposed by the edge constraints, the Delaunay scheme automatically avoids the formation of long thin triangles and thus gives high quality grids. A major advantage of the method is that it does not require extra points to be added to the data set in order to ensure that the specified edges are present.

INTRODUCTION. Triangulation schemes are used in a variety of scientific applications including contour plotting, volume estimation, and mesh generation for finite element analysis. Some of the most successful techniques are undoubtedly those that are based on the Delaunay triangulation. To describe the construction of a Delaunay triangulation, and hence explain some of its properties, it is convenient to consider the corresponding Voronoi ...

... to be degenerate since two valid Delaunay triangulations are possible. Although it leads to a loss of uniqueness, degeneracy is seldom a cause for concern since a triangulation may always be generated by making an arbitrary, but consistent, choice between two alternative patterns. One major advantage of the Delaunay triangulation, as opposed to a triangulation constructed heuristically, is that it automatically avoids the creation of long thin triangles, with small included angles, wherever this is possible.

An Approach of Using Delaunay Refinement to Mesh Continuous Height Fields

DEGREE PROJECT IN TECHNOLOGY, FIRST CYCLE, 15 CREDITS. STOCKHOLM, SWEDEN 2017. An approach of using Delaunay refinement to mesh continuous height fields. NOAH TELL, ANTON THUN. KTH ROYAL INSTITUTE OF TECHNOLOGY, SCHOOL OF COMPUTER SCIENCE AND COMMUNICATION. Degree Programme in Computer Science. Date: June 5, 2017. Supervisor: Alexander Kozlov. Examiner: Örjan Ekeberg. Swedish title: En metod att använda Delaunay-raffinemang för att skapa polygonytor av kontinuerliga höjdfält.

Abstract: Delaunay refinement is a mesh triangulation method with the goal of generating well-shaped triangles to obtain a valid Delaunay triangulation. In this thesis, an approach of using this method for meshing continuous height field terrains is presented using Perlin noise as the height field. The Delaunay approach is compared to grid-based meshing to verify that the theoretical time complexity O(n log n) holds and how accurately and deterministically the Delaunay approach can represent the height field. However, even though grid-based mesh generation is faster due to an O(n) time complexity, the focus of the report is to find out if Delaunay refinement can be used to generate meshes quickly enough for real-time applications. As the available memory for rendering the meshes is limited, a solution for providing a cohesive mesh surface is presented using a hole filling algorithm, since the Delaunay approach ends up leaving gaps in the mesh when a chunk division is used to limit the total mesh count present in the application.

Triangulation of Simple 3D Shapes with Well-Centered Tetrahedra

TRIANGULATION OF SIMPLE 3D SHAPES WITH WELL-CENTERED TETRAHEDRA. EVAN VANDERZEE, ANIL N. HIRANI, AND DAMRONG GUOY

Abstract. A completely well-centered tetrahedral mesh is a triangulation of a three dimensional domain in which every tetrahedron and every triangle contains its circumcenter in its interior. Such meshes have applications in scientific computing and other fields. We show how to triangulate simple domains using completely well-centered tetrahedra. The domains we consider here are space, infinite slab, infinite rectangular prism, cube, and regular tetrahedron. We also demonstrate single tetrahedra with various combinations of the properties of dihedral acuteness, 2-well-centeredness, and 3-well-centeredness.

1. Introduction. In this paper we demonstrate well-centered triangulation of simple domains in $\mathbb{R}^3$. A well-centered simplex is one for which the circumcenter lies in the interior of the simplex [8]. This definition is further refined to that of a k-well-centered simplex, which is one whose k-dimensional faces have the well-centeredness property. An n-dimensional simplex which is k-well-centered for all 1 ≤ k ≤ n is called completely well-centered [15]. These properties extend to simplicial complexes, i.e. to meshes. Thus a mesh can be completely well-centered or k-well-centered if all its simplices have that property. For triangles, being well-centered is the same as being acute-angled. But a tetrahedron can be dihedral acute without being 3-well-centered, as we show by example in Sect. ...
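The claim that a triangle is well-centered exactly when it is acute can be checked numerically. The following is a minimal sketch, not code from the paper: the helper names `circumcenter`, `strictly_inside`, and `is_acute` are hypothetical, and the triangle is assumed to be non-degenerate.

```python
def orient(p, q, r):
    """Twice the signed area of triangle pqr (positive if counterclockwise)."""
    return (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])


def circumcenter(a, b, c):
    """Circumcenter of a non-degenerate 2D triangle abc."""
    d = 2.0 * orient(a, b, c)
    if d == 0:
        raise ValueError("degenerate triangle")
    a2 = a[0] ** 2 + a[1] ** 2
    b2 = b[0] ** 2 + b[1] ** 2
    c2 = c[0] ** 2 + c[1] ** 2
    ux = (a2 * (b[1] - c[1]) + b2 * (c[1] - a[1]) + c2 * (a[1] - b[1])) / d
    uy = (a2 * (c[0] - b[0]) + b2 * (a[0] - c[0]) + c2 * (b[0] - a[0])) / d
    return (ux, uy)


def strictly_inside(p, a, b, c):
    """True if p lies in the open interior of triangle abc."""
    s1, s2, s3 = orient(a, b, p), orient(b, c, p), orient(c, a, p)
    return (s1 > 0 and s2 > 0 and s3 > 0) or (s1 < 0 and s2 < 0 and s3 < 0)


def is_acute(a, b, c):
    """True if every angle of triangle abc is strictly below 90 degrees."""
    def dot_at(p, q, r):
        # Dot product of the edge vectors p->q and p->r.
        return (q[0] - p[0]) * (r[0] - p[0]) + (q[1] - p[1]) * (r[1] - p[1])
    return dot_at(a, b, c) > 0 and dot_at(b, a, c) > 0 and dot_at(c, a, b) > 0


acute = ((0, 0), (4, 0), (2, 3))
obtuse = ((0, 0), (4, 0), (2, 0.5))
for tri in (acute, obtuse):
    print(is_acute(*tri), strictly_inside(circumcenter(*tri), *tri))
```

For both sample triangles the two predicates agree: the acute triangle contains its circumcenter and the obtuse one does not.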

Computing Three-Dimensional Constrained Delaunay Refinement Using the GPU

Computing Three-dimensional Constrained Delaunay Refinement Using the GPU. Zhenghai Chen and Tiow-Seng Tan, School of Computing, National University of Singapore

ABSTRACT. We propose the first GPU algorithm for the 3D triangulation refinement problem. For an input of a piecewise linear complex G and a constant B, it produces, by adding Steiner points, a constrained Delaunay triangulation conforming to G and containing tetrahedra mostly of radius-edge ratios smaller than B. Our implementation of the algorithm shows that it can be an order of magnitude faster than the best CPU algorithm while using a similar amount of Steiner points to produce triangulations of comparable quality.

CCS CONCEPTS. • Theory of computation → Computational geometry; • Com-

... collectively called elements, are split in this order in many rounds until there are no more bad tetrahedra. Each round, the splitting is done to many elements in parallel with many GPU threads. The algorithm first calculates the so-called splitting points that can split elements into smaller ones, then decides on a subset of them to be Steiner points for actual insertions into the triangulation T. Note first that a splitting point is calculated by a GPU thread as the midpoint of a subsegment, the circumcenter of the circumcircle of the subface, and the circumcenter of the circumsphere of the tetrahedron. Note second that not all splitting points calculated can be inserted as Steiner points in a same round as they together can potentially create undesirable short edges in T to cause non-termination of the algorithm.
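To make the quantities in this excerpt concrete, the sketch below computes, on the CPU and for a single element, the midpoint of a subsegment, the circumcenter of a tetrahedron, and its radius-edge ratio. It is an illustration under stated assumptions rather than the authors' GPU code: the function names are hypothetical, and a tetrahedron is assumed here to be "bad" when its radius-edge ratio exceeds the bound B.

```python
import itertools
import math


def sub(p, q):
    return (p[0] - q[0], p[1] - q[1], p[2] - q[2])


def dot(p, q):
    return p[0] * q[0] + p[1] * q[1] + p[2] * q[2]


def cross(p, q):
    return (p[1] * q[2] - p[2] * q[1],
            p[2] * q[0] - p[0] * q[2],
            p[0] * q[1] - p[1] * q[0])


def midpoint(p, q):
    """Splitting point of a subsegment pq."""
    return tuple((pi + qi) / 2.0 for pi, qi in zip(p, q))


def tet_circumcenter(a, b, c, d):
    """Splitting point of a tetrahedron: center of its circumsphere."""
    u, v, w = sub(b, a), sub(c, a), sub(d, a)
    det = dot(u, cross(v, w))
    if det == 0:
        raise ValueError("degenerate tetrahedron")
    vw, wu, uv = cross(v, w), cross(w, u), cross(u, v)
    uu, vv, ww = dot(u, u), dot(v, v), dot(w, w)
    # Offset x from a satisfies x.u = |u|^2/2, x.v = |v|^2/2, x.w = |w|^2/2.
    x = [(uu * vw[i] + vv * wu[i] + ww * uv[i]) / (2.0 * det) for i in range(3)]
    return (a[0] + x[0], a[1] + x[1], a[2] + x[2])


def radius_edge_ratio(a, b, c, d):
    """Circumradius divided by the shortest edge length."""
    center = tet_circumcenter(a, b, c, d)
    radius = math.dist(center, a)
    shortest = min(math.dist(p, q)
                   for p, q in itertools.combinations((a, b, c, d), 2))
    return radius / shortest


def is_bad(tet, B):
    # Assumption: "bad" means the radius-edge ratio exceeds the bound B.
    return radius_edge_ratio(*tet) > B


corner_tet = ((0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1))
print(tet_circumcenter(*corner_tet), radius_edge_ratio(*corner_tet))
```

A sliver-like tetrahedron, with a large circumradius relative to its shortest edge, would be flagged by `is_bad` and its circumcenter proposed as a splitting point.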

Triangulations and Simplex Tilings of Polyhedra

Triangulations and Simplex Tilings of Polyhedra by Braxton Carrigan. A dissertation submitted to the Graduate Faculty of Auburn University in partial fulfillment of the requirements for the Degree of Doctor of Philosophy. Auburn, Alabama, August 4, 2012. Keywords: triangulation, tiling, tetrahedralization. Copyright 2012 by Braxton Carrigan. Approved by Andras Bezdek, Chair, Professor of Mathematics; Krystyna Kuperberg, Professor of Mathematics; Wlodzimierz Kuperberg, Professor of Mathematics; Chris Rodger, Don Logan Endowed Chair of Mathematics.

Abstract. This dissertation summarizes my research in the area of Discrete Geometry. The particular problems of Discrete Geometry discussed in this dissertation are concerned with partitioning three dimensional polyhedra into tetrahedra. The most widely used partition of a polyhedron is triangulation, where a polyhedron is broken into a set of convex polyhedra all with four vertices, called tetrahedra, joined together in a face-to-face manner. If one does not require the tetrahedra to meet along common faces, then we say that the partition is a tiling. Many of the algorithmic implementations in the field of Computational Geometry are dependent on the results of triangulation. For example, computing the volume of a polyhedron is done by adding volumes of tetrahedra of a triangulation. In Chapter 2 we will provide a brief history of triangulation and present a number of known non-triangulable polyhedra. In this dissertation we will particularly address non-triangulable polyhedra. Our research was motivated by a recent paper of J. Rambau [20], who showed that a nonconvex twisted prism cannot be triangulated. As in algebra, when proving a number is not divisible by 2012 one may show it is not divisible by 2, we will revisit Rambau's results and show a new shorter proof that the twisted prism is non-triangulable by proving it is non-tilable.

Two Algorithms for Constructing a Delaunay Triangulation

International Journal of Computer and Information Sciences, Vol. 9, No. 3, 1980. Two Algorithms for Constructing a Delaunay Triangulation. D. T. Lee and B. J. Schachter. Received July 1978; revised February 1980.

This paper provides a unified discussion of the Delaunay triangulation. Its geometric properties are reviewed and several applications are discussed. Two algorithms are presented for constructing the triangulation over a planar set of N points. The first algorithm uses a divide-and-conquer approach. It runs in O(N log N) time, which is asymptotically optimal. The second algorithm is iterative and requires O(N^2) time in the worst case. However, its average case performance is comparable to that of the first algorithm.

KEY WORDS: Delaunay triangulation; triangulation; divide-and-conquer; Voronoi tessellation; computational geometry; analysis of algorithms.

1. INTRODUCTION. In this paper we consider the problem of triangulating a set of points in the plane. Let V be a set of N ≥ 3 distinct points in the Euclidean plane. We assume that these points are not all colinear. Let E be the set of $\binom{N}{2}$ straight-line segments (edges) between vertices in V. Two edges $e_1, e_2 \in E$, $e_1 \neq e_2$, will be said to properly intersect if they intersect at a point other than their endpoints. A triangulation of V is a planar straight-line graph G(V, E') for which E' is a maximal subset of E such that no two edges of E' properly intersect.(16)

(Title footnote: This work was supported in part by the National Science Foundation under grant MCS-76-17321 and the Joint Services Electronics Program under contract DAAB-07-72-0259.)
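The definition of proper intersection above can be turned into a predicate with orientation tests. The sketch below is a simplification under a stated assumption: it reports only transversal crossings at a point interior to both segments, and treats touching at an endpoint and collinear overlap as not proper. The function names are hypothetical, and integer coordinates keep the arithmetic exact.

```python
from itertools import combinations


def orient(p, q, r):
    """Positive if p, q, r turn counterclockwise, negative if clockwise, 0 if collinear."""
    return (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])


def properly_intersect(e1, e2):
    """True if segments e1 and e2 cross at a point interior to both.

    Assumption: endpoint contact and collinear overlap are not reported.
    """
    (p1, p2), (p3, p4) = e1, e2
    d1, d2 = orient(p3, p4, p1), orient(p3, p4, p2)
    d3, d4 = orient(p1, p2, p3), orient(p1, p2, p4)
    return d1 * d2 < 0 and d3 * d4 < 0


def is_crossing_free(edges):
    """True if no two edges in the collection properly intersect."""
    return not any(properly_intersect(a, b) for a, b in combinations(edges, 2))


diag1 = ((0, 0), (2, 2))
diag2 = ((0, 2), (2, 0))
side = ((0, 0), (2, 0))
print(properly_intersect(diag1, diag2), properly_intersect(diag1, side))
```

A candidate edge set E' for a triangulation would have to pass `is_crossing_free`; maximality of E' is a separate condition that this sketch does not check.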

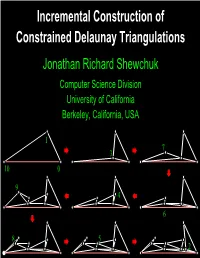

Incremental Construction of Constrained Delaunay Triangulations Jonathan Richard Shewchuk Computer Science Division University of California Berkeley, California, USA

Incremental Construction of Constrained Delaunay Triangulations. Jonathan Richard Shewchuk, Computer Science Division, University of California, Berkeley, California, USA

The Delaunay Triangulation. An edge is locally Delaunay if the two triangles sharing it have no vertex in each other's circumcircles. A Delaunay triangulation is a triangulation of a point set in which every edge is locally Delaunay.

Constraining Edges. Sometimes we need to force a triangulation to contain specified edges. Examples: nonconvex shapes; internal boundaries; discontinuities in interpolated functions.

2 Ways to Recover Segments. Conforming Delaunay triangulations: edges are all locally Delaunay. Worst-case input needs Ω(n²) to O(n^2.5) extra vertices. Constrained Delaunay triangulations (CDTs): edges are locally Delaunay or are domain boundaries.

Goal. Input: planar straight line graph (PSLG). Output: constrained Delaunay triangulation (CDT). Every edge is locally Delaunay except segments.

Randomized Incremental CDT Construction. Start with Delaunay triangulation of vertices. Do segment location. Insert segment.

Topics. Inserting a segment in expected linear time.
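The locally Delaunay condition on these slides reduces to the standard in-circle determinant. The sketch below is illustrative and not taken from the slides: `in_circle` and `is_locally_delaunay` are hypothetical names, cocircular points are treated as satisfying the condition, and integer coordinates are used so that the determinant is exact.

```python
def orient(a, b, c):
    """Positive if a, b, c are counterclockwise, negative if clockwise."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def in_circle(a, b, c, d):
    """True if d lies strictly inside the circumcircle of triangle abc."""
    adx, ady = a[0] - d[0], a[1] - d[1]
    bdx, bdy = b[0] - d[0], b[1] - d[1]
    cdx, cdy = c[0] - d[0], c[1] - d[1]
    ad2 = adx * adx + ady * ady
    bd2 = bdx * bdx + bdy * bdy
    cd2 = cdx * cdx + cdy * cdy
    det = (ad2 * (bdx * cdy - bdy * cdx)
           - bd2 * (adx * cdy - ady * cdx)
           + cd2 * (adx * bdy - ady * bdx))
    # The determinant's sign assumes counterclockwise abc; multiplying by the
    # orientation makes the test independent of vertex order.
    return det * orient(a, b, c) > 0


def is_locally_delaunay(p, q, r, s):
    """Edge pq is shared by triangles pqr and pqs (r and s on opposite sides).

    The edge is locally Delaunay if s is not strictly inside the
    circumcircle of pqr; by symmetry this also covers r and triangle pqs.
    """
    return not in_circle(p, q, r, s)


p, q, r, s = (0, 0), (4, 0), (2, 1), (2, -1)
print(is_locally_delaunay(p, q, r, s))  # the long edge pq
print(is_locally_delaunay(r, s, p, q))  # the flipped edge rs
```

In this flat quadrilateral the long diagonal pq fails the test while the short diagonal rs passes, which is the situation an edge flip repairs.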

Generalized Barycentric Coordinate Finite Element Methods on Polytope Meshes

Generalized Barycentric Coordinate Finite Element Methods on Polytope Meshes. Andrew Gillette, Department of Mathematics, University of Arizona. (Andrew Gillette - U. Arizona, GBC FEM on Polytope Meshes, CERMICS - June 2014, 1 / 41)

What are a priori FEM error estimates?

Poisson's equation in $\mathbb{R}^n$: Given a domain $D \subset \mathbb{R}^n$ and $f : D \to \mathbb{R}$, find $u$ such that

strong form: $-\Delta u = f$, $\quad u \in H^2(D)$

weak form: $\int_D \nabla u \cdot \nabla \phi = \int_D f \phi$, $\quad \forall \phi \in H^1(D)$

discrete form: $\int_D \nabla u_h \cdot \nabla \phi_h = \int_D f \phi_h$, $\quad \forall \phi_h \in V_h$, where $V_h \subset H^1(D)$ is finite dimensional

Typical finite element method: Mesh $D$ by polytopes $\{P\}$ with vertices $\{\mathbf{v}_i\}$; define $h := \max \operatorname{diam}(P)$. Fix basis functions $\lambda_i$ with local piecewise support, e.g. barycentric functions. Define $u_h$ such that it uses the $\lambda_i$ to approximate $u$, e.g. $u_h := \sum_i u(\mathbf{v}_i)\lambda_i$.

A linear system for $u_h$ can then be derived, admitting an a priori error estimate:

$$\underbrace{\|u - u_h\|_{H^1(P)}}_{\text{approximation error}} \le \underbrace{C h^p\, |u|_{H^{p+1}(P)}}_{\text{optimal error bound}}, \quad \forall u \in H^{p+1}(P),$$

provided that the $\lambda_i$ span all degree $p$ polynomials on each polytope $P$.

The generalized barycentric coordinate approach

Let $P$ be a convex polytope with vertex set $V$. We say that $\lambda_v : P \to \mathbb{R}$ are generalized barycentric coordinates (GBCs) on $P$ if they satisfy $\lambda_v \ge 0$ on $P$ and

$$L = \sum_{v \in V} L(\mathbf{v}_v)\lambda_v, \quad \forall\, L : P \to \mathbb{R} \text{ linear}.$$

Familiar properties are implied by this definition:

$$\underbrace{\sum_{v \in V} \lambda_v \equiv 1}_{\text{partition of unity}} \qquad \underbrace{\sum_{v \in V} \mathbf{v}_v \lambda_v(\mathbf{x}) = \mathbf{x}}_{\text{linear precision}} \qquad \underbrace{\lambda_{v_i}(\mathbf{v}_j) = \delta_{ij}}_{\text{interpolation}}$$

[Figure: traditional FEM compared with a family of GBC reference elements; labels: unit diameter, affine map T, bilinear map, reference element, physical element, Ω.]
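On a triangle, the classical barycentric coordinates are the simplest instance of the GBC definition, so the three listed properties can be verified directly. This is a minimal sketch for the triangle case only; the name `barycentric` and the sample triangle are illustrative assumptions, and general polytopes need other constructions that are not shown here.

```python
def orient(p, q, r):
    """Twice the signed area of triangle pqr."""
    return (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])


def barycentric(tri, x):
    """Barycentric coordinates of x with respect to triangle tri.

    Each coordinate is the ratio of the sub-triangle area opposite a vertex
    to the area of the whole triangle.
    """
    v0, v1, v2 = tri
    total = orient(v0, v1, v2)
    if total == 0:
        raise ValueError("degenerate triangle")
    return (orient(v1, v2, x) / total,
            orient(v2, v0, x) / total,
            orient(v0, v1, x) / total)


tri = ((0.0, 0.0), (4.0, 0.0), (0.0, 4.0))
x = (1.0, 2.0)
lam = barycentric(tri, x)

# Partition of unity: the coordinates sum to one.
unity = sum(lam)
# Linear precision: the weighted vertex average reproduces x.
recon = tuple(sum(l * v[k] for l, v in zip(lam, tri)) for k in range(2))
# Interpolation: coordinate i is 1 at vertex i and 0 at the other vertices.
at_vertices = [barycentric(tri, v) for v in tri]
print(lam, unity, recon, at_vertices)
```

Non-negativity of the coordinates holds for any `x` inside the triangle, which is the remaining condition in the definition.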

Delaunay Triangulations in the Plane

DELAUNAY TRIANGULATIONS IN THE PLANE. HANG SI

Contents. Introduction. 1. Definitions and properties: 1.1. Voronoi diagrams; 1.2. The empty circumcircle property; 1.3. The lifting transformation. 2. Lawson's flip algorithm: 2.1. The Delaunay lemma; 2.2. Edge flips and Lawson's algorithm; 2.3. Optimal properties of Delaunay triangulations; 2.4. The (undirected) flip graph. 3. Randomized incremental flip algorithm: 3.1. Inserting a vertex; 3.2. Description of the algorithm; 3.3. Worst-case running time; 3.4. The expected number of flips; 3.5. Point location. Exercises. References.

Introduction. This chapter introduces the most fundamental geometric structures: the Delaunay triangulation as well as its dual, the Voronoi diagram. We will start with their definitions and properties. Then we introduce simple and efficient algorithms to construct them in the plane. We first learn a useful and straightforward algorithm, Lawson's edge flip algorithm, which transforms any triangulation into the Delaunay triangulation. We will prove its termination and correctness. From this algorithm, we will show many optimal properties of Delaunay triangulations. We then introduce a randomized incremental flip algorithm to construct Delaunay triangulations and prove its expected runtime is optimal.

1. Definitions and properties. This section introduces the Delaunay triangulation of a finite point set in the plane. It was introduced by the Russian mathematician Boris Nikolaevich Delone (1890–1980) in 1934 [4]. It is a triangulation with many optimal properties. There are many ways to define the Delaunay triangulation, each of which shows different properties of it.

Triangulations of Manifolds

TRIANGULATIONS OF MANIFOLDS. CIPRIAN MANOLESCU

In topology, a basic building block for spaces is the n-simplex. A 0-simplex is a point, a 1-simplex is a closed interval, a 2-simplex is a triangle, and a 3-simplex is a tetrahedron. In general, an n-simplex is the convex hull of n + 1 vertices in n-dimensional space. One constructs more complicated spaces by gluing together several simplices along their faces, and a space constructed in this fashion is called a simplicial complex. For example, the surface of a cube can be built out of twelve triangles, two for each face, as in the following picture:

[Figure: the surface of a cube divided into twelve triangles.]

Apart from simplicial complexes, manifolds form another fundamental class of spaces studied in topology. An n-dimensional topological manifold is a space that looks locally like the n-dimensional Euclidean space; i.e., such that it can be covered by open sets (charts) homeomorphic to $\mathbb{R}^n$. Furthermore, for the purposes of this note, we will only consider manifolds that are second countable and Hausdorff, as topological spaces. One can consider topological manifolds with additional structure: (i) A smooth manifold is a topological manifold equipped with a (maximal) open cover by charts such that the transition maps between charts are smooth ($C^\infty$); (ii) A $C^k$ manifold is similar to the above, but requiring that the transition maps are only $C^k$, for $0 \le k < \infty$. In particular, $C^0$ manifolds are the same as topological manifolds. For $k \ge 1$, it can be shown that every $C^k$ manifold has a unique compatible $C^\infty$ structure. Thus, for $k \ge 1$ the study of $C^k$ manifolds reduces to that of smooth manifolds.

From Triangulation to Simplex Mesh: a Simple and Efficient

From Triangulation to Simplex Mesh: a Simple and Efficient Transformation. Francisco J. GALDAMES (a, c), Fabrice JAILLET (b, c). (a) Department of Electrical Engineering, Universidad de Chile, Av. Tupper 2007, Santiago, Chile. (b) Université de Lyon, IUT Lyon 1, Département Informatique, F-01000, France. (c) Université de Lyon, CNRS, Université Lyon 1, LIRIS, équipe SAARA, UMR5205, F-69622, France. Preprint, April 2010.

Abstract. In the field of 3D images, relevant information can be difficult to interpret without further computer-aided processing. Generally, and this is particularly true in medical imaging, a segmentation process is run and coupled with a visualization of the delineated structures to help understanding the underlying information. To achieve the extraction of the boundary structures, deformable models are frequently used tools. Amongst all techniques, Simplex Meshes are valuable models thanks to their good propensity to handle a large variety of shape alterations altogether with a fine resolution and stability. However, despite all these great characteristics, Simplex Meshes are lacking to cope satisfyingly with other related tasks, as rendering, mechanical simulation or reconstruction from iso-surfaces. As a consequence, Triangulation Meshes are often preferred. In order to face this problem, we propose an accurate method to shift from a model to another, and conversely. For this, we are taking advantage of the fact that triangulation and simplex meshes are topologically duals, turning it into a natural swap between these two models. A difficulty arises as they are not geometrically equivalent, leading to loss of information and to geometry deterioration whenever a transformation between these dual meshes takes place.

Efficient Mesh Optimization Schemes Based on Optimal Delaunay

Comput. Methods Appl. Mech. Engrg. 200 (2011) 967–984. Journal homepage: www.elsevier.com/locate/cma

Efficient mesh optimization schemes based on Optimal Delaunay Triangulations. Long Chen (a), Michael Holst (b). (a) Department of Mathematics, University of California at Irvine, Irvine, CA 92697, USA. (b) Department of Mathematics, University of California at San Diego, La Jolla, CA 92093, USA.

Article history: Received 15 April 2010. Received in revised form 2 November 2010. Accepted 10 November 2010. Available online 16 November 2010.

Keywords: Mesh smoothing; Mesh optimization; Mesh generation; Delaunay triangulation; Optimal Delaunay Triangulation.

Abstract. In this paper, several mesh optimization schemes based on Optimal Delaunay Triangulations are developed. High-quality meshes are obtained by minimizing the interpolation error in the weighted $L^1$ norm. Our schemes are divided into classes of local and global schemes. For local schemes, several old and new schemes, known as mesh smoothing, are derived from our approach. For global schemes, a graph Laplacian is used in a modified Newton iteration to speed up the local approach. Our work provides a mathematical foundation for a number of mesh smoothing schemes often used in practice, and leads to a new global mesh optimization scheme. Numerical experiments indicate that our methods can produce well-shaped triangulations in a robust and efficient way. © 2010 Elsevier B.V. All rights reserved.

1. Introduction. We shall develop fast and efficient mesh optimization schemes based on Optimal Delaunay Triangulations (ODTs) [1–3].

... widely used harmonic energy in moving mesh methods [8–10], summation of weighted edge lengths [11,12], and the distortion energy used in the approach of Centroid Voronoi Tessellation ...