A Brief Review of Generalized Entropies

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Guide for the Use of the International System of Units (SI)

Guide for the Use of the International System of Units (SI). NIST Special Publication 811, 2008 Edition. Ambler Thompson (Technology Services) and Barry N. Taylor (Physics Laboratory), National Institute of Standards and Technology, Gaithersburg, MD 20899. (Supersedes NIST Special Publication 811, 1995 Edition, April 1995.) March 2008. U.S. Department of Commerce, Carlos M. Gutierrez, Secretary. National Institute of Standards and Technology, James M. Turner, Acting Director. Natl. Inst. Stand. Technol. Spec. Publ. 811, 2008 Ed., 85 pages (March 2008; 2nd printing November 2008). CODEN: NSPUE3. Note on 2nd printing: This 2nd printing dated November 2008 of NIST SP811 corrects a number of minor typographical errors present in the 1st printing dated March 2008. Preface: The International System of Units, universally abbreviated SI (from the French Le Système International d’Unités), is the modern metric system of measurement. Long the dominant measurement system used in science, the SI is becoming the dominant measurement system used in international commerce. The Omnibus Trade and Competitiveness Act of August 1988 [Public Law (PL) 100-418] changed the name of the National Bureau of Standards (NBS) to the National Institute of Standards and Technology (NIST) and gave to NIST the added task of helping U.S. -

Quantum Entanglement Inferred by the Principle of Maximum Tsallis Entropy

Quantum entanglement inferred by the principle of maximum Tsallis entropy. Sumiyoshi Abe (1) and A. K. Rajagopal (2). (1) College of Science and Technology, Nihon University, Funabashi, Chiba 274-8501, Japan. (2) Naval Research Laboratory, Washington D.C., 20375-5320, U.S.A. The problem of quantum state inference and the concept of quantum entanglement are studied using a non-additive measure in the form of Tsallis’ entropy indexed by the positive parameter q. The maximum entropy principle associated with this entropy along with its thermodynamic interpretation are discussed in detail for the Einstein-Podolsky-Rosen pair of two spin-1/2 particles. Given the data on the Bell-Clauser-Horne-Shimony-Holt observable, the analytic expression is given for the inferred quantum entangled state. It is shown that for q greater than unity, indicating the sub-additive feature of the Tsallis entropy, the entangled region is small and enlarges as one goes into the super-additive regime where q is less than unity. It is also shown that quantum entanglement can be quantified by the generalized Kullback-Leibler entropy. quant-ph/9904088, 26 Apr 1999. PACS numbers: 03.67.-a, 03.65.Bz. I. INTRODUCTION Entanglement is a fundamental concept highlighting non-locality of quantum mechanics. It is well known [1] that the Bell inequalities, which any local hidden variable theories should satisfy, can be violated by entangled states of a composite system described by quantum mechanics. Experimental results [2] suggest that naive local realism à la Einstein, Podolsky, and Rosen [3] may not actually hold. Related issues arising out of the concept of quantum entanglement are quantum cryptography, quantum teleportation, and quantum computation. -

On the Irresistible Efficiency of Signal Processing Methods in Quantum Computing

On the Irresistible Efficiency of Signal Processing Methods in Quantum Computing. Andreas Klappenecker (1,2; e-mail: [email protected]), Martin Rötteler (2; e-mail: [email protected]). (1) Department of Computer Science, Texas A&M University, College Station, TX 77843-3112, USA. (2) Institut für Algorithmen und Kognitive Systeme, Universität Karlsruhe (research group Quantum Computing, Professor Thomas Beth), Am Fasanengarten 5, D-76 128 Karlsruhe, Germany. February 1, 2008. arXiv:quant-ph/0111039v1, 7 Nov 2001. Abstract: We show that many well-known signal transforms allow highly efficient realizations on a quantum computer. We explain some elementary quantum circuits and review the construction of the Quantum Fourier Transform. We derive quantum circuits for the Discrete Cosine and Sine Transforms, and for the Discrete Hartley transform. We show that at most $O(\log^2 N)$ elementary quantum gates are necessary to implement any of those transforms for input sequences of length N. §1 Introduction: Quantum computers have the potential to solve certain problems at much higher speed than any classical computer. Some evidence for this statement is given by Shor’s algorithm to factor integers in polynomial time on a quantum computer. A crucial part of Shor’s algorithm depends on the discrete Fourier transform. The time complexity of the quantum Fourier transform is polylogarithmic in the length of the input signal. It is natural to ask whether other signal transforms allow for similar speed-ups. We briefly recall some properties of quantum circuits and construct the quantum Fourier transform. The main part of this paper is concerned with the construction of quantum circuits for the discrete Cosine transforms, for the discrete Sine transforms, and for the discrete Hartley transform. -

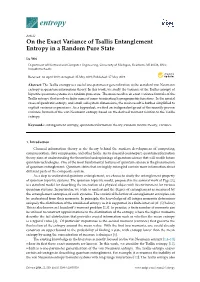

On the Exact Variance of Tsallis Entanglement Entropy in a Random Pure State

entropy Article On the Exact Variance of Tsallis Entanglement Entropy in a Random Pure State Lu Wei Department of Electrical and Computer Engineering, University of Michigan, Dearborn, MI 48128, USA; [email protected] Received: 26 April 2019; Accepted: 25 May 2019; Published: 27 May 2019 Abstract: The Tsallis entropy is a useful one-parameter generalization to the standard von Neumann entropy in quantum information theory. In this work, we study the variance of the Tsallis entropy of bipartite quantum systems in a random pure state. The main result is an exact variance formula of the Tsallis entropy that involves finite sums of some terminating hypergeometric functions. In the special cases of quadratic entropy and small subsystem dimensions, the main result is further simplified to explicit variance expressions. As a byproduct, we find an independent proof of the recently proven variance formula of the von Neumann entropy based on the derived moment relation to the Tsallis entropy. Keywords: entanglement entropy; quantum information theory; random matrix theory; variance 1. Introduction Classical information theory is the theory behind the modern development of computing, communication, data compression, and other fields. As its classical counterpart, quantum information theory aims at understanding the theoretical underpinnings of quantum science that will enable future quantum technologies. One of the most fundamental features of quantum science is the phenomenon of quantum entanglement. Quantum states that are highly entangled contain more information about different parts of the composite system. As a step to understand quantum entanglement, we choose to study the entanglement property of quantum bipartite systems. The quantum bipartite model, proposed in the seminal work of Page [1], is a standard model for describing the interaction of a physical object with its environment for various quantum systems. -

Generalized Ensemble Theory with Non-extensive Statistics (arXiv:1707.03526v1 [cond-mat.stat-mech], 12 Jul 2017)

Generalized Ensemble Theory with Non-extensive Statistics. Ke-Ming Shen,* Ben-Wei Zhang,† and En-Ke Wang. Key Laboratory of Quark & Lepton Physics (MOE) and Institute of Particle Physics, Central China Normal University, Wuhan 430079, China (Dated: October 17, 2018). The non-extensive canonical ensemble theory is reconsidered with the method of Lagrange multipliers by maximizing Tsallis entropy, with the constraint that the normalized term of Tsallis' q-average of physical quantities, the sum $\sum_j p_j^q$, is independent of the probability $p_i$ for Tsallis parameter q. The self-referential problem in the deduced probability and thermal quantities in non-extensive statistics is thus avoided, and thermodynamical relationships are obtained in a consistent and natural way. We also extend the study to the non-extensive grand canonical ensemble theory and obtain the q-deformed Bose-Einstein distribution as well as the q-deformed Fermi-Dirac distribution. The theory is further applied to the generalized Planck law to demonstrate the distinct behaviors of the various generalized q-distribution functions discussed in literature. I. INTRODUCTION In the last thirty years the non-extensive statistical mechanics, based on Tsallis entropy [1,2] and the corresponding deformed exponential function, has been developed and attracted a lot of attentions with a large amount of applications in rather diversified fields [3]. Tsallis non-extensive statistical mechanics is a generalization of the common Boltzmann-Gibbs (BG) statistical mechanics by postulating a generalized entropy of the classical one, $S = -k \sum_{i=1}^{W} p_i \ln p_i$: $S_q = -k \sum_{i=1}^{W} p_i^q \ln_q p_i$ (1), where k is a positive constant and denotes Boltzmann constant in BG statistical mechanics. -
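The entropy in Eq. (1) of the excerpt above can be evaluated numerically. The following is a minimal Python sketch, not code from the cited paper: it assumes the standard q-logarithm $\ln_q x = (x^{1-q} - 1)/(1 - q)$, which the excerpt names but does not define, and the function names `ln_q`, `tsallis_entropy`, and `bg_entropy` are illustrative choices.

```python
import math


def ln_q(x, q):
    """q-logarithm (assumed standard form): (x**(1-q) - 1) / (1 - q).

    At q = 1 the expression is singular, so the ordinary logarithm,
    which is its limit, is returned instead.
    """
    if math.isclose(q, 1.0):
        return math.log(x)
    return (x ** (1.0 - q) - 1.0) / (1.0 - q)


def tsallis_entropy(p, q, k=1.0):
    """S_q = -k * sum_i p_i**q * ln_q(p_i), skipping zero-probability states."""
    return -k * sum(pi ** q * ln_q(pi, q) for pi in p if pi > 0)


def bg_entropy(p, k=1.0):
    """Boltzmann-Gibbs form S = -k * sum_i p_i * ln(p_i)."""
    return -k * sum(pi * math.log(pi) for pi in p if pi > 0)


if __name__ == "__main__":
    uniform = [0.25] * 4
    for q in (0.5, 1.0, 2.0):
        print(q, tsallis_entropy(uniform, q), bg_entropy(uniform))
```

For a uniform distribution over four states, the q = 1 row reproduces the Boltzmann-Gibbs value, while other values of q move away from it, which is the sense in which $S_q$ generalizes $S$.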

Conceptual Inadequacy of the Shore and Johnson Axioms for Wide Classes of Complex Systems

Entropy 2015, 17, 2853-2861; doi:10.3390/e17052853. OPEN ACCESS. entropy, ISSN 1099-4300, www.mdpi.com/journal/entropy. Article: Conceptual Inadequacy of the Shore and Johnson Axioms for Wide Classes of Complex Systems. Constantino Tsallis (1,2). (1) Centro Brasileiro de Pesquisas Físicas and National Institute of Science and Technology for Complex Systems, Rua Xavier Sigaud 150, 22290-180, Rio de Janeiro - RJ, Brazil; E-Mail: [email protected]. (2) Santa Fe Institute, 1399 Hyde Park Road, Santa Fe, New Mexico 87501, NM, USA. Academic Editor: Antonio M. Scarfone. Received: 9 April 2015 / Accepted: 4 May 2015 / Published: 5 May 2015. Abstract: It is by now well known that the Boltzmann-Gibbs-von Neumann-Shannon logarithmic entropic functional ($S_{BG}$) is inadequate for wide classes of strongly correlated systems: see for instance the 2001 Brukner and Zeilinger’s Conceptual inadequacy of the Shannon information in quantum measurements, among many other systems exhibiting various forms of complexity. On the other hand, the Shannon and Khinchin axioms uniquely mandate the BG form $S_{BG} = -k \sum_i p_i \ln p_i$; the Shore and Johnson axioms follow the same path. Many natural, artificial and social systems have been satisfactorily approached with nonadditive entropies such as the $S_q = k \frac{1 - \sum_i p_i^q}{q - 1}$ one ($q \in \mathbb{R}$; $S_1 = S_{BG}$), basis of nonextensive statistical mechanics. Consistently, the Shannon 1948 and Khinchine 1953 uniqueness theorems have already been generalized in the literature, by Santos 1997 and Abe 2000 respectively, in order to uniquely mandate Sq. We argue here that the same remains to be done with the Shore and Johnson 1980 axioms. -
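The abstract above states that the nonadditive entropy reduces to the Boltzmann-Gibbs form at q = 1. As a short worked step (a standard first-order expansion, written here for illustration and not quoted from the article), assuming normalized probabilities $\sum_i p_i = 1$:

```latex
\begin{align*}
p_i^{q} &= p_i \, e^{(q-1)\ln p_i}
         = p_i \bigl[ 1 + (q-1)\ln p_i + O\bigl((q-1)^2\bigr) \bigr], \\
\sum_i p_i^{q} &= 1 + (q-1) \sum_i p_i \ln p_i + O\bigl((q-1)^2\bigr), \\
S_q = k\,\frac{1 - \sum_i p_i^{q}}{q-1}
    &= -k \sum_i p_i \ln p_i + O(q-1)
    \;\xrightarrow[\,q \to 1\,]{}\; S_{BG}.
\end{align*}
```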

Grand Canonical Ensemble of the Extended Two-Site Hubbard Model Via a Nonextensive Distribution

Grand canonical ensemble of the extended two-site Hubbard model via a nonextensive distribution. Felipe Américo Reyes Navarro (1,2,*), Email: [email protected]; Eusebio Castor Torres-Tapia (2), Email: [email protected]; Pedro Pacheco Peña (3), Email: [email protected]. (1) Facultad de Ciencias Naturales y Matemática, Universidad Nacional del Callao (UNAC), Av. Juan Pablo II 306, Bellavista, Callao, Peru. (2) Facultad de Ciencias Físicas, Universidad Nacional Mayor de San Marcos (UNMSM), Av. Venezuela s/n Cdra. 34, Apartado Postal 14-0149, Lima 14, Peru. (3) Universidad Nacional Tecnológica del Cono Sur (UNTECS), Av. Revolución s/n, Sector 3, Grupo 10, Mz. M Lt. 17, Villa El Salvador, Lima, Peru. (*) Corresponding author. Facultad de Ciencias Físicas, Universidad Nacional Mayor de San Marcos (UNMSM), Av. Venezuela s/n Cdra. 34, Apartado Postal 14-0149, Lima 14, Peru. Abstract: We hereby introduce a research about a grand canonical ensemble for the extended two-site Hubbard model, that is, we consider the intersite interaction term in addition to those of the simple Hubbard model. To calculate the thermodynamical parameters, we utilize the nonextensive statistical mechanics; specifically, we perform the simulations of magnetic internal energy, specific heat, susceptibility, and thermal mean value of the particle number operator. We found out that the addition of the intersite interaction term provokes a shifting in all the simulated curves. Furthermore, for some values of the on-site Coulombian potential, we realize that, near absolute zero, the consideration of a chemical potential varying with temperature causes a nonzero entropy. Keywords: Extended Hubbard model, Quantum statistical mechanics, Thermal properties of small particles. PACS: 75.10.Jm, 05.30.-d, 65.80.+n. Introduction: Currently, several researches exist on the subject of the application of a generalized statistics for magnetic systems in the literature [1-3]. -

Generalized Molecular Chaos Hypothesis and the H-Theorem: Problem of Constraints and Amendment of Nonextensive Statistical Mechanics

Generalized molecular chaos hypothesis and the H-theorem: Problem of constraints and amendment of nonextensive statistical mechanics. Sumiyoshi Abe (1,2,3). (1) Department of Physical Engineering, Mie University, Mie 514-8507, Japan (permanent address). (2) Institut Supérieur des Matériaux et Mécaniques Avancés, 44 F. A. Bartholdi, 72000 Le Mans, France. (3) Inspire Institute Inc., McLean, Virginia 22101, USA. Abstract: Quite unexpectedly, kinetic theory is found to specify the correct definition of average value to be employed in nonextensive statistical mechanics. It is shown that the normal average is consistent with the generalized Stosszahlansatz (i.e., molecular chaos hypothesis) and the associated H-theorem, whereas the q-average widely used in the relevant literature is not. In the course of the analysis, the distributions with finite cut-off factors are rigorously treated. Accordingly, the formulation of nonextensive statistical mechanics is amended based on the normal average. In addition, the Shore-Johnson theorem, which supports the use of the q-average, is carefully reexamined, and it is found that one of the axioms may not be appropriate for systems to be treated within the framework of nonextensive statistical mechanics. PACS number(s): 05.20.Dd, 05.20.Gg, 05.90.+m. I. INTRODUCTION There exist a number of physical systems that possess exotic properties in view of traditional Boltzmann-Gibbs statistical mechanics. They are said to be statistical-mechanically anomalous, since they often exhibit and realize broken ergodicity, strong correlation between elements, (multi)fractality of phase-space/configuration-space portraits, and long-range interactions, for example. In the past decade, nonextensive statistical mechanics [1,2], which is a generalization of the Boltzmann-Gibbs theory, has been drawing continuous attention as a possible theoretical framework for describing these systems. -

Nanothermodynamics – a Generic Approach to Material Properties at Nanoscale

Nanothermodynamics – A generic approach to material properties at nanoscale A. K. Rajagopal (1) , C. S. Pande (1) , and Sumiyoshi Abe (2) (1) Naval Research Laboratory, Washington D.C., 20375, USA (2) Institute of Physics, University of Tsukuba, Ibaraki 305-8571, Japan ABSTRACT Granular and nanoscale materials containing a relatively small number of constituents have been studied to discover how their properties differ from their macroscopic counterparts. These studies are designed to test how far the known macroscopic approaches such as thermodynamics may be applicable in these cases. A brief review of the recent literature on these topics is given as a motivation to introduce a generic approach called “Nanothermodynamics”. An important feature that must be incorporated into the theory is the non-additive property because of the importance of ‘surface’ contributions to the Physics of these systems. This is achieved by incorporating fluctuations into the theory right from the start. There are currently two approaches to incorporate this property: Hill (and further elaborated more recently with Chamberlin) initiated an approach by modifying the thermodynamic relations by taking into account the surface effects; the other generalizes Boltzmann-Gibbs statistical mechanics by relaxing the additivity properties of thermodynamic quantities to include nonextensive features of such systems. An outline of this generalization of the macroscopic thermodynamics to nano-systems will be given here. Invited presentation at the Indo-US Workshop on “Nanoscale -

Bits, Bans and Nats: Units of Measurement for the Amount of Information

Bits, bans and nats: units of measurement for the amount of information. June 2017. Surname, Name: Flores Asenjo, Santiago J. (sfl[email protected]). Department: Dept. of Communications. Centre: EPS de Gandia. Abstract: Everyone knows the bit as a unit of measurement for the amount of information, but few use other units that are equally valid both for this quantity and for entropy in the field of Information Theory. This article presents the nat and the ban (also called the hartley) and relates them to the bit. Objectives and prior knowledge: The learning objectives of this teaching article are presented in Table 1. Although this is a popular-science article, any knowledge of Information Theory that the reader has will help in assimilating the content.
Table 1: Learning objectives of the teaching article
1. Show alternative units to the bit for measuring the amount of information and entropy
2. Present the units ban (or hartley) and nat
3. Put each unit in the context of the history of Information Theory
4. Use Wikipedia as a reference tool
5. Encourage editing Wikipedia, completing the Spanish-language articles, once the concepts presented and their historical context have been learned
1 Introduction: bits. The bit is universally used as the basic unit of measurement for the amount of information. Its name comes from combining the two words binary digit, since it has historically been used to denote each of the two possible binary values used in computing (0 and 1). -
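The relationship between the three units in the excerpt above comes down to the base of the logarithm: base 2 for the bit, base e for the nat, and base 10 for the ban (hartley). The following Python sketch illustrates the conversion; it is not taken from the article, and the names `LOG_BASE`, `entropy`, and `convert` are illustrative choices.

```python
import math

# Logarithm base associated with each unit of information.
LOG_BASE = {"bit": 2.0, "nat": math.e, "ban": 10.0}


def entropy(p, unit="bit"):
    """Shannon entropy of distribution p, expressed in the requested unit."""
    base = LOG_BASE[unit]
    return -sum(pi * math.log(pi, base) for pi in p if pi > 0)


def convert(value, src, dst):
    """Convert an amount of information from unit src to unit dst.

    The amount in nats is value * ln(base_src); dividing by ln(base_dst)
    re-expresses it in the destination unit.
    """
    return value * math.log(LOG_BASE[src]) / math.log(LOG_BASE[dst])


if __name__ == "__main__":
    fair_coin = [0.5, 0.5]
    for unit in LOG_BASE:
        print(unit, entropy(fair_coin, unit))
    print("1 ban in bits:", convert(1.0, "ban", "bit"))
    print("1 nat in bits:", convert(1.0, "nat", "bit"))
```

Keeping the bases in a single table means the entropy and the conversion use the same definition of each unit, so a value computed in one unit and converted agrees with the value computed directly in the other.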

Shannon's Formula Wlog(1+SNR): a Historical Perspective

Shannon’s Formula W log(1 + SNR): A Historical Perspective, on the occasion of Shannon’s Centenary, Oct. 26th, 2016. Olivier Rioul <[email protected]>. Outline: Who is Claude Shannon?; Shannon’s Seminal Paper; Shannon’s Main Contributions; Shannon’s Capacity Formula; Hartley’s rule $C' = \log_2(1 + A/\Delta)$ is not Hartley’s; Many authors independently derived $C = \frac{1}{2}\log_2(1 + P/N)$ in 1948; Hartley’s rule is exact: $C' = C$ (a coincidence?); $C'$ is the capacity of the “uniform” channel; Shannon’s Conclusion. Claude Shannon (1916–2001), 100th birthday 2016. April 30, 1916: Claude Elwood Shannon was born in Petoskey, Michigan, USA. April 30, 2016: centennial day celebrated by Google; here Shannon is juggling with bits (1,0,0) in his communication scheme. “father of the information age” “the most important man.. -
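The two forms of the capacity formula mentioned in the slides above can be related numerically. This Python sketch is an illustration rather than material from the talk: it assumes a band-limited channel of bandwidth W sampled at the Nyquist rate of 2W samples per second, so that the per-sample capacity $\frac{1}{2}\log_2(1 + P/N)$ becomes $W \log_2(1 + \mathrm{SNR})$ bits per second. The function names are hypothetical.

```python
import math


def capacity_per_sample(signal_power, noise_power):
    """Capacity in bits per sample: 0.5 * log2(1 + P/N)."""
    return 0.5 * math.log2(1.0 + signal_power / noise_power)


def capacity_bits_per_second(bandwidth_hz, snr):
    """Capacity in bits per second: W * log2(1 + SNR), with SNR as a linear ratio."""
    return bandwidth_hz * math.log2(1.0 + snr)


if __name__ == "__main__":
    bandwidth_hz = 3000.0  # illustrative bandwidth, chosen for the example
    snr = 1023.0           # linear power ratio, not decibels
    per_sample = capacity_per_sample(snr, 1.0)
    # 2W samples per second times the per-sample capacity gives the same rate.
    print(2 * bandwidth_hz * per_sample)
    print(capacity_bits_per_second(bandwidth_hz, snr))
```

Note that the SNR argument is a plain power ratio; a value given in decibels would first need converting with `10 ** (snr_db / 10)`.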

Generalized Entropies and Statistical Mechanics ([cond-mat.stat-mech], 3 Sep 2004)

Generalized Entropies and Statistical Mechanics. Fariel Shafee, Department of Physics, Princeton University, Princeton, NJ 08540, USA. We consider the problem of defining free energy and other thermodynamic functions when the entropy is given as a general function of the probability distribution, including that for nonextensive forms. We find that the free energy, which is central to the determination of all other quantities, can be obtained uniquely numerically even when it is the root of a transcendental equation. In particular we study the cases of the Tsallis form and a new form proposed by us recently. We compare the free energy, the internal energy and the specific heat of a simple system of two energy states for each of these forms. PACS numbers: 05.70.-a, 05.90.+m, 89.90.+n. Keywords: entropy, nonextensive, probability distribution function, free energy, specific heat. I. INTRODUCTION We have recently [1] proposed a new form of nonextensive entropy which depends on a parameter similar to Tsallis entropy [2, 3, 4], and in a similar limit approaches Shannon’s classical extensive entropy. We have shown how the definition for this new form of entropy can arise naturally in terms of mixing of states in a phase cell when the cell is re-scaled, the parameter being a measure of the rescaling, and how Shannon’s coding theorem [5] elucidates such an approach. [...] heat in both cases and see how it changes with the change of the parameter at different temperatures. II. ENTROPY AND PDF The pdf is found by optimizing the function $L = S + \alpha\left(1 - \sum_i p_i\right) + \beta\left(U - \sum_i p_i E_i\right)$ (1)