Application of Immersive Technologies and Natural Language to Hyper-Redundant Robot Teleoperation

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Remote Control (RC) Monitor Electrical Controls Supplemental Instructions for Use with RC Monitor Manual

MANUAL: Remote Control (RC) Monitor Electrical Controls Supplemental Instructions for use with RC Monitor Manual. INSTRUCTIONS FOR INSTALLATION, SAFE OPERATION AND MAINTENANCE. DANGER: Understand manual before use. Operation of this device without understanding the manual and receiving proper training is a misuse of this equipment. Obtain safety information at www.tft.com/serial-number. Contents: SECTION 3.0 General Information and Specifications; SECTION 4.0 Electrical Controls Installation and Operation; SECTION 4.1 Monitor Mounted Operator Station; SECTION 4.2 Panel Mount Operator Station (Y4E-RP); SECTION 4.3 Panel Mount With Display Operator Station (Y4E-RP-D); SECTION 4.4 Tethered Operator Station (Y4E-CT-##); SECTION 4.5 Tethered Operator Station With Display (Y4E-CT-##-D); SECTION 4.6 Wireless Operator Station (YE-RF-##); SECTION 4.7 Wireless Operator Station With Display (YE-RF-##-D); SECTION 4.8 Joystick Operator Station (Y4E-JS); SECTION 4.9 Toggle Switch Monitor Operator Station (Y4E-TS); SECTION 4.10 Monitor Communication Interface Control (Y4E-COMM); SECTION 4.11 Monitor Position Display (Y4E-DISP); SECTION 4.12 Remote Auxiliary Function Interface Control (YE-REMAUX); SECTION 4.13 Electric RC Monitor Aerial Truck Installation; SECTION 4.14 Multiplex Interface Control (YE-CAN#); SECTION 4.15 Ethernet Interface Control; SECTION 4.16 Electric Nozzle Actuator; SECTION 4.17 Valve Kits (YE-VK-PH); SECTION 5.0 Troubleshooting. TASK FORCE TIPS LLC 3701 Innovation Way, IN 46383-9327 USA MADE IN USA • tft.com 800-348-2686 • 219-462-6161 • Fax 219-464-7155 ©Copyright Task Force Tips LLC 2008-2018 LIY-500 November 21, 2018 Rev13 DANGER: PERSONAL RESPONSIBILITY CODE The member companies of FEMSA that provide emergency response equipment and services want responders to know and understand the following: 1. -

Virtual Reality Headsets

VIRTUAL REALITY HEADSETS LILY CHIANG VR HISTORY • Many companies (Virtuality, Sega, Atari, Sony) jumped on the VR hype in the 1990s, but commercialization flopped because both hardware and software failed to deliver on the promised VR vision. • In the 2000s, use of VR devices was limited to simulation and training in the military, aviation, and medical industries. • VR hype resurged after Oculus's successful Kickstarter campaign; Oculus was subsequently acquired by Facebook for $2.4 bn. • Investments rushed into the VR industry as major tech firms such as Google, Samsung, and Microsoft and prominent VC firms bet big on the VR revolution. LIST OF VIRTUAL REALITY HEADSET FIRMS Company Name Entered Exited Disposition Company Name Entered Exited Disposition Company Name Entered Exited Disposition LEEP Optics 1979 1998 Bankrupt Meta Altergaze 2014 Ongoing VPL Research 1984 1990 Bankrupt SpaceGlasses 2012 Ongoing Archos VR 2014 Ongoing Division Group Sulon Cortex 2012 Ongoing AirVr 2014 Ongoing LTD 1989 1999 Acquired Epson Moverio Sega VR 1991 1994 Bankrupt BT-200 2012 Ongoing 360Specs 2014 Ongoing Virtuality 1991 1997 Acquired i2i iPal 2012 Ongoing Microsoft VictorMaxx 1992 1998 Bankrupt Star VR 2013 Ongoing Hololens Systems 2015 Ongoing Durovis Dive 2013 Ongoing Razr OSVR 2015 Ongoing Atari Jaguar VR 1993 1996 Discontinued Vrizzmo 2013 Ongoing Virtual I-O 1993 1997 Bankrupt Cmoar 2015 Ongoing CastAR 2013 Ongoing eMagin 1993 Ongoing Dior Eyes VR 2015 Ongoing VRAse 2013 Ongoing Virtual Boy 1994 1995 Discontinued Yay3d VR 2013 Ongoing Impression Pi -

An Augmented Reality Social Communication Aid for Children and Adults with Autism: User and Caregiver Report of Safety and Lack of Negative Effects

bioRxiv preprint doi: https://doi.org/10.1101/164335; this version posted July 19, 2017. The copyright holder for this preprint (which was not certified by peer review) is the author/funder. All rights reserved. No reuse allowed without permission. An Augmented Reality Social Communication Aid for Children and Adults with Autism: User and caregiver report of safety and lack of negative effects. Ned T. Sahin1,2*, Neha U. Keshav1, Joseph P. Salisbury1, Arshya Vahabzadeh1,3 1Brain Power, 1 Broadway 14th Fl, Cambridge MA 02142, United States 2Department of Psychology, Harvard University, United States 3Department of Psychiatry, Massachusetts General Hospital, Boston * Corresponding Author. Ned T. Sahin, PhD, Brain Power, 1 Broadway 14th Fl, Cambridge, MA 02142, USA. Email: [email protected]. Abstract Background: Interest has been growing in the use of augmented reality (AR) based social communication interventions in autism spectrum disorders (ASD), yet little is known about their safety or negative effects, particularly in head-worn digital smartglasses. Research to understand the safety of smartglasses in people with ASD is crucial given that these individuals may have altered sensory sensitivity, impaired verbal and non-verbal communication, and may experience extreme distress in response to changes in routine or environment. Objective: The objective of this report was to assess the safety and negative effects of the Brain Power Autism System (BPAS), a novel AR smartglasses-based social communication aid for children and adults with ASD. BPAS uses emotion-based artificial intelligence and a smartglasses hardware platform that keeps users engaged in the social world by encouraging “heads-up” interaction, unlike tablet- or phone-based apps. -

Robot Explorer Program Manual

25 Valleywood Drive, Unit 20 Markham, Ontario, L3R 5L9, Canada Tel: (905) 943-9572 Fax: (905) 943-9197 i90 Robot Explorer Program Copyright © 2006, Dr Robot Inc. All Rights Reserved. www.DrRobot.com Copyright Statement: This manual or any portion of it may not be copied or duplicated without the express written consent of Dr Robot. All the software, firmware, hardware and product design accompanying Dr Robot’s products are solely owned and copyrighted by Dr Robot. End users are authorized to use them for personal research and educational use only. Duplication, distribution, reverse-engineering, or commercial application of the Dr Robot or licensed software and hardware without the express written consent of Dr Robot is explicitly forbidden. Table of Contents: I. Introduction; II. System Requirements; III. Software Installation; Installing the i90 Robot Explorer Programs; Install the Joystick Controller; IV. Robot Operations; Using the Joystick Controls; Controlling Camera; Driving the Robot; Using i90 Robot Explorer Program Control; Video display; Operation Option; Utility Panel; Robot & Map Display; Robot Status; Using i90 Robot Explorer Client Program Control; Video; Robot & Map Display; Camera Operation; Robot Operation; Robot Data Display. I. Introduction: This manual provides information on using the i90 Robot Explorer program to operate the robot. Please refer to the i90 Quick Guide regarding other documents related to i90. -

Eye-Tracking for Clinical Ophthalmology with Virtual Reality (VR): A Case Study of the HTC Vive Pro Eye's Usability

healthcare Article Eye-Tracking for Clinical Ophthalmology with Virtual Reality (VR): A Case Study of the HTC Vive Pro Eye’s Usability Alexandra Sipatchin 1,*, Siegfried Wahl 1,2 and Katharina Rifai 1,2 1 Institute for Ophthalmic Research, University of Tübingen, 72076 Tübingen, Germany; [email protected] (S.W.); [email protected] (K.R.) 2 Carl Zeiss Vision International GmbH, 73430 Aalen, Germany * Correspondence: [email protected] Abstract: Background: A case study is proposed to empirically test and discuss the eye-tracking status-quo hardware capabilities and limitations of an off-the-shelf virtual reality (VR) headset with embedded eye-tracking for at-home ready-to-go online usability in ophthalmology applications. Methods: The eye-tracking status-quo data quality of the HTC Vive Pro Eye is investigated with novel testing specific to objective online VR perimetry. Testing was done across a wide visual field of the head-mounted-display’s (HMD) screen and in two different moving conditions. A new automatic and low-cost Raspberry Pi system is introduced for VR temporal precision testing for assessing the usability of the HTC Vive Pro Eye as an online assistance tool for visual loss. Results: The target position on the screen and head movement evidenced limitations of the eye-tracker capabilities as a perimetry assessment tool. Temporal precision testing showed the system’s latency of 58.1 milliseconds (ms), evidencing its good potential usage as a ready-to-go online assistance tool for visual loss. Conclusions: The test of the eye-tracking data quality provides novel analysis useful for testing upcoming VR headsets with embedded eye-tracking and opens discussion regarding expanding future introduction of these HMDs into patients’ homes for low-vision clinical usability. -

ACCESSORIES for PLAYSTATION®3 BECOME AVAILABLE Wireless Controller (SIXAXIS™), Memory Card Adaptor and BD Remote Control

ACCESSORIES FOR PLAYSTATION®3 BECOME AVAILABLE Wireless Controller (SIXAXIS™), Memory Card Adaptor and BD Remote Control Tokyo, October 3, 2006 – Sony Computer Entertainment Inc. (SCEI) today announced that Wireless Controller (SIXAXIS™) and Memory Card Adaptor would become available simultaneously with the launch of PLAYSTATION®3 (PS3) computer entertainment system on November 11th, 2006, in Japan, at a recommended retail price of 5,000 yen (tax included) and 1,500 yen (tax included) respectively. BD Remote Control will also become available on December 7th 2006, at a recommended retail price of 3,600 yen (tax included). Wireless Controller (SIXAXIS) for PS3 employs a high-precision, highly sensitive six-axis sensing system, which detects natural and intuitive movements of hands for real-time interactive play. With the adoption of Bluetooth® wireless technology, it allows up to 7 players to play at the same time, without having to attach any other external device such as a multitap. In addition, by simply plugging a USB cable to the controller, users can seamlessly switch from wireless to wired connection and automatically charge its battery while the controller is in use. Controller battery lasts up to 30 hours when fully charged *1). The new Memory Card Adaptor enables users to transfer data saved on Memory Cards for PlayStation® and PlayStation®2 onto the hard disk drive of PS3. To transfer data, users need to simply insert their Memory Cards to the Memory Card Adaptor connected to PS3 via a USB port. In December, BD Remote Control will also become available, which enables users to easily operate movies and music content on BD (Blu-ray Disc) and DVD on PS3. -

An Alternative Natural Action Interface for Virtual Reality

EPiC Series in Computing, Volume 58, 2019, Pages 271-281. Proceedings of 34th International Conference on Computers and Their Applications. An Alternative Natural Action Interface for Virtual Reality Jay Woo1, Andrew Frost1, Tyler Goffinet1, Vinh Le1, Connor Scully-Allison1, Chase Carthen1, and Sergiu Dascalu Department of Computer Science and Engineering University of Nevada, Reno Reno NV, 89557 jjwoo95,vdacle,[email protected] [email protected] [email protected] Abstract The development of affordable virtual reality (VR) hardware represents a keystone of progress in modern software development and human-computer interaction. Despite the ready availability of robust hardware tools, there is presently a lack of video games or software in VR that demonstrates the gamut of unique and novel interfaces a virtual environment can provide. In this paper, we present a virtual reality video game which introduces unique user interface elements that can only be achieved in a 3D virtual environment. The video game, titled Wolf Hunt, provides users with a menu system that innovates on traditional interfaces with a virtual representation of a common item people interact with daily: a mobile phone. Wolf Hunt throws users into a procedurally generated world where they take the role of an individual escaping a wolf assailant. Deviating from traditional locomotion options in VR interfaces, such as teleportation, Wolf Hunt measures the displacement of hand-held VR controllers with the VR headset to simulate the natural action of running. Wolf Hunt provides an alternate interfacing solution for VR systems without having to conform to common 2D interface design schemes. -

Facebook, Ray-Ban Launch Smart Glasses—Who Will Wear Them? 9 September 2021, by Barbara Ortutay

In a blog post, Facebook said the glasses let people "capture life's spontaneous moments as they happen from a unique first-person perspective," as well as listen to music, talk to people and, using the Facebook View app, share photos and videos on social media. Facebook signed a multi-year partnership with EssilorLuxottica. The glasses are the first version of what's likely to be more wearable gadgets as the social media giant looks for platforms beyond smartphones. Ray-Ban Stories come out of Facebook Reality Labs which also oversees the Oculus virtual reality headset and the Portal video calling gadget. Anticipating privacy concerns, Facebook said that by default the glasses "collect data that's needed to make your glasses work and function, like your battery status to alert you when your battery is low, your email address and password for your Facebook login to verify it's really you when you log into the Facebook View app." Users can take photos and videos using the glasses, but they can't post directly to Facebook or any other social media platform. Photo caption: This photo provided by Facebook shows Ray-Ban internet-connected smart glasses. In a partnership with Ray-Ban, parent EssilorLuxottica, Facebook on Thursday, Sept. 9, 2021, unveiled Ray-Ban Stories, connected eyewear with built-in speakers and microphone for making calls, a companion app that isn't Facebook and a charging case. Credit: Facebook via AP. Opening paragraph: Seven years after the ill-fated Google Glass, and five years after Snap rolled out Spectacles, another tech giant is trying its hand at internet-connected smart glasses, hoping that this time -

Virtual Reality Sickness During Immersion: an Investigation of Potential Obstacles Towards General Accessibility of VR Technology

Degree project (Examensarbete), 30 credits, August 2016. Virtual Reality sickness during immersion: An investigation of potential obstacles towards general accessibility of VR technology. A controlled study for investigating the accessibility of modern VR hardware and the usability of HTC Vive’s motion controllers. Dongsheng Lu. Abstract: People call 2016 the year of virtual reality. As the world's leading tech giants release their own Virtual Reality (VR) products, VR technology is more available to the mass market than ever. However, the fact that the technology has become cheaper and thereby reached a mass market does not in itself imply that long-standing usability issues with VR have been addressed. Problems regarding motion sickness (MS) and motion control (MC) have been two of the most important obstacles for VR technology in the past. The main research question of this study is: “Are there persistent universal access issues with VR related to motion control and motion sickness?” In this study a mixed-method approach was used to find more answers related to these two important aspects. A literature review in the area of VR, MS and MC was followed by a quantitative controlled study and a qualitative evaluation. The 32 participants were carefully selected and divided into different groups, and the quantitative data collected from them were processed and analyzed using statistical tests. An interview was also carried out with all of the participants in order to gather more details about the usability of the motion controllers used in this study. -

Virtual Reality Controllers

Evaluation of Low Cost Controllers for Mobile Based Virtual Reality Headsets. By Summer Lindsey, Bachelor of Arts, Psychology, Florida Institute of Technology, May 2015. A thesis submitted to the College of Aeronautics at Florida Institute of Technology in partial fulfillment of the requirements for the degree of Master of Science in Aviation Human Factors. Melbourne, Florida, April 2017. © Copyright 2017 Summer Lindsey. All Rights Reserved. The author grants permission to make single copies. The undersigned committee, having examined the attached thesis "Evaluation of Low Cost Controllers for Mobile Based Virtual Reality Headsets," by Summer Lindsey hereby indicates its unanimous approval. Deborah Carstens, Ph.D., Professor and Graduate Program Chair, College of Aeronautics, Major Advisor; Meredith Carroll, Ph.D., Associate Professor, College of Aeronautics, Committee Member; Neil Ganey, Ph.D., Human Factors Engineer, Northrop Grumman, Committee Member; Christian Sonnenberg, Ph.D., Assistant Professor and Assistant Dean, College of Business, Committee Member; Korhan Oyman, Ph.D., Dean and Professor, College of Aeronautics. Abstract. Title: Evaluation of Low Cost Controllers for Mobile Based Virtual Reality Headsets. Author: Summer Lindsey. Major Advisor: Dr. Deborah Carstens. Virtual Reality (VR) is no longer just for training purposes. The consumer VR market has become a large part of the VR world and is growing at a rapid pace. In spite of this growth, there is no standard controller for VR. This study evaluated three different controllers: a gamepad, the Leap Motion, and a touchpad as means of interacting with a virtual environment (VE). There were 23 participants that performed a matching task while wearing a Samsung Gear VR mobile based VR headset. -

Immersive Robotic Telepresence for Remote Educational Scenarios

sustainability Article Immersive Robotic Telepresence for Remote Educational Scenarios Jean Botev 1,* and Francisco J. Rodríguez Lera 2 1 Department of Computer Science, University of Luxembourg, L-4364 Esch-sur-Alzette, Luxembourg 2 Department of Mechanical, Informatics and Aerospace Engineering, University of León, 24071 León, Spain; [email protected] * Correspondence: [email protected] Abstract: Social robots have an enormous potential for educational applications and allow for cognitive outcomes that are similar to those with human involvement. Remotely controlling a social robot to interact with students and peers in an immersive fashion opens up new possibilities for instructors and learners alike. Using immersive approaches can promote engagement and have beneficial effects on remote lesson delivery and participation. However, the performance and power consumption associated with the involved devices are often not sufficiently contemplated, despite being particularly important in light of sustainability considerations. The contributions of this research are thus twofold. On the one hand, we present telepresence solutions for a social robot’s location-independent operation using (a) a virtual reality headset with controllers and (b) a mobile augmented reality application. On the other hand, we perform a thorough analysis of their power consumption and system performance, discussing the impact of employing the various technologies. Using the QTrobot as a platform, direct and immersive control via different interaction modes, including motion, emotion, and voice output, is possible. By not focusing on individual subsystems or motor chains, but the cumulative energy consumption of an unaltered robot performing remote tasks, this research provides orientation regarding the actual cost of deploying immersive robotic telepresence solutions. Citation: Botev, J.; Rodríguez Lera, F.J. Immersive Robotic Telepresence -

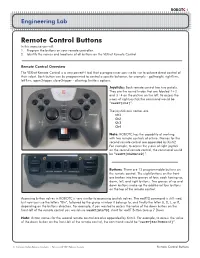

Remote Control Buttons

ROBOTC 1 Engineering Lab Remote Control Buttons In this exercise you will: 1. Program the buttons on your remote controller. 2. Identify the names and locations of all buttons on the VEXnet Remote Control. Remote Control Overview The VEXnet Remote Control is a very powerful tool that a programmer can use to achieve direct control of their robot. Each button can be programmed to control a specific behavior, for example goStraight, rightTurn, leftTurn, openGripper, or closeGripper, allowing limitless options. Joysticks: Each remote control has two joysticks. They are the round knobs that are labeled 1+2 and 3+4 on the picture on the left. To access the y-axis of the right joystick, the command would be “vexRT[Ch2]”. The joystick axis names are: Ch1 Ch2 Ch3 Ch4 Note: ROBOTC has the capability of working with two remote controls at a time. Names for the second remote control have Xmtr2 appended. For example, to access the y-axis of the right joystick on the second remote control, the command would be “vexRT[Ch2Xmtr2]”. Buttons: There are 12 programmable buttons on the remote control. The eight buttons on the front are broken into two groups of four, each having up, down, left, and right buttons. Two groups of up and down buttons make up the additional four buttons on the top of the remote control. Accessing button values in ROBOTC is very similar to accessing joystick values. The vexRT[] command is still used, but now you use the letters “Btn”, followed by the number of the group the button belongs to, and finally the letter U, D, L, or R, depending on the button's direction.
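The naming scheme described in this excerpt can be sketched as a pair of small helpers that assemble the index names as strings. This is only an illustration in Python, not ROBOTC code: the function names are hypothetical, and the group numbers (5 and 6 for the top groups, 7 and 8 for the front groups) are an assumption that the excerpt itself does not state.

```python
# Hypothetical helpers that build ROBOTC-style vexRT[] index names as strings.
# Assumption (not stated in the excerpt): top button groups are numbered 5 and 6,
# front button groups are numbered 7 and 8.
TOP_GROUPS = (5, 6)     # two buttons each: up, down
FRONT_GROUPS = (7, 8)   # four buttons each: up, down, left, right
SECOND_REMOTE_SUFFIX = "Xmtr2"  # appended to names for the second remote


def channel_name(channel, second_remote=False):
    """Return a joystick axis name such as 'Ch2' or 'Ch2Xmtr2'."""
    if channel not in (1, 2, 3, 4):
        raise ValueError("joystick channels are Ch1 through Ch4")
    suffix = SECOND_REMOTE_SUFFIX if second_remote else ""
    return f"Ch{channel}{suffix}"


def button_name(group, direction, second_remote=False):
    """Return a button name: 'Btn' + group number + one of U, D, L, R."""
    if group in FRONT_GROUPS:
        allowed = "UDLR"
    elif group in TOP_GROUPS:
        allowed = "UD"
    else:
        raise ValueError(f"unknown button group: {group}")
    if len(direction) != 1 or direction not in allowed:
        raise ValueError(f"group {group} has no button '{direction}'")
    suffix = SECOND_REMOTE_SUFFIX if second_remote else ""
    return f"Btn{group}{direction}{suffix}"


def all_button_names():
    """List every button name on one remote under the assumed numbering."""
    names = [button_name(g, d) for g in TOP_GROUPS for d in "UD"]
    names += [button_name(g, d) for g in FRONT_GROUPS for d in "UDLR"]
    return names
```

Under these assumptions, `channel_name(2, second_remote=True)` yields the `Ch2Xmtr2` name quoted in the excerpt, and `all_button_names()` enumerates the 12 programmable buttons (two groups of two plus two groups of four).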