1619Fulltext.Pdf

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


SoftNAS Deployment Guide for High-Performance SQL Storage

SoftNAS Deployment Guide for High-Performance SQL Storage. Introduction: SoftNAS cloud NAS systems are based on an innovative, memory-centric storage architecture that delivers unparalleled NAS performance, efficiency, and value. They incorporate a hybrid disk storage technology that tailors the usage of data disks, log solid-state cache drives (SSDs), and read cache SSDs to the data share's specific needs. Additional features include variable storage record size, data compression, and multiple connectivity options. As a cloud NAS solution, SoftNAS cloud NAS systems provide an excellent base for Microsoft Windows Server deployments by providing iSCSI or Fibre Channel block storage for Microsoft SQL Server, and network file system (NFS) or server message block (SMB) file storage for Microsoft Windows client access. This document covers the best practices to follow when deploying Microsoft SQL Server on a SoftNAS cloud NAS system. The intended audience is storage administrators and Microsoft SQL Server database administrators. Maintaining High Availability: As with any business-critical application, high availability is a crucial design criterion to be considered when deploying a Microsoft SQL Server installation. Microsoft SQL Server 2016 can be installed on local and/or shared file systems, and SoftNAS cloud NAS systems can satisfy both of these options. Local file systems (from the Microsoft Windows Server perspective) are hosted as block volumes (iSCSI and/or Fibre Channel-connected LUNs), and shared file systems as SMB and/or NFS volumes. High availability starts with the network connectivity supporting the storage and server interconnectivity. Any design for the storage infrastructure should avoid single points of failure. Because many white papers and publications cover storage-area networking and network-attached storage resilience, those topics are not covered in detail in this paper.

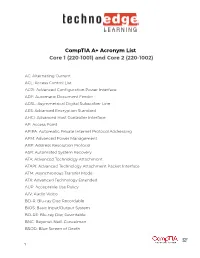

CompTIA A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002)

CompTIA A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002). AC: Alternating Current; ACL: Access Control List; ACPI: Advanced Configuration Power Interface; ADF: Automatic Document Feeder; ADSL: Asymmetrical Digital Subscriber Line; AES: Advanced Encryption Standard; AHCI: Advanced Host Controller Interface; AP: Access Point; APIPA: Automatic Private Internet Protocol Addressing; APM: Advanced Power Management; ARP: Address Resolution Protocol; ASR: Automated System Recovery; ATA: Advanced Technology Attachment; ATAPI: Advanced Technology Attachment Packet Interface; ATM: Asynchronous Transfer Mode; ATX: Advanced Technology Extended; AUP: Acceptable Use Policy; A/V: Audio Video; BD-R: Blu-ray Disc Recordable; BIOS: Basic Input/Output System; BD-RE: Blu-ray Disc Rewritable; BNC: Bayonet-Neill-Concelman; BSOD: Blue Screen of Death; BYOD: Bring Your Own Device; CAD: Computer-Aided Design; CAPTCHA: Completely Automated Public Turing test to tell Computers and Humans Apart; CD: Compact Disc; CD-ROM: Compact Disc-Read-Only Memory; CD-RW: Compact Disc-Rewritable; CDFS: Compact Disc File System; CERT: Computer Emergency Response Team; CFS: Central File System, Common File System, or Command File System; CGA: Computer Graphics and Applications; CIDR: Classless Inter-Domain Routing; CIFS: Common Internet File System; CMOS: Complementary Metal-Oxide Semiconductor; CNR: Communications and Networking Riser; COMx: Communication port (x = port number); CPU: Central Processing Unit; CRT: Cathode-Ray Tube; DaaS: Data as a Service; DAC: Discretionary Access Control; DB-25: Serial Communications

File Systems

File Systems. Profs. Bracy and Van Renesse, based on slides by Prof. Sirer

Storing Information
• Applications could store information in the process address space
• Why is this a bad idea?
  – Size is limited to the size of the virtual address space
  – The data is lost when the application terminates
    • Even when the computer doesn't crash!
  – Multiple processes might want to access the same data

File Systems
• 3 criteria for long-term information storage:
  1. Able to store a very large amount of information
  2. Information must survive the processes using it
  3. Provide concurrent access to multiple processes
• Solution:
  – Store information on disks in units called files
  – Files are persistent; only the owner can delete them
  – Files are managed by the OS

File Systems: How the OS manages files!

File Naming
• Motivation: Files abstract information stored on disk
  – You do not need to remember block, sector, …
  – We have human-readable names
• How does it work?
  – A process creates a file and gives it a name
    • Other processes can access the file by that name
  – Naming conventions are OS dependent
    • Usually names as long as 255 characters are allowed
    • Windows names are not case sensitive; UNIX family names are

File Extensions
• Name divided into 2 parts: Name+Extension
• On UNIX, extensions are not enforced by the OS
  – Some applications might insist upon them
    • Think: .c, .h, .o, .s, etc. for the C compiler
• Windows attaches meaning to extensions
  – Tries to associate applications with file extensions

File Access
• Sequential access
  – read all bytes/records from the beginning
  – particularly convenient for magnetic tape
• Random access
  – bytes/records read in any order
  – essential for database systems

File Attributes
• File-specific info maintained by the OS
  – File size, modification date, creation time, etc.
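The sequential and random access modes named in the File Access slide can be sketched in a few lines of Python. This is only an illustration, not part of the slides: the fixed `RECORD_SIZE`, the helper names, and the sample data are assumptions chosen for the example.

```python
import os
import tempfile

RECORD_SIZE = 4  # hypothetical fixed record length, in bytes


def read_sequential(path):
    """Read every record in order, starting at the beginning of the file."""
    records = []
    with open(path, "rb") as f:
        while True:
            chunk = f.read(RECORD_SIZE)
            if not chunk:
                break
            records.append(chunk)
    return records


def read_random(path, index):
    """Seek straight to record `index` without reading the records before it."""
    with open(path, "rb") as f:
        f.seek(index * RECORD_SIZE)
        return f.read(RECORD_SIZE)


def demo():
    """Write four sample records to a temporary file and read them both ways."""
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(b"AAAABBBBCCCCDDDD")
        return read_sequential(path), read_random(path, 2)
    finally:
        os.remove(path)
```

Calling `demo()` returns all four records from the sequential pass, plus the third record fetched directly by offset, which is the access pattern a database relies on.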

SMB Remote File Protocol (Including SMB 3.X) Approved SNIA Tutorial © 2015 Storage Networking Industry Association

SMB remote file protocol (including SMB 3.x). Tom Talpey, Microsoft. SNIA Legal Notice: The material contained in this tutorial is copyrighted by the SNIA unless otherwise noted. Member companies and individual members may use this material in presentations and literature under the following conditions: Any slide or slides used must be reproduced in their entirety without modification. The SNIA must be acknowledged as the source of any material used in the body of any document containing material from these presentations. This presentation is a project of the SNIA Education Committee. Neither the author nor the presenter is an attorney and nothing in this presentation is intended to be, or should be construed as legal advice or an opinion of counsel. If you need legal advice or a legal opinion please contact your attorney. The information presented herein represents the author's personal opinion and current understanding of the relevant issues involved. The author, the presenter, and the SNIA do not assume any responsibility or liability for damages arising out of any reliance on or use of this information. NO WARRANTIES, EXPRESS OR IMPLIED. USE AT YOUR OWN RISK. Approved SNIA Tutorial © 2015 Storage Networking Industry Association. All Rights Reserved. Abstract and Learning Objectives. Title: SMB remote file protocol (including SMB 3.x). Abstract: The SMB protocol evolved over time from CIFS to SMB1 to SMB2, with implementations by dozens of vendors including most major operating systems and NAS solutions. The SMB 3.0 protocol had its first commercial implementations by Microsoft, NetApp and EMC by the end of 2012, and many other implementations exist or are in progress.

Server Message Block in the Age of Microsoft Glasnost

Server Message Block in the Age of Microsoft Glasnost. Christopher R. Hertel. The EU anti-trust case against Microsoft concluded in late 2007. Related or not, that's when things started to change. One pleasant surprise for third-party developers was the release of hundreds of specifications covering Windows file formats, system internals, and protocols. Microsoft was opening up. Four years later, about 400 specifications have been published. It took a while for some of those documents to appear, mostly because Microsoft didn't actually have them all written yet. And now they have surprised us again. Well before the beta release of Windows 8, they have provided preview documentation for an overhauled and compelling new version of the venerable Server Message Block Protocol: SMB2.2. This is going to be epic. A Gathering of Storage Geeks: Once a year, typically in September and typically somewhere near San Jose, California, the Storage Networking Industry Association (SNIA) hosts the Storage Developer Conference (SDC). About the author: Christopher R. Hertel is a long-haul member of the Samba Team and co-founder of the jCIFS project. He is also the author of Implementing CIFS: The Common Internet File System, the only developer's guide to the SMB/CIFS protocol suite. Not too long ago, he had the opportunity to work directly with Microsoft's File Server team when the company he founded, ubiqx Consulting, Inc., was tapped to write Microsoft's official SMB/CIFS specifications. Chris has also been adjunct faculty at the University of Minnesota College of Continuing Education (CCE) and is currently a member of the CCE IT

BackupAssist and Windows Server 2012

BackupAssist and Windows Server 2012. BackupAssist v7.1 and later, in a Windows Server 2012 environment. Windows Server 2012 introduces new technologies and features that can affect how BackupAssist operates. This resource explains how some of the key changes impact BackupAssist and what you need to know when performing backups and restores in a Windows Server 2012 environment. Resilient File System (ReFS): ReFS is a new file system that introduces reliability and compatibility features to Windows Server. BackupAssist supports ReFS-formatted drives as a backup source and destination for all backup types. BackupAssist can also restore from ReFS-formatted drives. Considerations: File Protection backups cannot use single-instance store when the backup is saved on a ReFS-formatted destination. This means all of the data will be backed up each time the backup job runs. System Protection cannot incrementally back up data from a ReFS-formatted drive (source). This means a full backup of all selections will take place each time the backup job runs. De-duplication: De-duplication for Windows Server 2012 is a technology that efficiently stores and transfers data using less space. All BackupAssist backups can operate in a de-duplicated environment. Considerations: BackupAssist will back up files from a de-duplicated volume in the non-optimized mode. Therefore, the backup will not retain the de-duplicated format. Server Message Block: Server Message Block (SMB) is a network protocol for sharing resources. SMB 3.0 includes changes such as improved performance and VSS support. BackupAssist supports SMB 3.0 as both a backup source and destination.

Gigabit Ethernet Hard Drive User Guide

Gigabit Ethernet Hard Drive User Guide. Contents: Introduction; Controls, Connectors and Indicators; Front Panel Area; Rear Panel Area; About the Hard Disk; Locating NAS Drive on Your Desk; Bundled Software; Finder.exe; Backup Software; TorrentFlux; Connecting To Your Network; About NAS Drive User Accounts; Connecting The NAS Drive To Your LAN; Web-Based Administration Tool; Administration Login; Basic Settings For Initial Setup; NAS Drive Operation

SMB Analysis

NAP-3 Microsoft SMB Troubleshooting. Rolf Leutert, Leutert NetServices, Switzerland. © Leutert NetServices 2013 www.wireshark.ch. Server Message Block (SMB) Protocol. SMB History: Server Message Block (SMB) is Microsoft's client-server protocol and is most commonly used in networked environments where Windows® operating systems are in place. Invented by IBM in 1983, SMB has become Microsoft's core protocol for shared services like files, printers etc. Initially SMB ran on top of the non-routable NetBIOS/NetBEUI API and was designed to work in small to medium-sized workgroups. In 1996 Microsoft renamed SMB to Common Internet File System (CIFS) and added more features like larger file sizes, Windows RPC, the NT domain service and many more. Samba is the open source SMB/CIFS implementation for Unix and Linux systems. SMB over TCP/UDP/IP. SMB over NetBIOS over UDP/TCP: SMB/NetBIOS was made routable by running over TCP/IP (NBT) using encapsulation over TCP/UDP ports 137–139. Port 137 = NetBIOS Name Service (NS). Port 138 = NetBIOS Datagram Service (DGM). Port 139 = NetBIOS Session Service (SS). SMB "naked" over TCP: Since Windows 2000, SMB runs, by default, with a thin layer, the NBT's Session Service, on top of TCP port 445. DNS and LLMNR (Link Local Multicast Name Resolution) are used for name resolution. Port 445 = Microsoft Directory Services (DS): SMB file sharing, Windows shares, printer sharing, Active Directory. NetBIOS / SMB History. NetBIOS Name Service (UDP port 137): Using NetBIOS names for clients and services.
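The port assignments listed in this excerpt (137, 138 and 139 for NetBIOS over TCP/IP, 445 for SMB directly over TCP) can be captured in a small lookup. The sketch below is illustrative only: the dictionary, constant, and function names are assumptions made for the example, and it classifies a port number without opening any network connection.

```python
# Port-to-service mapping taken from the excerpt above.
NBT_PORTS = {
    137: "NetBIOS Name Service (NS)",
    138: "NetBIOS Datagram Service (DGM)",
    139: "NetBIOS Session Service (SS)",
}
DIRECT_SMB_PORT = 445  # SMB directly over TCP, the default since Windows 2000


def describe_port(port):
    """Return a short description of how a port relates to SMB transport."""
    if port == DIRECT_SMB_PORT:
        return "SMB directly over TCP"
    if port in NBT_PORTS:
        return "NetBIOS over TCP/IP: " + NBT_PORTS[port]
    return "not an SMB/NetBIOS port"
```

A lookup like this could be used, for instance, to label flows when reading a packet capture by port number.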

Microsoft SMB File Sharing Best Practices Guide

Technical White Paper: Microsoft SMB File Sharing Best Practices Guide. Tintri VMstore, Microsoft SMB 3.0 Protocol, and VMware 6.x. Author: Neil Glick. Version 1.0, 06/15/2016. @tintri www.tintri.com. Contents: Executive Summary; Consolidated List of Practices; Benefits of Native Microsoft Windows 2012R2 File Sharing (SMB 3.0; Data Deduplication; DFS Namespaces and DFS Replication (DFS-R); File Classification Infrastructure (FCI) and File Server Resource Management Tools (FSRM); NTFS and Folder Security; Shadow Copy of Shared Folders; Disk Quotas); Deployment Architecture for a Windows File Server; Microsoft Windows 2012R2 File Share Virtual Hardware Configurations; Network Best Practices for SMB and the Tintri VMstore (Subnets; Jumbo Frames)

Architecture Principles and Implementation Practices for Remote Replication Using Oracle ZFS Storage Appliance

Implementing Microsoft Windows SMB Encryption With Oracle ZFS Storage Appliance. Oracle White Paper | November 2018. Table of Contents: Introduction; Overview of Oracle ZFS Storage Appliance SMB Encryption Support; Understanding SMB 3.0/3.1 Encryption; SMB Client Support Levels; Differences between SMB 3.0 and SMB 3.1 Encryption; Using SMB 3.0/3.1 Encryption Settings With Oracle ZFS Storage Appliance; General SMB Encryption Recommendations; Conclusion; Appendix A: References. Introduction: SMB (Server Message Block) is the primary protocol used in Microsoft Windows systems for network storage access. SMB Version 3.0 and above include support for data packet encryption of SMB network traffic, to help prevent packet-snooping and man-in-the-middle attacks that would compromise transmitted data. Oracle ZFS Storage Appliance OS 8.8 firmware release includes support for SMB 3.0/3.1 encryption, either at a global level or at an individual share level. Enabling SMB 3.0/3.1 encryption is recommended in any environment subject to hostile network access. This paper will demonstrate implementation of SMB encryption for SMB 3.0/3.1 shares resident on an Oracle ZFS Storage Appliance running firmware OS 8.8 or above. Overview: understanding SMB 3.0/3.1 encryption; discussion of differences between SMB 3.0 and SMB 3.1 encryption; implementation of SMB 3.0/3.1 encryption on Oracle ZFS Storage Appliance (global setting, share-level setting, reject unencrypted access setting); general recommendations for SMB 3.0/3.1 encryption. Note that the functions and features of Oracle ZFS Storage Appliance described in this white paper are applicable to Oracle ZFS Storage Appliance firmware release OS 8.8 and above.

Using the z/OS SMB Server to Access z/OS Data from Windows -- Hands-On Lab Session 10634-10879

Using the z/OS SMB Server to access z/OS data from Windows -- Hands-On Lab Session 10634-10879. Marna Walle, Jim Showalter, Karl Lavo, IBM. March 12-13, 2011. Trademark Information: The following are trademarks of the International Business Machines Corporation in the United States and/or other countries. • z/OS® The following are trademarks or registered trademarks of other companies. • Linux is a registered trademark of Linus Torvalds in the United States, other countries or both • Microsoft, Windows and Windows NT are registered trademarks of Microsoft Corporation • Red Hat is a registered trademark of Red Hat, Inc. • UNIX is a registered trademark of The Open Group in the United States and other countries • SUSE is a registered trademark of Novell, Inc. in the United States and other countries. Using the z/OS SMB server Hands-On Lab SHARE Session 9712. Objective of this Lab: At the end of this lab, you will be able to … • Connect to the z/OS SMB server from Windows • Access z/OS UNIX data from Windows • Understand what is necessary to configure the z/OS SMB server. Files and directories in the lab: /sharelab: parent directory of home directories; /sharelab/sharaxx: your z/OS UNIX home directory; /sharelab/sharaxx/smblab: your z/OS UNIX working directory. What are we going to do? In this session, you are going to: • Get a short, high level overview of what the z/OS SMB server is (page


[MS-SMB2]: Server Message Block (SMB) Protocol Versions 2 and 3

[MS-SMB2]: Server Message Block (SMB) Protocol Versions 2 and 3. Intellectual Property Rights Notice for Open Specifications Documentation. Technical Documentation. Microsoft publishes Open Specifications documentation (“this documentation”) for protocols, file formats, data portability, computer languages, and standards support. Additionally, overview documents cover inter-protocol relationships and interactions. Copyrights. This documentation is covered by Microsoft copyrights. Regardless of any other terms that are contained in the terms of use for the Microsoft website that hosts this documentation, you can make copies of it in order to develop implementations of the technologies that are described in this documentation and can distribute portions of it in your implementations that use these technologies or in your documentation as necessary to properly document the implementation. You can also distribute in your implementation, with or without modification, any schemas, IDLs, or code samples that are included in the documentation. This permission also applies to any documents that are referenced in the Open Specifications documentation. No Trade Secrets. Microsoft does not claim any trade secret rights in this documentation. Patents. Microsoft has patents that might cover your implementations of the technologies described in the Open Specifications documentation. Neither this notice nor Microsoft's delivery of this documentation grants any licenses under those patents or any other Microsoft patents. However, a given Open Specifications document might be covered by the Microsoft Open Specifications Promise or the Microsoft Community Promise. If you would prefer a written license, or if the technologies described in this documentation are not covered by the Open Specifications Promise or Community Promise, as applicable, patent licenses are available by contacting [email protected].