Temporal Interpolation of Geostationary Satellite Imagery with Task Specific Optical Flow

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Generation of GOES-16 True Color Imagery Without a Green Band

Confidential manuscript submitted to Earth and Space Science. Generation of GOES-16 True Color Imagery without a Green Band. M.K. Bah1, M. M. Gunshor1, T. J. Schmit2. 1 Cooperative Institute for Meteorological Satellite Studies (CIMSS), 1225 West Dayton Street, Madison, University of Wisconsin-Madison, Madison, Wisconsin, USA. 2 NOAA/NESDIS Center for Satellite Applications and Research, Advanced Satellite Products Branch (ASPB), Madison, Wisconsin, USA. Corresponding Author: Kaba Bah: ([email protected]). Key Points: • The Advanced Baseline Imager (ABI) is the latest generation Geostationary Operational Environmental Satellite (GOES) imagers operated by the U.S. The ABI is improved in many ways over preceding GOES imagers. • There are a number of approaches to generating true color images; all approaches that use the GOES-16 ABI need to first generate the visible “green” spectral band. • Comparisons are shown between different methods for generating true color images from the ABI observations and those from the Earth Polychromatic Imaging Camera (EPIC) on Deep Space Climate Observatory (DSCOVR). Abstract: A number of approaches have been developed to generate true color images from the Advanced Baseline Imager (ABI) on the Geostationary Operational Environmental Satellite (GOES)-16. GOES-16 is the first of a series of four spacecraft with the ABI onboard. These approaches are complicated since the ABI does not have a “green” (0.55 µm) spectral band. Despite this limitation, representative true color images can be built.

The Progress of Fengyun Satellite Program and Its Applications

The Progress of Fengyun Satellite Program and Its Applications. Qifeng LU, National Satellite Meteorological Center, China Meteorological Administration (NSMC/CMA). United Nations/China Forum on Space Solutions: Realizing the Sustainable Development Goals (2019/5/3). Outline: Fengyun Program Overview; Current Missions and Services; Latest Progress; Future Programs. 1. Fengyun Program Overview. Chinese FENGYUN Meteorological Satellites. Polar system: first generation FY-1 A, B, C, D; second generation FY-3 A, B, C, D, with FY-3E, F, G planned until 2025. Geostationary system: first generation FY-2 A, B, C, D, E, F, G, H; second generation FY-4A, with FY-4B, C, D planned until 2025. Launched Satellites: China began developing its own meteorological satellites in January 1969. LEO launch dates: FY-1A, Sept. 7, 1988; FY-1B, Sept. 3, 1990; FY-1C, May 10, 1999; FY-1D, May 15, 2002; FY-3A, May 27, 2008; FY-3B, Nov. 5, 2010; FY-3C, Sept. 23, 2013; FY-3D, Nov. 15, 2017. GEO launch dates: FY-2A, Jun. 10, 1997; FY-2B, Jun. 25, 2000; FY-2C, Oct. 18, 2004; FY-2D, Dec. 8, 2006; FY-2E, Dec. 23, 2008; FY-2F, Jan. 13, 2012; FY-2G, Dec. 31, 2014; FY-4A, Dec. 11, 2016; FY-2H, Jun. 5, 2018. Overall Development Strategy (4 stages): 1) 1970 - 1990: Conducting satellite research and development 2) 1990 - 2000: Implementing transition from R&D to operational 3) 2000 - 2010: Implementing transition from 1st generation to 2nd generation 4) 2010 - 2020: Pursuing accuracy and precision of satellite measurements. International User Community: Global Data; Regional Data (map labels: Kangerlussuaq, Svalbard, Lannion, Maspalomas, Athens). FY-3C sounding data have been assimilated into CMA GRAPES, ECMWF, EUMETSAT Advanced UK NWP model operationally.

An Improved Global Remote-Sensing-Based Surface Soil Moisture (RSSSM) Dataset Covering 2003–2018

Earth Syst. Sci. Data, 13, 1–31, 2021 https://doi.org/10.5194/essd-13-1-2021 © Author(s) 2021. This work is distributed under the Creative Commons Attribution 4.0 License. An improved global remote-sensing-based surface soil moisture (RSSSM) dataset covering 2003–2018. Yongzhe Chen1,2, Xiaoming Feng1, and Bojie Fu1,2. 1State Key Laboratory of Urban and Regional Ecology, Research Center for Eco-Environmental Sciences, Chinese Academy of Sciences, Beijing 100085, China. 2College of Resources and Environment, University of Chinese Academy of Sciences, Beijing 100049, China. Correspondence: Xiaoming Feng ([email protected]). Received: 9 March 2020 – Discussion started: 8 May 2020 – Revised: 13 November 2020 – Accepted: 18 November 2020 – Published: 5 January 2021. Abstract. Soil moisture is an important variable linking the atmosphere and terrestrial ecosystems. However, long-term satellite monitoring of surface soil moisture at the global scale needs improvement. In this study, we conducted data calibration and data fusion of 11 well-acknowledged microwave remote-sensing soil moisture products since 2003 through a neural network approach, with Soil Moisture Active Passive (SMAP) soil moisture data applied as the primary training target. The training efficiency was high (R² = 0.95) due to the selection of nine quality impact factors of microwave soil moisture products and the complicated organizational structure of multiple neural networks (five rounds of iterative simulations, eight substeps, 67 independent neural networks, and more than 1 million localized subnetworks). Then, we developed the global remote-sensing-based surface soil moisture dataset (RSSSM) covering 2003–2018 at 0.1° resolution. The temporal resolution is approximately 10 d, meaning that three data records are obtained within a month, for days 1–10, 11–20, and from the 21st to the last day of that month.
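The roughly 10-day record windows described at the end of the excerpt above (days 1–10, 11–20, and 21 to month end) can be sketched as a small date lookup. This is only an illustration of the stated rule: the function name `record_window` is hypothetical and is not taken from the RSSSM dataset or its tooling.

```python
import calendar
from datetime import date
from typing import Tuple


def record_window(d: date) -> Tuple[date, date]:
    """Return the (first day, last day) of the ~10-day window containing d.

    Hypothetical helper: it only encodes the rule quoted in the abstract
    (days 1-10, 11-20, and 21 to the last day of the month).
    """
    if d.day <= 10:
        start_day, end_day = 1, 10
    elif d.day <= 20:
        start_day, end_day = 11, 20
    else:
        start_day = 21
        # monthrange returns (weekday of the 1st, number of days in the month)
        end_day = calendar.monthrange(d.year, d.month)[1]
    return date(d.year, d.month, start_day), date(d.year, d.month, end_day)


if __name__ == "__main__":
    print(record_window(date(2016, 2, 25)))
```

The third window varies in length (8 to 11 days), which is why the excerpt calls the temporal resolution "approximately" 10 days.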

2007 Chinese Anti-Satellite Test Fact Sheet

2007 Chinese Anti-Satellite Test Fact Sheet. Updated November 23, 2010. Main Author: Brian Weeden, [email protected] www.swfound.org. Summary: On January 11, 2007, China launched a ballistic missile from Xichang Space Launch Center. The payload was a kinetic kill vehicle (KKV) that collided with a non-operational Chinese weather satellite, the Fengyun-1C (FY-1C), at an altitude of 863 km (534 mi), completely destroying the satellite. This is known as a direct ascent antisatellite (ASAT) attack, where the KKV does not enter into orbit but instead travels through space on a ballistic arc. The destruction created a cloud of more than 3,000 pieces of space debris, the largest ever tracked, and much of it will remain in orbit for decades, posing a significant collision threat to other space objects in Low Earth Orbit (LEO). The Satellite: The Fengyun 1 (FY-1) series represents China’s first meteorological satellite system. Between 1998 and 2005, China launched a total of four FY satellites into Sun-synchronous orbits (SSO), FY-1C through FY-1D, with an average altitude of 900 kilometers and an inclination of 99°. The FY-1C and its support systems were designed and developed by the Shanghai Satellite Engineering and Research Centre of the China Academy of Space Technology (CAST), and FY-1’s payload was developed by the Shanghai Technical Physics Institute of the Chinese Academy of Sciences. [Figure: FengYun-1C Satellite] The Missile: The Chinese ballistic missile used for the 2007 ASAT test was labeled the SC-19 by the U.S. military, and is believed to be a modified version of the DF-21 two-stage, road-mobile, solid-fuel, medium-range ballistic missile (MRBM), also known as the CSS-5. The DF-21 has a nominal ballistic range of 2,500 km for a 600 kg payload, which if it was used means the SC-19 has a rough upper altitude limit of 1,000 to 1,200 km when used as a direct-ascent ASAT.

Introducing the New Generation of Chinese Geostationary Weather Satellites, Fengyun-4

INTRODUCING THE NEW GENERATION OF CHINESE GEOSTATIONARY WEATHER SATELLITES, FENGYUN-4. JUN YANG, ZHIQING ZHANG, CAIYING WEI, FENG LU, AND QIANG GUO. Fengyun-4 is the new generation of Chinese geostationary meteorological satellites with greatly enhanced capabilities for high-impact weather event monitoring, warning, and forecasting.

On 7 September 1988, the Long March rocket carried the Fengyun-1A (FY-1A) polar-orbiting satellite into orbit, marking the start of the Chinese Fengyun (FY; meaning wind and cloud in Chinese) meteorological satellite observing systems program. On 10 June 1997, the FY-2A geostationary satellite was successfully launched and the Chinese meteorological satellite program took a large step toward its goal of establishing both polar-orbiting and geostationary observational systems. Over time the Fengyun satellites have become increasingly important for protecting lives and property from natural disasters in China. The Chinese FY satellites are launched as a series. The odd numbers denote the polar-orbiting satellite series, and the even numbers denote the geostationary satellite series. After launch, a letter is appended to indicate the order in the satellite series; for instance, FY-2F is the sixth satellite that has been launched in the first generation of the Chinese geostationary satellites (FY-2).

FY-2 satellites carry the Visible and Infrared Spin Scan Radiometer (VISSR) capable of imagery in five spectral bands. Derived products include atmospheric motion vectors (AMVs), sea surface temperatures (SSTs), total precipitable water vapor (TPW), quantitative precipitation estimations (QPEs), fire locations and intensity, surface albedo, and several others. The FY-4 introduces a new generation of Chinese geostationary meteorological satellites, with the first ...

TABLE 1. FY-2D/FY-2E overlap region observation schedule. Full-disc and north-disc observations are abbreviated as F and N, respectively.

| Time (UTC) | Region | Satellite |
|---|---|---|
| 0000 | F | FY-2E |
| 0015 | F | FY-2D |
| 0030 | N | FY-2E |
| 0045 | N | FY-2D |
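The naming convention stated in the excerpt above (odd series numbers for polar-orbiting satellites, even for geostationary, and a letter giving the launch order within the series) can be sketched as a small parser. The function name `parse_fy_designation` and the returned dictionary keys are hypothetical, chosen for illustration only; the sketch assumes designations of the form `FY-<number><letter>`.

```python
import re
import string


def parse_fy_designation(name: str) -> dict:
    """Decode a Fengyun designation such as 'FY-2F' (illustrative helper).

    Assumes the convention quoted in the excerpt: odd series number means
    polar-orbiting, even means geostationary, and the trailing letter gives
    the order in the series (A = 1st, B = 2nd, ...).
    """
    match = re.fullmatch(r"FY-(\d+)([A-Z])", name.strip().upper())
    if match is None:
        raise ValueError(f"not a recognised FY designation: {name!r}")
    series = int(match.group(1))
    letter = match.group(2)
    orbit = "polar-orbiting" if series % 2 == 1 else "geostationary"
    order = string.ascii_uppercase.index(letter) + 1
    return {"series": series, "orbit": orbit, "order_in_series": order}


if __name__ == "__main__":
    print(parse_fy_designation("FY-2F"))
```

For `FY-2F` this yields series 2, geostationary, sixth in the series, matching the example given in the excerpt.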

China Dream, Space Dream: China's Progress in Space Technologies and Implications for the United States

China Dream, Space Dream 中国梦,航天梦: China’s Progress in Space Technologies and Implications for the United States. A report prepared for the U.S.-China Economic and Security Review Commission. Kevin Pollpeter, Eric Anderson, Jordan Wilson, Fan Yang. Acknowledgements: The authors would like to thank Dr. Patrick Besha and Dr. Scott Pace for reviewing a previous draft of this report. They would also like to thank Lynne Bush and Bret Silvis for their master editing skills. Of course, any errors or omissions are the fault of authors. Disclaimer: This research report was prepared at the request of the Commission to support its deliberations. Posting of the report to the Commission's website is intended to promote greater public understanding of the issues addressed by the Commission in its ongoing assessment of U.S.-China economic relations and their implications for U.S. security, as mandated by Public Law 106-398 and Public Law 108-7. However, it does not necessarily imply an endorsement by the Commission or any individual Commissioner of the views or conclusions expressed in this commissioned research report. CONTENTS: Acronyms; Executive Summary; Introduction

A Preliminary Analysis of the Chinese ASAT Test Geoff Forden MIT

A Preliminary Analysis of the Chinese ASAT Test. Geoff Forden, MIT. 1. A Review of the Fengyun 1C physical and orbital properties 2. Estimates for the Interceptor booster 3. An analysis of the debris pattern from the collision 4. Possible use of China’s phased array radar facility 5. Optical vs. Radar guidance 6. Conclusions. The Target Satellite: dimensions 8.8 m, ~1.8 m; Mass: 880 kg. At time of interception: Longitudinal speed: 7.42 km/s; Altitude: 856 km. Orbital parameters: Apogee: 875 km; Inclination: 98.59°. Launched May 10, 1999, the Fengyun 1C satellite broadcast on the 137 MHz band reserved for meteorological satellites. The satellite carried high density reaction wheels (for keeping the solar cells pointed toward the sun) and batteries which could be important for colliding with the interceptor. Fengyun-1C was placed in a sun-synchronous orbit: The orbital plane of the Fengyun 1C satellite precesses around the Earth in 24 hours so that it keeps the same position with respect to the sun and the Earth. While the interception occurred during the night at the launch site, the satellite remained fully illuminated by the sun because of its 860 km altitude. There is no evidence that China changed the orbit of the target satellite anytime in the week before the interception. These correspond to the TLEs from 3 January to 11 January 2007. Taking the satellite TLEs for the week before the collision and extrapolating forward toward the time of the collision gives a very small bunching. This indicates that the Chinese did not maneuver the satellite prior to the interception, contrary to some reports in the press.

(Cssar) Chinese Academy of Sciences

CSSAR Space Science Cooperation. WANG Shuzhi, Center for Space Science and Applied Research, Chinese Academy of Sciences (CSSAR). Table of Contents: Brief History of CSSAR; International Cooperation; CAS Strategic Pioneer Research Program on Space Science. Part 1: Brief History of CSSAR. CSSAR was set up by CAS in October 1958, dedicated to the development of the first Chinese satellite. Jeoujang Jaw was the first Director of CSSAR. CSSAR was a main participant in the first Chinese satellite, DFH-1, which was launched on April 24, 1970, and has participated in many Chinese space science and satellite programs, including manned space flight programs, SJ series satellites, Fengyun series satellites, Chang’e series satellites… (Chang’E-1, FengYun-3, Haiyang-2). Part 2: International Cooperation. International Space Weather Meridian Circle Program (ISWMCP): the ISWMCP proposes to connect the 120°E and 60°W meridian chains of ground-based observatories and enhance the ability to monitor the space environment worldwide. China + Russia + Australia + Canada + Brazil … international Meridian project. Cooperation with ESA: established a stable cooperative relationship with ESA; annual bilateral meetings on space science held since 2004; joint missions: DSP, KUAFU, HXMT, YH-1. Double Star Project: two satellites running in equatorial and polar orbits; measurement of Earth's magnetosphere with field and particle detectors; cooperation with ESA, with nearly 50% of science payloads provided by European countries; forming a six-point measurement with ESA’s Cluster mission, the first time in human history, to explore geospace. Double Star Project awards: National Award on Scientific and Technological Advancement, 2010; Laurels Team Achievement Award, International Academy of Astronautics, 2010. China-Russia Joint Research Center on Space Weather (2000- ): more than 100 joint publications; 10 bilateral scientific workshops; cooperative program with more than ten institutes involved between Russia and China. Yinghuo-1: Yinghuo-1 is the first Chinese Mars exploration program, will be launched Nov.

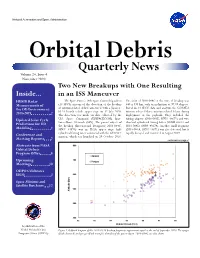

Quarterly News Volume 24, Issue 4 November 2020 Two New Breakups with One Resulting Inside

National Aeronautics and Space Administration. Orbital Debris Quarterly News, Volume 24, Issue 4, November 2020. A publication of the NASA Orbital Debris Program Office. Inside: HUSIR Radar Measurements of the OD Environment: 2018-2019; Updated Solar Cycle Predictions for OD Modeling; Conference and Meeting Reports; Abstracts from NASA Orbital Debris Program Office; Upcoming Meetings; ODPO Celebrates ISS20; Space Missions and Satellite Box Score. Two New Breakups with One Resulting in an ISS Maneuver. The Space Force’s 18th Space Control Squadron (18 SPCS) announced the detection of the breakup of mission-related debris associated with a Japanese H-2A launch vehicle upper stage on 12 July 2020. The detection was made via data collected by the U.S. Space Command (USSPACECOM) Space Surveillance Network (SSN). The parent object of the breakup (International Designator 2018-084C, SSN# 43673) was an H-2A upper stage half-cylindrical fairing cover associated with the GOSAT-2 mission, which was launched on 29 October 2018. The orbit of 2018-084C at the time of breakup was 643 x 595 km, with an inclination of 97.89 degrees. Based on 18 SPCS’ data and analysis, the GOSAT-2 mission released three mission-related debris during deployment of the payloads. They included the fairing adapter (2018-084E, SSN# 43675) and two identical cylindrical fairing halves (SSN# 43673 and 2018-084D, SSN# 43674). Another small fragment (2018-084A, SSN# 43671) was also detected, but it rapidly decayed and reentered in August 2019. [Figure 1: apogee and perigee altitude (km) versus orbital period (min); caption not recovered.]

CGMS-37 Report

REPORT OF THE 37th MEETING OF THE COORDINATION GROUP FOR METEOROLOGICAL SATELLITES, CGMS-37, Jeju Island, South Korea, 26-30 October 2009. Cover photo: Jeju Island, South Korea (courtesy of NASA). Please note that this report is published together with a CD-ROM containing an electronic version of the report and all working papers presented at CGMS-37. Report edited on behalf of CGMS by: CGMS Secretariat, EUMETSAT, Eumetsat Allee 1, D-64295 Darmstadt, Germany, www.eumetsat.int. CGMS MR 37 © 2 February 2010 EUMETSAT. TABLE OF CONTENTS: FINAL REPORT OF THE PLENARY SESSION; A. INTRODUCTION; B. REPORT ON THE STATUS OF CURRENT SATELLITE SYSTEMS; C. REPORT ON FUTURE SATELLITE SYSTEMS; D. OPERATIONAL CONTINUITY AND RELIABILITY; E. SATELLITE REQUIREMENTS OF WMO AND IOC; F. INTERACTION WITH GEO; G. WORKING GROUP REPORTS; H. OTHER ITEMS OF INTEREST; I. FINAL SESSION

Future NOAA Satellites Planning for Microwave Remote Sensing Readiness Robbie Iacovazzi, Quanhua “Mark” Liu, and Changyong Cao

Meeting Summary. Future NOAA Satellites Planning for Microwave Remote Sensing Readiness. Robbie Iacovazzi, Quanhua “Mark” Liu, and Changyong Cao. 2020 Community Meeting on NOAA Satellites: Informing the Future of NOAA Satellite Observations. What: NOAA met with data users, partners, and stakeholders to communicate strategic NESDIS objectives and current activities and future plans for the Geostationary Extended Observations, low-Earth-orbit, and space weather programs, and garner feedback to help inform these important decisions. When: 29 September–2 October 2020. Where: Virtual. KEYWORDS: Instrumentation/sensors; Microwave observations; Remote sensing; Satellite observations; Numerical weather prediction/forecasting. https://doi.org/10.1175/BAMS-D-20-0291.1 Corresponding author: Robbie Iacovazzi, [email protected] In final form 5 March 2021. ©2021 American Meteorological Society. For information regarding reuse of this content and general copyright information, consult the AMS Copyright Policy. AFFILIATIONS: Iacovazzi—GST, Inc., Greenbelt, Maryland; Liu and Cao—NOAA/NESDIS/STAR, College Park, Maryland. AMERICAN METEOROLOGICAL SOCIETY, MAY 2021, E1123. COVID-19 has changed our daily life and reshaped the venue and format of international science conferences. The first Community Meeting on NOAA Satellites was held virtually via WebEx between 29 September and 2 October 2020. More than a thousand people registered for the meeting from more than 250 organizations across 33 countries. The meeting focused on operational space-based environmental observing system planning developments out to the 2030–50 time frame. It emphasized the needs for user-driven data-source-agnostic environmental products, as well as disaggregated satellite observing systems and enterprise ground segment solutions that can deliver those products.

China Aerospace Studies Institute Sep 2020 1 the Emergence of China's Commercial Space Companies and Start-Ups Erika Solem, CA

The Emergence of China’s Commercial Space Companies and Start-Ups Erika Solem, CASI Associate Introduction As a legacy space power, China has a strong foundation for its current efforts and pursuit of space development. In recent years, the nation’s space program and capabilities have grown at an impressive pace. Recent achievements include the Chang’e lunar probe missions, deployment of the Tianwen-1 Mars probe, and the reported completion of the BeiDou global navigation satellite system (GNSS).1 The history of China’s space program is inextricably linked with its military; but its overall space strategy in recent years has broadened to align closer to emerging global trends such as space commercialization. Multiple developments in China’s national policy and overall space strategy have lent support to the shift of space-related research and development away from the military and towards the private sector. However, China’s remaining state-owned defense industrial base, initiatives such as military-civil fusion (MCF), and competitive industrial policy show that China’s opening of the space sector still aligns with its strategic national competitive goals and remains tied to the state and People’s Liberation Army (PLA). Historically, global space development was concentrated in the government sphere and activities were carried out by government agencies, the military, and legacy government contractors. However, in more recent years, commercial space companies have been emerging in both established and developing space powers around the world. In 2019, start-up space companies garnered $5.7 billion in funding, which is a $2.2 billion increase from the previous year.