Logic and Computation

Recommended publications

-

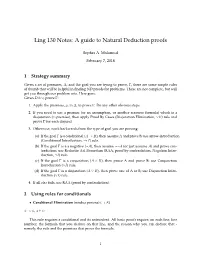

Ling 130 Notes: a Guide to Natural Deduction Proofs

Ling 130 Notes: A guide to Natural Deduction proofs Sophia A. Malamud February 7, 2018 1 Strategy summary Given a set of premises, ∆, and the goal you are trying to prove, Γ, there are some simple rules of thumb that will be helpful in finding ND proofs for problems. These are not complete, but will get you through our problem sets. Here goes: Given ∆, prove Γ: 1. Apply the premises, p_i in ∆, to prove Γ. Do any other obvious steps. 2. If you need to use a premise (or an assumption, or another resource formula) which is a disjunction (∨-premise), then apply Proof By Cases (Disjunction Elimination, ∨E) rule and prove Γ for each disjunct. 3. Otherwise, work backwards from the type of goal you are proving: (a) If the goal Γ is a conditional (A → B), then assume A and prove B; use arrow-introduction (Conditional Introduction, →I) rule. (b) If the goal Γ is a negative (¬A), then assume ¬¬A (or just assume A) and prove contradiction; use Reductio Ad Absurdum (RAA, proof by contradiction, Negation Introduction, ¬I) rule. (c) If the goal Γ is a conjunction (A ∧ B), then prove A and prove B; use Conjunction Introduction (∧I) rule. (d) If the goal Γ is a disjunction (A ∨ B), then prove one of A or B; use Disjunction Introduction (∨I) rule. 4. If all else fails, use RAA (proof by contradiction). 2 Using rules for conditionals • Conditional Elimination (modus ponens) (→E) φ → ψ, φ ⊢ ψ This rule requires a conditional and its antecedent. -
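
The goal-directed rules of thumb in this excerpt can be illustrated in Lean 4, with one `example` per rule. This is only a sketch under assumptions: the proposition names `A`, `B`, `φ`, `ψ` and the hypothesis names (`ha`, `hb`, `h`, `hnb`) are chosen here for illustration and do not come from the notes.

```lean
-- (a) Goal is a conditional A → B: assume A, then prove B (→I).
example (A B : Prop) (hb : B) : A → B :=
  fun _ => hb

-- (b) Goal is a negation ¬A: assume A and derive a contradiction (¬I).
example (A B : Prop) (h : A → B) (hnb : ¬B) : ¬A :=
  fun ha => hnb (h ha)

-- (c) Goal is a conjunction A ∧ B: prove A and prove B (∧I).
example (A B : Prop) (ha : A) (hb : B) : A ∧ B :=
  ⟨ha, hb⟩

-- (d) Goal is a disjunction A ∨ B: prove one disjunct (∨I).
example (A B : Prop) (ha : A) : A ∨ B :=
  Or.inl ha

-- Conditional Elimination (modus ponens, →E): φ → ψ, φ ⊢ ψ.
example (φ ψ : Prop) (h : φ → ψ) (hφ : φ) : ψ :=
  h hφ
```

In each case the shape of the proof term mirrors the shape of the goal, which is the "work backwards from the goal" idea in step 3.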

Chapter 5: Methods of Proof for Boolean Logic

Chapter 5: Methods of Proof for Boolean Logic § 5.1 Valid inference steps Conjunction elimination Sometimes called simplification. From a conjunction, infer any of the conjuncts. • From P ∧ Q, infer P (or infer Q). Conjunction introduction Sometimes called conjunction. From a pair of sentences, infer their conjunction. • From P and Q, infer P ∧ Q. § 5.2 Proof by cases This is another valid inference step (it will form the rule of disjunction elimination in our formal deductive system and in Fitch), but it is also a powerful proof strategy. In a proof by cases, one begins with a disjunction (as a premise, or as an intermediate conclusion already proved). One then shows that a certain consequence may be deduced from each of the disjuncts taken separately. One concludes that that same sentence is a consequence of the entire disjunction. • From P ∨ Q, and from the fact that S follows from P and S also follows from Q, infer S. The general proof strategy looks like this: if you have a disjunction, then you know that at least one of the disjuncts is true—you just don’t know which one. So you consider the individual “cases” (i.e., disjuncts), one at a time. You assume the first disjunct, and then derive your conclusion from it. You repeat this process for each disjunct. So it doesn’t matter which disjunct is true—you get the same conclusion in any case. Hence you may infer that it follows from the entire disjunction. In practice, this method of proof requires the use of “subproofs”—we will take these up in the next chapter when we look at formal proofs. -
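
The proof-by-cases step described in this excerpt can be written as a short Lean 4 term. This is a minimal sketch, assuming the names `P`, `Q`, `S` for the sentences and `hp`, `hq` for the two case derivations; none of these names come from the chapter.

```lean
-- From P ∨ Q, a derivation of S from P, and a derivation of S from Q, infer S.
example (P Q S : Prop) (h : P ∨ Q) (hp : P → S) (hq : Q → S) : S :=
  Or.elim h hp hq
```

The two function arguments play the role of the "subproofs" mentioned in the text: each one assumes a single disjunct and derives the common conclusion.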

Proof Theory Can Be Viewed As the General Study of Formal Deductive Systems

Proof Theory Jeremy Avigad December 19, 2017 Abstract Proof theory began in the 1920’s as a part of Hilbert’s program, which aimed to secure the foundations of mathematics by modeling infinitary mathematics with formal axiomatic systems and proving those systems consistent using restricted, finitary means. The program thus viewed mathematics as a system of reasoning with precise linguistic norms, governed by rules that can be described and studied in concrete terms. Today such a viewpoint has applications in mathematics, computer science, and the philosophy of mathematics. Keywords: proof theory, Hilbert’s program, foundations of mathematics 1 Introduction At the turn of the nineteenth century, mathematics exhibited a style of argumentation that was more explicitly computational than is common today. Over the course of the century, the introduction of abstract algebraic methods helped unify developments in analysis, number theory, geometry, and the theory of equations, and work by mathematicians like Richard Dedekind, Georg Cantor, and David Hilbert towards the end of the century introduced set-theoretic language and infinitary methods that served to downplay or suppress computational content. This shift in emphasis away from calculation gave rise to concerns as to whether such methods were meaningful and appropriate in mathematics. The discovery of paradoxes stemming from overly naive use of set-theoretic language and methods led to even more pressing concerns as to whether the modern methods were even consistent. This led to heated debates in the early twentieth century and what is sometimes called the “crisis of foundations.” In lectures presented in 1922, Hilbert launched his Beweistheorie, or Proof Theory, which aimed to justify the use of modern methods and settle the problem of foundations once and for all. -

Starting the Dismantling of Classical Mathematics

Australasian Journal of Logic Starting the Dismantling of Classical Mathematics Ross T. Brady La Trobe University Melbourne, Australia Dedicated to Richard Routley/Sylvan, on the occasion of the 20th anniversary of his untimely death. 1 Introduction Richard Sylvan (né Routley) has been the greatest influence on my career in logic. We met at the University of New England in 1966, when I was a Master's student and he was one of my lecturers in the M.A. course in Formal Logic. He was an inspirational leader, who thought his own thoughts and was not afraid to speak his mind. I hold him in the highest regard. He was very critical of the standard Anglo-American way of doing logic, the so-called classical logic, which can be seen in everything he wrote. One of his many critical comments was: “Gödel's (First) Theorem would not be provable using a decent logic”. This contribution, written to honour him and his works, will examine this point among some others. Hilbert referred to non-constructive set theory based on classical logic as “Cantor's paradise”. In this historical setting, the constructive logic and mathematics concerned was that of intuitionism. (The Preface of Mendelson [2010] refers to this.) We wish to start the process of dismantling this classical paradise, and more generally classical mathematics. Our starting point will be the various diagonal-style arguments, where we examine whether the Law of Excluded Middle (LEM) is implicitly used in carrying them out. This will include the proof of Gödel's First Theorem, and also the proof of the undecidability of Turing's Halting Problem. -

Deep Inference

Deep Inference Alessio Guglielmi University of Bath 1 Introduction Deep inference could succinctly be described as an extreme form of linear logic [12]. It is a methodology for designing proof formalisms that generalise Gentzen formalisms, i.e. the sequent calculus and natural deduction [11]. In a sense, deep inference is obtained by applying some of the main concepts behind linear logic to the formalisms, i.e., to the rules by which proof systems are designed. By doing so, we obtain a better proof theory than the traditional one due to Gentzen. In fact, in deep inference we can provide proof systems for more logics, in a more regular and modular way, with smaller proofs, less syntax, less bureaucracy and we have a chance to make substantial progress towards a solution to the century-old problem of the identity of proofs. The first manuscript on deep inference appeared in 1999 and the first refereed papers in 2001 [6, 19]. So far, two formalisms have been designed and developed in deep inference: the calculus of structures [15] and open deduction [17]. A third one, nested sequents [5], introduces deep inference features into a more traditional Gentzen formalism. Essentially, deep inference tries to understand proof composition and proof normalisation (in a very liberal sense including cut elimination [11]) in the most logic-agnostic way. Thanks to it we obtain a deeper understanding of the nature of normalisation. It seems that normalisation is a primitive, simple phenomenon that manifests itself in more or less complicated ways that depend more on the choice of representation for proofs rather than their true mathematical nature. -

Ten Basic Rules of Inference

TEN BASIC RULES OF INFERENCE. Negation Introduction (~I – indirect proof IP): assume p, get q & ~q ⊢ ~p. Disjunction Introduction (vI – addition ADD): p ⊢ p v q. Disjunction Elimination (vE – version of CD): p v q, p → r, q → r ⊢ r. Negation Elimination (~E – version of DN): ~~p → p. Conditional Introduction (→I – conditional proof CP): assume p, get q ⊢ p → q. Conditional Elimination (→E – modus ponens MP): p → q, p ⊢ q. Biconditional Introduction (↔I – version of ME): p → q, q → p ⊢ p ↔ q. Biconditional Elimination (↔E – version of ME): p ↔ q ⊢ p → q or ⊢ q → p. Conjunction Introduction (&I – conjunction CONJ): p, q ⊢ p & q. Conjunction Elimination (&E – simplification SIMP): p & q ⊢ p. IMPORTANT DERIVED RULES OF INFERENCE. Modus Tollens (MT): p → q, ~q ⊢ ~p. Constructive Dilemma (CD): p v q, p → r, q → s ⊢ r v s. Hypothetical Syllogism (HS): p → q, q → r ⊢ p → r. Repeat (RE): p ⊢ p. Disjunctive Syllogism (DS): p v q, ~p ⊢ q. Contradiction (CON): p, ~p ⊢ any wff. Absorption (ABS): p → q ⊢ p → (p & q). Theorem Introduction (TI): introduce any tautology, e.g., ~(P & ~P). EQUIVALENCES. De Morgan's Law (DM): ~(p & q) :: (~p v ~q); ~(p v q) :: (~p & ~q). Material Implication (MI): (p → q) :: (~p v q). Commutation (COM): (p v q) :: (q v p); (p & q) :: (q & p). Material Equivalence (ME): (p ↔ q) :: [(p & q) v (~p & ~q)]; (p ↔ q) :: [(p → q) & (q → p)]. Association (ASSOC): [p v (q v r)] :: [(p v q) v r]; [p & (q & r)] :: [(p & q) & r]. Exportation (EXP): [(p & q) → r] :: [p → (q → r)]. Tautology (TAUT): p :: (p & p); p :: (p v p). Distribution (DIST): [p & (q v r)] :: [(p & q) v (p & r)]; [p v (q & r)] :: [(p v q) & (p v r)]. Conditional-Biconditional Refutation Tree Rules: ~(p → q) :: (p & ~q); ~(p ↔ q) :: [(p & ~q) v (~p & q)]. Double Negation (DN): p :: ~~p. Transposition (TRANS): (p → q) :: (~q → ~p). CATEGORICAL SYLLOGISM RULES (e.g., Ǝx(Fx) / ⊢ Fy). -
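
Several of the derived rules and equivalences on this sheet can be checked mechanically. The Lean 4 sketch below proves Modus Tollens, Hypothetical Syllogism, one direction-pair of De Morgan's Law, and Exportation. The hypothesis names are assumptions made for illustration. Only rules that hold constructively are shown; equivalences such as ~(p & q) :: (~p v ~q) need classical reasoning in Lean and are left out of this sketch.

```lean
-- Modus Tollens (MT): p → q, ~q ⊢ ~p
example (p q : Prop) (h : p → q) (hnq : ¬q) : ¬p :=
  fun hp => hnq (h hp)

-- Hypothetical Syllogism (HS): p → q, q → r ⊢ p → r
example (p q r : Prop) (h₁ : p → q) (h₂ : q → r) : p → r :=
  fun hp => h₂ (h₁ hp)

-- De Morgan (DM): ~(p v q) :: (~p & ~q)
example (p q : Prop) : ¬(p ∨ q) ↔ (¬p ∧ ¬q) :=
  ⟨fun h => ⟨fun hp => h (Or.inl hp), fun hq => h (Or.inr hq)⟩,
   fun hn h => h.elim hn.1 hn.2⟩

-- Exportation (EXP): [(p & q) → r] :: [p → (q → r)]
example (p q r : Prop) : ((p ∧ q) → r) ↔ (p → (q → r)) :=
  ⟨fun h hp hq => h ⟨hp, hq⟩,
   fun h hpq => h hpq.1 hpq.2⟩
```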

Systematic Construction of Natural Deduction Systems for Many-Valued Logics

23rd International Symposium on Multiple Valued Logic. Sacramento, CA, May 1993 Proceedings. (IEEE Press, Los Alamitos, 1993) pp. 208–213 Systematic Construction of Natural Deduction Systems for Many-valued Logics Matthias Baaz, Christian G. Fermüller, Richard Zach Technische Universität Wien, Austria Abstract A construction principle for natural deduction systems for arbitrary finitely-many-valued first order logics is exhibited. These systems are systematically obtained from sequent calculi, which in turn can be automatically extracted from the truth tables of the logics under consideration. Soundness and cut-free completeness of these sequent calculi translate into soundness, completeness and normal form theorems for the natural deduction systems. 1 Introduction The study of natural deduction systems for many- […] …sion.) Each position i corresponds to one of the truth values, vm is the distinguished truth value. The intended meaning is as follows: Derive that at least one formula of Γm takes the value vm under the assumption that no formula in Γi takes the value vi (1 ≤ i ≤ m − 1). Our starting point for the construction of natural deduction systems are sequent calculi. (A sequent is a tuple Γ1 | … | Γm, defined to be satisfied by an interpretation iff for some i ∈ {1, …, m} at least one formula in Γi takes the truth value vi.) For each pair of an operator □ or quantifier Q and a truth value vi we construct a rule introducing a formula of the form □(A1, …, An) or (Qx)A(x), respectively, at position i of a sequent. -

Propositional Logic

Chapter 3 Propositional Logic 3.1 ARGUMENT FORMS This chapter begins our treatment of formal logic. Formal logic is the study of argument forms, abstract patterns of reasoning shared by many different arguments. The study of argument forms facilitates broad and illuminating generalizations about validity and related topics. We shall initially focus on the notion of deductive validity, leaving inductive arguments to a later treatment (Chapters 8 to 10). Specifically, our concern in this chapter will be with the idea that a valid deductive argument is one whose conclusion cannot be false while the premises are all true (see Section 2.3). By studying argument forms, we shall be able to give this idea a very precise and rigorous characterization. We begin with three arguments which all have the same form: (1) Today is either Monday or Tuesday. Today is not Monday. ∴ Today is Tuesday. (2) Either Rembrandt painted the Mona Lisa or Michelangelo did. Rembrandt didn't do it. ∴ Michelangelo did. (3) Either he's at least 18 or he's a juvenile. He's not at least 18. ∴ He's a juvenile. It is easy to see that these three arguments are all deductively valid. Their common form is known by logicians as disjunctive syllogism, and can be represented as follows: Either P or Q. It is not the case that P. ∴ Q. The letters 'P' and 'Q' function here as placeholders for declarative sentences. We shall call such letters sentence letters. Each argument which has this form is obtainable from the form by replacing the sentence letters with sentences, each occurrence of the same letter being replaced by the same sentence. -
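
The disjunctive syllogism form described in this excerpt can be stated once for arbitrary sentence letters and then checked. The Lean 4 sketch below does this; the hypothesis names `h` and `hn` are assumptions made for illustration, and the proof is one possible derivation, not the one given in the chapter.

```lean
-- Either P or Q. It is not the case that P. ∴ Q.
example (P Q : Prop) (h : P ∨ Q) (hn : ¬P) : Q :=
  h.elim
    (fun hp => absurd hp hn)  -- case P: contradicts ¬P, so anything follows
    (fun hq => hq)            -- case Q: the conclusion itself
```

Because `P` and `Q` are universally quantified, the single proof covers every argument obtained by substituting sentences for the sentence letters, which is the sense in which validity belongs to the form.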

Complexities of Proof-Theoretical Reductions

Complexities of Proof-Theoretical Reductions Michael Toppel Submitted in accordance with the requirements for the degree of Doctor of Philosophy The University of Leeds Department of Pure Mathematics September 2016 The candidate confirms that the work submitted is his own and that appropriate credit has been given where reference has been made to the work of others. This copy has been supplied on the understanding that it is copyright material and that no quotation from the thesis may be published without proper acknowledgement. © 2016 The University of Leeds and Michael Toppel Abstract The present thesis is a contribution to a project that is carried out by Michael Rathjen and Andreas Weiermann to give a general method to study the proof-complexity of $\Sigma^0_1$-sentences. This general method uses the generalised ordinal-analysis that was given by Buchholz, Rüede and Strahm in [5] and [44] as well as the generalised characterisation of provable-recursive functions of PA + TI(≺ α) that was given by Weiermann in [60]. The present thesis links these two methods by giving an explicit elementary bound for the proof-complexity increment that occurs after the transition from the theory $\widehat{\mathrm{ID}}{}^{i}_{\omega}$ + TI(≺ α), which was used by Rüede and Strahm, to the theory PA + TI(≺ α), which was analysed by Weiermann. Contents: Abstract; Contents; Introduction; 1 Justifying the use of Multi-Conclusion Sequents (1.1 Introduction; 1.2 A Gentzen-semantics; 1.3 Multi-Conclusion Sequents; 1.4 Comparison with other Positions and Responses to Objections). -

Logical Verification Course Notes

Logical Verification Course Notes Femke van Raamsdonk Vrije Universiteit Amsterdam autumn 2008 Contents: 1 1st-order propositional logic (1.1 Formulas; 1.2 Natural deduction for intuitionistic logic; 1.3 Detours in minimal logic; 1.4 From intuitionistic to classical logic; 1.5 1st-order propositional logic in Coq). 2 Simply typed λ-calculus (2.1 Types; 2.2 Terms; 2.3 Substitution; 2.4 Beta-reduction; 2.5 Curry-Howard-De Bruijn isomorphism; 2.6 Coq). 3 Inductive types (3.1 Expressivity; 3.2 Universes of Coq; 3.3 Inductive types; 3.4 Coq; 3.5 Inversion). 4 1st-order predicate logic (4.1 Terms and formulas; 4.2 Natural deduction; 4.2.1 Intuitionistic logic; 4.2.2 Minimal logic; 4.3 Coq). 5 Program extraction (5.1 Program specification; 5.2 Proof of existence; 5.3 Program extraction; 5.4 Insertion sort; 5.5 Coq). 6 λ-calculus with dependent types (6.1 Dependent types: introduction; 6.2 λP; 6.3 Predicate logic in λP). 7 Second-order propositional logic (7.1 Formulas; 7.2 Intuitionistic logic; 7.3 Minimal logic; 7.4 Classical logic). 8 Polymorphic λ-calculus (8.1 Polymorphic types: introduction; 8.2 λ2; 8.3 Properties of λ2; 8.4 Expressiveness of λ2 -

An Integration of Resolution and Natural Deduction Theorem Proving

From: AAAI-86 Proceedings. Copyright ©1986, AAAI (www.aaai.org). All rights reserved. An Integration of Resolution and Natural Deduction Theorem Proving Dale Miller and Amy Felty Computer and Information Science University of Pennsylvania Philadelphia, PA 19104 Abstract: We present a high-level approach to the integration of such different theorem proving technologies as resolution and natural deduction. This system represents natural deduction proofs as λ-terms and resolution refutations as the types of such λ-terms. These type structures, called expansion trees, are essentially formulas in which substitution terms are attached to quantifiers. As such, this approach to proofs and their types extends the formulas-as-type notion found in proof theory. The LCF notion of tactics and tacticals can also be extended to incorporate proofs as typed λ-terms. Such extended tacticals can be used to program different interactive and automatic natural deduction theorem provers. […] …ble of translating between them. In order to achieve this goal, we have designed a programming language which permits proof structures as values and types. This approach builds on and extends the LCF approach to natural deduction theorem provers by replacing the LCF notion of a validation with explicit term representation of proofs. The terms which represent proofs are given types which generalize the formulas-as-type notion found in proof theory [Howard, 1969]. Resolution refutations are seen as specifying the type of a natural deduction proof. This high level view of proofs as typed terms can be easily combined with more standard aspects of LCF to yield the integration for which […] Explicit representation of proofs -

Inversion by Definitional Reflection and the Admissibility of Logical Rules

THE REVIEW OF SYMBOLIC LOGIC Volume 2, Number 3, September 2009 INVERSION BY DEFINITIONAL REFLECTION AND THE ADMISSIBILITY OF LOGICAL RULES WAGNER DE CAMPOS SANZ Faculdade de Filosofia, Universidade Federal de Goiás THOMAS PIECHA Wilhelm-Schickard-Institut, Universität Tübingen Abstract. The inversion principle for logical rules expresses a relationship between introduction and elimination rules for logical constants. Hallnäs & Schroeder-Heister (1990, 1991) proposed the principle of definitional reflection, which embodies basic ideas of inversion in the more general context of clausal definitions. For the context of admissibility statements, this has been further elaborated by Schroeder-Heister (2007). Using the framework of definitional reflection and its admissibility interpretation, we show that, in the sequent calculus of minimal propositional logic, the left introduction rules are admissible when the right introduction rules are taken as the definitions of the logical constants and vice versa. This generalizes the well-known relationship between introduction and elimination rules in natural deduction to the framework of the sequent calculus. §1. Inversion principle. The idea of inverting logical rules can be found in a well-known remark by Gentzen: “The introductions are so to say the ‘definitions’ of the symbols concerned, and the eliminations are ultimately only consequences hereof, what can approximately be expressed as follows: In eliminating a symbol, the formula concerned – of which the outermost symbol is in question – may only ‘be used as that what it means on the ground of the introduction of that symbol’.” The inversion principle itself was formulated by Lorenzen (1955) in the general context of rule-based systems and is thus not restricted to logical rules.