Automatic Whiteout++: Correcting Mini-QWERTY Typing Errors Using Keypress Timing

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Mac Keyboard Shortcuts Cut, Copy, Paste, and Other Common Shortcuts

Mac keyboard shortcuts. By pressing a combination of keys, you can do things that normally need a mouse, trackpad, or other input device. To use a keyboard shortcut, hold down one or more modifier keys while pressing the last key of the shortcut. For example, to use the shortcut Command-C (copy), hold down Command, press C, then release both keys. Mac menus and keyboards often use symbols for certain keys, including the modifier keys: Command ⌘, Option ⌥, Caps Lock ⇪, Shift ⇧, Control ⌃, and Fn. If you're using a keyboard made for Windows PCs, use the Alt key instead of Option, and the Windows logo key instead of Command. Some Mac keyboards and shortcuts use special keys in the top row, which include icons for volume, display brightness, and other functions. Press the icon key to perform that function, or combine it with the Fn key to use it as an F1, F2, F3, or other standard function key. To learn more shortcuts, check the menus of the app you're using. Every app can have its own shortcuts, and shortcuts that work in one app may not work in another.
Cut, copy, paste, and other common shortcuts (shortcut, then description):
Command-X: Cut. Remove the selected item and copy it to the Clipboard.
Command-C: Copy the selected item to the Clipboard. This also works for files in the Finder.
Command-V: Paste the contents of the Clipboard into the current document or app. This also works for files in the Finder.
Command-Z: Undo the previous command. You can then press Command-Shift-Z to Redo, reversing the undo command.

DEC Text Processing Utility Reference Manual

DEC Text Processing Utility Reference Manual. Order Number: AA–PWCCD–TE. April 2001. This manual describes the elements of the DEC Text Processing Utility (DECTPU). It is intended as a reference manual for experienced programmers. Revision/Update Information: This manual supersedes the DEC Text Processing Utility Reference Manual, Version 3.1 for OpenVMS Version 7.2. Software Version: DEC Text Processing Utility Version 3.1 for OpenVMS Alpha Version 7.3 and OpenVMS VAX Version 7.3. The content of this document has not changed since OpenVMS Version 7.1. Compaq Computer Corporation, Houston, Texas. © 2001 Compaq Computer Corporation. COMPAQ, VAX, VMS, and the Compaq logo Registered in U.S. Patent and Trademark Office. OpenVMS is a trademark of Compaq Information Technologies Group, L.P. Motif is a trademark of The Open Group. PostScript is a registered trademark of Adobe Systems Incorporated. All other product names mentioned herein may be the trademarks or registered trademarks of their respective companies. Confidential computer software. Valid license from Compaq or authorized sublicensor required for possession, use, or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor’s standard commercial license. Compaq shall not be liable for technical or editorial errors or omissions contained herein. The information in this document is provided "as is" without warranty of any kind and is subject to change without notice. The warranties for Compaq products are set forth in the express limited warranty statements accompanying such products.

OpenVMS: An Introduction

The Operating System Handbook or, Fake Your Way Through Minis and Mainframes, by Bob DuCharme. VMS Table of Contents:
Chapter 7 OpenVMS: An Introduction
7.1 History
7.1.1 Today
7.1.1.1 Popular VMS Software
7.1.2 VMS, DCL
Chapter 8 Getting Started with OpenVMS
8.1 Starting Up
8.1.1 Finishing Your VMS Session
8.1.1.1 Reconnecting
8.1.2 Entering Commands
8.1.2.1 Retrieving Previous Commands
8.1.2.2 Aborting Screen Output

(12) Patent Application Publication (10) Pub. No.: US 2010/0120003 A1 Herman (43) Pub

US 20100120003A1. (19) United States. (12) Patent Application Publication, Herman. (10) Pub. No.: US 2010/0120003 A1. (43) Pub. Date: May 13, 2010. (54) HOLISTIC APPROACH TO LEARNING TO TYPE. (75) Inventor: Rita P. Herman, Pittsburgh, PA (US). Correspondence Address: THE WEBB LAW FIRM, P.C., 700 KOPPERS BUILDING, 436 SEVENTH AVENUE, PITTSBURGH, PA 15219 (US). (73) Assignee: Keyboard Town PALS, LLC, Pittsburgh, PA (US). (21) Appl. No.: 12/269,916. (22) Filed: Nov. 13, 2008. Publication Classification: (51) Int. Cl. G09B 13/00 (2006.01). (52) U.S. Cl. 434/227.
(57) ABSTRACT: The present invention is directed to a method of teaching typing wherein the student associates the keys on the keyboard with a person, place, thing or emotion and is able to more readily learn the position of each key. Further, the method of the present invention provides immediate gratification to the student as they are able to instantly visually and auditorily appreciate the accuracy of their typing on a computer monitor or screen that is attached to the keyboard. The delete key and/or backspace key may be inactivated during operation of the program. Further, the color of the letters and background displayed on the monitor or screen may be variable.
[Drawing sheets 1 to 4 of 4 omitted.]

Chapter 4 Using a Word Processor

Chapter 4 Using a Word Processor. Word is the Microsoft Office word processor application. This chapter introduces Word for creating letters and simple documents. Modifying a document and collaborating on a document are explained.
What is a Word Processor? A word processor is a computer application for creating, modifying, printing, and e-mailing documents. It is used to produce easy-to-read, professional-looking documents such as letters, résumés, and reports. The Microsoft Word 2003 window looks similar to: [screenshot of the Word window omitted]
The Word window displays information about a document and includes tools for working with documents:
• The file name of the current document is displayed in the title bar. The name Document1 is used temporarily until the document is saved with a descriptive name.
• Select commands from menus in the menu bar.
• Click a button on the toolbar to perform an action. Click the New Blank Document button to create a new document.
• The rulers show the paper size. Markers on the rulers are used for formatting text.
• The vertical line is the insertion point that indicates where the next character typed will be placed. In a new document the insertion point is in the upper-left corner. It blinks to draw attention to its location.
• View information about the current document in the status bar.
• Links for opening a document or creating a new document are in the Getting Started task pane.
Sidebar, Overtype Mode: Overtype mode means that as text is typed it replaces existing text, instead of inserting characters. Overtype mode is on if the OVR indicator on the

COMPUTER Class – I Chapter-6 Part 1 COMPUTER KEYBOARD

COMPUTER Class – I Chapter-6 Part 1 COMPUTER KEYBOARD. A pencil or a pen is needed to write in a notebook, but we cannot use a pencil or pen for writing on a computer. You have to use the keyboard to write or enter information and instructions into a computer. It looks like a typewriter’s keyboard. It has many small buttons on it. These buttons are called keys. Whatever we type on the keyboard appears on the monitor at the cursor position. The cursor is a small blinking line on the monitor. There are usually 104 keys on the keyboard. There are different types of keys to perform different tasks. The different types of keys are as follows: ❖ Alphabet Keys ❖ Number Keys ❖ Function Keys ❖ Special Keys
ALPHABET KEYS: These keys are used for typing letters and words. The letters A to Z are written on these keys. The alphabet keys resemble the keys of a typewriter. Because its first row of letters begins Q, W, E, R, T, Y, it is called a QWERTY keyboard/keypad.
NUMBER KEYS: These are keys with numbers (0-9) written on them. These keys are used to type numbers. One set of number keys is placed above the alphabet keys and another set is on the right side of the keyboard. The numeric keypad can be used only when NUM LOCK is ON.
EXERCISES 1. Fill in the blanks: a. Small buttons on the keyboard are called -------------. b. There are usually ------------ keys on the keyboard. c. Keyboard is used to enter ------------- and -------------- into the computer.

Title Keyboard : All Special Keys : Enter, Del, Shift, Backspace ,Tab … Contributors Dhanya.P Std II Reviewers Submission Approval Date Date Ref No

Title: Keyboard: All special keys: Enter, Del, Shift, Backspace, Tab … Contributors: Dhanya.P. Std: II. Brief Description: This topic describes the special keys on the keyboard of a computer and their functionalities. Goal: To familiarize the special keys on the keyboard of a computer. Pre-requisites: Familiarity with computer. Learning Outcome: Concepts that special keys on a keyboard have special functionalities. Duration: One Period. References: http://www.ckls.org/~crippel/computerlab/tutorials/keyboard/ and http://computer.howstuffworks.com/. Sections: Detailed Description, Lesson Plan, Worksheet, Evaluation, Other Notes.
Detailed Description: A computer keyboard is a peripheral, partially modeled after the typewriter keyboard. Keyboards are designed for the input of text and characters.
Special Keys: Function Keys, Cursor Control Keys, Esc Key, Control Key, Shift Key, Enter Key, Tab Key, Insert Key, Delete Key, ScrollLock Key, NumLock Key, CapsLock Key, Pause/Break Key, PrtScr Key.
Function Keys: F1 through F12 are the function keys. They have special purposes. The following are the main purposes of the function keys, but they may vary according to the software currently running.
# F1 - Help
# F2 - Renames selected file
# F3 - Opens the file search box
# F4 - Opens the address bar in Windows Explorer
# F5 - Refreshes the screen in Windows Explorer
# F6 - Navigates between different sections of a Windows Explorer window
# F8 - Opens the start-up menu when booting Windows
# F11 - Opens full screen mode in Explorer

Emulator User's Reference

Personal Communications for Windows, Version 5.8, Emulator User’s Reference, SC31-8960-00. Note: Before using this information and the product it supports, read the information in “Notices,” on page 217. First Edition (September 2004). This edition applies to Version 5.8 of Personal Communications (program number: 5639–I70) and to all subsequent releases and modifications until otherwise indicated in new editions. © Copyright International Business Machines Corporation 1989, 2004. All rights reserved. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.
Contents:
Figures
Tables
About This Book
Who Should Read This Book
How to Use This Book
Command Syntax Symbols
Where to Find More Information
InfoCenter
Online Help
Personal Communications Library
Related Publications
Contacting IBM
Support Options
Part 1. General Information
Using PDT Files
Double-Byte Character Support
Printing to Disk
Workstation Profile Parameter for Code Page
Chapter 5. Key Functions and Keyboard Setup
Default Key Function Assignments
Setting the 3270 Keyboard Layout Default
Default Key Functions for a 3270 Layout
Setting the 5250 Keyboard Layout Default
Default Key Functions for a 5250 Layout
Default Key Functions for the Combined Package
Setting the VT Keyboard Layout Default
Default Key Functions for the VT Emulator Layout
Keyboard Setup (3270 and 5250)
Keyboard File
Win32 Cut, Copy, and Paste Hotkeys

Who Moved My 'Delete' Key? Lenovo Did. Here's Why. 25 June 2009, by JESSICA MINTZ, AP Technology Writer

Who moved my 'Delete' key? Lenovo did. Here's why. 25 June 2009, by JESSICA MINTZ, AP Technology Writer.
[Image caption: In this product image released by Lenovo, the Lenovo Thinkpad T400S Laptop keyboard, is shown. (AP Photo/Lenovo)]
Lenovo put nearly a year of research into two design changes that debuted on an updated ThinkPad laptop this week. No, not the thinner, lighter form or the textured touchpad - rather, the extra-large "Delete" and "Escape" keys. It may seem like a small change, but David Hill, vice president of corporate identity and design at Lenovo, points out, "Any time you start messing around with the keyboard, people get nervous."
Typewriter technology evolved. Mainframe computing led to function keys and others of uncertain use today. The PC era dawned. Yet many laws of keyboard layout remain sacred, like the 19-millimeter distance between the centers of the letter keys. Tom Hardy, who designed the original IBM PC of 1981, said companies have tried many times to change the sizes of keys. That first PC had a smaller "Shift" key than IBM's popular Selectric typewriter did, and it was placed in a different spot, in part because the industry didn't think computers would replace typewriters for high-volume typing tasks. IBM reversed course with the next version to quiet the outcry from skilled touch-typists. "Customers have responded with a resounding, 'Don't fool with the key unless you can improve it,'" said Hardy, now a design strategist based in Atlanta.
PC makers relearned this lesson in the past year, as netbooks - tiny, cheap laptops - have become popular with budget-conscious consumers.

Using Backspace & Delete Keys

DELETE TEXT IN WORD 2010. http://www.tutorialspoint.com/word/word_delete_text.htm Copyright © tutorialspoint.com. It is very common to delete text and retype the content in your Word document. You might type something you did not want to type, or there may be something extra that is not required in the document. Regardless of the reason, Word offers you various ways of deleting part or all of the content of the document.
Using Backspace & Delete Keys: The most basic deletion technique is to delete characters one at a time by pressing either the Backspace or the Delete key. The following table describes how you can delete a single character or a whole word by using either of these two keys (SN, Keys & Deletion Methods):
1. Backspace: Keep the insertion point just after the character you want to delete and press the Backspace key. Word deletes the character immediately to the left of the insertion point.
2. Ctrl + Backspace: Keep the insertion point just after the word you want to delete and press Ctrl + Backspace. Word deletes the whole word immediately to the left of the insertion point.
3. Delete: Keep the insertion point just before the character you want to delete and press the Delete key. Word deletes the character immediately to the right of the insertion point.
4. Ctrl + Delete: Keep the insertion point just before the word you want to delete and press Ctrl + Delete. Word deletes the word immediately to the right of the insertion point.
Using Selection Method: You have learnt how to select various parts of a Word document.
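The four deletion rules in the excerpt above can be modeled on a plain string with an integer insertion point. The following is only a rough sketch: the function names are hypothetical, a "word" is assumed to be a run of non-space characters together with the spaces next to it, and none of this is Word's actual implementation.

```python
import re

# Hypothetical model: `text` is the document, `pos` is the insertion point
# (0 = before the first character). Each function returns (new_text, new_pos).

def backspace(text: str, pos: int) -> tuple[str, int]:
    """Delete the character immediately to the left of the insertion point."""
    if pos == 0:
        return text, pos
    return text[:pos - 1] + text[pos:], pos - 1

def delete(text: str, pos: int) -> tuple[str, int]:
    """Delete the character immediately to the right of the insertion point."""
    return text[:pos] + text[pos + 1:], pos

def ctrl_backspace(text: str, pos: int) -> tuple[str, int]:
    """Delete the word to the left (assumed: non-space run plus trailing spaces)."""
    match = re.search(r"\S+\s*$", text[:pos])
    start = match.start() if match else 0
    return text[:start] + text[pos:], start

def ctrl_delete(text: str, pos: int) -> tuple[str, int]:
    """Delete the word to the right (assumed: non-space run plus adjacent spaces)."""
    match = re.match(r"\s*\S+\s*", text[pos:])
    end = pos + (match.end() if match else 0)
    return text[:pos] + text[end:], pos

if __name__ == "__main__":
    print(backspace("cart", 4))
    print(delete("cart", 0))
    print(ctrl_backspace("hello world", 11))
    print(ctrl_delete("hello world", 0))
```

Returning the new insertion point alongside the text makes the left/right asymmetry visible: Backspace moves the insertion point, Delete leaves it where it was.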

The ASCII Character Set

Appendix: The ASCII Character Set. The various sorting, text processing, and character handling operations for which Unix utilities are available assume that characters are ordered in the sequence prescribed by the ASCII character set. An ASCII (American Standard Code for Information Interchange) character is defined as a string of 7 bits which represents a printable character or a nonprintable control function. For example, 1 101 000 represents the printable character h, while 0 001 000 represents the backspace function. For ease in reading, the binary digits are often written in groups of three, and even more frequently each group of three is given its natural numerical interpretation, that is, an octal representation is used. Thus 1 101 000 is normally written as 150, the groups 101 and 000 having been interpreted as the octal numbers 5 and 0, respectively. Decimal and hexadecimal representations, in which 1 101 000 appears as 104 or 68, are also commonly used. Since an ASCII character is exactly seven bits long, 128 distinct characters can be formed. These are given in Table A.1, The ASCII Character Set. Perhaps surprisingly, there are some installation-dependent differences between printed symbols and the corresponding ASCII bit configurations. For example, 043 (0 100 011) is rendered as the crosshatched "pounds" sign in American practice and as the "pounds sterling" symbol on many printers in Britain. Some other British printers and terminals substitute the "pounds sterling" sign for the dollar sign. There is no ambiguity, however, about the alphabetic and numeric characters, nor about the mathematical operators.
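The conversions described in this excerpt can be checked with a few lines of Python. This is a small illustrative sketch, not part of the quoted appendix; the helper name `ascii_forms` is made up for the example.

```python
def ascii_forms(ch: str) -> dict:
    """Return the 7-bit binary (grouped 1+3+3), octal, decimal and hex forms of ch."""
    code = ord(ch)
    if code > 127:
        raise ValueError("not a 7-bit ASCII character")
    bits = format(code, "07b")                        # zero-padded to exactly 7 bits
    grouped = f"{bits[0]} {bits[1:4]} {bits[4:]}"     # the grouping used in the text
    return {
        "binary": grouped,
        "octal": format(code, "03o"),                 # each 3-bit group is one octal digit
        "decimal": code,
        "hex": format(code, "02x"),
    }

if __name__ == "__main__":
    for ch in ("h", "\b", "#"):
        print(repr(ch), ascii_forms(ch))
```

For `h` this gives binary `1 101 000`, octal `150`, decimal `104` and hexadecimal `68`; backspace is `0 001 000`, and `#` is octal `043`.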

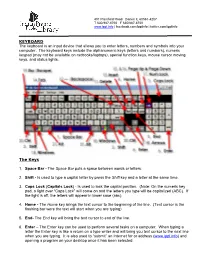

KEYBOARD: The Keyboard Is an Input Device That Allows You to Enter Letters, Numbers and Symbols Into Your Computer. The Keyboard

401 Plainfield Road Darien IL 60561-4207 T 630/887-8760 F 630/887-8760 www.ippl.info | facebook.com/ipplinfo | twitter.com/ipplinfo
KEYBOARD: The keyboard is an input device that allows you to enter letters, numbers and symbols into your computer. The keyboard keys include the alphanumeric keys (letters and numbers), numeric keypad (may not be available on netbooks/laptops), special function keys, mouse cursor moving keys, and status lights.
The Keys
1. Space Bar - The Space Bar puts a space between words or letters.
2. Shift - Is used to type a capital letter by pressing the Shift key and a letter at the same time.
3. Caps Lock (Capitals Lock) - Is used to lock the capital position. (Note: On the numeric keypad, a light over “Caps Lock” will come on and the letters you type will be capitalized (ABC). If the light is off, the letters will appear in lower case (abc).)
4. Home - The Home key brings the text cursor to the beginning of the line. (The text cursor is the flashing bar where the text will start when you are typing.)
5. End - The End key will bring the text cursor to the end of the line.
6. Enter - The Enter key can be used to perform several tasks on a computer. When typing a letter, the Enter key is like a return on a typewriter and will bring your text cursor to the next line. It is also used to “submit” an Internet form or address (www.ippl.info) and to open a program on your desktop once it has been selected.