Computer Hardware

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

1 Introduction

Cambridge University Press 978-0-521-76992-1 - Microprocessor Architecture: From Simple Pipelines to Chip Multiprocessors Jean-Loup Baer Excerpt More information 1 Introduction Modern computer systems built from the most sophisticated microprocessors and extensive memory hierarchies achieve their high performance through a combina- tion of dramatic improvements in technology and advances in computer architec- ture. Advances in technology have resulted in exponential growth rates in raw speed (i.e., clock frequency) and in the amount of logic (number of transistors) that can be put on a chip. Computer architects have exploited these factors in order to further enhance performance using architectural techniques, which are the main subject of this book. Microprocessors are over 30 years old: the Intel 4004 was introduced in 1971. The functionality of the 4004 compared to that of the mainframes of that period (for example, the IBM System/370) was minuscule. Today, just over thirty years later, workstations powered by engines such as (in alphabetical order and without specific processor numbers) the AMD Athlon, IBM PowerPC, Intel Pentium, and Sun UltraSPARC can rival or surpass in both performance and functionality the few remaining mainframes and at a much lower cost. Servers and supercomputers are more often than not made up of collections of microprocessor systems. It would be wrong to assume, though, that the three tenets that computer archi- tects have followed, namely pipelining, parallelism, and the principle of locality, were discovered with the birth of microprocessors. They were all at the basis of the design of previous (super)computers. The advances in technology made their implementa- tions more practical and spurred further refinements. -

Instruction Latencies and Throughput for AMD and Intel X86 Processors

Instruction latencies and throughput for AMD and Intel x86 processors Torbj¨ornGranlund 2019-08-02 09:05Z Copyright Torbj¨ornGranlund 2005{2019. Verbatim copying and distribution of this entire article is permitted in any medium, provided this notice is preserved. This report is work-in-progress. A newer version might be available here: https://gmplib.org/~tege/x86-timing.pdf In this short report we present latency and throughput data for various x86 processors. We only present data on integer operations. The data on integer MMX and SSE2 instructions is currently limited. We might present more complete data in the future, if there is enough interest. There are several reasons for presenting this report: 1. Intel's published data were in the past incomplete and full of errors. 2. Intel did not publish any data for 64-bit operations. 3. To allow straightforward comparison of an important aspect of AMD and Intel pipelines. The here presented data is the result of extensive timing tests. While we have made an effort to make sure the data is accurate, the reader is cautioned that some errors might have crept in. 1 Nomenclature and notation LNN means latency for NN-bit operation.TNN means throughput for NN-bit operation. The term throughput is used to mean number of instructions per cycle of this type that can be sustained. That implies that more throughput is better, which is consistent with how most people understand the term. Intel use that same term in the exact opposite meaning in their manuals. The notation "P6 0-E", "P4 F0", etc, are used to save table header space. -

TA770XE3 Motherboard

TA770XE3 Motherboard • Supported Socket AM3 processors AMD Phenom II X4 / Phenom II X3 / Athlon II processor • AMD 125W processor support • AMD 770 Chipset • Dual-Channel DDR3 -1600(OC)/1333/1066/800 • AMD OverDrive Utility Support TA770XE3 Specifcation CPU SUPPORT AMD Phenom™ II X6 Processor AMD Phenom™ II X4 Processor AMD Phenom™ II X3 Processor AMD Phenom™ II X2 Processor AMD Athlon™ II X4 Processor AMD Athlon™ II X3 Processor AMD Athlon™ II X2 Processor Maximum CPU TDP (Thermal Design Power) : 125Watt HT Support HT 5.2G MEMORY Support Dual Channel DDR3 800/1066/1333/1600(OC) MHz 4 x DDR3 DIMM Memory Slot Max. Supports up to 16GB Memory STORAGE 6 x SATA II Connector BIOSTAR1 x IDE Connector Support SATA RAID: 0,1,10 LAN Realtek RTL8111DL - 10/100/1000 Controller AUDIO CODEC Realtek ALC662 6-Channel HD Audio USB 6 x USB 2.0 Port 3 x USB 2.0 Header EXPANSION SLOT 1 x PCI-E 2.0 x16 Slot 2 x PCI-E 2.0 x1 Slot 3 x PCI Slot REAR I/O 1 x PS/2 Mouse 1 x PS/2 Keyboard 6 x USB 2.0 Port 1 x LAN Port 3 x Audio Jacks INTERNAL I/O 3 x USB 2.0 Header 6 x SATA II Connector (3Gb/s ) 1 x IDE Connector 1 x Floppy Connector 1 x Front Audio Header 1 x Front Panel Header 1 x CD-IN Header 1 x S/PDIF-Out Header 1 x CPU Fan Connector 2 x System Fan Connector 1 x COM Port Header DIMENSION ATX Form Factor Dimension: 30.5cm X 22cm ( W x L ) OS SUPPORT Supports Windows XP / Vista / 7 ACCESSORIES 3 x SATA Cable 1 x SATA Power Cable 1 x I/O Shield 1 x Fully Setup Driver CD 1 x User Manual FEATURES Supports BIO-unlocKING Windows 7 Compatible Supports Phenom II x6 Supports AM3 Supports 100% Solid capacitor Supports Fanless Supports Clean-Tone Supports O.N.E Supports Rapid Switch Supports Rapid Debug BIOS-Flasher Supports Toverclcoker OC software BIOSTARSupports GPU TA770XE3 OVERVIEW *The specification and pictures are subject to change without notice and the package contents may differ by area or your motherboard version!. -

Motherboard Gigabyte Ga-A75n-Usb3

Socket AM3+ - AMD 990FX - GA-990FXA-UD5 (rev. 1.x) Socket AM3+ Pagina 1/3 GA- Model 990FXA- Motherboard UD5 PCB 1.x Since L2 L3Core System vendor CPU Model Frequency Process Stepping Wattage BIOS Name Cache Cache Bus(MT/s) Version AMD FX-8150 3600MHz 1MBx8 8MB Bulldozer 32nm B2 125W 5200 F5 AMD FX-8120 3100MHz 1MBx8 8MB Bulldozer 32nm B2 125W 5200 F5 AMD FX-8120 3100MHz 1MBx8 8MB Bulldozer 32nm B2 95W 5200 F5 AMD FX-8100 2800MHz 1MBx8 8MB Bulldozer 32nm B2 95W 5200 F5 AMD FX-6100 3300MHz 1MBx6 8MB Bulldozer 32nm B2 95W 5200 F5 AMD FX-4100 3600MHz 1MBx4 8MB Bulldozer 32nm B2 95W 5200 F5 Socket AM3 GA- Model 990FXA- Motherboard UD5 PCB 1.x Since L2 L3Core System vendor CPU Model Frequency Process Stepping Wattage BIOS Name Cache Cache Bus(MT/s) Version AMD Phenom II X6 1100T 3300MHz 512KBx6 6MB Thuban 45nm E0 125W 4000 F2 AMD Phenom II X6 1090T 3200MHz 512KBx6 6MB Thuban 45nm E0 125W 4000 F2 AMD Phenom II X6 1075T 3000MHz 512KBx6 6MB Thuban 45nm E0 125W 4000 F2 AMD Phenom II X6 1065T 2900MHz 512KBx6 6MB Thuban 45nm E0 95W 4000 F2 AMD Phenom II X6 1055T 2800MHz 512KBx6 6MB Thuban 45nm E0 125W 4000 F2 AMD Phenom II X6 1055T 2800MHz 512KBx6 6MB Thuban 45nm E0 95W 4000 F2 AMD Phenom II X6 1045T 2700MHz 512KBx6 6MB Thuban 45nm E0 95W 4000 F2 AMD Phenom II X6 1035T 2600MHz 512KBx6 6MB Thuban 45nm E0 95W 4000 F2 AMD Phenom II X4 980 3700MHz 512KBx4 6MB Deneb 45nm C3 125W 4000 F6 AMD Phenom II X4 975 3600MHz 512KBx4 6MB Deneb 45nm C3 125W 4000 F2 AMD Phenom II X4 970 3500MHz 512KBx4 6MB Deneb 45nm C3 125W 4000 F2 AMD Phenom II X4 965 3400MHz 512KBx4 -

Multiprocessing Contents

Multiprocessing Contents 1 Multiprocessing 1 1.1 Pre-history .............................................. 1 1.2 Key topics ............................................... 1 1.2.1 Processor symmetry ...................................... 1 1.2.2 Instruction and data streams ................................. 1 1.2.3 Processor coupling ...................................... 2 1.2.4 Multiprocessor Communication Architecture ......................... 2 1.3 Flynn’s taxonomy ........................................... 2 1.3.1 SISD multiprocessing ..................................... 2 1.3.2 SIMD multiprocessing .................................... 2 1.3.3 MISD multiprocessing .................................... 3 1.3.4 MIMD multiprocessing .................................... 3 1.4 See also ................................................ 3 1.5 References ............................................... 3 2 Computer multitasking 5 2.1 Multiprogramming .......................................... 5 2.2 Cooperative multitasking ....................................... 6 2.3 Preemptive multitasking ....................................... 6 2.4 Real time ............................................... 7 2.5 Multithreading ............................................ 7 2.6 Memory protection .......................................... 7 2.7 Memory swapping .......................................... 7 2.8 Programming ............................................. 7 2.9 See also ................................................ 8 2.10 References ............................................. -

Amd Athlon Ii X2 270 Manual

Amd Athlon Ii X2 270 Manual Specifications. Please visit AMD Athlon II X2 215 (rev. C3) and AMD Athlon II X2 270 pages for more detailed specifications. Review, Differences, Benchmarks, Specifications, Comments Athlon II X2 270. CPUBoss recommends the AMD Athlon II X2 270 based on its. See full details. Specifications. Please visit AMD Athlon II X2 270 and AMD Athlon II X2 280 pages for more detailed specifications of both. Far Cry 4 on AMD Athlon x2 340(Dual Core) 4GB RAM HD 6570 PC Specifications. Specifications. Please visit AMD Athlon II X2 270 and AMD FX-6300 pages for more detailed specifications of both. AMD Athlon II x2 260 (3.2GHz) Although the specifications of this cpu list 74C as the max temp, i prefer to stick to the old "65C max" rule-of-thumb for amd cpus. Amd Athlon Ii X2 270 Manual Read/Download AMD Athlon II X2 270u. 2 GHz, Dual core. Front view of AMD Athlon II X2 270u. 5.9 Out of 10. VS Review, Differences, Benchmarks, Specifications, Comments. Photos of the AMD Athlon II X2 270 Black Edition from the KitGuru Price Comparison Engine. Specifications. Please visit AMD Athlon II X2 270 and AMD FX-4300 pages for more detailed specifications of both. SPECIFICATIONS : Model : AMD Athlon II X2 270. CPU Clock Speed : 3.4 GHz. Core : 2. Total L2 Cache : 2 MB Sockets : Socket AM2+,Socket AM3 Supported. up vote -5 down vote favorite. These are my specifications: AMD Athlon II x2 270 CPU, AMD 760g GPU, 4GB RAM. grand-theft-auto-5. -

Exam 1 Solutions

Midterm Exam ECE 741 – Advanced Computer Architecture, Spring 2009 Instructor: Onur Mutlu TAs: Michael Papamichael, Theodoros Strigkos, Evangelos Vlachos February 25, 2009 EXAM 1 SOLUTIONS Problem Points Score 1 40 2 20 3 15 4 20 5 25 6 20 7 (bonus) 15 Total 140+15 • This is a closed book midterm. You are allowed to have only two letter-sized cheat sheets. • No electronic devices may be used. • This exam lasts 1 hour 50 minutes. • If you make a mess, clearly indicate your final answer. • For questions requiring brief answers, please provide brief answers. Do not write an essay. You can be penalized for verbosity. • Please show your work when needed. We cannot give you partial credit if you do not clearly show how you arrive at a numerical answer. • Please write your name on every sheet. EXAM 1 SOLUTIONS Problem 1 (Short answers – 40 points) i. (3 points) A cache has the block size equal to the word length. What property of program behavior, which usually contributes to higher performance if we use a cache, does not help the performance if we use THIS cache? Spatial locality ii. (3 points) Pipelining increases the performance of a processor if the pipeline can be kept full with useful instructions. Two reasons that often prevent the pipeline from staying full with useful instructions are (in two words each): Data dependencies Control dependencies iii. (3 points) The reference bit (sometimes called “access” bit) in a PTE (Page Table Entry) is used for what purpose? Page replacement The similar function is performed by what bit or bits in a cache’s tag store entry? Replacement policy bits (e.g. -

Trends in Processor Architecture

A. González Trends in Processor Architecture Trends in Processor Architecture Antonio González Universitat Politècnica de Catalunya, Barcelona, Spain 1. Past Trends Processors have undergone a tremendous evolution throughout their history. A key milestone in this evolution was the introduction of the microprocessor, term that refers to a processor that is implemented in a single chip. The first microprocessor was introduced by Intel under the name of Intel 4004 in 1971. It contained about 2,300 transistors, was clocked at 740 KHz and delivered 92,000 instructions per second while dissipating around 0.5 watts. Since then, practically every year we have witnessed the launch of a new microprocessor, delivering significant performance improvements over previous ones. Some studies have estimated this growth to be exponential, in the order of about 50% per year, which results in a cumulative growth of over three orders of magnitude in a time span of two decades [12]. These improvements have been fueled by advances in the manufacturing process and innovations in processor architecture. According to several studies [4][6], both aspects contributed in a similar amount to the global gains. The manufacturing process technology has tried to follow the scaling recipe laid down by Robert N. Dennard in the early 1970s [7]. The basics of this technology scaling consists of reducing transistor dimensions by a factor of 30% every generation (typically 2 years) while keeping electric fields constant. The 30% scaling in the dimensions results in doubling the transistor density (doubling transistor density every two years was predicted in 1975 by Gordon Moore and is normally referred to as Moore’s Law [21][22]). -

Theoretical Peak FLOPS Per Instruction Set on Modern Intel Cpus

Theoretical Peak FLOPS per instruction set on modern Intel CPUs Romain Dolbeau Bull – Center for Excellence in Parallel Programming Email: [email protected] Abstract—It used to be that evaluating the theoretical and potentially multiple threads per core. Vector of peak performance of a CPU in FLOPS (floating point varying sizes. And more sophisticated instructions. operations per seconds) was merely a matter of multiplying Equation2 describes a more realistic view, that we the frequency by the number of floating-point instructions will explain in details in the rest of the paper, first per cycles. Today however, CPUs have features such as vectorization, fused multiply-add, hyper-threading or in general in sectionII and then for the specific “turbo” mode. In this paper, we look into this theoretical cases of Intel CPUs: first a simple one from the peak for recent full-featured Intel CPUs., taking into Nehalem/Westmere era in section III and then the account not only the simple absolute peak, but also the full complexity of the Haswell family in sectionIV. relevant instruction sets and encoding and the frequency A complement to this paper titled “Theoretical Peak scaling behavior of current Intel CPUs. FLOPS per instruction set on less conventional Revision 1.41, 2016/10/04 08:49:16 Index Terms—FLOPS hardware” [1] covers other computing devices. flop 9 I. INTRODUCTION > operation> High performance computing thrives on fast com- > > putations and high memory bandwidth. But before > operations => any code or even benchmark is run, the very first × micro − architecture instruction number to evaluate a system is the theoretical peak > > - how many floating-point operations the system > can theoretically execute in a given time. -

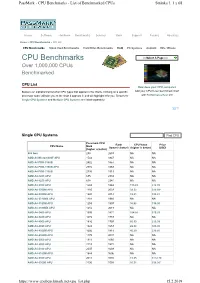

CPU Benchmarks - List of Benchmarked Cpus Stránka Č

PassMark - CPU Benchmarks - List of Benchmarked CPUs Stránka č. 1 z 68 Home Software Hardware Benchmarks Services Store Support Forums About Us Home » CPU Benchmarks » CPU List CPU Benchmarks Video Card Benchmarks Hard Drive Benchmarks RAM PC Systems Android iOS / iPhone CPU Benchmarks ----Select A Page ---- Over 1,000,000 CPUs Benchmarked CPU List How does your CPU compare? Below is an alphabetical list of all CPU types that appear in the charts. Clicking on a specific Add your CPU to our benchmark chart processor name will take you to the chart it appears in and will highlight it for you. Results for with PerformanceTest V9 ! Single CPU Systems and Multiple CPU Systems are listed separately. Single CPU Systems Find CPU Passmark CPU Rank CPU Value Price CPU Name Mark (lower is better) (higher is better) (USD) (higher is better) 686 Gen 288 2653 NA NA AMD A4 Micro-6400T APU 1544 1847 NA NA AMD A4 PRO-3340B 2402 1457 NA NA AMD A4 PRO-7300B APU 2372 1468 NA NA AMD A4 PRO-7350B 2794 1319 NA NA AMD A4-1200 APU 635 2334 NA NA AMD A4-1250 APU 618 2341 NA NA AMD A4-3300 APU 1504 1868 115.83 $12.99 AMD A4-3300M APU 1185 2033 39.52 $29.99* AMD A4-3305M APU 1220 2014 39.61 $30.81 AMD A4-3310MX APU 1281 1980 NA NA AMD A4-3320M APU 1253 1997 36.88 $34.00 AMD A4-3330MX APU 1212 2019 NA NA AMD A4-3400 APU 1593 1831 154.86 $10.29 AMD A4-3420 APU 1672 1783 NA NA AMD A4-4000 APU 1826 1707 60.90 $29.99 AMD A4-4020 APU 1924 1653 22.38 $86.00 AMD A4-4300M APU 1606 1819 40.29 $39.86 AMD A4-4355M APU 1175 2037 NA NA AMD A4-5000 APU 1911 1660 NA NA AMD A4-5050 APU 2113 1572 NA NA AMD A4-5100 APU 2037 1603 NA NA AMD A4-5150M APU 1844 1696 NA NA AMD A4-5300 APU 2011 1616 11.65 $172.70 AMD A4-5300B APU 2136 1558 56.21 $38.00* https://www.cpubenchmark.net/cpu_list.php 15. -

Energy Efficient Spin-Locking in Multi-Core Machines

Energy efficient spin-locking in multi-core machines Facoltà di INGEGNERIA DELL'INFORMAZIONE, INFORMATICA E STATISTICA Corso di laurea in INGEGNERIA INFORMATICA - ENGINEERING IN COMPUTER SCIENCE - LM Cattedra di Advanced Operating Systems and Virtualization Candidato Salvatore Rivieccio 1405255 Relatore Correlatore Francesco Quaglia Pierangelo Di Sanzo A/A 2015/2016 !1 0 - Abstract In this thesis I will show an implementation of spin-locks that works in an energy efficient fashion, exploiting the capability of last generation hardware and new software components in order to rise or reduce the CPU frequency when running spinlock operation. In particular this work consists in a linux kernel module and a user-space program that make possible to run with the lowest frequency admissible when a thread is spin-locking, waiting to enter a critical section. These changes are thread-grain, which means that only interested threads are affected whereas the system keeps running as usual. Standard libraries’ spinlocks do not provide energy efficiency support, those kind of optimizations are related to the application behaviors or to kernel-level solutions, like governors. !2 Table of Contents List of Figures pag List of Tables pag 0 - Abstract pag 3 1 - Energy-efficient Computing pag 4 1.1 - TDP and Turbo Mode pag 4 1.2 - RAPL pag 6 1.3 - Combined Components 2 - The Linux Architectures pag 7 2.1 - The Kernel 2.3 - System Libraries pag 8 2.3 - System Tools pag 9 3 - Into the Core 3.1 - The Ring Model 3.2 - Devices 4 - Low Frequency Spin-lock 4.1 - Spin-lock vs. -

On the Performance of MPI-Openmp on a 12 Nodes Multi-Core Cluster

On the Performance of MPI-OpenMP on a 12 nodes Multi-core Cluster Abdelgadir Tageldin Abdelgadir1, Al-Sakib Khan Pathan1∗ , Mohiuddin Ahmed2 1 Department of Computer Science, International Islamic University Malaysia, Gombak 53100, Kuala Lumpur, Malaysia 2 Department of Computer Network, Jazan University, Saudi Arabia [email protected] , [email protected] , [email protected] Abstract. With the increasing number of Quad-Core-based clusters and the introduction of compute nodes designed with large memory capacity shared by multiple cores, new problems related to scalability arise. In this paper, we analyze the overall performance of a cluster built with nodes having a dual Quad-Core Processor on each node. Some benchmark results are presented and some observations are mentioned when handling such processors on a benchmark test. A Quad-Core-based cluster's complexity arises from the fact that both local communication and network communications between the running processes need to be addressed. The potentials of an MPI-OpenMP approach are pinpointed because of its reduced communication overhead. At the end, we come to a conclusion that an MPI-OpenMP solution should be considered in such clusters since optimizing network communications between nodes is as important as optimizing local communications between processors in a multi-core cluster. Keywords: MPI-OpenMP, hybrid, Multi-Core, Cluster. 1 Introduction The integration of two or more processors within a single chip is an advanced technology for tackling the disadvantages exposed by a single core when it comes to increasing the speed, as more heat is generated and more power is consumed by those single cores.