UNIT 1 CHAPTER 1 Introduction 1.0 Objectives 1.1 Introduction 1.2 What


Recommended publications


A Definitive Guide to Windows 10 Management: A VMware Whitepaper

A Definitive Guide to Windows 10 Management: A VMware Whitepaper, November 2015

Table of Contents
Executive Summary
Challenges with Windows Management
How Windows 10 Differs
Windows 10 Management Features
New Methods of Updates
New Methods of Enrollment and Device Provisioning
Unified Application Experiences
Domain Joined Management
Application Delivery
Universal Applications
Classic Windows Applications
Cloud-based Applications

STATEMENT of WORK: SGS File System

ATTACHMENT A
STATEMENT OF WORK: SGS File System
DOE National Nuclear Security Administration & the DOD Maryland Office
April 25, 2001

Table of Contents
1.0 OVERVIEW
1.1 INTRODUCTION
2.0 MOTIVATION
2.1 THE NEED FOR IMPROVED FILE SYSTEMS
2.2 I/O CHARACTERIZATION OF IMPORTANT APPLICATIONS
2.3 CURRENT AND PROJECTED ENVIRONMENTS AT LLNL, LANL, SANDIA, AND THE DOD
2.4 SUMMARY OF FIVE TECHNOLOGY CATEGORIES
3.0 MINIMUM REQUIREMENTS (GO/NO-GO CRITERIA)
3.1 POSIX-LIKE INTERFACE [MANDATORY]
3.2 INTEGRATION

A Bare Machine Sensor Application for an ARM Processor

A Bare Machine Sensor Application for an ARM Processor
Alexander Peter, Ramesh K. Karne and Alexander L. Wijesinha
Department of Computer & Information Sciences, Towson, MD 21252
[email protected], (rkarne, awijesinha)@towson.edu

Abstract- Sensor devices that monitor environmental changes in temperature, sound, light, vibration and pressure usually run applications that require the support of a small operating system, lean kernel, or an embedded system. This paper presents a methodology for developing sensor device applications that can be directly run on the bare hardware without any need for middleware. Such bare sensor device applications only depend on the underlying processor architecture, enabling them to run on a variety of devices. The methodology is used for developing, designing, and implementing a temperature sensor application that runs on an ARM processor. The same methodology can be used to build other bare machine sensor device applications for ARM processors, and is easily extended to different processor

In order to build a bare machine temperature sensor device application, it requires several components as shown in Fig. 1. A brief functional description of this application is as follows. The application is loaded from the SD Card interface (Label 9) into memory using U-Boot. A temperature sensor (Label 1) is plugged into the ADB to monitor the current room temperature with a sensing granularity of 900 microseconds. As the temperature changes, its value (Label 8) and a graphic image (Label 7) is displayed on the LCD screen (Label 2). An output console is used to debug the inner working of the ADB and temperature (Label 3). An LED light (Label 4) provides a visual indication of temperature sensor operation.

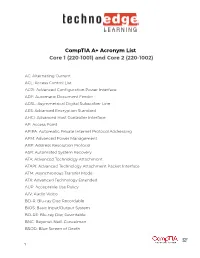

CompTIA A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002)

CompTIA A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002)

AC: Alternating Current
ACL: Access Control List
ACPI: Advanced Configuration Power Interface
ADF: Automatic Document Feeder
ADSL: Asymmetrical Digital Subscriber Line
AES: Advanced Encryption Standard
AHCI: Advanced Host Controller Interface
AP: Access Point
APIPA: Automatic Private Internet Protocol Addressing
APM: Advanced Power Management
ARP: Address Resolution Protocol
ASR: Automated System Recovery
ATA: Advanced Technology Attachment
ATAPI: Advanced Technology Attachment Packet Interface
ATM: Asynchronous Transfer Mode
ATX: Advanced Technology Extended
AUP: Acceptable Use Policy
A/V: Audio Video
BD-R: Blu-ray Disc Recordable
BIOS: Basic Input/Output System
BD-RE: Blu-ray Disc Rewritable
BNC: Bayonet-Neill-Concelman
BSOD: Blue Screen of Death
BYOD: Bring Your Own Device
CAD: Computer-Aided Design
CAPTCHA: Completely Automated Public Turing test to tell Computers and Humans Apart
CD: Compact Disc
CD-ROM: Compact Disc-Read-Only Memory
CD-RW: Compact Disc-Rewritable
CDFS: Compact Disc File System
CERT: Computer Emergency Response Team
CFS: Central File System, Common File System, or Command File System
CGA: Computer Graphics and Applications
CIDR: Classless Inter-Domain Routing
CIFS: Common Internet File System
CMOS: Complementary Metal-Oxide Semiconductor
CNR: Communications and Networking Riser
COMx: Communication port (x = port number)
CPU: Central Processing Unit
CRT: Cathode-Ray Tube
DaaS: Data as a Service
DAC: Discretionary Access Control
DB-25: Serial Communications

Application Performance and Flexibility on Exokernel Systems

Application Performance and Flexibility On Exokernel Systems
M. F. Kaashoek, D.R. Engler, G.R. Ganger et al.
Presented by Henry Monti

Exokernel
● What is an Exokernel?
● What are its advantages?
● What are its disadvantages?
● Performance
● Conclusions

The Problem
● Traditional operating systems provide both protection and resource management
● The OS protects each process's address space and manages the system's virtual memory
● Provides abstractions such as processes, files, pipes, sockets, etc.
● Problems with this?

Exokernel's Solution
● The problem is that OS designers must try to accommodate every possible use of abstractions, and choose general solutions for resource management
● This causes the performance of applications to suffer, since they are restricted to general abstractions, interfaces, and management
● Research has shown that more application control of resources yields better performance

Exokernel's Solution (Separation vs. Protection)
● An exokernel solves these problems by separating protection from management
● Creates the idea of a LibOS, which is like a virtual machine without isolation
● Moves abstractions (processes, files, virtual memory, filesystems) to user space, inside LibOSes
● Provides a low-level interface as close to the hardware as possible

General Design Principles
● Exposes as much of the hardware as possible, most of the time using hardware names, allowing libOSes access to things like individual data blocks
● Provides methods to securely bind to resources, revoke resources, and an abort protocol that the kernel itself

Operating Systems Privileged Instructions

Operating Systems
November 2, 1999. Copyright 1997 Computer Science 217: Operating System
• Operating systems manage processes and resources
• Processes are executing instances of programs; they may be the same or different programs
[Figure: process 1, process 2, and process 3, each with its own code, data, and state vector (SV)]
• State vector: information necessary to start, stop, and restart the process
• State vector: registers, program counter, memory mgmt registers, etc.
[Figure: layers and their languages. User-level processes: assembly & high-level languages. Operating system, “kernel”: machine language & system calls. Bare machine: machine language.]

Privileged Instructions
• Machines have two kinds of instructions
1. “normal” instructions, e.g., add, sub, etc.
2. “privileged” instructions, e.g., initiate I/O, switch state vectors or contexts, load/save from protected memory, etc.
• Operating systems hide privileged instructions and provide virtual instructions to access and manipulate virtual resources, e.g., I/O to and from disc files
• Virtual instructions are system calls
• Operating systems interpret virtual instructions

Processor Modes
• Machine level typically has 2 modes, e.g., “user” mode and “kernel” mode
• User mode: processor executes “normal” instructions in the user’s program; upon encountering a “privileged” instruction, processor switches to kernel mode, and the operating system performs a service
• Kernel mode: processor executes both normal and privileged instructions
• User-to-kernel switch

Using Microsoft Visual Studio to Create a Graphical User Interface ECE 480: Design Team 11

Using Microsoft Visual Studio to Create a Graphical User Interface
ECE 480: Design Team 11
Application Note
Joshua Folks
April 3, 2015

Abstract: Software Application programming involves the concept of human-computer interaction and in this area of the program, a graphical user interface is very important. Visual widgets such as checkboxes and buttons are used to manipulate information to simulate interactions with the program. A well-designed GUI gives a flexible structure where the interface is independent from, but directly connected to the application functionality. This quality is directly proportional to the user friendliness of the application. This note will briefly explain how to properly create a Graphical User Interface (GUI) while ensuring that the user friendliness and the functionality of the application are maintained at a high standard.

Table of Contents
Abstract
Introduction
Operation
Visual Studio Methods
Interface Types
Understanding Variables
Final Forms
Conclusion

Key Words: Interface, GUI, IDE

Introduction: Establishing a connection between

Introduction to Computer Concepts

MANAGING PUBLIC SECTOR RECORDS: A Training Programme
Understanding Computers: An Overview for Records and Archives Staff
International Council on Archives / International Records Management Trust

MANAGING PUBLIC SECTOR RECORDS: A STUDY PROGRAMME
General Editor, Michael Roper; Managing Editor, Laura Millar

© International Records Management Trust, 1999. Reproduction in whole or in part, without the express written permission of the International Records Management Trust, is strictly prohibited.

Produced by the International Records Management Trust, 12 John Street, London WC1N 2EB, UK. Printed in the United Kingdom.

Inquiries concerning reproduction or rights and requests for additional training materials should be addressed to: International Records Management Trust, 12 John Street, London WC1N 2EB, UK. Tel: +44 (0) 20 7831 4101 Fax: +44 (0) 20 7831 7404 E-mail: [email protected] Website: http://www.irmt.org

Version 1/1999

MPSR Project Personnel

Project Director
Anne Thurston has been working to define international solutions for the management of public sector records for nearly three decades. Between 1970 and 1980 she lived in Kenya, initially conducting research and then as an employee of the Kenya National Archives. She joined the staff of the School of Library, Archive and Information Studies at University College London in 1980, where she developed the MA course in Records and Archives Management (International) and a post-graduate research programme.

WinReporter Documentation

WinReporter documentation

Table Of Contents
1.1 WinReporter overview
1.1.1
1.1.2 Web site support
1.2 Requirements
1.3 License
2.1 Welcome to WinReporter
2.2 Scan requirements
2.3 Simplified Wizard
2.3.1 Simplified Wizard
2.3.2 Computer selection
2.3.3 Validate
2.4 Advanced Wizard
2.4.1

Flare-On 5: Challenge 7 Solution – WorldOfWarcraft.exe

Flare-On 5: Challenge 7 Solution – WorldOfWarcraft.exe
Challenge Author: Ryan Warns
FireEye, Inc., 1440 McCarthy Blvd., Milpitas, CA 95035 | +1 408.321.6300 | +1 877.FIREEYE (347.3393) | [email protected] | www.FireEye.com

Summary
This challenge implements a 32-bit Windows binary meant to run in a Windows on Windows (WOW) environment.

Analysis
I often start my analysis of samples by quickly skimming the output of static analysis tools and looking through IDA. Performing basic static analysis on the binary we see that WorldOfWarcraft.exe is a simple 32-bit DLL. Running strings.exe on this binary shows us several strings that look like they might be related to the key.

USER32
WS2_32
%[email protected]
Cannot read payload!
n1ght_4lve$_R_c00L.bin
A_l1ttl3_P1C_0f_h3aV3n
RSDS
R:\objchk_win7_x86\i386\WorldOfWarcraft.pdb

Figure 1 - strings in WorldOfWarcraft.exe

Opening the binary in IDA we can see that the binary doesn’t appear to implement much in the way of functionality, with the main function only calling 3 subroutines. The subroutine at address 0x1001A60 contains references to our strings of interest.

Figure 2 - Decompilation of function using interesting strings

I’ve cleaned up the decompilation in the screenshot above to be slightly more accurate. Quickly skimming sub_1001910 reveals that this function grabs the contents of a file, so it looks like sub_0x1001A60 will read the file n1ght_4lve$_R_c00L.bin and XOR the contents against the string A_l1ttl3_P1C_0f_h3aV3n. The result of this operation is compared to a static array on the stack, and if the two match the sample will print our key.
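The XOR-and-compare check described in the excerpt can be sketched in Python. This is a minimal illustration, not the sample's actual code: the key string is the one quoted in the excerpt, but repeating the key cyclically over the payload is an assumption (the excerpt does not say how the key is applied), and the helper names `xor_with_key` and `payload_matches` and the `expected` array are hypothetical.

```python
from itertools import cycle

# Key string quoted in the write-up's strings output.
KEY = b"A_l1ttl3_P1C_0f_h3aV3n"


def xor_with_key(data: bytes, key: bytes = KEY) -> bytes:
    """XOR each byte of data with the key.

    Assumption: the key repeats cyclically when data is longer than the key.
    """
    return bytes(b ^ k for b, k in zip(data, cycle(key)))


def payload_matches(payload: bytes, expected: bytes, key: bytes = KEY) -> bool:
    """Return True if the XOR-ed payload equals the expected byte array.

    `expected` stands in for the static array on the stack; its real
    contents are not given in the excerpt.
    """
    return xor_with_key(payload, key) == expected
```

Because XOR is its own inverse, applying `xor_with_key` to the expected array with the same key would give the file contents that pass the check, under the same cyclic-key assumption.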

Practical ASP.NET Web API

Practical ASP.NET Web API Badrinarayanan Lakshmiraghavan Practical ASP.NET Web API Copyright © 2013 by Badrinarayanan Lakshmiraghavan This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. Exempted from this legal reservation are brief excerpts in connection with reviews or scholarly analysis or material supplied specifically for the purpose of being entered and executed on a computer system, for exclusive use by the purchaser of the work. Duplication of this publication or parts thereof is permitted only under the provisions of the Copyright Law of the Publisher’s location, in its current version, and permission for use must always be obtained from Springer. Permissions for use may be obtained through RightsLink at the Copyright Clearance Center. Violations are liable to prosecution under the respective Copyright Law. ISBN-13 (pbk): 978-1-4302-6175-9 ISBN-13 (electronic): 978-1-4302-6176-6 Trademarked names, logos, and images may appear in this book. Rather than use a trademark symbol with every occurrence of a trademarked name, logo, or image we use the names, logos, and images only in an editorial fashion and to the benefit of the trademark owner, with no intention of infringement of the trademark. The use in this publication of trade names, trademarks, service marks, and similar terms, even if they are not identified as such, is not to be taken as an expression of opinion as to whether or not they are subject to proprietary rights. -

Winter 2004 ISSN 1741-4229

IRMA: INFORMATION RISK MANAGEMENT & AUDIT JOURNAL
A Specialist Group of the BCS
volume 14 number 5 winter 2004 ISSN 1741-4229

Programme for members’ meetings 2004 – 2005
Tuesday 7 September 2004, 16:00 for 16:30, late afternoon, KPMG: Computer Audit Basics 2: Auditing the Infrastructure and Operations
Thursday 7 October 2004, 10:00 to 16:00, full day, Old Sessions House: Regulatory issues affecting IT in the Financial Industry
Tuesday 16 November 2004, 10:00 to 16:00, full day, Chartered Accountants Hall: Networks Attacks – quantifying and dealing with future threats
Tuesday 18 January 2005, 16:00 for 16:30, late afternoon, KPMG: Database Security
Tuesday 15 March 2005, 10:00 to 16:00, full day, BCS – The Davidson Building, 5 Southampton Street, London WC2 7HA: IT Governance
Tuesday 17 May 2005, 16:00 for 16:30, late afternoon, KPMG: Computer Audit Basics 3: CAATS (preceded by IRMA AGM; AGM precedes the meeting)

Please note that these are provisional details and are subject to change. The late afternoon meetings are free of charge to members. For full day briefings a modest, very competitive charge is made to cover both lunch and a full printed delegate’s pack. For venue maps see back cover.

Contents of the Journal
Technical Briefings (Front Cover)
Editorial, John Mitchell
The Down Under Column, Bob Ashton
Members’ Benefits
Creating and Using Issue Analysis Memos, Greg Krehel
Computer Forensics Science – Part II, Celeste Rush
Membership Application
Management Committee
Advertising in the Journal
IRMA Venues Map

GUIDELINES FOR POTENTIAL AUTHORS
The Journal publishes various types of article.