Efficiency and Complexity of Algorithms

Recommended publications

Time Complexity

Chapter 3: Time complexity

Use of time complexity makes it easy to estimate the running time of a program. Performing an accurate calculation of a program's operation time is a very labour-intensive process (it depends on the compiler and the type of computer or speed of the processor). Therefore, we will not make an accurate measurement; just a measurement of a certain order of magnitude.

Complexity can be viewed as the maximum number of primitive operations that a program may execute. Primitive operations are single additions, multiplications, assignments etc. We may leave some operations uncounted and concentrate on those that are performed the largest number of times. Such operations are referred to as dominant.

The number of dominant operations depends on the specific input data. We usually want to know how the performance time depends on a particular aspect of the data. This is most frequently the data size, but it can also be the size of a square matrix or the value of some input variable.

3.1: Which is the dominant operation?

    1 def dominant(n):
    2     result = 0
    3     for i in range(n):
    4         result += 1
    5     return result

The operation in line 4 is dominant and will be executed $n$ times. The complexity is described in Big-O notation: in this case $O(n)$, linear complexity.

The complexity specifies the order of magnitude within which the program will perform its operations. More precisely, in the case of $O(n)$, the program may perform $c \cdot n$ operations, where $c$ is a constant; however, it may not perform $n^2$ operations, since this involves a different order of magnitude of data.
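To make the difference between $c \cdot n$ and $n^2$ operations concrete, the following minimal sketch counts dominant operations directly. The function names `count_linear` and `count_quadratic` are illustrative and do not come from the chapter.

```python
def count_linear(n):
    """Count the dominant operation of a single loop: executed n times."""
    ops = 0
    for _ in range(n):
        ops += 1  # dominant operation
    return ops


def count_quadratic(n):
    """Count the dominant operation of two nested loops: executed n * n times."""
    ops = 0
    for _ in range(n):
        for _ in range(n):
            ops += 1  # dominant operation
    return ops


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, count_linear(n), count_quadratic(n))
```

Multiplying `n` by 10 multiplies the first count by 10 and the second by 100, which is the sense in which the two functions belong to different orders of magnitude.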

Quick Sort Algorithm

Quick Sort Algorithm

Song Qin, Dept. of Computer Sciences, Florida Institute of Technology, Melbourne, FL 32901

ABSTRACT

Given an array with n elements, we want to rearrange them in ascending order. In this paper, we introduce Quick Sort, a divide-and-conquer algorithm to sort an N-element array. We evaluate the $O(N \log N)$ time complexity in the best case and $O(N^2)$ in the worst case theoretically. We also introduce a way to approach the best case.

1. INTRODUCTION

Search engines rely heavily on sorting algorithms. When you search for a key word online, the feedback information is brought to you sorted by the importance of the web page.

Bubble, Selection and Insertion Sort all have an $O(N^2)$ time complexity that limits their usefulness to a small number of elements, no more than a few thousand data points.

[...] each iteration. Repeat this on the rest of the unsorted region without the first element.

Bubble sort works as follows: keep passing through the list, exchanging adjacent elements if they are out of order; when no exchanges are required on some pass, the list is sorted.

Merge sort [4] has an $O(N \log N)$ time complexity. It divides the array into two subarrays, each with N/2 items. Conquer each subarray by sorting it. Unless the array is sufficiently small (one element left), use recursion to do this. Combine the solutions to the subarrays by merging them into a single sorted array.

In Bubble sort, Selection sort and Insertion sort, the $O(N^2)$ time complexity limits the performance when N gets very big.
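The bubble sort and merge sort procedures described in this excerpt can be sketched as follows. This is an illustrative reading of the prose rather than the paper's own code; the names `bubble_sort`, `merge_sort` and `merge` are assumptions.

```python
def bubble_sort(items):
    """Pass through the list, swapping adjacent out-of-order elements,
    until a full pass makes no exchange. O(N^2) comparisons in the worst case."""
    data = list(items)  # work on a copy
    swapped = True
    while swapped:
        swapped = False
        for i in range(len(data) - 1):
            if data[i] > data[i + 1]:
                data[i], data[i + 1] = data[i + 1], data[i]
                swapped = True
    return data


def merge(left, right):
    """Combine two sorted lists into a single sorted list."""
    result = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            result.append(left[i])
            i += 1
        else:
            result.append(right[j])
            j += 1
    result.extend(left[i:])
    result.extend(right[j:])
    return result


def merge_sort(items):
    """Divide into two halves, sort each recursively, then merge."""
    if len(items) <= 1:  # sufficiently small: nothing to sort
        return list(items)
    mid = len(items) // 2
    return merge(merge_sort(items[:mid]), merge_sort(items[mid:]))


if __name__ == "__main__":
    print(bubble_sort([5, 2, 4, 1, 3]))
    print(merge_sort([5, 2, 4, 1, 3]))
```

Both functions return a new list rather than sorting in place, which keeps the sketch easy to test; an in-place variant would use less memory.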

Average-Case Analysis of Algorithms and Data Structures / L'analyse en moyenne des algorithmes et des structures de données

Average-Case Analysis of Algorithms and Data Structures
L'analyse en moyenne des algorithmes et des structures de données

Jeffrey Scott Vitter and Philippe Flajolet

Abstract. This report is a contributed chapter to the Handbook of Theoretical Computer Science (North-Holland, 1990). Its aim is to describe the main mathematical methods and applications in the average-case analysis of algorithms and data structures. It comprises two parts: First, we present basic combinatorial enumerations based on symbolic methods and asymptotic methods with emphasis on complex analysis techniques (such as singularity analysis, saddle point, Mellin transforms). Next, we show how to apply these general methods to the analysis of sorting, searching, tree data structures, hashing, and dynamic algorithms. The emphasis is on algorithms for which exact "analytic models" can be derived.

Résumé. Ce rapport est un chapitre qui paraît dans le Handbook of Theoretical Computer Science (North-Holland, 1990). Son but est de décrire les principales méthodes et applications de l'analyse de complexité en moyenne des algorithmes. Il comprend deux parties. Tout d'abord, nous donnons une présentation des méthodes de dénombrements combinatoires qui repose sur l'utilisation de méthodes symboliques, ainsi que des techniques asymptotiques fondées sur l'analyse complexe (analyse de singularités, méthode du col, transformation de Mellin). Ensuite, nous décrivons l'application de ces méthodes générales à l'analyse du tri, de la recherche, de la manipulation d'arbres, du hachage et des algorithmes dynamiques.

Design and Analysis of Algorithms

Course number and name: CS 07340: Design and Analysis of Algorithms
Credits and contact hours: 3 credits / 3 contact hours
Faculty Coordinator: Andrea Lobo
Textbook (title, author, and year): The Design and Analysis of Algorithms, Anany Levitin, 2012.

Specific course information

Catalog description: In this course, students will learn to design and analyze efficient algorithms for sorting, searching, graphs, sets, matrices, and other applications. Students will also learn to recognize and prove NP-Completeness.
Prerequisites: CS07210 Foundations of Computer Science and CS04222 Data Structures and Algorithms
Type of Course: ☒ Required ☐ Elective ☐ Selected Elective

Specific goals for the course

1. Algorithm complexity. Students have analyzed the worst-case runtime complexity of algorithms, including the quantification of resources required for computation of basic problems.
   o ABET (j): An ability to apply mathematical foundations, algorithmic principles, and computer science theory in the modeling and design of computer-based systems in a way that demonstrates comprehension of the tradeoffs involved in design choices.
2. Algorithm design. Students have applied multiple algorithm design strategies.
   o ABET (j): An ability to apply mathematical foundations, algorithmic principles, and computer science theory in the modeling and design of computer-based systems in a way that demonstrates comprehension of the tradeoffs involved in design choices.
3. Classic algorithms. Students have demonstrated understanding of algorithms for several well-known computer science problems and are able to implement these algorithms.
   o ABET (j): An ability to apply mathematical foundations, algorithmic principles, and computer science theory in the modeling and design of computer-based systems in a way that demonstrates comprehension of the tradeoffs involved in design choices.

Introduction to the Design and Analysis of Algorithms

Vice President and Editorial Director, ECS: Marcia Horton; Editor-in-Chief: Michael Hirsch; Acquisitions Editor: Matt Goldstein; Editorial Assistant: Chelsea Bell; Vice President, Marketing: Patrice Jones; Marketing Manager: Yezan Alayan; Senior Marketing Coordinator: Kathryn Ferranti; Marketing Assistant: Emma Snider; Vice President, Production: Vince O'Brien; Managing Editor: Jeff Holcomb; Production Project Manager: Kayla Smith-Tarbox; Senior Operations Supervisor: Alan Fischer; Manufacturing Buyer: Lisa McDowell; Art Director: Anthony Gemmellaro; Text Designer: Sandra Rigney; Cover Designer: Anthony Gemmellaro; Cover Illustration: Jennifer Kohnke; Media Editor: Daniel Sandin; Full-Service Project Management: Windfall Software; Composition: Windfall Software, using ZzTEX; Printer/Binder: Courier Westford; Cover Printer: Courier Westford; Text Font: Times Ten.

Copyright © 2012, 2007, 2003 Pearson Education, Inc., publishing as Addison-Wesley. All rights reserved. Printed in the United States of America. This publication is protected by Copyright, and permission should be obtained from the publisher prior to any prohibited reproduction, storage in a retrieval system, or transmission in any form or by any means, electronic, mechanical, photocopying, recording, or likewise. To obtain permission(s) to use material from this work, please submit a written request to Pearson Education, Inc., Permissions Department, One Lake Street, Upper Saddle River, New Jersey 07458, or you may fax your request to 201-236-3290.

This is the eBook of the printed book and may not include any media, Website access codes or print supplements that may come packaged with the bound book.

Many of the designations by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed in initial caps or all caps.

Algorithms and Computational Complexity: an Overview

Algorithms and Computational Complexity: an Overview

Winter 2011, Larry Ruzzo. Thanks to Paul Beame, James Lee, Kevin Wayne for some slides.

goals

Design of Algorithms – a taste
• design methods
• common or important types of problems
• analysis of algorithms - efficiency

goals

Complexity & intractability – a taste
• solving problems in principle is not enough; algorithms must be efficient
• some problems have no efficient solution
• NP-complete problems: important & useful class of problems whose solutions (seemingly) cannot be found efficiently

complexity example

Cryptography (e.g. RSA, SSL in browsers)
• Secret: p, q prime, say 512 bits each
• Public: n which equals p x q, 1024 bits
• In principle there is an algorithm that given n will find p and q: try all $2^{512}$ possible p's, but an astronomical number
• In practice no fast algorithm known for this problem (on non-quantum computers); security of RSA depends on this fact (and research in "quantum computing" is strongly driven by the possibility of changing this)

algorithms versus machines

Moore's Law and the exponential improvements in hardware...
Ex: sparse linear equations over 25 years: 10 orders of magnitude improvement!

algorithms or hardware?

[Figure: 25 years of progress solving sparse linear systems. Vertical axis: seconds, $10^0$ to $10^7$; horizontal axis: year, 1960 to 2000. Machines: CDC 3600, CDC 6600, CDC 7600, Cray 1, Cray 2, Cray 3 (Est.). Hardware alone: 4 orders of magnitude. G.E. = Gaussian Elimination. Source: Sandia, via M. Schultz.]

algorithms or hardware?

[Figure: the same plot with algorithm curves added. G.E. = Gaussian Elimination, SOR = Successive OverRelaxation, CG = Conjugate Gradient; first algorithm curve labelled Sparse G.E.]
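The "in principle" algorithm from the cryptography slide, trying candidate factors one by one, can be sketched with plain trial division. This is only an illustration on tiny numbers; the function name `factor_semiprime` and the example value 3233 are assumptions, not taken from the slides.

```python
def factor_semiprime(n):
    """Return (p, q) with p <= q and p * q == n, found by trial division.

    Assumes n is a product of two primes. The loop runs about sqrt(n) / 2
    times in the worst case, which is hopeless for a 1024-bit n.
    """
    if n % 2 == 0:
        return 2, n // 2
    p = 3
    while p * p <= n:
        if n % p == 0:
            return p, n // p
        p += 2  # even candidates were ruled out above
    return None  # no factor found: n is prime (or 1)


if __name__ == "__main__":
    print(factor_semiprime(3233))  # toy "public key" n = 53 * 61
    # Number of decimal digits in 2**512, the size of the search space
    # mentioned on the slide.
    print(len(str(2 ** 512)))
```

The toy modulus is factored instantly, while the count of candidates for 512-bit primes has well over a hundred decimal digits, which is why the brute-force approach works only in principle.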

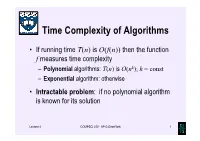

Time Complexity of Algorithms

Time Complexity of Algorithms

• If running time $T(n)$ is $O(f(n))$ then the function $f$ measures time complexity
  – Polynomial algorithms: $T(n)$ is $O(n^k)$; $k = \text{const}$
  – Exponential algorithm: otherwise
• Intractable problem: if no polynomial algorithm is known for its solution

Lecture 4, COMPSCI 220 - AP G Gimel'farb

Time complexity growth

Number of data items processed per:

| $f(n)$ | 1 minute | 1 day | 1 year | 1 century |
|---|---|---|---|---|
| $n$ | 10 | 14,400 | $5.26 \cdot 10^6$ | $5.26 \cdot 10^8$ |
| $n \log_{10} n$ | 10 | 3,997 | 883,895 | $6.72 \cdot 10^7$ |
| $n^{1.5}$ | 10 | 1,275 | 65,128 | $1.40 \cdot 10^6$ |
| $n^2$ | 10 | 379 | 7,252 | 72,522 |
| $n^3$ | 10 | 112 | 807 | 3,746 |
| $2^n$ | 10 | 20 | 29 | 35 |

Beware exponential complexity

☺ If a linear $O(n)$ algorithm processes 10 items per minute, then it can process 14,400 items per day, 5,260,000 items per year, and 526,000,000 items per century
☻ If an exponential $O(2^n)$ algorithm processes 10 items per minute, then it can process only 20 items per day and 35 items per century...

Big-Oh vs. Actual Running Time

• Example 1: Let algorithms A and B have running times $T_A(n) = 20n$ ms and $T_B(n) = 0.1 n \log_2 n$ ms
• In the "Big-Oh" sense, A is better than B…
• But: on which data volume can A outperform B? $T_A(n) < T_B(n)$ if $20n < 0.1 n \log_2 n$, or $\log_2 n > 200$, that is, when $n > 2^{200} \approx 10^{60}$!
• Thus, in all practical cases B is better than A…
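The growth figures in these slides can be rechecked with a short script. The sketch below assumes that "10 items per minute" means the time budget for one minute equals $f(10)$, and that a year has 365 days; the helper name `max_items` is illustrative.

```python
import math


def max_items(f, minutes, base_n=10):
    """Largest n with f(n) <= minutes * f(base_n), for an increasing f."""
    budget = minutes * f(base_n)
    lo, hi = base_n, base_n * 2
    # Grow the upper bound until it exceeds the budget.
    while f(hi) <= budget:
        lo, hi = hi, hi * 2
    # Invariant: f(lo) <= budget < f(hi); narrow by binary search.
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if f(mid) <= budget:
            lo = mid
        else:
            hi = mid
    return lo


DAY = 24 * 60         # minutes per day
YEAR = 365 * DAY      # minutes per year (365 days assumed)
CENTURY = 100 * YEAR  # minutes per century

GROWTH = {
    "n": lambda n: n,
    "n log10 n": lambda n: n * math.log10(n),
    "n^1.5": lambda n: n ** 1.5,
    "n^2": lambda n: n ** 2,
    "n^3": lambda n: n ** 3,
    "2^n": lambda n: 2 ** n,
}

if __name__ == "__main__":
    for name, f in GROWTH.items():
        print(name, [max_items(f, m) for m in (1, DAY, YEAR, CENTURY)])
    # Crossover of 20n versus 0.1 n log2 n: n must exceed 2**200.
    print(len(str(2 ** 200)), "decimal digits in 2**200")
```

Under these assumptions the one-day column comes out as 14,400 for $n$, 379 for $n^2$ and 20 for $2^n$, matching the slide, and $2^{200}$ has 61 decimal digits, consistent with the $10^{60}$ estimate.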

A Short History of Computational Complexity

The Computational Complexity Column
by Lance FORTNOW
NEC Laboratories America, 4 Independence Way, Princeton, NJ 08540, USA
http://www.neci.nj.nec.com/homepages/fortnow/beatcs

Every third year the Conference on Computational Complexity is held in Europe and this summer the University of Aarhus (Denmark) will host the meeting July 7-10. More details at the conference web page http://www.computationalcomplexity.org

This month we present a historical view of computational complexity written by Steve Homer and myself. This is a preliminary version of a chapter to be included in an upcoming North-Holland Handbook of the History of Mathematical Logic edited by Dirk van Dalen, John Dawson and Aki Kanamori.

A Short History of Computational Complexity

Lance Fortnow, NEC Research Institute, 4 Independence Way, Princeton, NJ 08540
Steve Homer, Computer Science Department, Boston University, 111 Cummington Street, Boston, MA 02215

1 Introduction

It all started with a machine. In 1936, Turing developed his theoretical computational model. He based his model on how he perceived mathematicians think. As digital computers were developed in the 40's and 50's, the Turing machine proved itself as the right theoretical model for computation.

Quickly though we discovered that the basic Turing machine model fails to account for the amount of time or memory needed by a computer, a critical issue today but even more so in those early days of computing. The key idea to measure time and space as a function of the length of the input came in the early 1960's by Hartmanis and Stearns.

From Analysis of Algorithms to Analytic Combinatorics a Journey with Philippe Flajolet

From Analysis of Algorithms to Analytic Combinatorics: a journey with Philippe Flajolet

Robert Sedgewick, Princeton University

This talk is dedicated to the memory of Philippe Flajolet (1948-2011).

From Analysis of Algorithms to Analytic Combinatorics

47. PF. Elements of a general theory of combinatorial structures. 1985.
69. PF. Mathematical methods in the analysis of algorithms and data structures. 1988.
70. PF. L'analyse d'algorithmes ou le risque calculé. 1986.
72. PF. Random tree models in the analysis of algorithms. 1988.
88. PF and Michèle Soria. Gaussian limiting distributions for the number of components in combinatorial structures. 1990.
91. Jeffrey Scott Vitter and PF. Average-case analysis of algorithms and data structures. 1990.
95. PF and Michèle Soria. The cycle construction. 1991.
97. PF. Analytic analysis of algorithms. 1992.
99. PF. Introduction à l'analyse d'algorithmes. 1992.
112. PF and Michèle Soria. General combinatorial schemas: Gaussian limit distributions and exponential tails. 1993.
130. RS and PF. An Introduction to the Analysis of Algorithms. 1996.
131. RS and PF. Introduction à l'analyse des algorithmes. 1996.
171. PF. Singular combinatorics. 2002.
175. Philippe Chassaing and PF. Hachage, arbres, chemins & graphes. 2003.
189. PF, Éric Fusy, Xavier Gourdon, Daniel Panario, and Nicolas Pouyanne. A hybrid of Darboux's method and singularity analysis in combinatorial asymptotics. 2006.
192. PF. Analytic combinatorics—a calculus of discrete structures. 2007.
201. PF and RS. Analytic Combinatorics. 2009.

Analysis of Algorithms

Pioneering research by Knuth put the study of the performance of computer programs on a scientific basis.

“As soon as an Analytic Engine exists, it will necessarily guide the future course of the science.

Sorting Algorithm

Sorting algorithm

In computer science, a sorting algorithm is an algorithm that puts elements of a list in a certain order. The most-used orders are numerical order and lexicographical order. Efficient sorting is important for optimizing the use of other algorithms (such as search and merge algorithms) that require sorted lists to work correctly; it is also often useful for canonicalizing data and for producing human-readable output. More formally, the output must satisfy two conditions:

1. The output is in nondecreasing order (each element is no smaller than the previous element according to the desired total order);
2. The output is a permutation, or reordering, of the input.

Since the dawn of computing, the sorting problem has attracted a great deal of research, perhaps due to the complexity of solving it efficiently despite its simple, familiar statement. For example, bubble sort was analyzed as early as 1956.[1] Although many consider it a solved problem, useful new sorting algorithms are still being invented (for example, library sort was first published in 2004).

Sorting algorithms are prevalent in introductory computer science classes, where the abundance of algorithms for the problem provides a gentle introduction to a variety of core algorithm concepts, such as big O notation, divide and conquer algorithms, data structures, randomized algorithms, best, worst and average case analysis, time-space tradeoffs, and lower bounds.

Classification

Sorting algorithms used in computer science are often classified by:

• Computational complexity (worst, average and best behaviour) of element comparisons in terms of the size of the list ($n$). For typical sorting algorithms good behavior is $O(n \log n)$ and bad behavior is $O(n^2)$.
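The two formal conditions on a sorting algorithm's output translate directly into a checker. The sketch below is illustrative: the name `is_valid_sort` is an assumption, and it assumes the elements are hashable so that `collections.Counter` can compare them as multisets.

```python
from collections import Counter


def is_valid_sort(original, output):
    """Check the two conditions: nondecreasing order, and permutation of the input."""
    # Condition 1: each element is no smaller than the previous one.
    nondecreasing = all(a <= b for a, b in zip(output, output[1:]))
    # Condition 2: same elements with the same multiplicities.
    permutation = Counter(original) == Counter(output)
    return nondecreasing and permutation


if __name__ == "__main__":
    data = [3, 1, 2, 3]
    print(is_valid_sort(data, sorted(data)))   # ordered and a permutation
    print(is_valid_sort(data, [1, 2, 3]))      # ordered, but an element was lost
    print(is_valid_sort(data, [3, 1, 2, 3]))   # a permutation, but not ordered
```

Checking both conditions matters: an output such as `[1, 2, 3]` is perfectly ordered yet is not a valid sort of `[3, 1, 2, 3]`.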

A Survey and Analysis of Algorithms for the Detection of Termination in a Distributed System

Rochester Institute of Technology, RIT Scholar Works, Theses, 1991

A Survey and analysis of algorithms for the detection of termination in a distributed system

Lois Rixner

Recommended Citation: Rixner, Lois, "A Survey and analysis of algorithms for the detection of termination in a distributed system" (1991). Thesis. Rochester Institute of Technology. Accessed from

This Thesis is brought to you for free and open access by RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works.

Rochester Institute of Technology, Computer Science Department

A Survey and Analysis of Algorithms for the Detection of Termination in a Distributed System, by Lois R. Rixner

A thesis, submitted to The Faculty of the Computer Science Department, in partial fulfillment of the requirements for the degree of Master of Science in Computer Science.

Approved by: Dr. Andrew Kitchen, Dr. James Heliotis, Dr. Peter Anderson

Title of Thesis: A Survey and Analysis of Algorithms for the Detection of Termination in a Distributed System

I, Lois R. Rixner, hereby deny permission to the Wallace Memorial Library, of RIT, to reproduce my thesis in whole or in part.

Abstract

This paper looks at algorithms for the detection of termination in a distributed system and analyzes them for effectiveness and efficiency. A survey is done of the published algorithms for distributed termination and each is evaluated. Both centralized distributed systems and fully distributed systems are reviewed.

Algorithm Time Cost Measurement

CSE 12: Algorithm Time Cost Measurement

• Algorithm analysis vs. measurement
• Timing an algorithm
• Average and standard deviation
• Improving measurement accuracy

Introduction

• These three characteristics of programs are important:
  – robustness: a program's ability to spot exceptional conditions and deal with them or shut down gracefully
  – correctness: does the program do what it is "supposed to" do?
  – efficiency: all programs use resources (time, space, and energy); how can we measure efficiency so that we can compare algorithms?

Analysis and Measurement

An algorithm's performance can be described by:
  – time complexity or cost: how long it takes to execute. In general, less time is better!
  – space complexity or cost: how much computer memory it uses. In general, less space is better!
  – energy complexity or cost: how much energy it uses. In general, less energy is better!
• Costs are usually given as functions of the size of the input to the algorithm
• A big instance of the problem will probably take more resources to solve than a small one, but how much more?

Figuring algorithm costs

• For a given algorithm, we would like to know the following as functions of n, the size of the problem:
  – T(n), the time cost of solving the problem
  – S(n), the space cost of solving the problem
  – E(n), the energy cost of solving the problem
• Two approaches:
  – We can analyze the written algorithm
  – Or we could implement the algorithm and run it and measure the time, memory, and energy usage

Asymptotic algorithm analysis
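The measurement approach named in these slides (time an algorithm several times, then report the average and standard deviation) might look like the minimal sketch below. It is not the course's own code; the helper name `measure` and the choice of `sorted` as the algorithm under test are assumptions.

```python
import statistics
import time


def measure(func, arg, repeats=5):
    """Run func(arg) `repeats` times; return (mean, standard deviation) in seconds.

    repeats must be at least 2, because a sample standard deviation
    needs two or more data points.
    """
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        func(arg)
        samples.append(time.perf_counter() - start)
    return statistics.mean(samples), statistics.stdev(samples)


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        data = list(range(n, 0, -1))  # reversed input of size n
        mean, sd = measure(sorted, data)
        print(f"n={n}: mean={mean:.6f}s sd={sd:.6f}s")
```

Repeating the run and reporting the spread gives a rough sense of how noisy a single timing is; increasing `repeats` is one simple way to improve measurement accuracy.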