A Closer Look at Instruction Set Architectures

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

ADSP-21065L SHARC User's Manual; Chapter 2, Computation

&20387$7,2181,76 Figure 2-0. Table 2-0. Listing 2-0. The processor’s computation units provide the numeric processing power for performing DSP algorithms, performing operations on both fixed-point and floating-point numbers. Each computation unit executes instructions in a single cycle. The processor contains three computation units: • An arithmetic/logic unit (ALU) Performs a standard set of arithmetic and logic operations in both fixed-point and floating-point formats. • A multiplier Performs floating-point and fixed-point multiplication as well as fixed-point dual multiply/add or multiply/subtract operations. •A shifter Performs logical and arithmetic shifts, bit manipulation, field deposit and extraction operations on 32-bit operands and can derive exponents as well. ADSP-21065L SHARC User’s Manual 2-1 PM Data Bus DM Data Bus Register File Multiplier Shifter ALU 16 × 40-bit MR2 MR1 MR0 Figure 2-1. Computation units block diagram The computation units are architecturally arranged in parallel, as shown in Figure 2-1. The output from any computation unit can be input to any computation unit on the next cycle. The computation units store input operands and results locally in a ten-port register file. The Register File is accessible to the processor’s pro- gram memory data (PMD) bus and its data memory data (DMD) bus. Both of these buses transfer data between the computation units and internal memory, external memory, or other parts of the processor. This chapter covers these topics: • Data formats • Register File data storage and transfers -

MIPS Architecture

Introduction to the MIPS Architecture January 14–16, 2013 1 / 24 Unofficial textbook MIPS Assembly Language Programming by Robert Britton A beta version of this book (2003) is available free online 2 / 24 Exercise 1 clarification This is a question about converting between bases • bit – base-2 (states: 0 and 1) • flash cell – base-4 (states: 0–3) • hex digit – base-16 (states: 0–9, A–F) • Each hex digit represents 4 bits of information: 0xE ) 1110 • It takes two hex digits to represent one byte: 1010 0111 ) 0xA7 3 / 24 Outline Overview of the MIPS architecture What is a computer architecture? Fetch-decode-execute cycle Datapath and control unit Components of the MIPS architecture Memory Other components of the datapath Control unit 4 / 24 What is a computer architecture? One view: The machine language the CPU implements Instruction set architecture (ISA) • Built in data types (integers, floating point numbers) • Fixed set of instructions • Fixed set of on-processor variables (registers) • Interface for reading/writing memory • Mechanisms to do input/output 5 / 24 What is a computer architecture? Another view: How the ISA is implemented Microarchitecture 6 / 24 How a computer executes a program Fetch-decode-execute cycle (FDX) 1. fetch the next instruction from memory 2. decode the instruction 3. execute the instruction Decode determines: • operation to execute • arguments to use • where the result will be stored Execute: • performs the operation • determines next instruction to fetch (by default, next one) 7 / 24 Datapath and control unit -

6.004 Computation Structures Spring 2009

MIT OpenCourseWare http://ocw.mit.edu 6.004 Computation Structures Spring 2009 For information about citing these materials or our Terms of Use, visit: http://ocw.mit.edu/terms. M A S S A C H U S E T T S I N S T I T U T E O F T E C H N O L O G Y DEPARTMENT OF ELECTRICAL ENGINEERING AND COMPUTER SCIENCE 6.004 Computation Structures β Documentation 1. Introduction This handout is a reference guide for the β, the RISC processor design for 6.004. This is intended to be a complete and thorough specification of the programmer-visible state and instruction set. 2. Machine Model The β is a general-purpose 32-bit architecture: all registers are 32 bits wide and when loaded with an address can point to any location in the byte-addressed memory. When read, register 31 is always 0; when written, the new value is discarded. Program Counter Main Memory PC always a multiple of 4 0x00000000: 3 2 1 0 0x00000004: 32 bits … Registers SUB(R3,R4,R5) 232 bytes R0 ST(R5,1000) R1 … … R30 0xFFFFFFF8: R31 always 0 0xFFFFFFFC: 32 bits 32 bits 3. Instruction Encoding Each β instruction is 32 bits long. All integer manipulation is between registers, with up to two source operands (one may be a sign-extended 16-bit literal), and one destination register. Memory is referenced through load and store instructions that perform no other computation. Conditional branch instructions are separated from comparison instructions: branch instructions test the value of a register that can be the result of a previous compare instruction. -

Frequently Asked Questions in Mathematics

Frequently Asked Questions in Mathematics The Sci.Math FAQ Team. Editor: Alex L´opez-Ortiz e-mail: [email protected] Contents 1 Introduction 4 1.1 Why a list of Frequently Asked Questions? . 4 1.2 Frequently Asked Questions in Mathematics? . 4 2 Fundamentals 5 2.1 Algebraic structures . 5 2.1.1 Monoids and Groups . 6 2.1.2 Rings . 7 2.1.3 Fields . 7 2.1.4 Ordering . 8 2.2 What are numbers? . 9 2.2.1 Introduction . 9 2.2.2 Construction of the Number System . 9 2.2.3 Construction of N ............................... 10 2.2.4 Construction of Z ................................ 10 2.2.5 Construction of Q ............................... 11 2.2.6 Construction of R ............................... 11 2.2.7 Construction of C ............................... 12 2.2.8 Rounding things up . 12 2.2.9 What’s next? . 12 3 Number Theory 14 3.1 Fermat’s Last Theorem . 14 3.1.1 History of Fermat’s Last Theorem . 14 3.1.2 What is the current status of FLT? . 14 3.1.3 Related Conjectures . 15 3.1.4 Did Fermat prove this theorem? . 16 3.2 Prime Numbers . 17 3.2.1 Largest known Mersenne prime . 17 3.2.2 Largest known prime . 17 3.2.3 Largest known twin primes . 18 3.2.4 Largest Fermat number with known factorization . 18 3.2.5 Algorithms to factor integer numbers . 18 3.2.6 Primality Testing . 19 3.2.7 List of record numbers . 20 3.2.8 What is the current status on Mersenne primes? . -

PIPELINING and ASSOCIATED TIMING ISSUES Introduction: While

PIPELINING AND ASSOCIATED TIMING ISSUES Introduction: While studying sequential circuits, we studied about Latches and Flip Flops. While Latches formed the heart of a Flip Flop, we have explored the use of Flip Flops in applications like counters, shift registers, sequence detectors, sequence generators and design of Finite State machines. Another important application of latches and flip flops is in pipelining combinational/algebraic operation. To understand what is pipelining consider the following example. Let us take a simple calculation which has three operations to be performed viz. 1. add a and b to get (a+b), 2. get magnitude of (a+b) and 3. evaluate log |(a + b)|. Each operation would consume a finite period of time. Let us assume that each operation consumes 40 nsec., 35 nsec. and 60 nsec. respectively. The process can be represented pictorially as in Fig. 1. Consider a situation when we need to carry this out for a set of 100 such pairs. In a normal course when we do it one by one it would take a total of 100 * 135 = 13,500 nsec. We can however reduce this time by the realization that the whole process is a sequential process. Let the values to be evaluated be a1 to a100 and the corresponding values to be added be b1 to b100. Since the operations are sequential, we can first evaluate (a1 + b1) while the value |(a1 + b1)| is being evaluated the unit evaluating the sum is dormant and we can use it to evaluate (a2 + b2) giving us both |(a1 + b1)| and (a2 + b2) at the end of another evaluation period. -

2.5 Classification of Parallel Computers

52 // Architectures 2.5 Classification of Parallel Computers 2.5 Classification of Parallel Computers 2.5.1 Granularity In parallel computing, granularity means the amount of computation in relation to communication or synchronisation Periods of computation are typically separated from periods of communication by synchronization events. • fine level (same operations with different data) ◦ vector processors ◦ instruction level parallelism ◦ fine-grain parallelism: – Relatively small amounts of computational work are done between communication events – Low computation to communication ratio – Facilitates load balancing 53 // Architectures 2.5 Classification of Parallel Computers – Implies high communication overhead and less opportunity for per- formance enhancement – If granularity is too fine it is possible that the overhead required for communications and synchronization between tasks takes longer than the computation. • operation level (different operations simultaneously) • problem level (independent subtasks) ◦ coarse-grain parallelism: – Relatively large amounts of computational work are done between communication/synchronization events – High computation to communication ratio – Implies more opportunity for performance increase – Harder to load balance efficiently 54 // Architectures 2.5 Classification of Parallel Computers 2.5.2 Hardware: Pipelining (was used in supercomputers, e.g. Cray-1) In N elements in pipeline and for 8 element L clock cycles =) for calculation it would take L + N cycles; without pipeline L ∗ N cycles Example of good code for pipelineing: §doi =1 ,k ¤ z ( i ) =x ( i ) +y ( i ) end do ¦ 55 // Architectures 2.5 Classification of Parallel Computers Vector processors, fast vector operations (operations on arrays). Previous example good also for vector processor (vector addition) , but, e.g. recursion – hard to optimise for vector processors Example: IntelMMX – simple vector processor. -

Most Computer Instructions Can Be Classified Into Three Categories

Introduction: Data Transfer and Manipulation Most computer instructions can be classified into three categories: 1) Data transfer, 2) Data manipulation, 3) Program control instructions » Data transfer instruction cause transfer of data from one location to another » Data manipulation performs arithmatic, logic and shift operations. » Program control instructions provide decision making capabilities and change the path taken by the program when executed in computer. Data Transfer Instruction Typical Data Transfer Instruction : LD » Load : transfer from memory to a processor register, usually an AC (memory read) ST » Store : transfer from a processor register into memory (memory write) MOV » Move : transfer from one register to another register XCH » Exchange : swap information between two registers or a register and a memory word IN/OUT » Input/Output : transfer data among processor registers and input/output device PUSH/POP » Push/Pop : transfer data between processor registers and a memory stack MODE ASSEMBLY REGISTER TRANSFER CONVENTION Direct Address LD ADR ACM[ADR] Indirect Address LD @ADR ACM[M[ADR]] Relative Address LD $ADR ACM[PC+ADR] Immediate Address LD #NBR ACNBR Index Address LD ADR(X) ACM[ADR+XR] Register LD R1 ACR1 Register Indirect LD (R1) ACM[R1] Autoincrement LD (R1)+ ACM[R1], R1R1+1 8 Addressing Mode for the LOAD Instruction Data Manipulation Instruction 1) Arithmetic, 2) Logical and bit manipulation, 3) Shift Instruction Arithmetic Instructions : NAME MNEMONIC Increment INC Decrement DEC Add ADD Subtract SUB Multiply -

Chapter 7 Expressions and Assignment Statements

Chapter 7 Expressions and Assignment Statements Chapter 7 Topics Introduction Arithmetic Expressions Overloaded Operators Type Conversions Relational and Boolean Expressions Short-Circuit Evaluation Assignment Statements Mixed-Mode Assignment Chapter 7 Expressions and Assignment Statements Introduction Expressions are the fundamental means of specifying computations in a programming language. To understand expression evaluation, need to be familiar with the orders of operator and operand evaluation. Essence of imperative languages is dominant role of assignment statements. Arithmetic Expressions Their evaluation was one of the motivations for the development of the first programming languages. Most of the characteristics of arithmetic expressions in programming languages were inherited from conventions that had evolved in math. Arithmetic expressions consist of operators, operands, parentheses, and function calls. The operators can be unary, or binary. C-based languages include a ternary operator, which has three operands (conditional expression). The purpose of an arithmetic expression is to specify an arithmetic computation. An implementation of such a computation must cause two actions: o Fetching the operands from memory o Executing the arithmetic operations on those operands. Design issues for arithmetic expressions: 1. What are the operator precedence rules? 2. What are the operator associativity rules? 3. What is the order of operand evaluation? 4. Are there restrictions on operand evaluation side effects? 5. Does the language allow user-defined operator overloading? 6. What mode mixing is allowed in expressions? Operator Evaluation Order 1. Precedence The operator precedence rules for expression evaluation define the order in which “adjacent” operators of different precedence levels are evaluated (“adjacent” means they are separated by at most one operand). -

Binary and Hexadecimal

Binary and Hexadecimal Binary and Hexadecimal Introduction This document introduces the binary (base two) and hexadecimal (base sixteen) number systems as a foundation for systems programming tasks and debugging. In this document, if the base of a number might be ambiguous, we use a base subscript to indicate the base of the value. For example: 45310 is a base ten (decimal) value 11012 is a base two (binary) value 821716 is a base sixteen (hexadecimal) value Why do we need binary? The binary number system (also called the base two number system) is the native language of digital computers. No matter how elegantly or naturally we might interact with today’s computers, phones, and other smart devices, all instructions and data are ultimately stored and manipulated in the computer as binary digits (also called bits, where a bit is either a 0 or a 1). CPUs (central processing units) and storage devices can only deal with information in this form. In a computer system, absolutely everything is represented as binary values, whether it’s a program you’re running, an image you’re viewing, a video you’re watching, or a document you’re writing. Everything, including text and images, is represented in binary. In the “old days,” before computer programming languages were available, programmers had to use sequences of binary digits to communicate directly with the computer. Today’s high level languages attempt to shield us from having to deal with binary at all. However, there are a few reasons why it’s important for programmers to understand the binary number system: 1. -

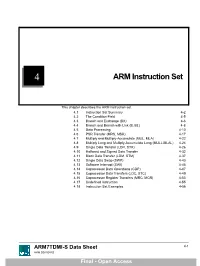

ARM Instruction Set

4 ARM Instruction Set This chapter describes the ARM instruction set. 4.1 Instruction Set Summary 4-2 4.2 The Condition Field 4-5 4.3 Branch and Exchange (BX) 4-6 4.4 Branch and Branch with Link (B, BL) 4-8 4.5 Data Processing 4-10 4.6 PSR Transfer (MRS, MSR) 4-17 4.7 Multiply and Multiply-Accumulate (MUL, MLA) 4-22 4.8 Multiply Long and Multiply-Accumulate Long (MULL,MLAL) 4-24 4.9 Single Data Transfer (LDR, STR) 4-26 4.10 Halfword and Signed Data Transfer 4-32 4.11 Block Data Transfer (LDM, STM) 4-37 4.12 Single Data Swap (SWP) 4-43 4.13 Software Interrupt (SWI) 4-45 4.14 Coprocessor Data Operations (CDP) 4-47 4.15 Coprocessor Data Transfers (LDC, STC) 4-49 4.16 Coprocessor Register Transfers (MRC, MCR) 4-53 4.17 Undefined Instruction 4-55 4.18 Instruction Set Examples 4-56 ARM7TDMI-S Data Sheet 4-1 ARM DDI 0084D Final - Open Access ARM Instruction Set 4.1 Instruction Set Summary 4.1.1 Format summary The ARM instruction set formats are shown below. 3 3 2 2 2 2 2 2 2 2 2 2 1 1 1 1 1 1 1 1 1 1 9876543210 1 0 9 8 7 6 5 4 3 2 1 0 9 8 7 6 5 4 3 2 1 0 Cond 0 0 I Opcode S Rn Rd Operand 2 Data Processing / PSR Transfer Cond 0 0 0 0 0 0 A S Rd Rn Rs 1 0 0 1 Rm Multiply Cond 0 0 0 0 1 U A S RdHi RdLo Rn 1 0 0 1 Rm Multiply Long Cond 0 0 0 1 0 B 0 0 Rn Rd 0 0 0 0 1 0 0 1 Rm Single Data Swap Cond 0 0 0 1 0 0 1 0 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 1 Rn Branch and Exchange Cond 0 0 0 P U 0 W L Rn Rd 0 0 0 0 1 S H 1 Rm Halfword Data Transfer: register offset Cond 0 0 0 P U 1 W L Rn Rd Offset 1 S H 1 Offset Halfword Data Transfer: immediate offset Cond 0 -

Endian: from the Ground up a Coordinated Approach

WHITEPAPER Endian: From the Ground Up A Coordinated Approach By Kevin Johnston Senior Staff Engineer, Verilab July 2008 © 2008 Verilab, Inc. 7320 N MOPAC Expressway | Suite 203 | Austin, TX 78731-2309 | 512.372.8367 | www.verilab.com WHITEPAPER INTRODUCTION WHat DOES ENDIAN MEAN? Data in Imagine XYZ Corp finally receives first silicon for the main Endian relates the significance order of symbols to the computers chip for its new camera phone. All initial testing proceeds position order of symbols in any representation of any flawlessly until they try an image capture. The display is kind of data, if significance is position-dependent in that regularly completely garbled. representation. undergoes Of course there are many possible causes, and the debug Let’s take a specific type of data, and a specific form of dozens if not team analyzes code traces, packet traces, memory dumps. representation that possesses position-dependent signifi- There is no problem with the code. There is no problem cance: A digit sequence representing a numeric value, like hundreds of with data transport. The problem is eventually tracked “5896”. Each digit position has significance relative to all down to the data format. other digit positions. transformations The development team ran many, many pre-silicon simula- I’m using the word “digit” in the generalized sense of an between tions of the system to check datapath integrity, bandwidth, arbitrary radix, not necessarily decimal. Decimal and a few producer and error correction. The verification effort checked that all other specific radixes happen to be particularly useful for the data submitted at the camera port eventually emerged illustration simply due to their familiarity, but all of the consumer. -

MIPS IV Instruction Set

MIPS IV Instruction Set Revision 3.2 September, 1995 Charles Price MIPS Technologies, Inc. All Right Reserved RESTRICTED RIGHTS LEGEND Use, duplication, or disclosure of the technical data contained in this document by the Government is subject to restrictions as set forth in subdivision (c) (1) (ii) of the Rights in Technical Data and Computer Software clause at DFARS 52.227-7013 and / or in similar or successor clauses in the FAR, or in the DOD or NASA FAR Supplement. Unpublished rights reserved under the Copyright Laws of the United States. Contractor / manufacturer is MIPS Technologies, Inc., 2011 N. Shoreline Blvd., Mountain View, CA 94039-7311. R2000, R3000, R6000, R4000, R4400, R4200, R8000, R4300 and R10000 are trademarks of MIPS Technologies, Inc. MIPS and R3000 are registered trademarks of MIPS Technologies, Inc. The information in this document is preliminary and subject to change without notice. MIPS Technologies, Inc. (MTI) reserves the right to change any portion of the product described herein to improve function or design. MTI does not assume liability arising out of the application or use of any product or circuit described herein. Information on MIPS products is available electronically: (a) Through the World Wide Web. Point your WWW client to: http://www.mips.com (b) Through ftp from the internet site “sgigate.sgi.com”. Login as “ftp” or “anonymous” and then cd to the directory “pub/doc”. (c) Through an automated FAX service: Inside the USA toll free: (800) 446-6477 (800-IGO-MIPS) Outside the USA: (415) 688-4321 (call from a FAX machine) MIPS Technologies, Inc.