Informative Provenance for Repurposed Data: a Case Study Using Clinical Research Data

Recommended publications

-

Byrd, Disney Are New Members

LOCAL: Chris Rock’s mother will speak to parents in Sumter on Saturday A3 TELEVISION 8-part series on Unabomber begins tonight TUESDAY, AUGUST 1, 2017 | Serving South Carolina since October 15, 1894 75 cents B5 Byrd, Disney are new members school board on Monday night serve as short-term political ap- Delegation appoints 2 to school board in a public meeting at Central pointments on the board until Carolina Technical College. the November 2018 election, BY BRUCE MILLS members of the Sumter School With the two additions, the when the public will vote on the [email protected] District Board of Trustees. The Sumter school board now ex- two at-large seats. Both will be Sumter County Legislative Del- pands from seven voting mem- eligible to run for that election. BYRD DISNEY William Byrd and Bonnie egation unanimously voted bers to nine. Disney are the two newest both as at-large members to the Both Byrd and Disney will SEE MEMBERS, PAGE A4 Getting their orders for deployment New nuclear plants in S.C. are scrapped Billions wasted after owners decide to abandon partially built reactors BY SEANNA ADCOX The Associated Press COLUMBIA — Billions of dollars spent on two new nuclear reactors in South Carolina went up in smoke Monday when the owners nixed plans to finish them after years of delays and cost overruns, dealing a severe blow to the industry’s future. The reactors were set to be among the first built in the U.S. in decades. While the decision will save customers billions in additional costs, customers of the two utilities — Santee Cooper and South Carolina Electric & Gas — may get little to nothing refunded of the billions they’ve already paid for the now-abandoned project. -

Arts and Laughs ALL SOFT CLOTH CAR WASH $ 00 OFF 3ANY CAR WASH! EXPIRES 8/31/18

FINAL-1 Sat, Jul 21, 2018 6:13:44 PM Your Weekly Guide to TV Entertainment for the week of July 28 - August 3, 2018 HARTNETT’S Arts and laughs ALL SOFT CLOTH CAR WASH $ 00 OFF 3ANY CAR WASH! EXPIRES 8/31/18 BUMPER Nick Offerman and Amy Hartnett's Car Poehler host “Making It” SPECIALISTS Wash H1artnett x 5` Auto Body, Inc. COLLISION REPAIR SPECIALISTS & APPRAISERS MA R.S. #2313 R. ALAN HARTNETT LIC. #2037 DANA F. HARTNETT LIC. #9482 15 WATER STREET DANVERS (Exit 23, Rte. 128) TEL. (978) 774-2474 FAX (978) 750-4663 Open 7 Days Mon.-Fri. 8-7, Sat. 8-6, Sun. 8-4 ** Gift Certificates Available ** Choosing the right OLD FASHIONED SERVICE Attorney is no accident FREE REGISTRY SERVICE Free Consultation PERSONAL INJURYCLAIMS • Automobile Accident Victims • Work Accidents • Slip &Fall • Motorcycle &Pedestrian Accidents John Doyle Forlizzi• Wrongfu Lawl Death Office INSURANCEDoyle Insurance AGENCY • Dog Attacks • Injuries2 x to 3 Children Voted #1 1 x 3 With 35 years experience on the North Insurance Shore we have aproven record of recovery Agency No Fee Unless Successful “Parks and Recreation” alumni Amy Poehler and Nick Offerman reunite in the artisanal The LawOffice of event of the summer to celebrate the creativity and craftiness in all of us. “Making It” STEPHEN M. FORLIZZI features artisans competing in themed challenges that are inspired by crafting and Auto • Homeowners DIY trends that test their creativity, skills and outside-the-box thinking — but there Business • Life Insurance 978.739.4898 can only be one Master Maker. Get inspired and laugh with the fun summer series pre- Harthorne Office Park •Suite 106 www.ForlizziLaw.com 978-777-6344 491 Maple Street, Danvers, MA 01923 [email protected] miering Tuesday, July 31, on NBC. -

Arts and Laughs

FINAL-1 Sat, Jul 21, 2018 6:10:09 PM tvspotlight OMNI Security Team Your Weekly Guide to TV Entertainment Omni Security •FortheweekofJuly 28 - August 3, 2018• SERVING OUR COMMUNITY FOR OVER 30 YEARS Put Your Trust in Our2 Familyx 3.5” to Protect Your Family Big enough to Residential & serve you Fire & Access Commercial Small enough to Systems and Video Security know you Surveillance Remote access 24/7 Alarm & Security Monitoring puts you in control Remote Access & Wireless Technology Fire, Smoke & Carbon Detection of your security Personal Emergency Response Systems system at all times. Medical Alert Systems 978-465-5000 | 1-800-698-1800 | www.securityteam.com MA Lic. 444C Nick Offerman and Amy Poehler host “Making It” GRANITE A/LA Tiles 2 x 3.5” COUNTERTOPS CHOOSE FROM: ONLY 10 Different Colors of Granite $ 99 4 Different Edges 36 FREE D’Shape or Rectangle Sink sq. ft. Template & Installation Included 978.378.4340 Arts and NEW ADDRESS - 4 PERKINS WAY, NEWBURYPORT MA laughs www.latilesandgranite.com AUTHENTIC ITALIAN FOOD TRADITIONAL ITALIAN RECIPES MADE WITH NATURAL INGREDIENTS Come Welcome Summer and our New LunchGiuseppe's Time Bartender! Enjoy 50% off Apps AT 2THE x BAR3” When you purchase any wine or cocktail from our full bar. 11am to 4pm – Wed. through Fri. (Limited to bar seating only, as available. One per customer & not to be combined with other offers. Not Valid on Takeout) FULL BAR! LUNCH & DINNER! MON.-THURS. 11-8 • FRI. & SAT. 11-9 • CLOSED SUNDAYS 257 Low St., Newburyport, MA 978-465-2225 www.giuseppesfinefood.com FINAL-1 Sat, Jul 21, 2018 6:10:10 PM 2•NewburyportDailyNews•July 28 - August 3, 2018 pay for a night nanny, a wom- an to look after the newborn Video at night so that Marlo can releases sleep, she takes him up on the Getting creative offer. -

MATTHEW W. MUNGLE Special Make-Up Effects IATSE Local 706, SAG

MATTHEW W. MUNGLE Special Make-Up Effects IATSE Local 706, SAG The following is a partial list of recent Film Credits. For full credits, including Television and Commercials, please contact The Milton Agency. AWARDS AND NOMINATIONS ACADEMY AWARDS EMMY AWARDS (Cont.) Nominee: ALBERT NOBBS Nominee: GEORGE WALLACE Winner: BRAM STOKER’S DRACULA Nominee: OLIVER TWIST Nominee: GHOSTS OF MISSISSIPPI Nominee: MISS EVERS’ BOYS Nominee: SCHINDLER’S LIST Nominee: THE LATE SHIFT Nominee: LIZ: THE ELIZABETH TAYLOR STORY EMMY AWARDS Nominee: PERFECT STRANGERS, “Citizenship” Winner: JOHN ADAMS BRITISH ACADEMY FILM AWARDS Winner: STATE OF THE UNION, “Episode 102” Winner: SIX FEET UNDER, “Everyone’s Waiting” Nominee: LEE DANIELS’ THE BUTLER Winner: DOOR TO DOOR Nominee: BRAM STOKER’S DRACULA Winner: THE X-FILES, “Deadalive” Nominee: SCHINDLER’S LIST Winner: CITIZEN COHN Nominee: THE CAPE, “Razer” HOLLYWOOD MAKE-UP ARTIST Nominee: LITTLE BRITAIN USA, “Episode 106” AND HAIR STYLIST GUILD AWARDS Nominee: CSI: CRIME SCENE INVESTIGATION, Winner: TRACEY ULLMAN IN THE TRAILER “A Space Oddity” TALES Nominee: LITTLE BRITAIN USA, ”Episode 105” Winner: AND THE BEAT GOES ON: THE SONNY Nominee: STATE OF THE UNION, “Episode 205” AND CHER STORY Nominee: STATE OF THE UNION, “Episode104” Nominee: RED DRAGON Nominee: CSI: CRIME SCENE INVESTIGATION, Nominee: FRIDA “Dead Doll” Nominee: LANSKY Nominee: CSI: CRIME SCENE INVESTIGATION, “Living Legend” CABLE ACE AWARDS Nominee: CSI: CRIME SCENE INVESTIGATION, “Fannysmackin’” Winner: GEORGE WALLACE Nominee: INTO THE WEST, “Wheel To The Stars” Nominee: ANDERSONVILLE Nominee: CSI: CRIME SCENE INVESTIGATION, Nominee: CITIZEN COHN “Ch-Ch-Changes” Nominee: TRACEY ULLMAN IN THE TRAILER ACADEMY OF SCIENCE FICTION, TALES FANTASY AND HORROR FILMS, USA Nominee: RUDY: THE RUDY GIULIANI STORY Nominee: BRAM STOKER’S DRACULA Nominee: HOUDINI THE MILTON AGENCY Matthew W. -

Word Search Blaise (St

August 3 - 9, 2018 2 x 2" ad 2 x 2" ad C A E M J Y S Q O B I C F T E 2 x 3" ad A O G C E T P U P H U O Z S J J Z N L U F A H T L C P I S E A E D N A R H Z I S Y A P E N 2 x 3.5" ad R U N M I Z U G M O L N E R N D Q A D N E M S I B Y C X D I A C P A S Q U E S I Z E Q S N L J B L Z L D S T E R K E U G K I E R B I U Q I S L I G E S N N A D H Z C F C J R L A D I Z D C A M A R A D E R I E L B A U H R D M Z Q T M A K M C D C O B Z M C A S S I D Y O M C E R N I E S Y Q M J P V N E Z V A H V E M E F A B R A D O N “Lodge 49” on AMC Bargain Box (Words in parentheses not in puzzle) (Sean “Dud”) Dudley (Wyatt) Russell (Long) Beach Classified Merchandise Specials Solution on page 13 Ernie (Fontaine) (Brent) Jennings (Order of the) Lynx Merchandise High-End 2 x 3" ad Liz (Dudley) (Sonya) Cassidy Camaraderie Connie (Mills) (Linda) Emond (Alchemical) Mysteries 1 x 4" ad Deal Merchandise Word Search Blaise (St. -

Complete Book of Necromancers by Steve Kurtz

2151 ® ¥DUNGEON MASTER® Rules Supplement Guide The Complete Book of Necromancers By Steve Kurtz ª Table of Contents Introduction Bodily Afflictions How to Use This Book Insanity and Madness Necromancy and the PC Unholy Compulsions What You Will Need Paid In Full Chapter 1: Necromancers Chapter 4: The Dark Art The Standard Necromancer Spell Selection for the Wizard Ability Scores Criminal or Black Necromancy Race Gray or Neutral Necromancy Experience Level Advancement Benign or White Necromancy Spells New Wizard Spells Spell Restrictions 1st-Level Spells Magic Item Restrictions 2nd-Level Spells Proficiencies 3rd-Level Spells New Necromancer Wizard Kits 4th-Level Spells Archetypal Necromancer 5th-Level Spells Anatomist 6th-Level Spells Deathslayer 7th-Level Spells Philosopher 8th-Level Spells Undead Master 9th-Level Spells Other Necromancer Kits Chapter 5: Death Priests Witch Necromantic Priesthoods Ghul Lord The God of the Dead New Nonweapon Proficiencies The Goddess of Murder Anatomy The God of Pestilence Necrology The God of Suffering Netherworld Knowledge The Lord of Undead Spirit Lore Other Priestly Resources Venom Handling Chapter 6: The Priest Sphere Chapter 2: Dark Gifts New Priest Spells Dual-Classed Characters 1st-Level Spells Fighter/Necromancer 2nd-Level Spells Thief / Necromancer 3rd-Level Spells Cleric/Necromancer 4th-Level Spells Psionicist/Necromancer 5th-Level Spells Wild Talents 6th-Level Spells Vile Pacts and Dark Gifts 7th-Level Spells Nonhuman Necromancers Chapter 7: Allies Humanoid Necromancers Apprentices Drow Necromancers -

Cómo Citar El Artículo Número Completo Más Información Del

Anagramas -Rumbos y sentidos de la comunicación- ISSN: 1692-2522 Sello Editorial - Universidad de Medellín Nitrihual-Valdebenito, Luis; Fierro-Bustos, Juan Manuel; Reyes-Velásquez, Carlos; Henríquez-Morales, Francisco Conspiración y nuda vida ¿The X-Files, I want to believe: mundo posible o mundo presente?* Anagramas -Rumbos y sentidos de la comunicación-, vol. 16, núm. 31, 2017, Septiembre-Diciembre, pp. 91-112 Sello Editorial - Universidad de Medellín DOI: https://doi.org/10.22395/angr.v16n31a3 Disponible en: https://www.redalyc.org/articulo.oa?id=491555087004 Cómo citar el artículo Número completo Sistema de Información Científica Redalyc Más información del artículo Red de Revistas Científicas de América Latina y el Caribe, España y Portugal Página de la revista en redalyc.org Proyecto académico sin fines de lucro, desarrollado bajo la iniciativa de acceso abierto Universidad de Medellín Conspiración y nuda vida ¿The X-Files, I want to believe: mundo posible o mundo presente?* Luis Nitrihual Valdebenito** Juan Manuel Fierro Bustos*** Carlos Reyes Velásquez**** Francisco Henríquez Morales***** Recibido: 2017-04-22 Enviado a pares: 2017-05-25 Aprobado por pares: 2017-07-18 Aceptado: 2017-07-25 DOI: 10.22395/angr.v16n31a3 Resumen El presente artículo problematiza, en general, la conspiración como una estructura política y narrativa fundamental de la Modernidad. A través de un análisis fílmico de la serie The X-Files, I want to believe, planteamos como objetivo revelar como el tópico de la conspiración, en un nivel superficial, es un articulador de la trama de la serie, pero en un nivel profundo y amplio entendemos la conspiración como una estrategia articuladora de la vida moderna y, en este marco, del control biopolítico. -

Star Channels, Feb. 10-16

FEBRUARY 10 - 16, 2019 staradvertiser.com HELL ON EARTH Picking up where the fall fi nale left off and six years after Rick’s disappearance, Michonne and our survivors fi nd themselves facing off against the Whisperers, a new and mysterious group, while Negan has managed to escape confi nement after more than half a decade locked up. Who lives, who dies? The Walking Dead returns for the second half of its ninth season. Airing Sunday, Feb. 10, on AMC. WEEKLY NEWS UPDATE LIVE @ THE LEGISLATURE Join Senate and House leadership as they discuss upcoming legislation and issues of importance to the community. TOMORROW, 8:30AM | CHANNEL 49 | olelo.org/49 olelo.org ON THE COVER | THE WALKING DEAD Whispers in the dark ‘The Walking Dead’ returns (Norman Reedus, “Ride with Norman Reedus”) fall’s cliffhanger that saw Jesus (Tom Payne, Saviors and Ezekiel’s (Khary Payton, “General “Luck”) struck down at the hands of a new, with new foes Hospital”) Kingdom in order to build a sustain- more dangerous kind of walker while the able future for everyone. Eventually, his vision surrounded Michonne (Danai Gurira, “Black By Francis Babin and leadership were questioned, and amid the Panther,” 2018), Aaron (Ross Marquand, TV Media rising tensions and infighting within and be- “Avengers: Infinity War,” 2018), Yumiko tween the communities, an insurrection took (Eleanor Matsuura, “Wonder Woman,” 2017) ast month marked an important milestone place. and Daryl discovered the shocking truth re- in the history of entertainment. Twenty Unfortunately for Rick, he was not be able garding these grotesque monsters. Lyears ago, “The Sopranos” debuted to see the communities overcome their dif- It was revealed in the last moments of the on HBO to high praise and instantaneously ferences and live harmoniously with one an- half-season finale that the walkers that killed changed the television landscape forever. -

DECLARATION of Jane Sunderland in Support of Request For

Columbia Pictures Industries Inc v. Bunnell Doc. 373 Att. 1 Exhibit 1 Twentieth Century Fox Film Corporation Motion Pictures 28 DAYS LATER 28 WEEKS LATER ALIEN 3 Alien vs. Predator ANASTASIA Anna And The King (1999) AQUAMARINE Banger Sisters, The Battle For The Planet Of The Apes Beach, The Beauty and the Geek BECAUSE OF WINN-DIXIE BEDAZZLED BEE SEASON BEHIND ENEMY LINES Bend It Like Beckham Beneath The Planet Of The Apes BIG MOMMA'S HOUSE BIG MOMMA'S HOUSE 2 BLACK KNIGHT Black Knight, The Brokedown Palace BROKEN ARROW Broken Arrow (1996) BROKEN LIZARD'S CLUB DREAD BROWN SUGAR BULWORTH CAST AWAY CATCH THAT KID CHAIN REACTION CHASING PAPI CHEAPER BY THE DOZEN CHEAPER BY THE DOZEN 2 Clearing, The CLEOPATRA COMEBACKS, THE Commando Conquest Of The Planet Of The Apes COURAGE UNDER FIRE DAREDEVIL DATE MOVIE 4 Dockets.Justia.com DAY AFTER TOMORROW, THE DECK THE HALLS Deep End, The DEVIL WEARS PRADA, THE DIE HARD DIE HARD 2 DIE HARD WITH A VENGEANCE DODGEBALL: A TRUE UNDERDOG STORY DOWN PERISCOPE DOWN WITH LOVE DRIVE ME CRAZY DRUMLINE DUDE, WHERE'S MY CAR? Edge, The EDWARD SCISSORHANDS ELEKTRA Entrapment EPIC MOVIE ERAGON Escape From The Planet Of The Apes Everyone's Hero Family Stone, The FANTASTIC FOUR FAST FOOD NATION FAT ALBERT FEVER PITCH Fight Club, The FIREHOUSE DOG First $20 Million, The FIRST DAUGHTER FLICKA Flight 93 Flight of the Phoenix, The Fly, The FROM HELL Full Monty, The Garage Days GARDEN STATE GARFIELD GARFIELD A TAIL OF TWO KITTIES GRANDMA'S BOY Great Expectations (1998) HERE ON EARTH HIDE AND SEEK HIGH CRIMES 5 HILLS HAVE -

00001. Rugby Pass Live 1 00002. Rugby Pass Live 2 00003

00001. RUGBY PASS LIVE 1 00002. RUGBY PASS LIVE 2 00003. RUGBY PASS LIVE 3 00004. RUGBY PASS LIVE 4 00005. RUGBY PASS LIVE 5 00006. RUGBY PASS LIVE 6 00007. RUGBY PASS LIVE 7 00008. RUGBY PASS LIVE 8 00009. RUGBY PASS LIVE 9 00010. RUGBY PASS LIVE 10 00011. NFL GAMEPASS 1 00012. NFL GAMEPASS 2 00013. NFL GAMEPASS 3 00014. NFL GAMEPASS 4 00015. NFL GAMEPASS 5 00016. NFL GAMEPASS 6 00017. NFL GAMEPASS 7 00018. NFL GAMEPASS 8 00019. NFL GAMEPASS 9 00020. NFL GAMEPASS 10 00021. NFL GAMEPASS 11 00022. NFL GAMEPASS 12 00023. NFL GAMEPASS 13 00024. NFL GAMEPASS 14 00025. NFL GAMEPASS 15 00026. NFL GAMEPASS 16 00027. 24 KITCHEN (PT) 00028. AFRO MUSIC (PT) 00029. AMC HD (PT) 00030. AXN HD (PT) 00031. AXN WHITE HD (PT) 00032. BBC ENTERTAINMENT (PT) 00033. BBC WORLD NEWS (PT) 00034. BLOOMBERG (PT) 00035. BTV 1 FHD (PT) 00036. BTV 1 HD (PT) 00037. CACA E PESCA (PT) 00038. CBS REALITY (PT) 00039. CINEMUNDO (PT) 00040. CM TV FHD (PT) 00041. DISCOVERY CHANNEL (PT) 00042. DISNEY JUNIOR (PT) 00043. E! ENTERTAINMENT(PT) 00044. EURONEWS (PT) 00045. EUROSPORT 1 (PT) 00046. EUROSPORT 2 (PT) 00047. FOX (PT) 00048. FOX COMEDY (PT) 00049. FOX CRIME (PT) 00050. FOX MOVIES (PT) 00051. GLOBO PORTUGAL (PT) 00052. GLOBO PREMIUM (PT) 00053. HISTORIA (PT) 00054. HOLLYWOOD (PT) 00055. MCM POP (PT) 00056. NATGEO WILD (PT) 00057. NATIONAL GEOGRAPHIC HD (PT) 00058. NICKJR (PT) 00059. ODISSEIA (PT) 00060. PFC (PT) 00061. PORTO CANAL (PT) 00062. PT-TPAINTERNACIONAL (PT) 00063. RECORD NEWS (PT) 00064. -

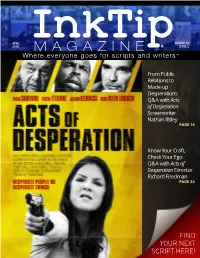

M a G a Z I N

APRIL VOLUME 19 2019 MAGAZINE ® ISSUE 2 Where everyone goes for scripts and writers™ From Public Relations to Made-up Desperations: Q&A with Acts of Desperation Screenwriter Nathan Illsley PAGE 16 Know Your Craft, Check Your Ego: Q&A with Acts of Desperation Director Richard Friedman PAGE 30 FIND YOUR NEXT SCRIPT HERE! CONTENTS Contest/Festival Winners 4 FIND YOUR Feature Scripts – SCRIPTS FAST Grouped by Genre ON INKTIP! 5 From Public Relations to Made-up Desperations: Q&A with Acts of Desperation Screenwriter Nathan Illsley INKTIP OFFERS: 16 • Listings of Scripts and Writers Updated Daily • Mandates Catered to Your Needs • Newsletters of the Latest Scripts and Writers Know Your Craft, Check Your Ego: Q&A with • Personalized Customer Service Acts of Desperation Director • Comprehensive Film Commissions Directory Richard Friedman 30 Scripts Represented by Agents/Managers 43 Teleplays You will find what you need on InkTip Sign up at InkTip.com! 44 Note: For your protection, writers are required to sign a comprehensive release form before they place their scripts on our site. 3 WHAT PEOPLE SAY ABOUT INKTIP WRITERS “[InkTip] was the resource that connected “Without InkTip, I wouldn’t be a produced a director/producer with my screenplay screenwriter. I’d like to think I’d have – and quite quickly. I HAVE BEEN gotten there eventually, but INKTIP ABSOLUTELY DELIGHTED CERTAINLY MADE IT HAPPEN WITH THE SUPPORT AND FASTER … InkTip puts screenwriters into OPPORTUNITIES I’ve gotten through contact with working producers.” being associated with InkTip.” – ANN KIMBROUGH, GOOD KID/BAD KID – DENNIS BUSH, LOVE OR WHATEVER “InkTip gave me the access that I needed “There is nobody out there doing more to directors that I BELIEVE ARE for writers than InkTip – nobody. -

The X-Files, I Want to Believe: Mundo Posible O Mundo Presente?*

Universidad de Medellín Conspiración y nuda vida ¿The X-Files, I want to believe: mundo posible o mundo presente?* Luis Nitrihual Valdebenito** Juan Manuel Fierro Bustos*** Carlos Reyes Velásquez**** Francisco Henríquez Morales***** Recibido: 2017-04-22 Enviado a pares: 2017-05-25 Aprobado por pares: 2017-07-18 Aceptado: 2017-07-25 DOI: 10.22395/angr.v16n31a3 Resumen El presente artículo problematiza, en general, la conspiración como una estructura política y narrativa fundamental de la Modernidad. A través de un análisis fílmico de la serie The X-Files, I want to believe, planteamos como objetivo revelar como el tópico de la conspiración, en un nivel superficial, es un articulador de la trama de la serie, pero en un nivel profundo y amplio entendemos la conspiración como una estrategia articuladora de la vida moderna y, en este marco, del control biopolítico. Así entonces, el artículo muestra cómo la estructura argumentativa de la serie propone tramas y personajes a través de los cuales el poder gubernamental interviene los cuerpos y el espacio, quita y permite la vida, distorsiona la realidad, informa y desinforma. Todo es parte de una estrategia global de confabulación y ocultamiento de la verdad. En conclusión, la serie de televisión permite observar los regímenes de hipervisibilidad e intervención de la máquina gubernamental actual y es, en esta medida, un punto de entrada para reconocer problemas sociales y políticos de total actualidad. Palabras clave: series de televisión; cine; conspiración; nuda vida; control; Archivos Secretos X; estado de excepción; biopolítica. * Artículo producto de los proyectos de investigación FONDECYT n.º 1141289 y FONDECYT n.º 1161253, PLU n.º 1110 y PLU n.º 120010 financiados por el Consejo Nacional de Ciencia y Tecnología.