Characterizing Shadow Price Via Lagrangian Multiplier for Nonsmooth Problem

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The Shadow Price of Health

This PDF is a selection from an out-of-print volume from the National Bureau of Economic Research.
Volume Title: The Demand for Health: A Theoretical and Empirical Investigation
Volume Author/Editor: Michael Grossman
Volume Publisher: NBER
Volume ISBN: 0-87014-248-8
Volume URL: http://www.nber.org/books/gros72-1
Publication Date: 1972
Chapter Title: The Shadow Price of Health
Chapter Author: Michael Grossman
Chapter URL: http://www.nber.org/chapters/c3486
Chapter pages in book: (p. 11 - 30)

II THE SHADOW PRICE OF HEALTH

In the previous chapter, I showed how a consumer selects the optimal quantity of health in any period of his life. In this chapter, I explore the effects of changes in the supply and demand prices of health in the context of the pure investment model. In Section 1, I comment on the demand curve for health capital in the investment model. In Section 2, I relate variations in depreciation rates with age to life cycle patterns of health and gross investment. I also examine the impact of changes in depreciation rates among individuals of the same age and briefly incorporate uncertainty into the model. In the third section, I consider the effects of shifts in market efficiency, measured by the wage rate, and nonmarket efficiency, measured by human capital, on supply and demand prices.

1. THE INVESTMENT DEMAND CURVE

If the marginal utility of healthy days or the marginal disutility of sick days were equal to zero, health would be solely an investment commodity. The optimal amount of health capital could then be found by equating the marginal monetary rate of return on an investment in health to the cost of health capital: = = r − + (2-1) Setting Uh1 = 0 in equation (1-13'), one derives equation (2-1).

Optimization Basics for Electric Power Markets

Optimization Basics for Electric Power Markets
Presenter: Leigh Tesfatsion, Professor of Econ, Math, and ECpE, Iowa State University, Ames, Iowa 50011-1070
http://www.econ.iastate.edu/tesfatsi/ [email protected]
Last Revised: 20 September 2020

Important Acknowledgement: These lecture materials incorporate edited slides and figures originally developed by R. Baldick, S. Bradley et al., A. Hallam, T. Overbye, and M. Wachowiak

Topic Outline
- ISO Market Optimization on a Typical Operating Day D
- Alternative modeling formulations
- Optimization illustration: Real-time economic dispatch
- Classic Nonlinear Programming Problem (NPP): Minimization subject to equality constraints
- NPP via the Lagrange multiplier approach
- NPP Lagrange multipliers as shadow prices
- Real-time economic dispatch: Numerical example
- General Nonlinear Programming Problem (GNPP): Minimization subject to equality and inequality constraints
- GNPP via the Lagrange multiplier approach
- GNPP Lagrange multipliers as shadow prices
- Necessary versus sufficient conditions for optimization
- Technical references

Key Objective of EE/Econ 458
- Understand the optimization processes undertaken by participants in restructured wholesale power markets
- For ISOs, these processes include:
  - Security-Constrained Unit Commitment (SCUC) for determining which Generating Companies (GenCos) will be asked to commit to the production of energy the following day
  - Security-Constrained Economic Dispatch (SCED) for determining energy dispatch and locational marginal price (LMP) levels

Use of Multiple Regression Analysis to Summarize and Interpret Linear Programming Shadow Prices in an Economic Planning Model

USE OF MULTIPLE REGRESSION ANALYSIS TO SUMMARIZE AND INTERPRET LINEAR PROGRAMMING SHADOW PRICES IN AN ECONOMIC PLANNING MODEL
Daniel G. Williams
U.S. Department of Agriculture, Economics, Statistics, and Cooperatives Service
Technical Bulletin No. 1622

Use of Multiple Regression Analysis to Summarize and Interpret Linear Programming Shadow Prices in an Economic Planning Model. By Daniel G. Williams, Economic Development Division; Economics, Statistics, and Cooperatives Service, U.S. Department of Agriculture. Technical Bulletin No. 1622.

ABSTRACT

A simple method is presented for evaluating the benefit to a region (regional objective function) of new manufacturing firms. These firms are subsets of the more aggregated 4-digit SIC manufacturing industries, some included and some not, in a rural multicounty economic planning model. The model must contain many types of industries to include the full range of industry; such addition is costly. Multiple regression analysis can summarize and interpret shadow prices of export industries so that local planners in their decisionmaking can use the underlying economic characteristics of the model industries rather than use only their industry product classifications.

Keywords: Linear programming, shadow prices, objective function, multiple regression, regional exports, rural development.

Washington, D.C. 20250. May 1980

CONTENTS
- Summary
- Introduction
- Objective Function and the Generalized Shadow Price
- The Multiple Regression and Linear Programming Models
- Interpretation of the Multiple

Economic Evaluation Glossary of Terms

Economic Evaluation Glossary of Terms

A
- Attributable fraction: indirect health expenditures associated with a given diagnosis through other diseases or conditions (Prevented fraction: indicates the proportion of an outcome averted by the presence of an exposure that decreases the likelihood of the outcome; indicates the number or proportion of an outcome prevented by the “exposure”)
- Average cost: total resource cost, including all support and overhead costs, divided by the total units of output

B
- Benefit-cost analysis (BCA): (or cost-benefit analysis) a type of economic analysis in which all costs and benefits are converted into monetary (dollar) values and results are expressed as either the net present value or the dollars of benefits per dollars expended
- Benefit-cost ratio: a mathematical comparison of the benefits divided by the costs of a project or intervention. When the benefit-cost ratio is greater than 1, benefits exceed costs

C
- Comorbidity: presence of one or more serious conditions in addition to the primary disease or disorder
- Cost analysis: the process of estimating the cost of prevention activities; also called cost identification, programmatic cost analysis, cost outcome analysis, cost minimization analysis, or cost consequence analysis
- Cost effectiveness analysis (CEA): an economic analysis in which all costs are related to a single, common effect. Results are usually stated as additional cost expended per additional health outcome achieved. Results can be categorized as average cost-effectiveness, marginal cost-effectiveness,

Essays on Productive Efficiency, Shadow Prices, and Human Capital

ESSAYS ON PRODUCTIVE EFFICIENCY, SHADOW PRICES, AND HUMAN CAPITAL by Per-Olov Marklund Abstract This thesis consists of five papers, four of them basically concerning environmental issues, while the fifth paper addresses the issue of measuring output from the educational sector. The first paper starts from the fact that industrial activity causes environmental damage. Therefore, public authorities are called upon to regulate the behavior of producers by, e.g., legislating maximally allowed emission levels, which give rise to abatement costs. In this paper, marginal abatement costs (MACs) are estimated and a procedure to empirically analyze why MACs may vary between producers is suggested. The main focus is on whether the MACs in the Swedish pulp industry reveal that differences between counties in, e.g., economical characteristics, were influential when the authority, during 1983-1990, restricted 12 geographically scattered plants regarding emissions. The result indicates that county differences were influential. The second paper analyses and suggests a procedure for testing the Porter hypothesis. Part of this hypothesis is based on the argument that increased environmental stringency not only brings a cleaner environment, but also makes the polluting producers aware of the opportunity of using resources more efficiently. The particular test suggested considers whether there is a positive correlation between producers’ technical output efficiency and environmental stringency, approximated by a regulatory intensity index. It is empirically applied on 12 Swedish pulp plants during 1983-1990. No support for the Porter hypothesis is found in this particular case. The third paper deals with the climate policy issue. Under the Kyoto Protocol, the European Union agreed to reduce its emission of greenhouse gases by 8 percent in comparison with the level in 1990. -

METHOD FACTSHEET Shadow Pricing

METHOD FACTSHEET Shadow pricing

Introduction

When society sets environmental targets on provision of non-marketed ecosystem services, it is an implicit valuation of the services. As an example, when a country complies with, e.g., the Water Framework Directive, it accepts any costs incurred to reach specific water quality levels. Using this approach assumes that it is a socially optimal and well-informed decision to produce a mix of services where water quality regulation is provided at a specific level. If society increases the relative valuation of water quality regulation, the optimal mix of provision of fresh water quality and other services including agricultural products is assumed to be updated through the agreement of new targets. The shadow price is the marginal cost of obtaining an additional unit of the ecosystem service by implementing the environmental target. As an additional unit of the targeted ecosystem service restricts production of other marketed ecosystem services, the change in the mix reflects the relative values. The shadow price society ‘pays’ for provision of the ecosystem services is the loss from not obtaining the value from producing marketed goods. The method takes into account that economic actors can adjust the way in which the mix of services is produced. Taking this into account avoids exaggeration of the costs and in turn the value of the ecosystem services.

Keywords

Opportunity costs; Implicit valuation; Targets for environmental quality.

Why would I choose this approach?

The methodology is particularly useful in an ecosystem service context to illustrate the scope of ecosystem-based approaches to meet environmental quality targets in contrast to technology-based approaches.

Shadow Prices in EMIX

Ed Cazalet 2/24/2010

Shadow Prices In EMIX

This is in response to an action item assigned to me after I made a comment in an EMIX meeting that there are prices not determined by a tariff, negotiation or a market process. One example is a "shadow price". The definition of a shadow price from Wikipedia is below. Wikipedia further explains the concept beyond what I have attached below.

A power example is the case of a microgrid disconnected from the main grid. In this case supply and demand must balance within the microgrid. There is no market price. If the microgrid has an optimization method to minimize the cost of serving the load, then the constraint "load - generation = 0" must be satisfied. At the optimal solution, the shadow price is the incremental cost of one additional unit of generation. This is also the price that could be used internally to communicate scarcity to any price-responsive loads.

Shadow price. From Wikipedia, the free encyclopedia

In constrained optimization in economics, the shadow price is the change in the objective value of the optimal solution of an optimization problem obtained by relaxing the constraint by one unit. It is the marginal utility of relaxing the constraint, or equivalently the marginal cost of strengthening the constraint. In a business application, a shadow price is the maximum price that management is willing to pay for an extra unit of a given limited resource.[1] For example, if a production line is already operating at its maximum 40 hour limit, the shadow price would be the maximum price the manager would be willing to pay for operating it for an additional hour, based on the benefits he would get from this change.
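The microgrid example can be made concrete with a small numerical sketch. Everything specific here is an assumption for illustration and does not come from the EMIX note: two hypothetical generators with quadratic costs, made-up coefficients, and no capacity limits. Under those assumptions the least-cost dispatch has a closed form, and the multiplier on the balance constraint can be compared with a finite-difference estimate of how the optimal cost changes when the load grows.

```python
# Hypothetical generators (illustrative values only):
# cost_i(p) = a_i * p + 0.5 * b_i * p**2, capacity limits ignored.
GENERATORS = [(20.0, 0.10), (25.0, 0.05)]  # (a_i, b_i)


def dispatch(load):
    """Least-cost dispatch subject to sum(p_i) = load.

    At the optimum every unit runs at the same marginal cost lam:
    a_i + b_i * p_i = lam, so p_i = (lam - a_i) / b_i.
    Summing over units and using the balance constraint gives lam.
    """
    inv_b = sum(1.0 / b for _, b in GENERATORS)
    a_over_b = sum(a / b for a, b in GENERATORS)
    lam = (load + a_over_b) / inv_b
    outputs = [(lam - a) / b for a, b in GENERATORS]
    cost = sum(a * p + 0.5 * b * p * p
               for (a, b), p in zip(GENERATORS, outputs))
    return outputs, cost, lam


load = 100.0
outputs, cost, lam = dispatch(load)

# Finite-difference estimate of d(optimal cost) / d(load).
step = 0.01
cost_up = dispatch(load + step)[1]
cost_down = dispatch(load - step)[1]
shadow_fd = (cost_up - cost_down) / (2 * step)

print("dispatch:", outputs)
print("multiplier on the balance constraint:", lam)
print("finite-difference shadow price:", shadow_fd)
```

In this sketch the two printed prices agree up to rounding, which is the sense in which the multiplier acts as an internal price: it is the extra cost of serving one more unit of load at the optimum.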

Discounting for Public Cost-Benefit Analysis

NBER WORKING PAPER SERIES DISCOUNTING FOR PUBLIC COST-BENEFIT ANALYSIS Qingran Li William A. Pizer Working Paper 25413 http://www.nber.org/papers/w25413 NATIONAL BUREAU OF ECONOMIC RESEARCH 1050 Massachusetts Avenue Cambridge, MA 02138 December 2018 We thank Alex Marten, Dalia Patino-Echeverri, Martin Smith, Steven Sexton, Juan-Carlos Suárez Serrato for comments and feedback, as well as Helen Ladd for very helpful discussions. Jeffrey Vincent provided invaluable comments on an earlier draft. Xiaochen Sun provided excellent research assistance. The views expressed herein are those of the authors and do not necessarily reflect the views of the National Bureau of Economic Research. NBER working papers are circulated for discussion and comment purposes. They have not been peer-reviewed or been subject to the review by the NBER Board of Directors that accompanies official NBER publications. © 2018 by Qingran Li and William A. Pizer. All rights reserved. Short sections of text, not to exceed two paragraphs, may be quoted without explicit permission provided that full credit, including © notice, is given to the source. Discounting for Public Cost-Benefit Analysis Qingran Li and William A. Pizer NBER Working Paper No. 25413 December 2018 JEL No. D61,H43,Q54 ABSTRACT Standard U.S. practice for public cost-benefit analysis is to bound the discount rate with the interest rate paid by capital investment and rate received by consumers. These bounding cases arise when future benefits accrue to consumers in either a two-period model or as a perpetuity. We generalize to consider benefits paid in any future period. We find that the appropriate discount rate converges to the consumption rate for benefits in the distant future. -

The Shadow Prices of Voluntary Caregiving: Using Panel Data of Well-Being to Estimate the Cost of Informal Care

DISCUSSION PAPER SERIES IZA DP No. 11545 The Shadow Prices of Voluntary Caregiving: Using Panel Data of Well-Being to Estimate the Cost of Informal Care Rebecca McDonald Nattavudh Powdthavee MAY 2018 DISCUSSION PAPER SERIES IZA DP No. 11545 The Shadow Prices of Voluntary Caregiving: Using Panel Data of Well-Being to Estimate the Cost of Informal Care Rebecca McDonald University of Birmingham Nattavudh Powdthavee Warwick Business School and IZA MAY 2018 Any opinions expressed in this paper are those of the author(s) and not those of IZA. Research published in this series may include views on policy, but IZA takes no institutional policy positions. The IZA research network is committed to the IZA Guiding Principles of Research Integrity. The IZA Institute of Labor Economics is an independent economic research institute that conducts research in labor economics and offers evidence-based policy advice on labor market issues. Supported by the Deutsche Post Foundation, IZA runs the world’s largest network of economists, whose research aims to provide answers to the global labor market challenges of our time. Our key objective is to build bridges between academic research, policymakers and society. IZA Discussion Papers often represent preliminary work and are circulated to encourage discussion. Citation of such a paper should account for its provisional character. A revised version may be available directly from the author. IZA – Institute of Labor Economics Schaumburg-Lippe-Straße 5–9 Phone: +49-228-3894-0 53113 Bonn, Germany Email: [email protected] www.iza.org IZA DP No. 11545 MAY 2018 ABSTRACT The Shadow Prices of Voluntary Caregiving: Using Panel Data of Well-Being to Estimate the Cost of Informal Care This paper uses the wellbeing valuation (WV) approach to estimate and monetize the wellbeing impacts of informal care provision on caregivers. -

Guidelines for the Economic Analysis of Projects

GUIDELINES FOR THE ECONOMIC ANALYSIS OF PROJECTS Economics and Development Resource Center February 1997 FOREWORD A Bank Task Force on Project Quality established in 1993 considered several means of enhancing the effectiveness of Bank operations, in conjunction with borrowing member countries. A number of steps were identified to improve the quality of projects at the preparation stage as well as at the implementation stage. To this end, an Interdepartmental Working Group was established to reconsider the nature and role of the economic analysis of projects, and in particular to review the existing guidelines for project economic analysis, as a means of enhancing project quality at entry. This review has led to these new Guidelines for the Economic Analysis of Projects. Bank staff and consultants are required to undertake economic analysis of Bank loan projects in a relatively uniform way. Guidelines for this purpose were last issued in 1987. Several factors have come together to make it necessary to produce these new general guidelines. First, the Asian Development Bank has reconsidered its own priorities. A new set of objectives for classifying Bank projects has been established, resulting in a greater emphasis on projects producing nontraded outputs that meet people's needs directly. Second, the source of finance for many types of project is changing, with a greater role for the private sector. Greater emphasis therefore needs to be placed on the appropriate roles of the public and private sectors in the provision of goods and services before specific project proposals are brought forward for analysis. Third, Bank staff and consultants now have to deal with a broader range of issues than before.

Multi-Page.Pdf

WORLD BANK TECHNICAL PAPER NO. 338
Work in progress for public discussion
Public Disclosure Authorized
Measuring Economic Benefits for Water Investments and Policies
Robert A. Young

RECENT WORLD BANK TECHNICAL PAPERS
- No. 263 Le Moigne, Subramanian, Xie, and Giltner, editors, A Guide to the Formulation of Water Resources Strategy
- No. 264 Miller and Jones, Organic and Compost-Based Growing Media for Tree Seedling Nurseries
- No. 265 Viswanath, Building Partnerships for Poverty Reduction: The Participatory Project Planning Approach of the Women's Enterprise Management Training Outreach Program (WEMTOP)
- No. 266 Hill and Bender, Developing the Regulatory Environment for Competitive Agricultural Markets
- No. 267 Valdes and Schaeffer, Surveillance of Agricultural Prices and Trade: A Handbook for the Dominican Republic
- No. 268 Valdes and Schaeffer, Surveillance of Agricultural Prices and Trade: A Handbook for Colombia
- No. 269 Scheierling, Overcoming Agricultural Pollution of Water: The Challenge of Integrating Agricultural and Environmental Policies in the European Union
- No. 270 Banerjee, Rehabilitation of Degraded Forests in Asia
- No. 271 Ahmed, Technological Development and Pollution Abatement: A Study of How Enterprises Are Finding Alternatives to Chlorofluorocarbons
- No. 272 Greaney and Kellaghan, Equity Issues in Public Examinations in Developing Countries
- No. 273 Grimshaw and Helfer, editors, Vetiver Grass for Soil and Water Conservation, Land Rehabilitation, and Embankment Stabilization: A

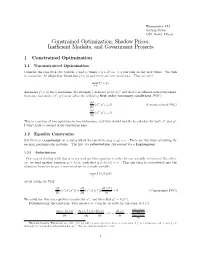

Constrained Optimization, Shadow Prices, Inefficient Markets, And

Economics 131 Section Notes
GSI: David Albouy
Constrained Optimization, Shadow Prices, Inefficient Markets, and Government Projects

1 Constrained Optimization

1.1 Unconstrained Optimization

Consider the case with two variables $x$ and $y$, where $x, y \in \mathbb{R}$, i.e. $x$ and $y$ can take on any real values. We wish to maximize the objective function $f(x, y)$ and there are no constraints. Thus we solve

$$\max_{x,y} f(x, y)$$

Assuming $f(x, y)$ has a maximum (for example, $f$ does not go to $\infty$) and that it is differentiable everywhere, then any maximum $(x^*, y^*)$ must solve the following first order necessary conditions (FOC)

$$\frac{\partial f}{\partial x}(x^*, y^*) = 0, \qquad \frac{\partial f}{\partial y}(x^*, y^*) = 0 \qquad \text{(Unconstrained FOC)}$$

This is a system of two equations in two unknowns, and thus should usually be solvable for both $x^*$ and $y^*$. I won’t look at second order conditions here.

1.2 Equality Constraints

Say there is a constraint on $x$ and $y$ which we can write as $g(x, y) = c$. There are two ways of solving the ensuing maximization problem: the first via substitution, the second via a Lagrangean.

1.2.1 Substitution

One way of dealing with this is to try to use this equation to solve for one variable in terms of the other, i.e. we find another function $y = h(x)$ such that $g(x, h(x)) = c$. This can then be substituted into the objective function to get a maximization in a single variable

$$\max_{x} f(x, h(x))$$

which yields the FOC

$$\frac{\partial f}{\partial x}(x^*, h(x^*)) + \frac{\partial f}{\partial y}(x^*, h(x^*)) \, \frac{dh}{dx}(x^*) = 0 \qquad \text{(Constrained FOC)}$$

We could use this one equation to solve for $x^*$, and then find $y^* = h(x^*)$.
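The substitution method can be checked numerically on a toy problem. The problem below is an assumption chosen for illustration and is not taken from the section notes: maximize f(x, y) = x y subject to x + y = c. The helper names (`h`, `total_derivative`, `solve_foc`) are likewise hypothetical. The sketch solves the constraint for y, estimates the total derivative of f(x, h(x)) by central differences, and finds the root of the constrained FOC by bisection.

```python
# Illustrative problem (an assumption, not from the notes):
# maximize f(x, y) = x * y subject to g(x, y) = x + y = c.

def f(x, y):
    return x * y


def h(x, c):
    """Solve the constraint x + y = c for y."""
    return c - x


def total_derivative(x, c, eps=1e-6):
    """Central-difference estimate of d/dx f(x, h(x)).

    This is the left-hand side of the constrained FOC:
    df/dx + df/dy * dh/dx, evaluated along the constraint.
    """
    up = f(x + eps, h(x + eps, c))
    down = f(x - eps, h(x - eps, c))
    return (up - down) / (2 * eps)


def solve_foc(c, lo, hi, tol=1e-10):
    """Bisection on the constrained FOC.

    Assumes the derivative is positive at lo and negative at hi,
    which holds for this concave toy problem on [0, c].
    """
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if total_derivative(mid, c) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


c = 10.0
x_star = solve_foc(c, 0.0, c)
y_star = h(x_star, c)
print("x* =", x_star, "y* =", y_star, "f* =", f(x_star, y_star))
```

For this toy problem the substituted objective is x(c - x), so the FOC c - 2x = 0 gives x* = y* = c/2 by hand, and the numerical root can be compared against that value.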