Natural Deduction

Recommended publications

Ling 130 Notes: a Guide to Natural Deduction Proofs

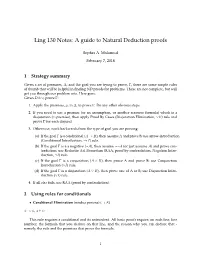

Ling 130 Notes: A guide to Natural Deduction proofs. Sophia A. Malamud, February 7, 2018. 1 Strategy summary. Given a set of premises, ∆, and the goal you are trying to prove, Γ, there are some simple rules of thumb that will be helpful in finding ND proofs for problems. These are not complete, but will get you through our problem sets. Here goes: Given ∆, prove Γ: 1. Apply the premises, p_i in ∆, to prove Γ. Do any other obvious steps. 2. If you need to use a premise (or an assumption, or another resource formula) which is a disjunction (∨-premise), then apply the Proof By Cases (Disjunction Elimination, ∨E) rule and prove Γ for each disjunct. 3. Otherwise, work backwards from the type of goal you are proving: (a) If the goal Γ is a conditional (A → B), then assume A and prove B; use the arrow-introduction (Conditional Introduction, →I) rule. (b) If the goal Γ is a negation (¬A), then assume ¬¬A (or just assume A) and prove a contradiction; use the Reductio Ad Absurdum (RAA, proof by contradiction, Negation Introduction, ¬I) rule. (c) If the goal Γ is a conjunction (A ∧ B), then prove A and prove B; use the Conjunction Introduction (∧I) rule. (d) If the goal Γ is a disjunction (A ∨ B), then prove one of A or B; use the Disjunction Introduction (∨I) rule. 4. If all else fails, use RAA (proof by contradiction). 2 Using rules for conditionals. Conditional Elimination (modus ponens) (→E): φ → ψ, φ ⊢ ψ. This rule requires a conditional and its antecedent.
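The rules of thumb in this excerpt can be tried out in a proof assistant. The following is a minimal sketch in Lean 4, not part of the Ling 130 notes: each `example` mirrors one rule named above, the hypothesis names (`hA`, `hB`, `hAB`, and so on) are illustrative choices, and Lean's term syntax is assumed as a stand-in for the notes' own proof format.

```lean
-- Conditional Introduction (→I): to prove A → B, assume A and prove B.
example (A B : Prop) (hB : B) : A → B :=
  fun _ => hB

-- Conditional Elimination (→E, modus ponens): from φ → ψ and φ, conclude ψ.
example (φ ψ : Prop) (h : φ → ψ) (hφ : φ) : ψ :=
  h hφ

-- Conjunction Introduction (∧I): prove A and prove B.
example (A B : Prop) (hA : A) (hB : B) : A ∧ B :=
  ⟨hA, hB⟩

-- Disjunction Introduction (∨I): prove one of the disjuncts.
example (A B : Prop) (hA : A) : A ∨ B :=
  Or.inl hA

-- Disjunction Elimination (∨E, proof by cases): prove the goal from each disjunct.
example (A B C : Prop) (h : A ∨ B) (hA : A → C) (hB : B → C) : C :=
  Or.elim h hA hB

-- Negation Introduction (¬I): assume A and derive a contradiction.
example (A B : Prop) (hAB : A → B) (hnB : ¬B) : ¬A :=
  fun hA => hnB (hAB hA)

-- Classical RAA: assuming ¬A leads to a contradiction, so A holds.
example (A : Prop) (h : ¬¬A) : A :=
  Classical.byContradiction h
```

The last example is the only one that needs classical reasoning; the others are accepted constructively.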

Classifying Material Implications Over Minimal Logic

Classifying Material Implications over Minimal Logic. Hannes Diener and Maarten McKubre-Jordens, March 28, 2018. Abstract: The so-called paradoxes of material implication have motivated the development of many non-classical logics over the years [2–5, 11]. In this note, we investigate some of these paradoxes and classify them, over minimal logic. We provide proofs of equivalence and semantic models separating the paradoxes where appropriate. A number of equivalent groups arise, all of which collapse with unrestricted use of double negation elimination. Interestingly, the principle ex falso quodlibet, and several weaker principles, turn out to be distinguishable, giving perhaps supporting motivation for adopting minimal logic as the ambient logic for reasoning in the possible presence of inconsistency. Keywords: reverse mathematics; minimal logic; ex falso quodlibet; implication; paraconsistent logic; Peirce’s principle. 1 Introduction. The project of constructive reverse mathematics [6] has given rise to a wide literature where various theorems of mathematics and principles of logic have been classified over intuitionistic logic. What is less well-known is that the subtle difference that arises when the principle of explosion, ex falso quodlibet, is dropped from intuitionistic logic (thus giving (Johansson’s) minimal logic) enables the distinction of many more principles. The focus of the present paper is a range of principles known collectively (but not exhaustively) as the paradoxes of material implication; paradoxes because they illustrate that the usual interpretation of formal statements of the form “… → …” as informal statements of the form “if … then …” produces counter-intuitive results. Some of these principles were hinted at in [9]. Here we present a carefully worked-out chart, classifying a number of such principles over minimal logic.

Chapter 5: Methods of Proof for Boolean Logic

Chapter 5: Methods of Proof for Boolean Logic. § 5.1 Valid inference steps. Conjunction elimination: sometimes called simplification. From a conjunction, infer any of the conjuncts. • From P ∧ Q, infer P (or infer Q). Conjunction introduction: sometimes called conjunction. From a pair of sentences, infer their conjunction. • From P and Q, infer P ∧ Q. § 5.2 Proof by cases. This is another valid inference step (it will form the rule of disjunction elimination in our formal deductive system and in Fitch), but it is also a powerful proof strategy. In a proof by cases, one begins with a disjunction (as a premise, or as an intermediate conclusion already proved). One then shows that a certain consequence may be deduced from each of the disjuncts taken separately. One concludes that that same sentence is a consequence of the entire disjunction. • From P ∨ Q, and from the fact that S follows from P and S also follows from Q, infer S. The general proof strategy looks like this: if you have a disjunction, then you know that at least one of the disjuncts is true; you just don’t know which one. So you consider the individual “cases” (i.e., disjuncts), one at a time. You assume the first disjunct, and then derive your conclusion from it. You repeat this process for each disjunct. So it doesn’t matter which disjunct is true: you get the same conclusion in any case. Hence you may infer that it follows from the entire disjunction. In practice, this method of proof requires the use of “subproofs”; we will take these up in the next chapter when we look at formal proofs.
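The proof-by-cases strategy described in this excerpt can be illustrated with a small Lean 4 sketch. It is not taken from the chapter, which works in Fitch; the statement proved (commutativity of ∨) and the names `hP` and `hQ` are assumptions chosen for illustration. Each branch of `cases` plays the role of one subproof.

```lean
-- Proof by cases on P ∨ Q: derive the same conclusion, Q ∨ P, from each disjunct.
example (P Q : Prop) (h : P ∨ Q) : Q ∨ P := by
  cases h with
  | inl hP => exact Or.inr hP  -- case 1: assume P, conclude Q ∨ P
  | inr hQ => exact Or.inl hQ  -- case 2: assume Q, conclude Q ∨ P
```

Since both cases reach `Q ∨ P`, the conclusion follows from the disjunction as a whole, whichever disjunct happens to be true.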

The Deduction Rule and Linear and Near-Linear Proof Simulations

The Deduction Rule and Linear and Near-linear Proof Simulations. Maria Luisa Bonet, Department of Mathematics, University of California, San Diego; Samuel R. Buss, Department of Mathematics, University of California, San Diego. (Both authors supported in part by NSF Grant DMS-8902480.) Abstract: We introduce new proof systems for propositional logic, simple deduction Frege systems, general deduction Frege systems and nested deduction Frege systems, which augment Frege systems with variants of the deduction rule. We give upper bounds on the lengths of proofs in Frege proof systems compared to lengths in these new systems. As applications we give near-linear simulations of the propositional Gentzen sequent calculus and the natural deduction calculus by Frege proofs. The length of a proof is the number of lines (or formulas) in the proof. A general deduction Frege proof system provides at most quadratic speedup over Frege proof systems. A nested deduction Frege proof system provides at most a nearly linear speedup over Frege systems, where by “nearly linear” is meant the ratio of proof lengths is O(α(n)) where α is the inverse Ackermann function. A nested deduction Frege system can linearly simulate the propositional sequent calculus, the tree-like general deduction Frege calculus, and the natural deduction calculus. Hence a Frege proof system can simulate all those proof systems with proof lengths bounded by O(n · α(n)). Also we show that a Frege proof of n lines can be transformed into a tree-like Frege proof of O(n log n) lines and of height O(log n). As a corollary of this fact we can prove that natural deduction and sequent calculus tree-like systems simulate Frege systems with proof lengths bounded by O(n log n).

MOTIVATING HILBERT SYSTEMS Hilbert Systems Are Formal Systems

MOTIVATING HILBERT SYSTEMS. Hilbert systems are formal systems that encode the notion of a mathematical proof, which are used in the branch of mathematics known as proof theory. There are other formal systems that proof theorists use, with their own advantages and disadvantages. The advantage of Hilbert systems is that they are simpler than the alternatives in terms of the number of primitive notions they involve. Formal systems for proof theory work by deriving theorems from axioms using rules of inference. The distinctive characteristic of Hilbert systems is that they have very few primitive rules of inference; in fact a Hilbert system with just one primitive rule of inference, modus ponens, suffices to formalize proofs using first-order logic, and first-order logic is sufficient for all mathematical reasoning. Modus ponens is the rule of inference that says that if we have theorems of the forms p → q and p, where p and q are formulas, then q is also a theorem. This makes sense given the interpretation of p → q as meaning “if p is true, then so is q”. The simplicity of inference in Hilbert systems is compensated for by a somewhat more complicated set of axioms. For minimal propositional logic, the two axiom schemes below suffice: (1) For every pair of formulas p and q, the formula p → (q → p) is an axiom. (2) For every triple of formulas p, q and r, the formula (p → (q → r)) → ((p → q) → (p → r)) is an axiom. One thing that had always bothered me when reading about Hilbert systems was that I couldn't see how people could come up with these axioms other than by a stroke of luck or genius.
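To see the two axiom schemes at work, here is a hedged Lean 4 sketch, not from the excerpt. The names `axK` and `axS` are hypothetical labels for schemes (1) and (2), and function application is used to stand in for modus ponens. The final `example` derives p → p using nothing but instances of the two schemes and modus ponens, which is the standard first exercise in a Hilbert system.

```lean
-- Scheme (1): p → (q → p). The name `axK` is a hypothetical label.
theorem axK (p q : Prop) : p → (q → p) :=
  fun hp _ => hp

-- Scheme (2): (p → (q → r)) → ((p → q) → (p → r)). The name `axS` is hypothetical.
theorem axS (p q r : Prop) : (p → (q → r)) → ((p → q) → (p → r)) :=
  fun f g hp => f hp (g hp)

-- p → p from the two schemes, with application playing the role of modus ponens:
--   axS p (p → p) p : (p → ((p → p) → p)) → ((p → (p → p)) → (p → p))
--   axK p (p → p)   : p → ((p → p) → p)
--   axK p p         : p → (p → p)
example (p : Prop) : p → p :=
  axS p (p → p) p (axK p (p → p)) (axK p p)
```

In Lean the two schemes are provable rather than postulated, so this only illustrates how instances are combined; a genuine Hilbert system would take them as axioms.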

Proof Theory Can Be Viewed As the General Study of Formal Deductive Systems

Proof Theory. Jeremy Avigad, December 19, 2017. Abstract: Proof theory began in the 1920s as a part of Hilbert’s program, which aimed to secure the foundations of mathematics by modeling infinitary mathematics with formal axiomatic systems and proving those systems consistent using restricted, finitary means. The program thus viewed mathematics as a system of reasoning with precise linguistic norms, governed by rules that can be described and studied in concrete terms. Today such a viewpoint has applications in mathematics, computer science, and the philosophy of mathematics. Keywords: proof theory, Hilbert’s program, foundations of mathematics. 1 Introduction. At the turn of the nineteenth century, mathematics exhibited a style of argumentation that was more explicitly computational than is common today. Over the course of the century, the introduction of abstract algebraic methods helped unify developments in analysis, number theory, geometry, and the theory of equations, and work by mathematicians like Richard Dedekind, Georg Cantor, and David Hilbert towards the end of the century introduced set-theoretic language and infinitary methods that served to downplay or suppress computational content. This shift in emphasis away from calculation gave rise to concerns as to whether such methods were meaningful and appropriate in mathematics. The discovery of paradoxes stemming from overly naive use of set-theoretic language and methods led to even more pressing concerns as to whether the modern methods were even consistent. This led to heated debates in the early twentieth century and what is sometimes called the “crisis of foundations.” In lectures presented in 1922, Hilbert launched his Beweistheorie, or Proof Theory, which aimed to justify the use of modern methods and settle the problem of foundations once and for all.

Two Sources of Explosion

Two sources of explosion. Eric Kao, Computer Science Department, Stanford University, Stanford, CA 94305, United States of America. Abstract: In pursuit of enhancing the deductive power of Direct Logic while avoiding explosiveness, Hewitt has proposed including the law of excluded middle and proof by self-refutation. In this paper, I show that the inclusion of either one of these inference patterns causes paraconsistent logics such as Hewitt's Direct Logic and Besnard and Hunter's quasi-classical logic to become explosive. 1 Introduction. A central goal of a paraconsistent logic is to avoid explosiveness: the inference of any arbitrary sentence β from an inconsistent premise set {p, ¬p} (ex falso quodlibet). Hewitt's [2] Direct Logic and Besnard and Hunter's quasi-classical logic (QC) [1, 5, 4] both seek to preserve the deductive power of classical logic “as much as possible” while still avoiding explosiveness. Their work fits into the ongoing research program of identifying some “reasonable” and “maximal” subsets of classically valid rules and axioms that do not lead to explosiveness. To this end, it is natural to consider which classically sound deductive rules and axioms one can introduce into a paraconsistent logic without causing explosiveness. Hewitt [3] proposed including the law of excluded middle and the proof by self-refutation rule (a very special case of proof by contradiction) but did not show whether the resulting logic would be explosive. In this paper, I show that for quasi-classical logic and its variant, the addition of either the law of excluded middle or the proof by self-refutation rule in fact leads to explosiveness.
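For readers unfamiliar with explosiveness, the following Lean 4 sketch shows the inference pattern that paraconsistent logics are designed to block. It is an illustration in Lean's own logic, which is explosive, and says nothing about Direct Logic or quasi-classical logic themselves; the hypothesis names `hp` and `hnp` are illustrative.

```lean
-- Ex falso quodlibet: from the inconsistent premises p and ¬p, any β follows.
example (p β : Prop) (hp : p) (hnp : ¬p) : β :=
  absurd hp hnp

-- The same step spelled out: ¬p unfolds to p → False,
-- and False.elim turns a proof of False into a proof of any β.
example (p β : Prop) (hp : p) (hnp : ¬p) : β :=
  False.elim (hnp hp)
```

The second form makes the source of explosion visible: it is the elimination rule for `False`, which a paraconsistent (or minimal) logic does not accept.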

The Corcoran-Smiley Interpretation of Aristotle's Syllogistic As

Aristotle's Syllogistic and Core Logic, by Neil Tennant, Department of Philosophy, The Ohio State University, Columbus, Ohio 43210. History and Philosophy of Logic, 00 (Month 200x), 1–27 (November 11, 2013). I use the Corcoran-Smiley interpretation of Aristotle's syllogistic as my starting point for an examination of the syllogistic from the vantage point of modern proof theory. I aim to show that fresh logical insights are afforded by a proof-theoretically more systematic account of all four figures. First I regiment the syllogisms in the Gentzen–Prawitz system of natural deduction, using the universal and existential quantifiers of standard first-order logic, and the usual formalizations of Aristotle's sentence-forms. I explain how the syllogistic is a fragment of my (constructive and relevant) system of Core Logic. Then I introduce my main innovation: the use of binary quantifiers, governed by introduction and elimination rules. The syllogisms in all four figures are re-proved in the binary system, and are thereby revealed as all on a par with each other. I conclude with some comments and results about grammatical generativity, ecthesis, perfect validity, skeletal validity and Aristotle's chain principle. 1. Introduction: the Corcoran-Smiley interpretation of Aristotle's syllogistic as concerned with deductions. Two influential articles, Corcoran 1972 and Smiley 1973, convincingly argued that Aristotle's syllogistic logic anticipated the twentieth century's systematizations of logic in terms of natural deductions. They also showed how Aristotle in effect advanced a completeness proof for his deductive system.

Chapter 9: Initial Theorems About Axiom System

Chapter 9: Initial Theorems about Axiom System AS1 (Hardegree). Contents: 1. Theorems in Axiom Systems versus Theorems about Axiom Systems; 2. Proofs about Axiom Systems; 3. Initial Examples of Proofs in the Metalanguage about AS1; 4. The Deduction Theorem; 5. Using Mathematical Induction to do Proofs about Derivations; 6. Setting up the Proof of the Deduction Theorem; 7. Informal Proof of the Deduction Theorem; 8. The Lemmas Supporting the Deduction Theorem; 9. Rules R1 and R2 are Required for any DT-MP-Logic; 10. The Converse of the Deduction Theorem and Modus Ponens; 11. Some General Theorems About …; 12. Further Theorems About AS1; 13. Appendix: Summary of Theorems about AS1.

Does Reductive Proof Theory Have a Viable Rationale?

DOES REDUCTIVE PROOF THEORY HAVE A VIABLE RATIONALE? Solomon Feferman. Abstract: The goals of reduction and reductionism in the natural sciences are mainly explanatory in character, while those in mathematics are primarily foundational. In contrast to global reductionist programs which aim to reduce all of mathematics to one supposedly “universal” system or foundational scheme, reductive proof theory pursues local reductions of one formal system to another which is more justified in some sense. In this direction, two specific rationales have been proposed as aims for reductive proof theory, the constructive consistency-proof rationale and the foundational reduction rationale. However, recent advances in proof theory force one to consider the viability of these rationales. Despite the genuine problems of foundational significance raised by that work, the paper concludes with a defense of reductive proof theory at a minimum as one of the principal means to lay out what rests on what in mathematics. In an extensive appendix to the paper, various reduction relations between systems are explained and compared, and arguments against proof-theoretic reduction as a “good” reducibility relation are taken up and rebutted. 1 Reduction and reductionism in the natural sciences and in mathematics. The purposes of reduction in the natural sciences and in mathematics are quite different. In the natural sciences, one main purpose is to explain certain phenomena in terms of more basic phenomena, such as the nature of the chemical bond in terms of quantum mechanics, and of macroscopic genetics in terms of molecular biology. In mathematics, the main purpose is foundational. This is not to be understood univocally; as I have argued in (Feferman 1984), there are a number of foundational ways that are pursued in practice.

Notes on Proof Theory

Notes on Proof Theory. Master 1 “Informatique”, Univ. Paris 13; Master 2 “Logique Mathématique et Fondements de l’Informatique”, Univ. Paris 7. Damiano Mazza, November 2016 (last edit: March 29, 2021). Contents: 1 Propositional Classical Logic (1.1 Formulas and truth semantics; 1.2 Atomic negation). 2 Sequent Calculus (2.1 Two-sided formulation; 2.2 One-sided formulation). 3 First-order Quantification (3.1 Formulas and truth semantics; 3.2 Sequent calculus; 3.3 Ultrafilters). 4 Completeness (4.1 Exhaustive search; 4.2 The completeness proof). 5 Undecidability and Incompleteness (5.1 Informal computability; 5.2 Incompleteness: a road map; 5.3 Logical theories; 5.4 Arithmetical theories; 5.5 The incompleteness theorems). 6 Cut Elimination. 7 Intuitionistic Logic (7.1 Sequent calculus; 7.2 The relationship between intuitionistic and classical logic; 7.3 Minimal logic). 8 Natural Deduction (8.1 Sequent presentation; 8.2 Natural deduction and sequent calculus; 8.3 Proof tree presentation: 8.3.1 Minimal natural deduction, 8.3.2 Intuitionistic natural deduction, 8.3.3 Classical natural deduction; 8.4 Normalization (cut-elimination in natural deduction)). 9 The Curry-Howard Correspondence (9.1 The simply typed λ-calculus; 9.2 Product and sum types). 10 System F (10.1 Intuitionistic second-order propositional logic; 10.2 Polymorphic types; 10.3 Programming in system F: 10.3.1 Free structures, …).

An Anti-Locally-Nameless Approach to Formalizing Quantifiers Olivier Laurent

An Anti-Locally-Nameless Approach to Formalizing Quantifiers. Olivier Laurent, Univ Lyon, EnsL, UCBL, CNRS, LIP, Lyon, France. To cite this version: Olivier Laurent. An Anti-Locally-Nameless Approach to Formalizing Quantifiers. Certified Programs and Proofs, Jan 2021, virtual, Denmark. pp.300-312, 10.1145/3437992.3439926. hal-03096253. HAL Id: hal-03096253, https://hal.archives-ouvertes.fr/hal-03096253, submitted on 4 Jan 2021. Abstract: We investigate the possibility of formalizing quantifiers in proof theory while avoiding, as far as possible, the use of true binding structures, α-equivalence or variable renamings. We propose a solution with two kinds of variables in terms and formulas, as originally done by Gentzen. In this … Introduction (excerpt): … all cases have been covered. This works perfectly well for various propositional systems, but as soon as (first-order) quantifiers enter the picture, we have to face one of the nightmares of formalization: quantifiers are binders... The question of the formalization of binders does not yet have an answer which makes perfect consensus.