Efficient Implementation of 3D Finite Difference Schemes on Recent Processor Architectures

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Hydroinformatik II "Prozess-Simulation und Systemanalyse" (Hydroinformatics II: Process Simulation and Systems Analysis)

Version 6.01, 5 May 2017. Hydroinformatik II "Prozess-Simulation und Systemanalyse". Prof. Dr.-Ing. Olaf Kolditz, TU Dresden / UFZ Leipzig, Applied Environmental Systems Analysis, Department of Environmental Informatics, Summer Semester 2015. Lecture concept: We continue ... Having "made friends" with object-oriented programming in C++ in the first semester of Hydroinformatics, this semester we also turn to applications in the disciplines of hydrology, water management, and waste management. Naturally, we will work with very simple examples (and will not, for example, compute complete wastewater treatment plants; our colleagues in urban water management are responsible for that). Nevertheless, at the end of the semester we also want to give you an insight into our ongoing research, e.g. the use of numerical models in waste storage [11], in hydrology [5], and in groundwater modelling [15]. The lecture schedule is as follows: Fundamentals - Mechanics (2-3 lectures); Fundamentals - Numerics (2-3 lectures); Process understanding - Benchmarks (3 lectures); Applications (2 lectures); Visual C++ with Qt (4 lectures). Figure 1: Textbook on "Numerical Hydromechanics" [8]. Fundamentals: For the first two parts, mechanics and numerics, I largely use material from my Springer textbook "Numerical Methods in Environmental Fluid Mechanics" [8] (for civil engineers, see Fig. 1). As German-language literature I naturally recommend the Dresden classics [7] and [2] as well as [3] (hydromechanics, general), [12] (open-channel hydraulics), and [6] (groundwater hydraulics).

Numerical Methods in Fluid Flow and Heat Transfer

Numerical Methods in Fluid Flow and Heat Transfer. Dr. Hasan Gunes, [email protected], http://atlas.cc.itu.edu.tr/~guneshasa. INTRODUCTION: The Scientific Method and Mathematical Modeling. The mathematical formulation of the problem is the reduction of the physical problem to a set of either algebraic or differential equations subject to certain assumptions. The process of modeling physical systems in the real world should generally follow the path illustrated schematically in the chart below. [Chart: Numerical Solution Procedure. Physical system (reality) → (1) physical laws and models → mathematical model (governing equations; in fluid dynamics, PDEs), which may admit an analytical solution → (2) discretization → system of algebraic equations (or ODEs) → (3) matrix solver → numerical solution.] [Chart: from the physical world (physical properties, assumptions, conservation laws) to a mathematical model (algebraic equations or differential equations, the latter ordinary or partial; linear or nonlinear, with nonlinear problems linearized and iterated), then to an exact or approximate solution (analytical or numerical) and a test of the solution. Tools listed: FORTRAN, C, MATLAB, MAPLE, TECPLOT.] Solution Approaches: Three approaches are used to solve a problem in fluid mechanics and heat transfer. 1. Experimental methods: capable of being most realistic; experiment required; scaling problems; measurement difficulties; operating costs. 2. Theoretical (analytical) methods: clean, general information in formula form; usually restricted to simple geometry and physics; usually restricted to linear problems. 3. Numerical (CFD, computational) methods (simulation): no restriction to linearity; complicated physics can be treated; time evolution of flow; large Re flow. Disadvantages: truncation errors; boundary condition problems; computer costs; need for a mathematical model for certain complex phenomena. Simulation: The Third Pillar of Science. Traditional scientific and engineering paradigm: 1) Do theory or paper design.

Achieving a Sustainable Environment Using Numerical Method for the Solution of Advection Equation in Fluid Dynamics

A publication of The Italian Association of Chemical Engineering, online at www.aidic.it/cet. CHEMICAL ENGINEERING TRANSACTIONS, VOL. 63, 2018. Guest Editors: Jeng Shiun Lim, Wai Shin Ho, Jiří J. Klemeš. Copyright © 2018, AIDIC Servizi S.r.l. ISBN 978-88-95608-61-7; ISSN 2283-9216. DOI: 10.3303/CET1863106. Achieving a Sustainable Environment using Numerical Method for the Solution of Advection Equation in Fluid Dynamics. Olusegun Adeyemi Olaiju (a,*), Yeak Su Hoe (a), Ezekiel Babatunde Ogunbode (b), Jonathan Kehinde Fabi (c), Ernest Ituma Egba (d). (a) Department of Mathematics, Faculty of Science, Universiti Teknologi Malaysia, Skudai, 81300 Johor Bahru, Malaysia. (b) Department of Building, Federal University of Technology Minna, Niger State, Nigeria. (c) Department of Quantity Surveying, Federal Polytechnic Ilaro, Ogun State, Nigeria. (d) Department of Technology and Vocational Education, Ebonyi State University, Abakaliki, Ebonyi State, Nigeria. [email protected] The paper compares some explicit numerical methods for solving the advection equation that could be used to describe transportation of a pollutant in one direction. The one-dimensional advection equation is solved by using five different standard finite difference schemes (the upwind, FTCS, Lax-Friedrichs, Lax-Wendroff and Leith's methods) via C codes. An example is used for comparison; the numerical results are compared with the analytical solution. It is found that, for the example studied, all the explicit finite difference methods give satisfactory solutions to the advection problem. 1. Introduction: Advection can be referred to as a lateral or horizontal transfer of mass, heat, or other properties, or as the movement of some material dissolved or suspended in a fluid. This definition suggests that winds blowing across the earth's surface represent air advection.
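As an illustration of the simplest of the five schemes named in this abstract, here is a minimal Python sketch of the first-order upwind method for u_t + c u_x = 0. It is not the paper's C code: the function name `upwind_advection`, the periodic boundary treatment, the Gaussian initial profile, and all grid parameters are assumptions chosen only to make the example self-contained.

```python
import math

def upwind_advection(u0, c, dx, dt, steps):
    """Advance u_t + c*u_x = 0 (c >= 0) with first-order upwind, periodic BCs."""
    nu = c * dt / dx  # Courant number
    if not 0.0 <= nu <= 1.0:
        raise ValueError("upwind needs 0 <= c*dt/dx <= 1 for stability")
    u = list(u0)
    for _ in range(steps):
        # u[j - 1] with j = 0 wraps to the last cell, which gives periodicity.
        u = [u[j] - nu * (u[j] - u[j - 1]) for j in range(len(u))]
    return u

# Hypothetical test problem: a Gaussian pulse carried to the right.
n = 100
dx = 1.0 / n
c = 1.0
dt = 0.5 * dx          # Courant number 0.5
steps = 80             # final time t = 0.4
x = [j * dx for j in range(n)]
u0 = [math.exp(-200.0 * (xj - 0.3) ** 2) for xj in x]
u = upwind_advection(u0, c, dx, dt, steps)

peak = max(range(n), key=lambda j: u[j])
print("pulse centre moved from x = 0.3 to x =", x[peak])
print("peak height dropped from", max(u0), "to", max(u))
```

The pulse travels at the correct speed, but its peak is visibly lower than at the start: the upwind scheme is stable for Courant numbers up to 1 at the price of numerical diffusion, which is the kind of behaviour a comparison against the analytical solution exposes.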

27Th Biennial Conference on Numerical Analysis Contents

27th Biennial Conference on Numerical Analysis, 27-30 June 2017. Contents: Introduction; Invited Speakers; Abstracts of Invited Talks; Abstracts of Minisymposia; Abstracts of Contributed Talks. Introduction: Dear Participant, On behalf of the University of Strathclyde's Numerical Analysis and Scientific Computing Group, it is our pleasure to welcome you to the 27th Biennial Numerical Analysis conference. This is the fifth time the meeting is being held at Strathclyde, continuing the long series of conferences originally hosted in Dundee. We are looking forward to meeting delegates from around the world, with attendees from many different countries across six continents. Once again, we have been lucky to secure a stellar list of invited speakers who will address us on a variety of topics across all areas of numerical analysis. As well as a selection of specialised minisymposia, we will also have a wide range of contributed talks, so hopefully there is something to suit everyone's interests. Although the meeting is funded almost entirely from the registration fees of the participants, additional financial support for some overseas participants has been provided by the Dundee Numerical Analysis Fund, started by Professor Gene Golub from Stanford University in 2007. As well as attending the scientific sessions, we hope you will also take advantage of the opportunity to renew old friendships and meet new people as part of the communal meals and social programme. We are indebted to the City of Glasgow for once again generously sponsoring a wine reception at the City Chambers on Tuesday evening.

Analysis of Stability and Accuracy for Forward Time Centered Space Approximation by Using Modified Equation

Sci.Int.(Lahore), 27(3), 2027-2029, 2015. ISSN 1013-5316; CODEN: SINTE 8. ANALYSIS OF STABILITY AND ACCURACY FOR FORWARD TIME CENTERED SPACE APPROXIMATION BY USING MODIFIED EQUATION. Tahir.Ch (1), N.A.Shahid (1), *M.F.Tabassum (2), A. Sana (1), S.Nazir (3). (1) Department of Mathematics, Lahore Garrison University, Lahore, Pakistan. (2) Department of Mathematics, University of Management and Technology, Lahore, Pakistan. (3) Department of Mathematics, University of Engineering and Technology, Lahore, Pakistan. *Corresponding Author: Muhammad Farhan Tabassum, [email protected], +92-321-4280420. Abstract: In this paper we investigate the quantitative behavior of a wide range of numerical methods for solving linear partial differential equations (PDEs). In order to study the properties of the numerical solutions, such as accuracy, consistency, and stability, we use the method of the modified equation, which is an effective approach. To determine the necessary and sufficient conditions for stability, we use a truncated version of the modified equation, which gives a clearer view of the nature of the dispersive as well as the dissipative errors. The heat equation with Dirichlet boundary conditions can serve as a model for heat conduction, soil consolidation, ground water flow, etc. The accuracy and stability of the Forward Time Centered Space (FTCS) scheme are checked by using the Modified Differential Equation (MDE). Key words: Accuracy, Stability, Modified Equation, Dispersive error, Forward Time Centered Space Scheme. 1. INTRODUCTION: To analyze a simple linear partial differential equation with the help of the modified equation, we consider the one-dimensional heat equation (transient diffusion equation), which is a parabolic partial differential equation. This equation describes the temperature distribution in a bar as a function of time. 2.3 Forward Time Centered Space Scheme: The partial differential equation of the following form [5]
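For orientation, the standard textbook form of the FTCS scheme and its leading-order modified equation for the one-dimensional heat equation can be written as follows. The excerpt above is cut off before the paper's own formulas, so the notation here (diffusivity \alpha, steps \Delta t and \Delta x, mesh ratio r) is assumed for illustration and may differ from the authors'.

```latex
% FTCS discretization of u_t = \alpha u_{xx} (notation assumed, not the paper's)
\[
\frac{u_j^{n+1} - u_j^{n}}{\Delta t}
  = \alpha\,\frac{u_{j+1}^{n} - 2u_j^{n} + u_{j-1}^{n}}{\Delta x^{2}},
\qquad
r = \frac{\alpha\,\Delta t}{\Delta x^{2}} .
\]
% Modified equation: Taylor-expand the scheme and eliminate u_{tt} via u_{tt} = \alpha^2 u_{xxxx}
\[
u_t = \alpha\,u_{xx}
  + \left(\frac{\alpha\,\Delta x^{2}}{12} - \frac{\alpha^{2}\,\Delta t}{2}\right) u_{xxxx} + \cdots
  = \alpha\,u_{xx}
  + \frac{\alpha\,\Delta x^{2}}{2}\left(\frac{1}{6} - r\right) u_{xxxx} + \cdots
\]
```

The leading error term contains only an even-order derivative, so at this order the error is dissipative rather than dispersive, and it vanishes for r = 1/6. The von Neumann stability bound for the scheme is r \le 1/2.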

UNIVERSITY of CALIFORNIA, SAN DIEGO Fractional Diffusion

UNIVERSITY OF CALIFORNIA, SAN DIEGO. Fractional Diffusion: Numerical Methods and Applications in Neuroscience. A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Bioengineering with a specialization in Multi-Scale Biology by Nirupama Bhattacharya. Committee in charge: Professor Gabriel A. Silva, Chair; Professor Henry Abarbanel; Professor Gert Cauwenberghs; Professor Todd Coleman; Professor Marcos Intaglietta. 2014. Copyright Nirupama Bhattacharya, 2014. All rights reserved. The dissertation of Nirupama Bhattacharya is approved, and it is acceptable in quality and form for publication on microfilm and electronically: Chair, University of California, San Diego, 2014. DEDICATION: Thank you to my dearest family and friends for all your love and support over the years.
TABLE OF CONTENTS
Signature Page; Dedication; Table of Contents; List of Figures; List of Tables; Acknowledgements; Vita; Abstract of the Dissertation
Chapter 1 Introduction: 1.1 Fractional Calculus, Fractional Differential Equations, and Applications in Science and Engineering; 1.2 Fractional Diffusion
Chapter 2 From Random Walk to the Fractional Diffusion Equation: 2.1 Introduction to the Random Walk Framework; 2.2 Einstein's Formalism; 2.3 Continuous Time Random Walk and the Fractional Diffusion Equation; 2.4 Fundamental Solution
Chapter 3 The Fractional Diffusion Equation and Adaptive Time Step Memory: 3.1 Introduction; 3.2 The Grünwald-Letnikov Derivative; 3.2.1 Derivation of the Fractional Diffusion Equation; 3.2.2 Definition of the Grünwald-Letnikov Derivative; 3.2.3 Discretization of the Fractional Diffusion Equation

Introductory Finite Difference Methods for PDEs

Professor D. M. Causon & Professor C. G. Mingham. Introductory Finite Difference Methods for PDEs. Download free textbooks at BookBooN.com, no registration needed. © 2010 Professor D. M. Causon, Professor C. G. Mingham & Ventus Publishing ApS. ISBN 978-87-7681-642-1.
Contents
Preface
1. Introduction: 1.1 Partial Differential Equations; 1.2 Solution to a Partial Differential Equation; 1.3 PDE Models; 1.4 Classification of PDEs; 1.5 Discrete Notation; 1.6 Checking Results; 1.7 Exercise 1
2. Fundamentals: 2.1 Taylor's Theorem; 2.2 Taylor's Theorem Applied to the Finite Difference Method (FDM); 2.3 Simple Finite Difference Approximation to a Derivative; 2.4 Example: Simple Finite Difference Approximations to a Derivative; 2.5 Constructing a Finite Difference Toolkit; 2.6 Simple Example of a Finite Difference Scheme; 2.7 Pen and Paper Calculation (very important); 2.8 Exercise 2a; 2.9 Exercise 2b

Numerical Solution of Partial Differential Equations

Numerical solution of partial differential equations. Dr. Louise Olsen-Kettle, The University of Queensland, School of Earth Sciences, Centre for Geoscience Computing. E-mail: [email protected] Web: http://researchers.uq.edu.au/researcher/768 @DrOlsenKettle ISBN: 978-1-74272-149-1. Acknowledgements: Special thanks to Cinnamon Eliot, who helped typeset these lecture notes in LaTeX. Note to reader: This document and the code for the examples can be downloaded from http://espace.library.uq.edu.au/view/UQ:239427. If any of the links to code are not working, please contact my email address and I can send you the code.
Contents
1 Overview of PDEs: 1.1 Classification of PDEs; 1.1.1 Elliptic; 1.1.2 Hyperbolic; 1.1.3 Parabolic; 1.2 Implicit vs Explicit Methods to Solve PDEs; 1.3 Well-posed and ill-posed PDEs
I Numerical solution of parabolic equations
2 Explicit methods for 1-D heat or diffusion equation: 2.1 Analytic solution: Separation of variables; 2.2 Numerical solution of 1-D heat equation; 2.2.1 Difference Approximations for Derivative Terms in PDEs; 2.2.2 Numerical solution of 1-D heat equation using the finite difference method; 2.2.3 Explicit Forward Euler method; 2.2.4 Stability criteria for forward Euler method; 2.3 Method of lines; 2.3.1 Example
3 Implicit methods for 1-D heat equation: 3.1 Implicit Backward Euler Method for 1-D heat equation

Finite-Difference Approximations to the Heat Equation

Finite-Difference Approximations to the Heat Equation. Gerald W. Recktenwald. January 21, 2004. Abstract: This article provides a practical overview of numerical solutions to the heat equation using the finite difference method. The forward time, centered space (FTCS), the backward time, centered space (BTCS), and Crank-Nicolson schemes are developed and applied to a simple problem involving the one-dimensional heat equation. Complete, working Matlab codes for each scheme are presented. The results of running the codes on finer (one-dimensional) meshes and with smaller time steps are demonstrated. These sample calculations show that the schemes realize theoretical predictions of how their truncation errors depend on mesh spacing and time step. The Matlab codes are straightforward and allow the reader to see the differences in implementation between the explicit method (FTCS) and the implicit methods (BTCS and Crank-Nicolson). The codes also allow the reader to experiment with the stability limit of the FTCS scheme. 1 The Heat Equation: The one-dimensional heat equation is $$\frac{\partial \phi}{\partial t} = \alpha \frac{\partial^2 \phi}{\partial x^2}, \qquad 0 \le x \le L, \quad t \ge 0 \qquad (1)$$ where $\phi = \phi(x, t)$ is the dependent variable, and $\alpha$ is a constant coefficient. Equation (1) is a model of transient heat conduction in a slab of material with thickness $L$. The domain of the solution is a semi-infinite strip of width $L$ that continues indefinitely in time. The material property $\alpha$ is the thermal diffusivity. In a practical computation, the solution is obtained only for a finite time, say $t_{\max}$. Solution to Equation (1) requires specification of boundary conditions at $x = 0$ and $x = L$, and initial conditions at $t = 0$.
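To make the explicit scheme named in this abstract concrete, here is a minimal Python sketch of FTCS applied to Equation (1). It is not a port of the article's Matlab codes: the function name `ftcs_heat`, the zero Dirichlet boundary values, the sine initial condition, and the parameter values are assumptions picked so that a closed-form solution is available for comparison.

```python
import math

def ftcs_heat(phi0, alpha, dx, dt, steps):
    """March phi_t = alpha*phi_xx forward in time with FTCS.

    The two end values of phi0 are treated as fixed (Dirichlet) boundary values.
    """
    r = alpha * dt / dx ** 2
    if r > 0.5:
        raise ValueError("FTCS is unstable for alpha*dt/dx**2 > 1/2")
    phi = list(phi0)
    for _ in range(steps):
        new = phi[:]  # copy keeps the boundary values unchanged
        for i in range(1, len(phi) - 1):
            new[i] = phi[i] + r * (phi[i + 1] - 2.0 * phi[i] + phi[i - 1])
        phi = new
    return phi

# Hypothetical test problem: phi(x, 0) = sin(pi*x/L), phi = 0 at both ends.
L = 1.0
alpha = 0.1
nx = 21
dx = L / (nx - 1)
dt = 0.4 * dx ** 2 / alpha      # mesh ratio r = 0.4, inside the stability limit
steps = 50
t_end = steps * dt

x = [i * dx for i in range(nx)]
phi0 = [math.sin(math.pi * xi / L) for xi in x]
phi0[0] = phi0[-1] = 0.0        # enforce exact zero boundary values
phi = ftcs_heat(phi0, alpha, dx, dt, steps)

decay = math.exp(-alpha * (math.pi / L) ** 2 * t_end)
exact = [decay * math.sin(math.pi * xi / L) for xi in x]
err = max(abs(a - b) for a, b in zip(phi, exact))
print("max error at t =", t_end, "is", err)
```

Raising the factor 0.4 in `dt` above 0.5 triggers the stability check; removing the check and rerunning is one way to observe the instability that the abstract invites the reader to experiment with.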

Math 563 Lecture Notes Numerical Methods for Boundary Value Problems

Math 563 Lecture Notes: Numerical methods for boundary value problems. Jeffrey Wong, April 22, 2020. Related reading: LeVeque, Chapter 9. 1 PDEs: an introduction. Now we consider solving a parabolic PDE (a time-dependent diffusion problem) in a finite interval. For this discussion, we consider as an example the heat equation (HD): $u_t = u_{xx}$ for $x \in [0, L]$, $t > 0$; $u(0, t) = u(L, t) = 0$ for $t > 0$; $u(x, 0) = f(x)$, to be solved for $x \in [0, L]$ and $t > 0$. Due to the initial condition at $t = 0$, we can think of the heat equation as taking an initial function $u(x, 0) = f(x)$ and evolving it over time, i.e. the function $u(\cdot, t)$ defined in the interval $[0, L]$ is changing as $t$ increases. 1.1 The method of lines: The fact that the heat equation looks like an IVP of sorts for the function $u(\cdot, t)$ suggests that IVP methods can be used. To do so, we can use the method of lines: 1) Discretize the PDE in space on a grid $\{x_j\}$. 2) Write down a system of ODEs for the functions $u(x_j, t)$ from the discretization and the BCs. 3) Solve the system of IVPs for $u(x_j, t)$ (by any reasonable method). We have already seen step (1) in discussing finite differences. The process is similar here. Take a uniform mesh $x_j = jh$, $h = L/(N + 1)$, and let $u_j(t)$ denote the approximation to $u(x_j, t)$. Using centered differences, we get the (space) discretized system $$\frac{du_j}{dt} = \frac{u_{j+1}(t) - 2u_j(t) + u_{j-1}(t)}{h^2}, \qquad j = 1, \dots, N,$$ along with $u_0(t) = u_{N+1}(t) = 0$ from the BCs.
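The three method-of-lines steps listed in this excerpt can be sketched in a few lines of Python. The notes leave the time integrator open ("any reasonable method"), so the classical fourth-order Runge-Kutta step used here is an assumption, as are the function names `heat_rhs` and `rk4_step`, the initial function f(x) = sin(pi x), and the grid and step sizes.

```python
import math

def heat_rhs(u, h):
    """Right-hand side du_j/dt for interior nodes, with zero boundary values."""
    n = len(u)
    out = []
    for j in range(n):
        left = u[j - 1] if j > 0 else 0.0        # boundary value at x = 0
        right = u[j + 1] if j < n - 1 else 0.0   # boundary value at x = L
        out.append((right - 2.0 * u[j] + left) / h ** 2)
    return out

def rk4_step(u, h, dt):
    """One classical RK4 step for the semi-discrete system du/dt = heat_rhs(u)."""
    def shifted(v, k, a):
        return [vi + a * ki for vi, ki in zip(v, k)]
    k1 = heat_rhs(u, h)
    k2 = heat_rhs(shifted(u, k1, dt / 2.0), h)
    k3 = heat_rhs(shifted(u, k2, dt / 2.0), h)
    k4 = heat_rhs(shifted(u, k3, dt), h)
    return [ui + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
            for ui, a, b, c, d in zip(u, k1, k2, k3, k4)]

# Step 1: uniform mesh x_j = j*h with N interior nodes, h = L/(N + 1).
L = 1.0
N = 19
h = L / (N + 1)
x = [(j + 1) * h for j in range(N)]

# Steps 2 and 3: form the ODE system and integrate it from u(x, 0) = f(x).
u = [math.sin(math.pi * xj) for xj in x]
dt = 0.001     # small enough for RK4 stability on this stiff system
steps = 100
for _ in range(steps):
    u = rk4_step(u, h, dt)

t_end = steps * dt
exact = [math.exp(-math.pi ** 2 * t_end) * math.sin(math.pi * xj) for xj in x]
err = max(abs(a - b) for a, b in zip(u, exact))
print("max error at t =", t_end, "is", err)
```

Because the largest eigenvalue of the semi-discrete system grows like 4/h^2, an explicit integrator such as this one needs a time step proportional to h^2; an implicit integrator would relax that restriction at the cost of a linear solve per step.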

Lecture Notes

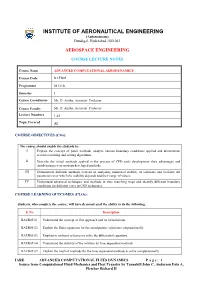

INSTITUTE OF AERONAUTICAL ENGINEERING (Autonomous), Dundigal, Hyderabad - 500 043. AEROSPACE ENGINEERING. COURSE LECTURE NOTES.
Course Name: ADVANCED COMPUTATIONAL AERODYNAMICS
Course Code: BAEB05
Programme: M.Tech
Semester: I
Course Coordinator: Ms. D. Anitha, Assistant Professor
Course Faculty: Ms. D. Anitha, Assistant Professor
Lecture Numbers: 1-45
Topic Covered: All
COURSE OBJECTIVES (COs): The course should enable the students to:
I. Explain the concept of panel methods, analyze various boundary conditions applied and demonstrate several searching and sorting algorithms.
II. Describe the initial methods applied in the development of CFD tools, and their advantages and disadvantages compared with modern methods.
III. Demonstrate different methods evolved in analyzing numerical stability of solutions and evaluate the parameters on which the stability depends and their range of values.
IV. Understand advanced techniques and methods in time marching steps and identify different boundary conditions for different cases in CFD techniques.
COURSE LEARNING OUTCOMES (CLOs): Students who complete the course will have demonstrated the ability to do the following:
BAEB05.01: Understand the concept of flux approach and its formulations.
BAEB05.02: Explain the Euler equations for the aerodynamic solutions computationally.
BAEB05.03: Emphasize on basic schemes to solve the differential equations.
BAEB05.04: Understand the stability of the solution by time dependent methods.
BAEB05.05: Explain the implicit methods for the time dependent methods to solve computationally.
BAEB05.06: Develop the approximate factorization schemes for time dependent methods.
IARE, Advanced Computational Fluid Dynamics. Source: Computational Fluid Mechanics and Heat Transfer by John C. Tannehill, Dale A. Anderson, Richard H. Pletcher.

University of Oklahoma Graduate College

UNIVERSITY OF OKLAHOMA GRADUATE COLLEGE. CONSIDERATION OF LIE SYMMETRY GROUPS IN COMPUTATIONAL FLUID DYNAMICS. A DISSERTATION SUBMITTED TO THE GRADUATE FACULTY in partial fulfillment of the requirements for the Degree of DOCTOR OF PHILOSOPHY. By ERSIN OZBENLI. Norman, Oklahoma, 2018. A DISSERTATION APPROVED FOR THE SCHOOL OF AEROSPACE AND MECHANICAL ENGINEERING BY Dr. Prakash Vedula, Chair; Dr. M. Cengiz Altan; Dr. Wilson E. Merchán-Merchán; Dr. Jivtesh Garg; Dr. Dimitrios Papavassiliou. © Copyright by ERSIN OZBENLI 2018. All Rights Reserved. This dissertation is dedicated to my parents and grandparents for their unconditional love and support: Mehmet Özbenli (Father), Ayşe Özbenli (Mother), Remzi Özbenli (Grandfather), Züleyha Özbenli (Grandmother). Acknowledgements: I would like to express my sincere gratitude to my committee chair and adviser Dr. Prakash Vedula, who has set an example of excellence as a mentor and role model, for his invaluable guidance and support throughout the development of this dissertation. I will be forever thankful not only for the extra hours he took from his busy schedule just to help me overcome problems related to my research, but also for his persistent effort in preparing me for life in academia. Without his guidance, this dissertation would not have been possible. I would also like to express my deepest appreciation to my committee member Dr. M. Cengiz Altan for his invaluable contributions to my development as a researcher. His dedication to research and persistent efforts to mentor graduate students were truly inspirational to me. Further, I would like to thank my outside committee member Dr.