Less Destructive Cleaning of Web Documents by Using Standoff Annotation

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Oauth 2.0 Dynamic Client Registration Management Protocol Draft-Jones-Oauth-Dyn-Reg-Management-00

OAuth Working Group J. Richer Internet-Draft The MITRE Corporation Intended status: Standards Track M. Jones Expires: August 1, 2014 Microsoft J. Bradley Ping Identity M. Machulak Newcastle University January 28, 2014 OAuth 2.0 Dynamic Client Registration Management Protocol draft-jones-oauth-dyn-reg-management-00 Abstract This specification defines methods for management of dynamic OAuth 2.0 client registrations. Status of this Memo This Internet-Draft is submitted in full conformance with the provisions of BCP 78 and BCP 79. Internet-Drafts are working documents of the Internet Engineering Task Force (IETF). Note that other groups may also distribute working documents as Internet-Drafts. The list of current Internet-Drafts is at http://datatracker.ietf.org/drafts/current/. Internet-Drafts are draft documents valid for a maximum of six months and may be updated, replaced, or obsoleted by other documents at any time. It is inappropriate to use Internet-Drafts as reference material or to cite them other than as “work in progress.” This Internet-Draft will expire on August 1, 2014. Copyright Notice Copyright (c) 2014 IETF Trust and the persons identified as the document authors. All rights reserved. This document is subject to BCP 78 and the IETF Trust's Legal Provisions Relating to IETF Documents (http://trustee.ietf.org/license-info) in effect on the date of publication of this document. Please review these documents carefully, as they describe your rights and restrictions with respect to this document. Code Components extracted from this document must include Simplified BSD License text as described in Section 4.e of the Trust Legal Provisions and are provided without warranty as described in the Simplified BSD License.

The Atom Project

The Atom Project Tim Bray, Sun Microsystems Paul Hoffman, IMC Recent Numbers On June 23, 2004 (according to Technorati.com): • There were 2.8 million feeds tracked • 14,000 new blogs were created • 270,000 new updates were posted Technology: How Syndication Works Now 1. Publication makes an XML document available at a well-known URI describing recent updates 2. Clients retrieve it regularly (slow polling) 3. That’s all! Technology: What’s In a Syndication Feed • One Channel: Title, URI, logo, generator, copyright, author • Multiple Items: Author, title, URI, guid, date(s), category(ies), description (excerpt/summary/full-text) Species of RSS Currently Observed in the Wild • RSS 0.9: Netscape, RDF-based • RSS 0.91: Netscape, non-RDF • RSS 1.0*: Ad-hoc group, RDF-based • RSS 0.92*: UserLand, non-RDF • RSS 2.0*: UserLand, non-RDF * significant market share Data Format Problems Too many formats, they’re vaguely specified, there are technical issues with embedded markup, relative URIs, XML namespaces, and permanent identifiers. The personality & political problems are much worse. Scaling and security may be OK, because it’s all HTTP. The Protocol Landscape The Blogger and MetaWeblog “APIs” are quick hacks based on XML-RPC. They lack extensibility, standards-friendliness, security, authentication and a future. Atom, Pre-IETF • Launched Summer 2003 by Sam Ruby • Quick buy-in from major vendors • Quick buy-in from backers of all RSS species, except RSS 2.0 • Active wiki at http://www.intertwingly.net/wiki/pie Atom in the IETF • Charter • Documents • Mailing list • Not meeting here Starting the Atompub charter • Floated in mid-April 2004 • Some tweaking but no major glitches from the first proposal • Decided to have Sam Ruby be the WG secretary • Was almost ready for the IESG to approve in mid-May, then...

Tim Bray

Tim Bray Born: Timothy William Bray, June 21, 1955. Residence: Vancouver, British Columbia, Canada. Website: http://www.tbray.org/ongoing/ Timothy William Bray (born June 21, 1955) is a Canadian software developer and entrepreneur. He co-founded Open Text Corporation and Antarctica Systems. Bray was Director of Web Technologies at Sun Microsystems from early 2004 to early 2010. Since then he has served as a Developer Advocate at Google, focusing on Android.[1] Early life Bray was born on June 21, 1955 in Alberta, Canada. He grew up in Beirut, Lebanon and graduated in 1981 with a Bachelor of Science (double major in Mathematics and Computer Science) from the University of Guelph in Guelph, Ontario. Tim described his switch of focus from Math to Computer Science this way: "In math I’d worked like a dog for my Cs, but in CS I worked much less for As, and learned that you got paid well for doing it."[2] In June 2009, he received an honorary Doctor of Science degree from the University of Guelph.[3] Fresh out of university, Bray joined Digital Equipment Corporation in Toronto as a software specialist. In 1983, Bray left DEC for Microtel Pacific Research. He joined the New Oxford English Dictionary project at the University of Waterloo in 1987 as its manager. It was during this time that Bray worked with SGML, a technology that would later become central to both Open Text Corporation and his XML and Atom standardization work. Entrepreneurship Waterloo Maple Tim Bray served as the part-time CEO of Waterloo Maple Inc.

Tim Bray [email protected] · Tbray.Org · @Timbray · +Timbray Left: 1997 Right: 2010 Java.Com Php.Net Rubyonrails.Org Djangoproject.Com Nodejs.Org

Does the browser have a future? Tim Bray [email protected] · tbray.org · @timbray · +TimBray Left: 1997 Right: 2010 java.com php.net rubyonrails.org djangoproject.com nodejs.org developer.android.com/reference/android/os/Vibrator.html Functional thinking with Erlang counter_loop(Count) -> receive { incr } -> counter_loop(Count + 1); { report, To } -> To ! { count, Count }, counter_loop(Count) end. incr(Counter) -> Counter ! { incr }. find_count(Counter) -> Counter ! { report, self() }, receive { count, Count } -> Count end. Scalable parallel counters! tbray.org/ongoing/When/200x/2007/09/21/Erlang clojure.org scala-lang.org findIDP.appspot.com c := make(chan SearchResult) go timeout(c) for _, searcher := range Searchers { go searcher.Search(email, c, handles) } bestStrength := -1 outstanding := len(Searchers) bestResult = SearchResult{TimeoutType, []IDP{}} for outstanding > 0 { result := <-c outstanding-- if result.rtype == TimeoutType { break // timed out, don't wait for trailers } if len(result.idps) == 0 { continue // a result that found nothing, ignore it } resultClass := ResultStrengths[result.rtype] if resultClass.verified { bestResult = result break // verified results trump all others } if resultClass.strength > bestStrength { bestStrength = resultClass.strength bestResult = result } else if resultClass.strength == bestStrength { bestResult.idps = merge(bestResult.idps, result.idps) } } ... func timeout(c chan SearchResult) { time.Sleep(2000 * time.Millisecond) c <- SearchResult{rtype: TimeoutType} } twitter.com/levwalkin/status/510197979542614016/photo/1

An Introduction to Georss: a Standards Based Approach for Geo-Enabling RSS Feeds

Open Geospatial Consortium Inc. Date: 2006-07-19 Reference number of this document: OGC 06-050r3 Version: 1.0.0 Category: OpenGIS® White Paper Editors: Carl Reed OGC White Paper An Introduction to GeoRSS: A Standards Based Approach for Geo-enabling RSS feeds. Warning This document is not an OGC Standard. It is distributed for review and comment. It is subject to change without notice and may not be referred to as an OGC Standard. Recipients of this document are invited to submit, with their comments, notification of any relevant patent rights of which they are aware and to provide supporting do Document type: OpenGIS® White Paper Document subtype: White Paper Document stage: APPROVED Document language: English Contents: i. Preface – Executive Summary; ii. Submitting organizations; iii. GeoRSS White Paper and OGC contact points; iv. Future work; Foreword; Introduction; 1 Scope

AI, Robotics, and the Future of Jobs

NUMBERS, FACTS AND TRENDS SHAPING THE WORLD FOR RELEASE AUGUST 6, 2014 FOR FURTHER INFORMATION ON THIS REPORT: Aaron Smith, Senior Researcher, Internet Project Janna Anderson, Director, Elon University’s Imagining the Internet Center 202.419.4372 www.pewresearch.org RECOMMENDED CITATION: Pew Research Center, August 2014, “AI, Robotics, and the Future of Jobs” Available at: http://www.pewinternet.org/2014/08/06/future-of-jobs/ PEW RESEARCH CENTER About This Report This report is the latest in a sustained effort throughout 2014 by the Pew Research Center’s Internet Project to mark the 25th anniversary of the creation of the World Wide Web by Sir Tim Berners-Lee (The Web at 25). The report covers experts’ views about advances in artificial intelligence (AI) and robotics, and their impact on jobs and employment. The previous reports in this series include: • A February 2014 report from the Pew Research Center’s Internet Project tied to the Web’s anniversary looking at the strikingly fast adoption of the Internet and the generally positive attitudes users have about its role in their social environment. • A March 2014 Digital Life in 2025 report issued by the Internet Project in association with Elon University’s Imagining the Internet Center focusing on the Internet’s future more broadly. Some 1,867 experts and stakeholders responded to an open-ended question about the future of the Internet by 2025. One common opinion: the Internet would become such an ingrained part of the environment that it would be “like electricity”, less visible even as it becomes more important in people’s daily lives.

A Personal Research Agent for Semantic Knowledge Management of Scientific Literature

A Personal Research Agent for Semantic Knowledge Management of Scientific Literature Bahar Sateli A Thesis in the Department of Computer Science and Software Engineering Presented in Partial Fulfillment of the Requirements For the Degree of Doctor of Philosophy (Computer Science) at Concordia University Montréal, Québec, Canada February 2018 © Bahar Sateli, 2018 Concordia University School of Graduate Studies This is to certify that the thesis prepared By: Bahar Sateli Entitled: A Personal Research Agent for Semantic Knowledge Management of Scientific Literature and submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy (Computer Science) complies with the regulations of this University and meets the accepted standards with respect to originality and quality. Signed by the final examining committee: Chair Dr. Georgios Vatistas External Examiner Dr. Guy Lapalme Examiner Dr. Ferhat Khendek Examiner Dr. Volker Haarslev Examiner Dr. Juergen Rilling Supervisor Dr. René Witte Approved by Dr. Volker Haarslev, Graduate Program Director 9 April 2018 Dr. Amir Asif, Dean Faculty of Engineering and Computer Science Abstract A Personal Research Agent for Semantic Knowledge Management of Scientific Literature Bahar Sateli, Ph.D. Concordia University, 2018 The unprecedented rate of scientific publications is a major threat to the productivity of knowledge workers, who rely on scrutinizing the latest scientific discoveries for their daily tasks. Online digital libraries, academic publishing databases and open access repositories grant access to a plethora of information that can overwhelm a researcher who is looking to obtain fine-grained knowledge relevant to her task at hand. This overload of information has encouraged researchers from various disciplines to look for new approaches to extracting, organizing, and managing knowledge from the immense amount of available literature in ever-growing repositories.

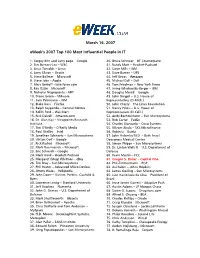

Eweek's 2007 Top 100 Most Influential People in IT

March 16, 2007 eWeek's 2007 Top 100 Most Influential People in IT 1. Sergey Brin and Larry Page – Google 2. Tim Berners-Lee – W3C 3. Linus Torvalds – Linux 4. Larry Ellison – Oracle 5. Steve Ballmer – Microsoft 6. Steve Jobs – Apple 7. Marc Benioff – Salesforce.com 8. Ray Ozzie – Microsoft 9. Nicholas Negroponte – MIT 10. Diane Green – VMware 11. Sam Palmisano – IBM 12. Blake Ross – Firefox 13. Ralph Szygenda – General Motors 14. Rollin Ford – Wal-Mart 15. Rick Dalzell – Amazon.com 16. Dr. Alan Kay – Viewpoints Research Institute 17. Tim O’Reilly – O’Reilly Media 18. Paul Otellini – Intel 19. Jonathan Schwartz – Sun Microsystems 20. Vinton Cerf – Google 21. Rick Rashid – Microsoft 22. Mark Russinovich – Microsoft 23. Eric Schmidt – Google 24. Mark Hurd – Hewlett-Packard 25. Margaret (Meg) Whitman – eBay 26. Tim Bray – Sun Microsystems 40. Bruce Schneier – BT Counterpane 41. Randy Mott – Hewlett-Packard 42. Steve Mills – IBM 43. Dave Barnes – UPS 44. Jeff Bezos – Amazon 45. Michael Dell – Dell 46. Tom Friedman – New York Times 47. Irving Wladawsky-Berger – IBM 48. Douglas Merrill – Google 49. John Dingell – U.S. House of Representatives (D-Mich.) 50. John Cherry – The Linux Foundation 51. Nancy Pelosi – U.S. House of Representatives (D-Calif.) 52. Andy Bechtolsheim – Sun Microsystems 53. Rob Carter – FedEx 54. Charles Giancarlo – Cisco Systems 55. Vikram Akula – SKS Microfinance 56. Robin Li – Baidu 57. John Halamka M.D. – Beth Israel Deaconess Medical Center 58. Simon Phipps – Sun Microsystems 59. Dr. Linton Wells II – U.S. Department of Defense 60. Kevin Martin – FCC 61. Gregor S. Bailar – Capital One 62.

Semantic Web Technologies and Legal Scholarly Publishing Law, Governance and Technology Series

Semantic Web Technologies and Legal Scholarly Publishing Law, Governance and Technology Series VOLUME 15 Series Editors: POMPEU CASANOVAS, Institute of Law and Technology, UAB, Spain GIOVANNI SARTOR, University of Bologna (Faculty of Law – CIRSFID) and European University Institute of Florence, Italy Scientific Advisory Board: GIANMARIA AJANI, University of Turin, Italy; KEVIN ASHLEY, University of Pittsburgh, USA; KATIE ATKINSON, University of Liverpool, UK; TREVOR J.M. BENCH-CAPON, University of Liverpool, UK; V. RICHARDS BENJAMINS, Telefonica, Spain; GUIDO BOELLA, Università degli Studi di Torino, Italy; JOOST BREUKER, Universiteit van Amsterdam, The Netherlands; DANIÈLE BOURCIER, University of Paris 2-CERSA, France; TOM BRUCE, Cornell University, USA; NURIA CASELLAS, Institute of Law and Technology, UAB, Spain; CRISTIANO CASTELFRANCHI, ISTC-CNR, Italy; JACK G. CONRAD, Thomson Reuters, USA; ROSARIA CONTE, ISTC-CNR, Italy; FRANCESCO CONTINI, IRSIG-CNR, Italy; JESÚS CONTRERAS, iSOCO, Spain; JOHN DAVIES, British Telecommunications plc, UK; JOHN DOMINGUE, The Open University, UK; JAIME DELGADO, Universitat Politécnica de Catalunya, Spain; MARCO FABRI, IRSIG-CNR, Italy; DIETER FENSEL, University of Innsbruck, Austria; ENRICO FRANCESCONI, ITTIG-CNR, Italy; FERNANDO GALINDO, Universidad de Zaragoza, Spain; ALDO GANGEMI, ISTC-CNR, Italy; MICHAEL GENESERETH, Stanford University, USA; ASUNCIÓN GÓMEZ-PÉREZ, Universidad Politécnica de Madrid, Spain; THOMAS F. GORDON, Fraunhofer FOKUS, Germany; GUIDO GOVERNATORI, NICTA, Australia; GRAHAM

Proceedings of Balisage: the Markup Conference 2012

Published in: Proceedings of Balisage: The Markup Conference 2012, at: http://www.balisage.net/Proceedings/vol6/print/Witt01/BalisageVol6-Witt01.html (downloaded on: 15.02.2017/14:19). Balisage: The Markup Conference 2012 Proceedings A standards-related web-based information system Maik Stührenberg Institut für Deutsche Sprache (IDS) Mannheim Universität Bielefeld <maik.Stuehrenberg@uni-bielefeld.de> Oliver Schonefeld Institut für Deutsche Sprache (IDS) Mannheim <[email protected]> Andreas Witt Institut für Deutsche Sprache (IDS) Mannheim <witt@ids-mannheim.de> Balisage: The Markup Conference 2012 August 7 - 10, 2012 Copyright © 2012 by the authors. Used with permission. How to cite this paper Stührenberg, Maik, Oliver Schonefeld and Andreas Witt. “A standards-related web-based information system.” Presented at Balisage: The Markup Conference 2012, Montreal, Canada, August 7-10, 2012. In Proceedings of Balisage: The Markup Conference 2012. Balisage Series on Markup Technologies, vol. 8 (2012). DOI: 10.4242/BalisageVol8.Stuhrenberg01. Abstract This late breaking proposal introduces the prototype of a web-based information system dealing with standards in the field of annotation. Table of Contents Introduction The problem with standards Providing guidance Information structure Representation Current state and future work Introduction This late breaking proposal is based on an ongoing effort that started in the CLARIN project and was presented briefly at the LREC 2012 Workshop on Collaborative Resource Development and Delivery. The starting point was the development of an easy-to-use information system for the description of standards developed in ISO/IEC TC37/SC4 Language Resources Management. Since these standards are heavily interrelated, it is usually not feasible to adopt only a single standard for one's work; one has to dive into the standards jungle in full.

Issues in Web Frameworks

ISSUES IN WEB FRAMEWORKS Tim Bray Director of Web Technologies Sun Microsystems Big Hot Issues Maintainability Scaling Developer Speed Developer Tools Intrinsic Integration In-Stack “Web 2.0” Identity External Issues in Scaling Observability Load balancing File I/O CPU Shared-nothing DBMS Issues in Developer Speed Compilation File I/O step? Code Size Deployment step? Configuration process Issues in Developer Tools Templating IDE How many tools? O/R Mapping Documentation Performance Issues in Maintainability MVC Code size Language count Object orientation Readability Comparing Intrinsics PHP Rails Java Scaling Dev Speed Dev Tools Maintainability Comparing Intrinsics Which is most important? PHP Rails Java Scaling Dev Speed Dev Tools Maintainability The Identity Problem The usual approach: “Make a USERS table.” Integration, SOA, and Web Services Stack Integration Options Download & build: Apache, PHP, MySQL, add-on packages. Vendor-integrated LAMP stack. Apache, MySQL, PHP, Perl, Squid cooltools.sunsource.net/coolstack/index.html External integration Issues PHP will never go away. Rails will never go away. Java will never go away. .NET will never go away. The network is the computer. The network is heterogeneous. How do we get work done? SOA: WS-* is the Official Answer 36 specs, about 1,000 pages total. (msdn.microsoft.com/webservices/webservices/understanding/specs/default.aspx) Is WS-* A Little Bit Too Complex? SOA: An Alternative View Web Services: The Alternative Be like the Web! The theory: REST (Representational State Transfer). The practice: XML + HTTP. In action today at: Google, Amazon, AOL, Yahoo!, many others.

Towards the Unification of Formats for Overlapping Markup

Towards the unification of formats for overlapping markup Paolo Marinelli Fabio Vitali Stefano Zacchiroli [email protected] [email protected] [email protected] Abstract Overlapping markup refers to the issue of how to represent data structures more expressive than trees (for example, directed acyclic graphs) using markup (meta-)languages which have been designed with trees in mind (for example, XML). In this paper we observe that the state of the art in overlapping markup is far from being the widespread and consistent stack of standards and technologies readily available for XML, and we develop a roadmap for closing the gap. In particular, we present the design and implementation of what we believe to be the first needed step, namely a syntactic conversion framework among the plethora of overlapping markup serialization formats. The algorithms needed to perform the various conversions are presented in pseudo-code; they are meant to be used as blueprints for researchers and practitioners who need to write batch translation programs from one format to the other. 1 Introduction This paper is about markup, one of the key technological ingredients of hypertext. The particular aspects of markup we are concerned with are the limits of its expressivity. XML-based markup requires that the identified features of a document are organized hierarchically as a single tree, whereby each fragment of the content of the document is contained in one and only one XML element, each of which is contained within one and only one parent element all the way up to the single root element at the top.
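
The single-tree constraint described in this excerpt can be made concrete with standoff annotation, where each annotation is stored apart from the text as a labelled character range. The following is only a minimal Python sketch under stated assumptions: ranges are half-open `[start, end)` offsets, and the `Span` class, the helper names `overlaps` and `fits_single_tree`, and the sample text are all hypothetical illustrations, not taken from the paper or from any particular format it surveys.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Span:
    """Hypothetical standoff annotation: a label over text[start:end]."""
    label: str
    start: int
    end: int


def overlaps(a: Span, b: Span) -> bool:
    """True when the spans cross: they intersect, but neither contains the other."""
    intersect = a.start < b.end and b.start < a.end
    a_in_b = b.start <= a.start and a.end <= b.end
    b_in_a = a.start <= b.start and b.end <= a.end
    return intersect and not (a_in_b or b_in_a)


def fits_single_tree(spans: list[Span]) -> bool:
    """True when no two spans cross, so they could nest as one XML-style tree."""
    return not any(
        overlaps(a, b) for i, a in enumerate(spans) for b in spans[i + 1:]
    )


# Illustrative data only: a verse line and a syntactic phrase that cross.
text = "Sing, goddess, the wrath of Achilles"
line = Span("line", 0, 18)       # covers "Sing, goddess, the"
phrase = Span("phrase", 15, 36)  # covers "the wrath of Achilles"
nested = Span("word", 0, 4)      # covers "Sing", fully inside the line
```

Here `line` and `nested` could be serialized as nested elements, while `line` and `phrase` cross and so cannot both be elements of one tree. Keeping the ranges outside the text sidesteps that limit, at the cost of having to keep the offsets in sync with the text.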