Model Transformation Languages for Domain-Specific Workbenches

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Building Great Interfaces with OOABL

Building Great Interfaces with OOABL. Mike Fechner, Director. © 2018 Consultingwerk Ltd. All rights reserved.

Consultingwerk Software Services Ltd.
▪ Independent IT consulting organization
▪ Focusing on OpenEdge and related technology
▪ Located in Cologne, Germany, subsidiaries in UK and Romania
▪ Customers in Europe, North America, Australia and South Africa
▪ Vendor of developer tools and consulting services
▪ Specialized in GUI for .NET, Angular, OO, Software Architecture, Application Integration
▪ Experts in OpenEdge Application Modernization

Mike Fechner
▪ Director, Lead Modernization Architect and Product Manager of the SmartComponent Library and WinKit
▪ Specialized in object-oriented design, software architecture, desktop user interfaces and web technologies
▪ 28 years of Progress experience (V5 … OE11)
▪ Active member of the OpenEdge community
▪ Frequent speaker at OpenEdge-related conferences around the world

Agenda
▪ Introduction
▪ Interface vs. Implementation
▪ Enums
▪ Value or Parameter Objects
▪ Fluent Interfaces
▪ Builders
▪ Strong Typed Dynamic Query Interfaces
▪ Factories
▪ Facades and Decorators

Introduction
▪ Object-oriented (ABL) programming is more than just a bunch of new syntax elements
▪ It’s easy to continue producing procedural spaghetti code in classes

The Model Transformation Language of the VIATRA2 Framework

Science of Computer Programming 68 (2007) 214–234, www.elsevier.com/locate/scico

The model transformation language of the VIATRA2 framework. Dániel Varró, András Balogh. Budapest University of Technology and Economics, Department of Measurement and Information Systems, H-1117, Magyar tudósok krt. 2., Budapest, Hungary; OptXware Research and Development LLC, Budapest, Hungary. Received 15 August 2006; received in revised form 17 April 2007; accepted 14 May 2007. Available online 5 July 2007.

Abstract. We present the model transformation language of the VIATRA2 framework, which provides a rule- and pattern-based transformation language for manipulating graph models by combining graph transformation and abstract state machines into a single specification paradigm. This language offers advanced constructs for querying (e.g. recursive graph patterns) and manipulating models (e.g. generic transformation and meta-transformation rules) in unidirectional model transformations frequently used in formal model analysis to carry out powerful abstractions. © 2007 Elsevier B.V. All rights reserved.

Keywords: Model transformation; Graph transformation; Abstract state machines

1. Introduction. The crucial role of model transformation (MT) languages and tools for the overall success of model-driven system development has been revealed in many surveys and papers during the recent years. To provide a standardized support for capturing queries, views and transformations between modeling languages defined by their standard MOF metamodels, the Object Management Group (OMG) has recently issued the QVT standard. QVT provides an intuitive, pattern-based, bidirectional model transformation language, which is especially useful for synchronization kind of transformations between semantically equivalent modeling languages.

Model Transformation Languages Under a Magnifying Glass: A Controlled Experiment with Xtend, ATL, And

Model Transformation Languages under a Magnifying Glass: A Controlled Experiment with Xtend, ATL, and QVT

Regina Hebig (Chalmers | University of Gothenburg, Sweden), Christoph Seidl (Technische Universität Braunschweig, Germany), Thorsten Berger (Chalmers | University of Gothenburg, Sweden), John Kook Pedersen (IT University of Copenhagen, Denmark), Andrzej Wąsowski (IT University of Copenhagen, Denmark)

ABSTRACT. In Model-Driven Software Development, models are automatically processed to support the creation, build, and execution of systems. A large variety of dedicated model-transformation languages exists, promising to efficiently realize the automated processing of models. To investigate the actual benefit of using such specialized languages, we performed a large-scale controlled experiment in which over 78 subjects solve 231 individual tasks using three languages. The experiment sheds light on commonalities and differences between model transformation languages (ATL, QVT-O) and on benefits of using them in common development tasks (comprehension, change, and creation) against a modern general-purpose language (Xtend). Our results show no statistically significant benefit of using a dedicated transformation language over a modern general-purpose language. However, we were able to identify several aspects of transformation programming where domain-specific transformation languages do

[Figure 1: Syntax model for source code]

1 INTRODUCTION. In Model-Driven Software Development (MDSD) [9, 35, 38] models are automatically processed to support creation, build and execution of systems.

Functional JavaScript

Functional JavaScript by Michael Fogus. Copyright © 2013 Michael Fogus. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://my.safaribooksonline.com). For more information, contact our corporate/institutional sales department: 800-998-9938 or [email protected].

Editor: Mary Treseler. Production Editor: Melanie Yarbrough. Copyeditor: Jasmine Kwityn. Proofreader: Jilly Gagnon. Indexer: Judith McConville. Cover Designer: Karen Montgomery. Interior Designer: David Futato. Illustrator: Robert Romano.

May 2013: First Edition. Revision History for the First Edition: 2013-05-24: First release. See http://oreilly.com/catalog/errata.csp?isbn=9781449360726 for release details.

Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. Functional JavaScript, the image of an eider duck, and related trade dress are trademarks of O’Reilly Media, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc., was aware of a trademark claim, the designations have been printed in caps or initial caps. While every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein.

Automating Testing with AutoFixture, xUnit.net & SpecFlow

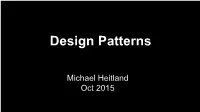

Design Patterns. Michael Heitland, Oct 2015.

Creational Patterns: Abstract Factory, Builder, Factory Method, Object Pool*, Prototype, Simple Factory*, Singleton (* this pattern got added later by others)

Structural Patterns: Adapter, Bridge, Composite, Decorator, Facade, Flyweight, Proxy

Behavioural Patterns 1: Chain of Responsibility, Command, Interpreter, Iterator, Mediator, Memento

Behavioural Patterns 2: Null Object*, Observer, State, Strategy, Template Method, Visitor

| Initial | Acronym | Concept |
|---|---|---|
| S | SRP | Single responsibility principle: A class should have only a single responsibility (i.e. only one potential change in the software's specification should be able to affect the specification of the class) |
| O | OCP | Open/closed principle: “Software entities … should be open for extension, but closed for modification.” |
| L | LSP | Liskov substitution principle: “Objects in a program should be replaceable with instances of their subtypes without altering the correctness of that program.” See also design by contract. |
| I | ISP | Interface segregation principle: “Many client-specific interfaces are better than one general-purpose interface.”[8] |
| D | DIP | Dependency inversion principle: One should “Depend upon Abstractions. Do not depend upon concretions.”[8] |

Creational Patterns

Simple Factory*: Encapsulating object creation. Clients will use object interfaces.

Abstract Factory: Provide an interface for creating families of related or dependent objects without specifying their concrete classes. Inject the factory into the object.

Dependency Inversion Principle: Depend upon abstractions. Do not depend upon concrete classes. Our high-level components should not depend on our low-level components; rather, they should both depend on abstractions.

Builder: Separate the construction of a complex object from its representation, so that the same construction process can create different representations.

How to Develop Your Own DSLs in Eclipse Using Xtend and Xtext?

How to develop your own DSLs in Eclipse using Xtend and Xtext? Daniel Strmečki | Software Developer | 19.05.2016. BUSINESS WEB APPLICATIONS | [email protected] | www.evolva.hr

Content
1. DSLs › What are DSLs? › Kinds of DSLs? › Their usage and benefits…
2. Xtend › What kind of language is Xtend? › What are its benefits compared to classic Java?
3. Xtext › What is a language development framework? › How to develop your own DSL?
4. Short demo
5. Conclusion

DSL acronym
Not in a telecommunications context: Digital Subscriber Line. But in a software engineering context: Domain Specific Languages.

Domain Specific Languages
General-Purpose Languages (GPLs)
› Languages broadly applicable across application domains
› Lack specialized features for a particular domain
› XML, UML, Java, C++, Python, PHP…
Domain specific languages (DSLs)
› Languages tailored for an application in a specific domain
› Provide substantial gains in expressiveness and ease of use in their domain of application
› SQL, HTML, CSS, Logo, Mathematica, Macros, Regular expressions, Unix shell scripts, MediaWiki, LaTeX…

Domain Specific Languages
A novelty in software development
› A DSL for programming numerically controlled machine tools was developed in 1957–1958
› BNF dates back to 1959 (M.

Data Transformation Language (DTL)

DTL: Data Transformation Language. Phillip H. Sherrod. Copyright © 2005-2006. All rights reserved. www.dtreg.com

DTL is a full programming language built into the DTREG program. DTL makes it easy to generate new variables, transform and combine input variables and select records to be used in the analysis.

Contents
Introduction
  Introduction to the DTL Language
Using DTL For Data Transformations
  The main() function
  Global Variables
    Implicit Global Variables
    Explicit Global Variables
    Static Global Variables

Expressive and Efficient Model Transformation with an Internal DSL

Expressive and Efficient Model Transformation with an Internal DSL of Xtend. Artur Boronat, Department of Informatics, University of Leicester, UK. [email protected]

ABSTRACT. Model transformation (MT) of very large models (VLMs), with millions of elements, is a challenging cornerstone for applying Model-Driven Engineering (MDE) technology in industry. Recent research efforts that tackle this problem have been directed at distributing MT on the Cloud, either directly, by managing clusters explicitly, or indirectly, via external NoSQL data stores. In this paper, we draw attention back to improving efficiency of model transformations that use EMF natively and that run on non-distributed environments, showing that substantial performance gains can still be reaped on that ground. We present Yet Another Model Transformation Language (YAMTL), a new internal domain-specific language (DSL) of Xtend for defining declarative MT, and its execution engine.

1 INTRODUCTION. Over the past decade the application of MDE technology in industry has led to the emergence of very large models (VLMs), with millions of elements, that may need to be updated using model transformation (MT). This situation is characterized by important challenges [26], starting from model persistence using XMI serialization with the Eclipse Modeling Framework (EMF) [31] to scalable approaches to apply model updates. An important research effort to tackle these challenges was performed in the European project MONDO [19] by scaling out efficient model query and MT engines, such as VIATRA [3] and ATL/EMFTVM [46], building on Cloud technology either directly, by using distributed clusters of servers explicitly, or indirectly, by

Model-Based Hardware Generation and Programming - the MADES Approach

Model-based hardware generation and programming - the MADES approach. Ian Gray∗, Nikos Matragkas∗, Neil C. Audsley, Leandro Soares Indrusiak, Dimitris Kolovos, Richard Paige. University of York, York, U.K.

Abstract: This paper gives an overview of the model-based hardware generation and programming approach proposed within the MADES project. MADES aims to develop a model-driven development process for safety-critical, real-time embedded systems. MADES defines a systems modelling language based on subsets of MARTE and SysML that allows iterative refinement from high-level specification down to final implementation. The MADES project specifically focusses on three unique features which differentiate it from existing model-driven development frameworks. First, model transformations in the Epsilon modelling framework are used to move between system models and provide traceability. Second, the Zot verification tool is employed to allow early and frequent verification of the system being developed. Third, Compile-Time Virtualisation is used to automatically retarget architecturally-neutral software for execution on complex embedded architectures. This paper concentrates on MADES’s approach to the specification of hardware and the way in which software is refactored by Compile-Time Virtualisation.

... the proposed hardware development flow and the way that embedded software can be targeted at the resulting complex architectures.

1.1 MADES Project Goals. The MADES project (Model-based methods and tools for Avionics and surveillance embeddeD systEmS) aims to develop the elements of a fully model-driven approach for the design, validation, simulation, and code generation of complex embedded systems to improve the current practice in the field. MADES differentiates itself from similar projects in that it covers all the phases of the development process: from system

DesignPatternsPHP Documentation Release 1.0

DesignPatternsPHP Documentation, Release 1.0. Dominik Liebler and contributors. Jul 18, 2021.

Contents
1 Patterns
  1.1 Creational
    1.1.1 Abstract Factory
    1.1.2 Builder
    1.1.3 Factory Method
    1.1.4 Pool
    1.1.5 Prototype
    1.1.6 Simple Factory
    1.1.7 Singleton
    1.1.8 Static Factory
  1.2 Structural
    1.2.1 Adapter / Wrapper
    1.2.2 Bridge
    1.2.3 Composite
    1.2.4 Data Mapper
    1.2.5 Decorator
    1.2.6 Dependency Injection
    1.2.7 Facade
    1.2.8 Fluent Interface
    1.2.9 Flyweight
    1.2.10 Proxy
    1.2.11 Registry
  1.3 Behavioral
    1.3.1 Chain Of Responsibilities

Chapter 2: Tools Selection

MODEL-BASED DESIGN & VERIFICATION OF EMBEDDED SYSTEMS (MODEVES) Tools Selection Report

Chapter 2: Tools Selection

1. MBSE Tools Investigation

Once the researches are classified into different categories, the next step is to identify the tools and frameworks used in the selected researches to perform various MBSE activities. It is important to mention here that a tool is used to perform a specific MBSE activity, whereas a framework is a complete environment supporting a set of tools that can be used to perform various MBSE activities. On the basis of the literature review, 39 preliminary MBSE tools have been identified as given in Table VI.

Table I: Preliminary tools selection

| Sr. # | Name of Tool / Framework | Corresponding MBSE Activities | Relevant Researches |
|---|---|---|---|
| 1 | Topcased [62] | Modeling | [3][8][9][52] |
| 2 | Modelio Editor [68] | Modeling | [12] |
| 3 | Magic Draw [131] | Modeling | [6][16][20] |
| 4 | Eclipse GEF [89] | Modeling | [17] |
| 5 | Rhapsody [132] | Modeling | [4][19][22][57] |
| 6 | PapyrusMDT [98] | Modeling | [21][44][60] |
| 7 | EA MDG [85] | Modeling | [27] |
| 8 | Visual paradigm [126] | Modeling | [60] |
| 9 | MediniQVT [121] | Model Transformation | [2] |
| 10 | ATL [63] | Model Transformation | [3][8][39][44][46][50][59] |
| 11 | Xpand [97] | Model Transformation | [8][20] |
| 12 | Acceleo [67] | Model Transformation | [9][21][42][44] |
| 13 | MODCO [83] | Model Transformation | [13] |
| 14 | MODEASY [17] | Model Transformation | [17] |
| 15 | Apache Velocity [86] | Model Transformation | [16][18] |
| 16 | Eclipse EMF | Model Transformation | [21][41][37] |

On Modularity and Performances of External Domain-Specific Language Implementations Manuel Leduc

On modularity and performances of external domain-specific language implementations. Manuel Leduc.

To cite this version: Manuel Leduc. On modularity and performances of external domain-specific language implementations. Software Engineering [cs.SE]. Université Rennes 1, 2019. English. NNT: 2019REN1S112. tel-02972666

HAL Id: tel-02972666, https://tel.archives-ouvertes.fr/tel-02972666. Submitted on 20 Oct 2020.

DOCTORAL THESIS OF THE UNIVERSITE DE RENNES 1, COMUE UNIVERSITE BRETAGNE LOIRE. Doctoral School No. 601: Mathematics and Information and Communication Sciences and Technologies. Specialty: Computer Science. By Manuel LEDUC.

On modularity and performance of External Domain-Specific Language implementations

Thesis presented and defended in Rennes on 18 December 2019. Research unit: DiverSE team, IRISA.

Reviewers before the defense: Paul Klint, Professor, CWI, Amsterdam, Netherlands; Juan De Lara, Professor, Autonomous University of Madrid, Madrid, Spain.

Composition of the jury: President: Olivier Ridoux, Professor, Université de Rennes 1, Rennes, France. Examiners: Nelly Bencomo, Lecturer in Computer Science, Aston University, Birmingham, United Kingdom; Jean-Remy Falleri, Associate Professor (HDR), Université de Bordeaux, Bordeaux, France; Gurvan Le Guernic, Research Engineer, DGA, Rennes, France. Dir.