The Design and Implementation of an Interactive Zipper



Recommended publications

-

BOND-X-Ymyl-Black-Lookbook

BOND X YMYL TECHNICAL MANUAL + TACTICAL GEAR Enclosed is a system of modular accessories. This collection focuses on two primary items - the 3-Pocket Holster and the 3-Pocket Belt. Accessories to the primary pieces are available for added function. THE IMPERATIVE OF RESPONSIBILITY © 2017 .,, 3-POCKET HOLSTER ITEM unisex black matte leather holster with 2 fixed pockets and 1 modular pocket that slides on and off for custom styling. PRICE msrp | $295 INCLUDES Oversized Key Ring. FIT adjustable shoulder straps with chrome-finished studs, one size. FEATURES chrome-finished hardware: d-rings for attachment options, textured snap closures on pockets, embossed logo. HOLDS fixed pockets fit passport and tech items like iPhone 7+, Galaxy S8, + other items. detachable slide-on pocket will fit cigarette case + other items. DIMENSIONS fixed pockets: h: 7” x w: 6” x d: 0.5” removable pocket: h: 4.5” x w: 2.75” x d: 1” ATTACHMENTS includes Oversized Key Ring. Tech Pocket, , D-Ring Strap, and Key Cover options. sold separately. 3-POCKET BELT ITEM unisex black matte leather belt with 3 modular pockets that slide on and off for custom pocket placement and styling. PRICE msrp | $295 INCLUDES Pocket A Pocket B - -up to iPhone 7+ and other items. will fit lighter + other items. size: h: 6.75” x w: 3.5” x d: 1” size: h: 3.5” x w: 1.5” x d: 1” Pocket C - Strap D - cigarette case + other items. to attach keychain + other items. size: h: 4.5” x w: 2.75” x d: 1” size: h: 3.5” x d: 1” FIT may be worn around the waist or crossbody. -

Dressing Aids F Are Available Through Specialtyretailers

The War Amps For Your Information Tel.: 1 877 622-2472 Fax: 1 855 860-5595 [email protected] Dressing Aids rom buttons, buckles, zippers and laces, these Fsimple fasteners can pose difficulties in an amputee’s daily activities. Featured below are various Velcro tabs dressing aids that can make many of these tasks easier. Some can be made using household items and others are available through specialty retailers. Hassle-free Fasteners • Velcro tabs under shirt or blouse buttons instead of conventional button holes. • A Velcro strip instead of a zipper in the fly of trousers. • Velcro on jacket cuffs. • Toggle buttons on outer wear which are easier to manage than stiff, flat buttons. Elastic cufflink • Cuff links made of elastic thread between two buttons keep cuffs looking tidy while letting you slide your hand in and out without undoing the button. • Some button-up shirts can be put on without being fully unbuttoned, simply leave enough buttons undone to allow room for your head, and then A C fasten the rest later. B D Button Hooks To assist the amputee with buttoning clothing, various button hooks are available including the regular handle(A), rubber handle(B), cuff handle(C), and ball or knob handle(D). Prosthetic Limbs and Devices Prosthetic A button hook has a small wire loop that slips over the button and when pulled, guides the button through the buttonhole. Zipper Pull Rings For those who have difficulty holding onto and pulling the regular zipper tabs, a variety of zipper pull rings are available which attach to the regular zipper tab and which can be grasped more easily by artificial limbs. -

Talabartero Collection Catalog

TALABARTERO COLLECTION The leather poufs and pillows in this collection are inspired by the equestrian heritage of Colombia’s coffee growing region. Talabartero translates as ‘master saddler,’ and the collection draws upon the expert craftsmanship and fine detailing—the stitching, the braiding, the embroidery, and the hand tooling— that characterize the best of vintage saddles from the area. We’ve partnered with master craftsman Edgar Beltran Jaramillo and the skilled team in his Bogotá-based workshop to bring this collection to life. Each piece is crafted from a saturated jewel-toned color palette that draws on the works of Colombian artist Fernando Botero. TALABARTERO | LEATHER POUFS ALMENDRA POUF NEGRO POUF PALMA POUF Natural grain leather Natural grain leather Natural grain leather Hidden bottom zipper closure Hidden bottom zipper closure Hidden bottom zipper closure Cast iron buckle, pewter finish Cast iron buckle, pewter finish Cast iron buckle, pewter finish Fiber wrapped high density foam filler Fiber wrapped high density foam filler Fiber wrapped high density foam filler SIZE A SIZE A SIZE A 22” L x 22” W x 12” H 22” L x 22” W x 12” H 22” L x 22” W x 12” H $1,525 $1,525 $1,525 SIZE B SIZE B SIZE B 25” L x 25” W x 14” H 25” L x 25” W x 14” H 25” L x 25” W x 14” H $1,765 $1,765 $1,765 TALABARTERO | LEATHER POUFS COBALTO POUF MORA POUF Natural grain leather Natural grain leather Hidden bottom zipper closure Hidden bottom zipper closure Cast iron buckle, pewter finish Cast iron buckle, pewter finish Fiber wrapped high density foam -

Price List Best Cleaners 03-18.Xlsx

Price List Pants, Skirts & Suits Shirts & Blouses Pants Plain…………………………………………… 10.20 Business Shirt Laundered and Machine Pants, Silk/Linen…………………………………… . 12.30 Pressed (Men’s & Women’s)…… 3.60 Pants, Rayon/Velvet………………………………… 11.80 Pants Shorts………………………………………. 10.20 Chamois Shirt…………………………………………… 5.35 Skirts, Plain………………………………………… . 10.20 Lab Smock, Karate Top………………………………… . 7.30 Skirts, Silk, Linen………………………………….. 12.30 Polo, Flannel Shirt……………………………………… .. 5.35 Skirts, Rayon Velvet……………………………… .. 11.80 Sweat Shirt……………………………………………… . 5.70 Skirts Fully Pleated………………………………. 20.95 T-Shirt…………………………………………………… .. 4.60 Skirts Accordion Pleated………………………… . 20.95 Tuxedo Shirt……………………………………………… . 6.10 Suit 2 pc. (Pants or Skirt and Blazer)……………… 22.40.. Wool Shirt………………………………………………… . 5.35 Suit 3 pc. (Pants or Skirt Blazer & Vest)……………… 27.75. Suit, body suit………………………………………… 10.60. Blouse/Shirt, Cotton, Poly…………………………………… 9.50.. Suit, Jumpsuit…………………………………… 25.10 Blouse/Shirt, Rayon, Velvet………………………………… 11.10.. Sport Jacket, Blazer……………………………… .. 12.20 Blouse/Shirt, Silk, Linen……………………………………… 11.60 Tuxedo……………………………………………… . 22.95 Blouse/Shirt, Sleeveless……………………………………… 7.80 Vest………………………………………………… . 5.35 Dresses Outerwear Dress, Plain, Cotton, Wool, Poly, Terry, Denim…….. 19.00 Blazer, Sport Jacket……………………………… . 12.20 Dress,Silk, Linen …….………………………………. 23.20 Bomber Jacket………………………………….. 16.20 Dress,Rayon,Velvet …………………………………. 22.20 Canvas Field Coat………………………………… 16.20 Dress, 2-Piece, Dress & Sleeveless Jkt……………………… 27.60 Canvas Barn Jacket……………………………… -

Lapped Zipper Lapped Zippers Are Often Found on Skirts at the Waistband

Sewing Technique: Lapped Zipper Lapped zippers are often found on skirts at the waistband. A lapped zipper is constructed so that the zipper is not visible. The zipper will remain closed throughout the process of inserting the zipper. All sewing is done on the inside of the garment except for topstitching. Remember to always keep the needle positioned between the zipper foot and the zipper teeth when sewing. NOTE: Throughout this tutorial, the term “placket” will be used. A placket is the fabric that surrounds and reinforces fasteners in a garment. In this instance, the fastener referred to in this tutorial is a zipper. STEP 1: On the wrong side of the fabric, measure and mark the placket opening BASTING where the zipper STITCH will be placed. Do this by measuring from the top of the garment down along the seam to the zipper bottom stop. Take into consideration the REGULAR composition of garment and if there will be a waistband or MACHINE STITCH any other special feature at the top of the zipper. Mark the placement of the bottom stop with a marking pencil. STEP 2: While sewing the seam, machine stitch the seam closed until the marked point of the bottom of the zipper, backstitch, then switch to a basting stitch for the distance of the placket seam. Press seam allowance open. $-05)*/( CONSTRUCTION 6UBI4UBUFJTBOBóSNBUJWFBDUJPOFRVBMPQQPSUVOJUZJOTUJUVUJPO STEP 3: Replace sewing machine foot with a zipper foot. Place zipper face down with the teeth centered on the pressed open seam on the inside of the garment, matching top and bottom stops with marks. -

Rescuetec PFD | USER's MANUAL

RescueTec PFD | USER’S MANUAL RescueTec PFD SPECIFICATIONS The RescueTec PFD is designed for the special requirements of a trained rescue professional. ENVIRONMENTS: This RescueTec PFD can be used in all river, lake, and ocean environments including: » Aerated moving water » Flood waters » Surf CERTIFICATION: USCG Type V Professional Rescue PFD FLOTATION: Minimum 22 lbs (10 kg) 2 SIZES: S/M for chest size 28” - 40” (71-102 cm) and L/XL for chest size 40”-52” (102-132 cm) FEATURES » Integrated 1.8 inch Mil Spec nylon webbing over shoulder strap with 6000 lb breaking strength. » High Tenacity 420 denier fabric. » 100% Nylon webbing for maximum wear resistance. » Many webbing loops to attach optional front pockets and other equipment. » Two inch Quick Release Rescue Belt with fast pull cam buckle and Stainless Steel hardware. » Soft, fl exible closed-cell foam. » Optional (detachable) Front (Molle) pockets for Rescue Swimming, (one with internal radio holster, one with internal multipurpose equipment attachment loops). » Knife / scissors and strobe light attachments on both sides /shoulders. » Handle on back to assist in pulling the rescuer to safety. » Refl ective tape front and back and on both detachable front pockets. » Inside quick drainage mesh. » Elastic loops to hold all excess webbing. » Over the shoulder anodized aluminum adjustment buckles. » Adjustable compression straps across front to protect the zipper (at top and bottom) » Three part adjustable 1.5” waistbelt for maximum fi t adjustment. » Waistbelt sleeves have sticky neoprene to hold the PFD in the best position for fi t. » Vertical waistbelt adjustment on L/XL size to fi t larger individuals. -

Trims, Labels, Treads, Elastics, Underlying Fabrics, Zippers, Butto

10/12/2009 1 TRIMS AND FINDINGS Chapter 8 2 Findings and Trims Findings: trims, labels, treads, elastics, underlying fabrics, zippers, buttons, other closures, other miscellaneous items Trims: subdivision of findings – decorative materials – ribbons, braids, laces, other narrow fabric trims, appliqués, flowers, beads, sequins, other decorative items Findings/trims usually aren’t the reason for purchase but they are often the reason for discarding a garment 3 Performance Finding/trim must reflect same quality level as the fabric Performance of finding or trim could negate the performance of the body fabric Ex. If fabric is dry-clean only, the trims must be dry-cleanable 4 Establishing specs Often established by the suppliers themselves or by the manufacturers in partnership w/ the suppliers Too many types of findings and trims to be an expert on all and be able to write accurate specs on all – best to rely on the supplier of the specific type of finding you need 5 Aesthetic performance Must complement the appearance of the garment Must be considered in concert with the design, construction, and finish of the garment Need to be attractive, fashionable, and functional to help sell the garment 6 Functional Performance Includes utility and durability of the finding Utility includes: dimensional stability, appearance retention, comfort, ease of care, and safety Durability includes: strength, abrasion resistance, and degradation by chemical & other elements 7 Performance, Cont. Design, materials, and constructions of a garment interact to determine -

How to Sew a Zipper Pouch

How to Sew a Zipper Pouch Raid your scrap bins and sew a zipper pouch! You can use large scraps or sew smaller scraps to- gether to make your own unique pouch. Be creative and have fun with it! Supplies: Quilting Cotton in two or more coordinating prints or colors Pellon SF101 Interfacing Thermolam (Pellon 971F) (You can also use fusible fleece. Your pouch will be less puffy and struc- tured, but this also depends on the quality of your fabric.) An all purpose 14” zipper (or longer) Scissors Pins or Wonder clips Coordinating thread Fabric glue (optional) *Note* These are written instructions only and meant to supplement the video done as a virtual program for Allen Public Library. Please read all of the instructions before beginning. Step 1: Cutting out Your Pieces Use the paper templates or a ruler to cut the following: From Exterior fabric cut 8.5” x5.5” (2) From Interior Fabric cut 8.5” x 5.5” (2) From SF101 interfacing cut 8.5”x5.5”(2) From Thermolam (or fusible fleece) cut 8” x5” (2) Step 2: Fuse interfacing to exterior fabric pieces. First SF101, then Thermolam or fleece. Follow the manufacturers instructions. Step 3: Attach the zipper. Make sure to iron the fabric away from the zipper after you attach each side. Next, add a line of topstitching 1/8” away from the edge of the fabric you just pressed. If you would like to add boxed corners to your pouch, please skip to the section labeled Optional Boxed Corners. This will be your Step 4. -

Improvement of Impact Strength of Polylactide Blends with a Thermoplastic Elastomer Compatibilized with Biobased Maleinized Lins

molecules Article Improvement of Impact Strength of Polylactide Blends with a Thermoplastic Elastomer Compatibilized with Biobased Maleinized Linseed Oil for Applications in Rigid Packaging Ramon Tejada-Oliveros, Rafael Balart * , Juan Ivorra-Martinez , Jaume Gomez-Caturla, Nestor Montanes and Luis Quiles-Carrillo * Technological Institute of Materials (ITM), Universitat Politècnica de València (UPV), Plaza Ferrándiz y Carbonell 1, 03801 Alcoy, Spain; [email protected] (R.T.-O.); [email protected] (J.I.-M.); [email protected] (J.G.-C.); [email protected] (N.M.) * Correspondence: [email protected] (R.B.); [email protected] (L.Q.-C.); Tel.: +34-966-528-433 (L.Q.-C.) Abstract: This research work reports the potential of maleinized linseed oil (MLO) as biobased compatibilizer in polylactide (PLA) and a thermoplastic elastomer, namely, polystyrene-b-(ethylene- ran-butylene)-b-styrene (SEBS) blends (PLA/SEBS), with improved impact strength for the packaging industry. The effects of MLO are compared with a conventional polystyrene-b-poly(ethylene-ran- butylene)-b-polystyrene-graft-maleic anhydride terpolymer (SEBS-g-MA) since it is widely used in these blends. Uncompatibilized and compatibilized PLA/SEBS blends can be manufactured by extrusion and then shaped into standard samples for further characterization by mechanical, thermal, morphological, dynamical-mechanical, wetting and colour standard tests. The obtained results indi- cate that the uncompatibilized PLA/SEBS blend containing 20 wt.% SEBS gives improved toughness Citation: Tejada-Oliveros, R.; (4.8 kJ/m2) compared to neat PLA (1.3 kJ/m2). Nevertheless, the same blend compatibilized with Balart, R.; Ivorra-Martinez, J.; 2 Gomez-Caturla, J.; Montanes, N.; MLO leads to an increase in impact strength up to 6.1 kJ/m , thus giving evidence of the potential Quiles-Carrillo, L. -

4-H CDM Skill-A-Thon Sewing & Clothing ID

4-H CDM Skill-a-Thon Sewing & Clothing ID The photos shown here are only one option of what an item may look like. Other options are available. Revised November 2020 Batik - Indonesian technique of wax resist dyeing applied to whole cloth. This technique originated from Java, Indonesia. It is made either by drawing dots and lines of the resist with a spouted tool called a tjanting, or by printing the resist with a copper stamp called a cap. Broadcloth - A very lightweight, smooth, flat looking fabric, with no pattern in the weave of the threads. It is similar in quality to pinpoint fabric but has less texture. This usually means it is slightly more transparent and not as shiny as the pinpoint. Chambray - A plain weave fabric woven with a colored yarn in the warp and a white yarn in the weft. Corduroy - A fabric with a distinct pattern, a “cord” or wale. It is mostly composed of tufted cords, sometimes exhibiting a channel (bare to the base fabric) between the tufts. Cotton - A fabric made from the fiber of its namesake plant. It is good for first time sewers to use because it’s easy to work with and used for everyday clothing. Eyelet - A type of fabric that has holes that are edged using a buttonhole stitch. The holes are precisely sized and situated to create a pattern or patterns, often floral designs or abstract geometric arrangements. Faux Fur - A fabric fashioned to simulate genuine animal hair. It is known as a pile fabric and is typically made from polymeric fibers that are processed, dyed, and cut to match a specific texture and color. -

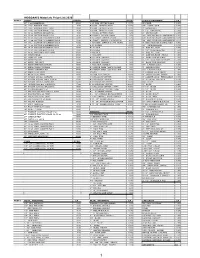

WEBPRICES 2020-MATERIALS Website

HOGGAN’S Materials Price List 2020 PAGE 1 FABRIC YRD/EA ZIPPERS FT/EA PLASTIC HARDWARE EA 46" 9 oz.SUMBRELLA 40.00 # 4.5 COIL ZIPPER CHAIN 0.75 ZIPCORD 0.50 36" 10oz. BANNER VINYL 7.00 # 5 COIL ZIPPER CHAIN 0.80 1/8" CORD LOCK 0.60 36" 13oz. BANNER VINYL (BO) 10.00 # 8 COIL ZIPPER CHAIN 0.90 1" GM BUCKLE 1.50 36" 10 oz. COTTON SINGLE FILL 4.00 # 9 COIL ZIPPER CHAIN 1.00 1 - 1/2" GM BUCKLE 2.00 36” 12 oz. COTTON SINGLE FILL 5.00 # 10 COIL ZIPPER CHAIN 1.20 2" GM BUCKLE 2.50 48" 15 oz. COTTON SINGLE FILL 6.00 # 5 TOOTH ZIPPER CHAIN 1.00 1/2“ SIDE RELEASE CONTOURED 0.50 60” 12 oz. COTTON 12 NUMBER DUCK 14.00 # 10 TOOTH ZIPPER CHAIN 1.25 5/8" SIDE RELEASE CONTOURED 0.60 48" 15 oz. COTTON 10 NUMBER DUCK 11.00 # 5 TOOTH ZIPPER CHAIN METAL 2.00 3/4“ SIDE RELEASE CONTOURED 0.75 84" 15oz. COTTON 10 NUMBER DUCK 16.00 # 10 TOOTH ZIPPER CHAIN METAL 3.50 1” SIDE RELEASE CONTOURED 0.90 36" 18 oz. COTTON 8 NUMBER DUCK 8.00 # 4.5 SLIDER 0.50 3/4" SIDE RELEASE 0.75 60” 24 oz. COTTON 4 NUMBER DUCK 21.00 # 5 SLIDER 0.50 1" SIDE RELEASE 0.90 36" 12 oz. COTTON BOAT DUCK 13.00 # 8 SLIDER 0.50 1 - 1/2" SIDE RELEASE 1.50 60” 18 oz. COTTON CHAIR DUCK 25.00 # 9 SLIDER 0.50 2" SIDE RELEASE 2.00 60" HEATSHIELD 30.00 # 10 SLIDER 0.50 1" SIDE RELEASE HEAVY 1.50 60" LOOP VELCRO 30.00 # 5 SLIDER DOUBLE 1.00 1" DUAL SIDE RELEASE 1.00 60" MESH POCKET 16.00 # 8 SLIDER DOUBLE 1.00 1 - 1/2" DUAL SIDE RELEASE 2.00 60" MESH FISH NET 18.00 # 9 SLIDER DOUBLE 1.00 2" DUAL SIDE RELEASE 2.50 48" MESH WINDOW SCREEN 10.00 # 10 SLIDER DOUBLE 1.00 3/4" LADDER LOCK 0.50 72" MESH TENNIS SCREEN 16.00 # 5 HORSE SHOE TOOTH SLIDER 1.00 1" LADDER LOCK 0.75 72" MESH TRAMPAULINE 30.00 # 8 HORSE SHOE TOOTH SLIDER 1.00 1.5" LADDER LOCK 1.00 54" MESH 12 oz VINYL 14.00 STOPS 0.10 1" LADDER LOCK-HEAVY 1.00 72" MESH 12 oz VINYL 16.00 # 8 COIL 110" ZIPPER 18.00 1" LADDER LOCK DOUBLE 1.00 60" NYLON 200 den. -

GENERAL SERVICE/REPAIR MANUAL Are of the Best Quality Available

Velcro® Care Snap Replacement The genuine Velcro brand hook and loop closures used in all Aerostich garments GENERAL SERVICE/REPAIR MANUAL are of the best quality available. Even so, they require careful cleaning and main- Instructions* tenance to maximize their useful life. The ‘hook’ component of Velcro is actually (View of right leg) Pad Removal and Replacement (Roadcrafter/Darien/Falstaff) comprised of hundreds of tiny hooked plastic fibers which have an affinity for dirt, hair, fibers, and even snow. Clean these materials by gently pulling debris off at an The pads used in Roadcrafter and Darien suits should be removed periodically for inspection or angle. When washing this garment, close all Velcro tabs and flaps to prevent snag- when laundering the garment. To identify Knee, Elbow, and Right and Left Shoulder pads, refer to the 1. On hard-armor ging other fabrics. Do not subject these closures to bleach or hot drying. Fraying is illustrations below. Knee and elbow pads are interchangeable left to right but have a top and bottom pads, avoid bending caused by excessive dryer heat which weakens the resins bonding the base of the orientation. The upward side of the knee pad is closest to the pad’s radius. To remove or replace the foam too near glued area. woven hook or loop closures. Use low heat settings when drying. Because Velcro elbow pads, first turn the sleeves inside out. After replacing the pads and pulling the sleeves right wears after long use, some loss of strength is not considered a warrantable defect side out, recheck the position of the pads (and the engagement of the Velcro if Darien) within the 2.