Enhancing Compressibility Through Data Approximation

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Data Preparation & Descriptive Statistics

Data Preparation & Descriptive Statistics (ver. 2.4) Oscar Torres-Reyna Data Consultant [email protected] PU/DSS/OTR http://dss.princeton.edu/training/

Basic definitions… For statistical analysis we think of data as a collection of different pieces of information or facts. These pieces of information are called variables. A variable is an identifiable piece of data containing one or more values. Those values can take the form of a number or text (which could be converted into a number). In the table below, variables var1 through var5 are each a collection of seven values, and ‘id’ is the identifier for each observation. This dataset has information for seven cases (in this case people, but they could also be states, countries, etc.) grouped into five variables.

| id | var1 | var2 | var3 | var4 | var5 |
|----|------|-------|------|------|--------|
| 1 | 7.3 | 32.27 | 0.1 | Yes | Male |
| 2 | 8.28 | 40.68 | 0.56 | No | Female |
| 3 | 3.35 | 5.62 | 0.55 | Yes | Female |
| 4 | 4.08 | 62.8 | 0.83 | Yes | Male |
| 5 | 9.09 | 22.76 | 0.26 | No | Female |
| 6 | 8.15 | 90.85 | 0.23 | Yes | Female |
| 7 | 7.59 | 54.94 | 0.42 | Yes | Male |

Data structure… For data analysis your data should have variables as columns and observations as rows. The first row should have the column headings (the variable names). Make sure your dataset has at least one identifier (for example, individual id, family id, etc.).

Cross-sectional data:

| id | var1 | var2 | var3 | var4 | var5 |
|----|------|-------|------|------|--------|
| 1 | 7.3 | 32.27 | 0.1 | Yes | Male |
| 2 | 8.28 | 40.68 | 0.56 | No | Female |
| 3 | 3.35 | 5.62 | 0.55 | Yes | Female |
| 4 | 4.08 | 62.8 | 0.83 | Yes | Male |
| 5 | 9.09 | 22.76 | 0.26 | No | Female |
| 6 | 8.15 | 90.85 | 0.23 | Yes | Female |
| 7 | 7.59 | 54.94 | 0.42 | Yes | Male |

Cross-sectional time series data or panel data (at least one identifier; ids 1, 2 and 3 form Group 1, Group 2 and Group 3):

| id | year | var1 | var2 | var3 |
|----|------|------|-------|------|
| 1 | 2000 | 7 | 74.03 | 0.55 |
| 1 | 2001 | 2 | 4.6 | 0.44 |
| 1 | 2002 | 2 | 25.56 | 0.77 |
| 2 | 2000 | 7 | 59.52 | 0.05 |
| 2 | 2001 | 2 | 16.95 | 0.94 |
| 2 | 2002 | 9 | 1.2 | 0.08 |
| 3 | 2000 | 9 | 85.85 | 0.5 |
| 3 | 2001 | 3 | 98.85 | 0.32 |
| 3 | 2002 | 3 | 69.2 | 0.76 |

NOTE: See: http://www.statistics.com/resources/glossary/c/crossdat.php

Data format (ASCII)… ASCII (American Standard Code for Information Interchange).

Compress/Decompress Encrypt/Decrypt

Windows Compress/Decompress (compared across WinZip Standard, WinZip Pro, Compressed Folders)
• Zip and unzip files instantly with 64-bit, best-in-class software
• ENHANCED! Compress MP3 files by 15-20% on average
• Open and extract Zipx, RAR, 7Z, LHA, BZ2, IMG, ISO and all other major compression file formats
• Open more file types as a Zip, including DOCX, XLSX, PPTX, XPS, ODT, ODS, ODP, ODG, WMZ, WSZ, YFS, XPI, XAP, CRX, EPUB, and C4Z
• Use the super picker to unzip locally or to the cloud
• Open CAB, Zip and Zip 2.0 Methods
• Convert other major compressed file formats to Zip format
• Apply 'Best Compression' method to maximize efficiency automatically based on file type
• Reduce JPEG image files by 20-25% with no loss of photo quality or data integrity
• Compress using BZip2, LZMA, PPMD and Enhanced Deflate methods
• Compress using Zip 2.0 compatible methods
• 'Auto Open' a zipped Microsoft Office file by simply double-clicking the Zip file icon
• Employ advanced 'Unzip and Try' functionality to review interrelated components contained within a Zip file (such as an HTML page and its associated graphics)

Windows Encrypt/Decrypt (compared across WinZip Standard, WinZip Pro, Compressed Folders)
• Apply encryption and conversion options, including PDF conversion, watermarking and photo resizing, before, during or after creating your zip
• Apply separate conversion options to individual files in your zip
• Take advantage of hardware support in certain Intel-based computers for even faster AES encryption
• Administrative lockdown of encryption methods and password policies
• Check 'Encrypt' to password protect your files using banking-level encryption and keep them completely secure
• Secure sensitive data with strong, FIPS-197 certified AES encryption (128- and 256-bit)
• Auto-wipe ('shred') temporarily extracted copies of encrypted files using the U.S.

R&D Centre for Mobile Applications (RDC) FEE, Dept of Telecommunications Engineering Czech Technical University in Prague RDC Technical Report TR-13-4 Internship report Evaluation of Compressibility of the Output of the Information-Concealing Algorithm Julien Mamelli, [email protected] 2nd year student at the École des Mines d'Alès (Nîmes, France) Internship supervisor: Lukáš Kencl, [email protected] August 2013

Abstract: Compression is a key element in exchanging files over the Internet. By generating redundancies, the concealing algorithm proposed by Kencl and Loebl [?] appears at first glance to be particularly well suited to being combined with a compression scheme [?]. Is the output of the concealing algorithm actually compressible? We have tried 16 compression techniques on 1 120 files, and the result is that we have not found a solution that could take advantage of the repetitions produced by the concealing method.

Acknowledgments: I would like to express my gratitude to my supervisor, Dr Lukáš Kencl, for his guidance and expertise throughout the course of this work. I would like to thank Prof. Robert Bešťák and Mr Pierre Runtz for giving me the opportunity to carry out my internship at the Czech Technical University in Prague. I would also like to thank all the members of the Research and Development Center for Mobile Applications as well as my colleagues for the assistance they have given me during this period.

Contents: 1 Introduction; 2 Related Work; 2.1 Information concealing method; 2.2 Archive formats; 2.3 Compression algorithms; 2.3.1 Lempel-Ziv algorithm

Pack, Encrypt, Authenticate Document Revision: 2021 05 02

PEA Pack, Encrypt, Authenticate Document revision: 2021 05 02 Author: Giorgio Tani Translation: Giorgio Tani This document refers to: PEA file format specification version 1 revision 3 (1.3); PEA file format specification version 2.0; PEA 1.01 executable implementation. The present documentation is released under the GNU GFDL License. The PEA executable implementation is released under the GNU LGPL License; please note that all units provided by the Author are released under LGPL, while Wolfgang Ehrhardt’s crypto library units used in PEA are released under the zlib/libpng License. The PEA file format and PCOMPRESS specifications are hereby released under PUBLIC DOMAIN: the Author neither has, nor is aware of, any patents or pending patents relevant to this technology and does not intend to apply for any patents covering it. As far as the Author knows, the PEA file format in all of its parts is free and unencumbered for all uses. PEA is on the PeaZip project official sites: https://peazip.github.io , https://peazip.org , and https://peazip.sourceforge.io For more information about the licenses: GNU GFDL License, see http://www.gnu.org/licenses/fdl.txt GNU LGPL License, see http://www.gnu.org/licenses/lgpl.txt

Content: Section 1: PEA file format; Description; PEA 1.3 file format details; Differences between 1.3 and older revisions; PEA 2.0 file format details; PEA file format’s and implementation’s limitations; PCOMPRESS compression scheme; Algorithms used in PEA format; PEA security model; Cryptanalysis of PEA format; Data recovery from

Metadefender Core V4.12.2

MetaDefender Core v4.12.2 © 2018 OPSWAT, Inc. All rights reserved. OPSWAT®, Metadefender™ and the OPSWAT logo are trademarks of OPSWAT, Inc. All other trademarks, trade names, service marks, service names, and images mentioned and/or used herein belong to their respective owners.

Table of Contents
About This Guide
Key Features of Metadefender Core
1. Quick Start with Metadefender Core
1.1. Installation
Operating system invariant initial steps
Basic setup
1.1.1. Configuration wizard
1.2. License Activation
1.3. Scan Files with Metadefender Core
2. Installing or Upgrading Metadefender Core
2.1. Recommended System Requirements
System Requirements For Server
Browser Requirements for the Metadefender Core Management Console
2.2. Installing Metadefender
Installation
Installation notes
2.2.1. Installing Metadefender Core using command line
2.2.2. Installing Metadefender Core using the Install Wizard
2.3. Upgrading MetaDefender Core
Upgrading from MetaDefender Core 3.x
Upgrading from MetaDefender Core 4.x
2.4. Metadefender Core Licensing
2.4.1. Activating Metadefender Licenses
2.4.2. Checking Your Metadefender Core License
2.5. Performance and Load Estimation
What to know before reading the results: Some factors that affect performance
How test results are calculated
Test Reports
Performance Report - Multi-Scanning On Linux
Performance Report - Multi-Scanning On Windows
2.6. Special installation options
Use RAMDISK for the tempdirectory
3. Configuring Metadefender Core
3.1. Management Console
3.2.

Steganography and Vulnerabilities in Popular Archives Formats.| Nyxengine Nyx.Reversinglabs.Com

Hiding in the Familiar: Steganography and Vulnerabilities in Popular Archives Formats.| NyxEngine nyx.reversinglabs.com

Contents: Introduction to NyxEngine; Introduction to ZIP file format; Introduction to steganography in ZIP archives; Steganography and file malformation security impacts; References and tools

Introduction to NyxEngine: Steganography is the art and science of writing hidden messages in such a way that no one, apart from the sender and intended recipient, suspects the existence of the message, a form of security through obscurity. When it comes to digital steganography no stone should be left unturned in the search for viable hidden data. Although digital steganography is commonly used to hide data inside multimedia files, a similar approach can be used to hide data in archives as well. Steganography imposes the following data hiding rule: Data must be hidden in such a fashion that the user has no clue about the hidden message or file's existence. This can be achieved by

Improved Neural Network Based General-Purpose Lossless Compression Mohit Goyal, Kedar Tatwawadi, Shubham Chandak, Idoia Ochoa

JOURNAL OF LATEX CLASS FILES, VOL. 14, NO. 8, AUGUST 2015 DZip: improved neural network based general-purpose lossless compression Mohit Goyal, Kedar Tatwawadi, Shubham Chandak, Idoia Ochoa

Abstract: We consider lossless compression based on statistical data modeling followed by prediction-based encoding, where an accurate statistical model for the input data leads to substantial improvements in compression. We propose DZip, a general-purpose compressor for sequential data that exploits the well-known modeling capabilities of neural networks (NNs) for prediction, followed by arithmetic coding. DZip uses a novel hybrid architecture based on adaptive and semi-adaptive training. Unlike most NN based compressors, DZip does not require additional training data and is not restricted to specific data types. The proposed compressor outperforms general-purpose compressors such as Gzip (29% size reduction on average) and 7zip (12% size reduction on average) on a variety of real datasets, achieves near-optimal compression on synthetic datasets, and performs close to specialized compressors for large sequence lengths, without any

[4], [5] and generative modeling [6]). Neural network based models can typically learn highly complex patterns in the data much better than traditional finite context and Markov models, leading to significantly lower prediction error (measured as log-loss or perplexity [4]). This has led to the development of several compressors using neural networks as predictors [7]–[9], including the recently proposed LSTM-Compress [10], NNCP [11] and DecMac [12]. Most of the previous works, however, have been tailored for compression of certain data types (e.g., text [12] [13] or images [14], [15]), where the prediction model is trained in a supervised framework on separate training data or the model architecture is tuned for the specific data type.
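
The link between prediction error and compressed size in the snippet above can be illustrated with a small sketch. It is not DZip: a simple adaptive order-0 frequency model with add-one smoothing stands in for the neural network, and the function name `ideal_code_length_bits` and both sample inputs are assumptions made for this example. The sum of -log2 p(symbol) is the log-loss of the predictor, which is the length an ideal arithmetic coder would approach.

```python
import math
from collections import Counter


def ideal_code_length_bits(data: bytes, alphabet_size: int = 256) -> float:
    """Total log-loss (in bits) of an adaptive order-0 model over `data`.

    The model predicts each byte from the counts of the bytes seen so far,
    with add-one smoothing so unseen symbols keep a non-zero probability.
    """
    counts = Counter()
    seen = 0
    total_bits = 0.0
    for symbol in data:
        p = (counts[symbol] + 1) / (seen + alphabet_size)
        total_bits += -math.log2(p)  # cost of coding this symbol
        counts[symbol] += 1          # update the model after coding
        seen += 1
    return total_bits


# Hypothetical inputs: one highly predictable, one with a flat byte histogram.
skewed = b"a" * 900 + b"b" * 100
uniform = bytes(range(256)) * 4

skewed_bits = ideal_code_length_bits(skewed)
uniform_bits = ideal_code_length_bits(uniform)

print(f"skewed:  {skewed_bits / len(skewed):.2f} bits/byte")
print(f"uniform: {uniform_bits / len(uniform):.2f} bits/byte")
```

A better predictor assigns higher probability to the symbols that actually occur, so its log-loss, and hence the coded length, is lower; that is the sense in which a stronger model yields better compression.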

Winzip 12 Reviewer's Guide

Introducing WinZip® 12 WinZip® is the most trusted way to work with compressed files. No other compression utility is as easy to use or offers the comprehensive and productivity-enhancing approach that has made WinZip the gold standard for file-compression tools. With the new WinZip 12, you can quickly and securely zip and unzip files to conserve storage space, speed up e-mail transmission, and reduce download times. State-of-the-art file compression, strong AES encryption, compatibility with more compression formats, and new intuitive photo compression, make WinZip 12 the complete compression and archiving solution. Building on the favorite features of a worldwide base of several million users, WinZip 12 adds new features for image compression and management, support for new compression methods, improved compression performance, support for additional archive formats, and more. Users can work smarter, faster, and safer with WinZip 12. Who will benefit from WinZip® 12? The simple answer is anyone who uses a PC. Any PC user can benefit from the compression and encryption features in WinZip to protect data, save space, and reduce the time to transfer files on the Internet. There are, however, some PC users to whom WinZip is an even more valuable and essential tool. Digital photo enthusiasts: As the average file size of their digital photos increases, people are looking for ways to preserve storage space on their PCs. They have lots of photos, so they are always seeking better ways to manage them. Sharing their photos is also important, so they strive to simplify the process and reduce the time of e-mailing large numbers of images. -

Genomic Data Compression

Genomic data compression S. Cenk Sahinalp Bogaz’da Yaz Okulu 2018

Genome storage and communication: the need
• Research: massive genome projects (e.g. PCAWG) require to exchange 10000s of genomes.
• Need to cover >200 cancer types, many subtypes.
• Within PCAWG TCGA to sequence 11K patients covering 33 cancer types. ICGC to cover 50 cancer types, >15PB data at The Cancer Genome Collaboratory
• Clinic: $100s/genome (e.g. NovaSeq) enable sequencing to be a standard tool for pathology
• PCAWG estimates that 250M+ individuals will be sequenced by 2030

Current needs
• Typical technology: Illumina NovaSeq 100-400 bp reads, 250-500GB uncompressed data for high coverage human genome, high redundancy
• 40% of the human genome is repetitive (mobile genetic elements, centromeric DNA, segmental duplications, etc.)
• Upload/download: 55 hrs on a 10Mbit consumer line; 5.5 hrs on a 100Mbit high speed connection
• Technologies under development: expensive, longer reads, higher error (PacBio, Nanopore) – lower redundancy and higher error rates limit compression

File formats
• Raw read data: FASTQ/FASTA – dominant fields: (read name, sequence, quality score)
• Mapped read data: SAM/BAM – reads reordered based on mapping locus on a reference genome (not suitable for metagenomics, organisms with no reference)
• Key decisions to be made:
• Each format can be compressed through specialized methods – should there be a standardized format for compressed genomes?
• Better file formats based on mapping loci on sequence graphs representing common variants in a pan-genomic reference?
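
The upload/download figures in the slide can be checked with a few lines of arithmetic. This is a minimal sketch: it assumes the lower end of the quoted data size (250 GB), decimal units (1 GB = 10^9 bytes, 1 Mbit = 10^6 bits), and a link running at its full nominal rate. The function name `transfer_hours` is made up for the example.

```python
def transfer_hours(size_gb: float, rate_mbit_per_s: float) -> float:
    """Hours needed to move `size_gb` gigabytes over a `rate_mbit_per_s` link."""
    bits = size_gb * 1e9 * 8                  # gigabytes -> bits
    seconds = bits / (rate_mbit_per_s * 1e6)  # bits / (bits per second)
    return seconds / 3600


consumer_line = transfer_hours(250, 10)    # 10 Mbit consumer line
high_speed = transfer_hours(250, 100)      # 100 Mbit high speed connection

print(f"10 Mbit/s:  {consumer_line:.1f} h")
print(f"100 Mbit/s: {high_speed:.1f} h")
```

Under these assumptions the results come out at roughly 55.6 and 5.6 hours, in line with the 55 hrs and 5.5 hrs quoted in the slide.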

Redalyc.A Lossy Method for Compressing Raw CCD Images

Revista Mexicana de Astronomía y Astrofísica ISSN: 0185-1101 [email protected] Instituto de Astronomía México Watson, Alan M. A Lossy Method for Compressing Raw CCD Images Revista Mexicana de Astronomía y Astrofísica, vol. 38, núm. 2, octubre, 2002, pp. 233-249 Instituto de Astronomía Distrito Federal, México Available in: http://www.redalyc.org/articulo.oa?id=57138212 Scientific Information System Network of Scientific Journals from Latin America, the Caribbean, Spain and Portugal Non-profit academic project, developed under the open access initiative

Revista Mexicana de Astronomía y Astrofísica, 38, 233–249 (2002) A LOSSY METHOD FOR COMPRESSING RAW CCD IMAGES Alan M. Watson Instituto de Astronomía Universidad Nacional Autónoma de México, Campus Morelia, México Received 2002 June 3; accepted 2002 August 7

ABSTRACT: A method is presented for compressing raw images from devices such as CCDs. The method is very simple: lossy quantization followed by lossless compression with general-purpose tools such as gzip or bzip2. The compressed files are converted to FITS files by decompressing them with gunzip or bunzip2, which is an important advantage in the distribution of compressed data. The degree of quantization is chosen to eliminate the low-order bits, which over-sample the noise, provide no information, and are difficult or impossible to compress. The method is lossy, but provides certain guarantees on the maximum absolute difference, the RMS difference, and the mean difference between the compressed image and the original image; such guarantees imply that the method is suitable for compressing raw images.
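
The two-step idea in the abstract (lossy quantization, then lossless general-purpose compression) can be sketched as follows. This is an illustration under stated assumptions, not Watson's implementation: the synthetic frame (a constant level of 1000 counts with Gaussian noise of sigma 10), the fixed quantization step `q = 8`, and the helper names are all hypothetical, and `zlib` (the DEFLATE algorithm used by gzip) stands in for the gzip command-line tool.

```python
import random
import struct
import zlib


def quantize(pixels, q):
    """Lossy step: round each non-negative pixel to the nearest multiple of q."""
    return [q * ((p + q // 2) // q) for p in pixels]


def pack(pixels):
    """Serialize pixels as big-endian unsigned 16-bit integers."""
    return struct.pack(f">{len(pixels)}H", *pixels)


def compressed_size(pixels):
    """Lossless step: size in bytes after zlib compression at maximum level."""
    return len(zlib.compress(pack(pixels), 9))


rng = random.Random(0)
# Hypothetical raw frame; values stay far below the 16-bit limit of 65535.
raw = [max(0, round(rng.gauss(1000, 10))) for _ in range(100_000)]

q = 8  # assumed quantization step, somewhat below the noise sigma
quantized = quantize(raw, q)

max_abs_diff = max(abs(a - b) for a, b in zip(raw, quantized))
raw_size = compressed_size(raw)
quantized_size = compressed_size(quantized)

print(f"max |raw - quantized| = {max_abs_diff} (bound: {q // 2})")
print(f"compressed bytes: raw {raw_size}, quantized {quantized_size}")
```

Rounding to a multiple of `q` bounds the per-pixel error by `q // 2`, which is the kind of guarantee on the maximum absolute difference the abstract refers to, while discarding the noisy low-order bits leaves far fewer distinct values for the lossless stage to encode.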

Winzip 15 Matrix 4.Cdr

15 Feature Comparison

Compress/Decompress (compared across Standard, Pro)
• NEW! Zip and unzip files quickly and easily with a brand new zip engine
• Compress files to save space and reduce transmission times
• Compress multiple files instantly using WinZip's handy Zip button*
• Split large Zip files so they fit on removable media
• Zip and unzip very large Zip files (>4GB)
• Create Zip, LHA, and Zipx files, our smallest Zip files ever
• Open and extract Zip, Zipx, RAR, 7Z, LHA, BZ2, CAB, IMG, ISO, and most other compressed file formats
• NEW! Drag files onto the new WinZip Desktop Gadget to instantly zip/unzip them
• Utilize 'Best Compression' method to maximize efficiency automatically based on file type
• Reduce JPEG image files by 20 to 25% with no loss of photo quality or data integrity
• Use '1-Click Unzip' to automatically extract the contents of an archive into a folder it creates, and then open that folder in Windows Explorer for easy editing access*
• 'Auto Open' a zipped document, spreadsheet, or presentation in its associated Microsoft Office application by simply double clicking the Zip file within Windows Explorer or Microsoft Outlook*
• Use 'Open With' to unzip a compressed file using the default Windows file association (or an application you specify), and, if you make changes to the opened file, WinZip will offer to save them back to the Zip file for you*
• Use 'Save As', 'Rename', and 'New Folder' commands to easily manage Zip files
• Experience faster zipping performance on most files using optional LZMA compression
• View international characters in filenames thanks to WinZip's Unicode support

Encrypt/Decrypt (compared across Standard, Pro)
• Encrypt and decrypt confidential files and email attachments
• Simply check 'Encrypt' to password protect your files and keep them completely secure
• Secure sensitive data with strong, FIPS-197 certified AES encryption (128- and 256-bit)
• Auto-wipe ('shred') temporarily extracted copies of encrypted files using the U.S.

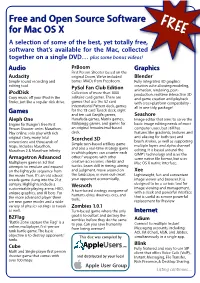

About FOSS for Mac OS X (FREE)

Free and Open Source Software FREE for Mac OS X A selection of some of the best, yet totally free, software that’s available for the Mac, collected together on a single DVD… plus some bonus videos!

Audio
Audacity: Simple sound recording and editing tool.
iPodDisk: Copy music off your iPod in the finder, just like a regular disk drive.

Games
Aleph One: Engine for Bungie's free First Person Shooter series Marathon. Play online, solo play with rich original story, many total conversions and thousands of maps. Includes Marathon, Marathon 2 & Marathon Infinity
Armagetron Advanced: Multiplayer game in 3d that
PrBoom: First Person Shooter based on the original Doom. We’ve included bonus IWADs from Freedoom.
PySol Fan Club Edition: Collection of more than 1000 solitaire card games. There are games that use the 52 card International Pattern deck, games for the 78 card Tarock deck, eight and ten suit Ganjifa games, Hanafuda games, Matrix games, Mahjongg games, and games for an original hexadecimal-based deck.
Scorched 3D: Simple turn-based artillery game and also a real-time strategy game in which players can counter each others' weapons with other creative accessories, shields and

Graphics
Blender: Fully integrated 3D graphics creation suite allowing modeling, animation, rendering, post-production, realtime interactive 3D and game creation and playback with cross-platform compatibility - all in one tidy, package!
Seashore: Image editor that aims to serve the basic image editing needs of most computer users, but still has features like gradients, textures and anti-aliasing for both text and brush strokes, as well as supporting multiple layers and alpha channel editing. It is based around the GIMP's technology and uses the same native file format, but uses Mac OS X native interface.