Test First User Interfaces

Recommended publications

Contents Credits & Contacts

Overload issue 72, April 2006. Contents: Multithreading 101 (Tim Penhey); To Grin Again (Alan Griffiths); A Fistful of Idioms (Steve Love); C++ Best Practice: Designing Header Files (Alan Griffiths); Visiting Alice (Phil Bass). Credits & Contacts: Overload Editor: Alan Griffiths. Contributing Editor: Mark Radford. Advisors: Phil Bass, Thaddaeus Frogley, Richard Blundell, Pippa Hennessy, Tim Penhey. Advertising: Thaddaeus Frogley. Overload is a publication of the ACCU. For details of the ACCU and other ACCU publications and activities, see the ACCU website. ACCU Website: http://www.accu.org/ Information and Membership: Join on the website or contact David Hodge. Publications Officer: John Merrells. ACCU Chair: Ewan Milne. Copy Deadlines: All articles intended for publication in Overload 73 should be submitted to the editor by May 1st 2006, and for Overload 74 by July 1st 2006. Editorial: Doing What You Can. Your magazine needs you! If you look at the contents of this issue of Overload you’ll see that most of the feature content has been written by the editorial team. You might even notice that the remaining article is not new material. To an extent this is a predictable consequence of the time of year: many of the potential contributors are busy preparing for the conference. However, as editor for the last couple of years I’ve noticed that there is a worrying decline in proposals for articles from authors. More on WG14: Last time I commented on the fact that I was hearing -

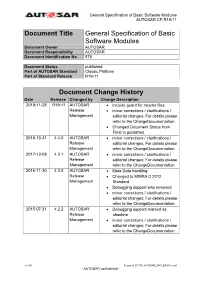

General Specification of Basic Software Modules AUTOSAR CP R19-11

General Specification of Basic Software Modules, AUTOSAR CP R19-11. Document Title: General Specification of Basic Software Modules. Document Owner: AUTOSAR. Document Responsibility: AUTOSAR. Document Identification No: 578. Document Status: published. Part of AUTOSAR Standard: Classic Platform. Part of Standard Release: R19-11. Document ID 578: AUTOSAR_SWS_BSWGeneral (AUTOSAR confidential). Document Change History (Date, Release, Changed by: Change Description): 2019-11-28, R19-11, AUTOSAR Release Management: include guard for header files; minor corrections / clarifications / editorial changes (for details please refer to the ChangeDocumentation); changed Document Status from Final to published. 2018-10-31, 4.4.0, AUTOSAR Release Management: minor corrections / clarifications / editorial changes (for details please refer to the ChangeDocumentation). 2017-12-08, 4.3.1, AUTOSAR Release Management: minor corrections / clarifications / editorial changes (for details please refer to the ChangeDocumentation). 2016-11-30, 4.3.0, AUTOSAR Release Management: Meta Data handling; changed to MISRA C 2012 Standard; debugging support was removed; minor corrections / clarifications / editorial changes (for details please refer to the ChangeDocumentation). 2015-07-31, 4.2.2, AUTOSAR Release Management: debugging support marked as obsolete; minor corrections / clarifications / editorial changes (for details please refer to the ChangeDocumentation). 2014-10-31 -

Rejuvenating Macros As C++11 Declarations

Rejuvenating C++ Programs through Demacrofication. Aditya Kumar, Andrew Sutton, Bjarne Stroustrup. Computer Science Engineering, Texas A&M University, College Station, Texas-77840. Abstract: We describe how legacy C++ programs can be rejuvenated using C++11 features such as generalized constant expressions, perfect forwarding, and lambda expressions. In general, this work develops a correspondence between different kinds of macros and the C++ declarations to which they should be transformed. We have created a set of demacrofication tools to assist a developer in the rejuvenation of C++ programs. To evaluate the work, we have applied the rejuvenation tools to a number of C++ libraries to assess the extent to which these libraries might be improved by demacrofication. Results indicate that between 68 and 98% of potentially refactorable macros could be transformed into C++11 declarations. Additional experiments ... [Introduction, second column] ... of the C preprocessor has been reduced. The use of generalized constant expressions, type deduction, perfect forwarding, lambda expressions, and alias templates eliminate the need for many previous preprocessor-based idioms and solutions. Older C++ programs can be rejuvenated by replacing error-prone uses of the C preprocessor with type safe C++11 declarations. In this paper, we present methods and tools to support the task of demacrofying C++ programs during rejuvenation. This task is intended to be completed with the help of a programmer; it is not a fully automated process. -

Source Listing for Project Pala

Source Listing for project pala. Ulrich Mutze, www.ulrichmutze.de, July 1, 2020. Contents: 1 Introduction, Draft; 1.1 Addition for usage of multiple precision arithmetic; 1.2 Code Structure; 1.3 The First Steps in the Program; 2 Legal Matters; 3 alloca.h; 4 cpmactionprinciple.h; 5 cpmactionprinciple.cpp; 6 cpmalgorithms.h; 7 cpmalgorithms.cpp; 8 cpmangle.h; 9 cpmangle.cpp; 10 cpmapplication.h; 11 cpmapplication.cpp; 12 cpmbasicinterfaces.h; 13 cpmbasictypes.h; 14 cpmbody.h; 15 cpmbody.cpp; 16 cpmbodycpp.h; 17 cpmbodyx.h; 18 cpmc.h; 19 cpmc.cpp; 20 cpmcamera.h; 21 cpmcamera.cpp; 22 cpmcamera2.h; 23 cpmcamera2cpp.h; 24 cpmcamera3.h; 25 cpmcamera3cpp.h; 26 cpmcamerax.h; 27 cpmcameraxcpp.h; 28 cpmclinalg.h; 29 cpmcompar.h; 30 cpmcompar.cpp; 31 cpmcomparcpp.h; 32 cpmcomparx.h; 33 cpmcompdef.h; 34 cpmconstphys.h; 35 cpmconstphys.cpp; 36 cpmdefinitions.h; 37 cpmdigraph.h; 38 cpmdigraph.cpp; 39 cpmdim.h; 40 cpmdim.cpp; 41 cpmdim2.h; 42 cpmdim2cpp.h; 43 cpmdim3.h; 44 cpmdim3cpp.h; 45 cpmdimdef.h; 46 cpmdimx.h; 47 cpmdimxcpp.h; 48 cpmdiscretespace.h; 49 cpmdistribution.h; 50 cpmdistribution.cpp; 51 cpmdynaux.h; 52 cpmdynaux.cpp; 53 cpmextractor.h; 54 cpmextractorx.h; 55 cpmf.h; 56 cpmfa.h; 57 cpmfft.h; 58 cpmfftmore.h; 59 cpmfield.h; 60 cpmfile.h; 61 cpmfl.h; 62 cpmfo.h; 63 cpmfontgeneration.h; 64 cpmfontgeneration.cpp; 65 cpmforces.h; 66 cpmforces.cpp -

C++ for C# Developers

JacksonDunstan.com Copyright © 2021 Jackson Dunstan All rights reserved Table of Contents 1. Introduction 2. Primitive Types and Literals 3. Variables and Initialization 4. Functions 5. Build Model 6. Control Flow 7. Pointers, Arrays, and Strings 8. References 9. Enumerations 10. Struct Basics 11. Struct Functions 12. Constructors and Destructors 13. Initialization 14. Inheritance 15. Struct and Class Permissions 16. Struct and Class Wrapup 17. Namespaces 18. Exceptions 19. Dynamic Allocation 20. Implicit Type Conversion 21. Casting and RTTI 22. Lambdas 23. Compile-Time Programming 24. Preprocessor 25. Intro to Templates 26. Template Parameters 27. Template Deduction and Specialization 28. Variadic Templates 29. Template Constraints 30. Type Aliases 31. Deconstructing and Attributes 32. Thread-Local Storage and Volatile 33. Alignment, Assembly, and Language Linkage 34. Fold Expressions and Elaborated Type Specifiers 35. Modules, The New Build Model 36. Coroutines 37. Missing Language Features 38. C Standard Library 39. Language Support Library 40. Utilities Library 41. System Integration Library 42. Numbers Library 43. Threading Library 44. Strings Library 45. Array Containers Library 46. Other Containers Library 47. Containers Library Wrapup 48. Algorithms Library 49. Ranges and Parallel Algorithms 50. I/O Library 51. Missing Library Features 52. Idioms and Best Practices 53. Conclusion 1. Introduction History C++’s predecessor is C, which debuted in 1972. It is still the most used language with C++ in fourth place and C# in fifth. C++ got started with the name “C with Classes” in 1979. The name C++ came later in 1982. The original C++ compiler, Cfront, output C source files which were then compiled to machine code. -

More C++ Idioms/Print Version

More C++ Idioms/Print Version. Preface: C++ has indeed become too "expert friendly" --- Bjarne Stroustrup, The Problem with Programming [1], Technology Review, Nov 2006. Stroustrup's saying is true because experts are intimately familiar with the idioms in the language. With the increase in the idioms a programmer understands, the language becomes friendlier to him or her. The objective of this open content book is to present modern C++ idioms to programmers who have a moderate level of familiarity with C++, and help elevate their knowledge so that C++ feels much friendlier to them. It is designed to be an exhaustive catalog of reusable idioms that expert C++ programmers often use while programming or designing using C++. This is an effort to capture their techniques and vocabulary into a single work. This book describes the idioms in a regular format: Name-Intent-Motivation-Solution-References, which is succinct and helps speed learning. By their nature, idioms tend to have appeared in the C++ community and in published work many times. An effort has been made to refer to the original source(s) where possible; if you find a reference incomplete or incorrect, please feel free to suggest or make improvements. The world is invited to catalog reusable pieces of C++ knowledge (similar to the book on design patterns by GoF). The goal here is to first build an exhaustive catalog of modern C++ idioms and later evolve it into an idiom language, just like a pattern language. Finally, the contents of this book can be redistributed under the terms of the GNU Free Documentation License. -

Industrial Strength C++

Industrial Strength C++. Mats Henricson, Erik Nyquist. Prentice-Hall PTR. Copyright ©1997 Mats Henricson, Erik Nyquist and Ellemtel Utvecklings AB. Published by Prentice Hall PTR. All rights reserved. ISBN 0-13-120965-5. Contents: Naming; Meaningful names; Names that collide; Illegal naming; Organizing the code; Comments; Control flow; Object Life Cycle; Initialization of variables and constants; Constructor initializer lists; Copying of objects; Conversions; The class interface; Inline functions; Argument passing and return values; Const Correctness; Overloading and default arguments; Conversion functions; new and delete; Static Objects; Object-oriented programming; Encapsulation; Dynamic binding; Inheritance; The Class Interface; Assertions; Error handling; Different ways to report errors; When to throw exceptions; Exception-safe code; Exception types; Error recovery; Exception specifications; Parts of C++ to avoid; Library functions to avoid; Language constructs to avoid; Size of executables; Portability; General aspects of portability; Including files; The size and layout of objects; Unsupported language features; Other compiler differences; Style; General Aspects of Style; Naming conventions; File-name extensions; Lexical style; Appendix: Terminology; Rules and recommendations; Index. Examples: EXAMPLE 1.1 Naming a variable; EXAMPLE 1.2 Different ways to -

Pattern-Oriented Software Architecture, Volume 2.Pdf

Pattern-Oriented Software Architecture, Patterns for Concurrent and Networked Objects, Volume 2, by Douglas Schmidt, Michael Stal, Hans Rohnert and Frank Buschmann. ISBN: 0471606952. John Wiley & Sons © 2000 (633 pages). An examination of 17 essential design patterns used to build modern object-oriented middleware systems. Table of Contents: Pattern-Oriented Software Architecture: Patterns for Concurrent and Networked Objects, Volume 2; Foreword; About this Book; Guide to the Reader; Chapter 1 - Concurrent and Networked Objects; Chapter 2 - Service Access and Configuration Patterns; Chapter 3 - Event Handling Patterns; Chapter 4 - Synchronization Patterns; Chapter 5 - Concurrency Patterns; Chapter 6 - Weaving the Patterns Together; Chapter 7 - The Past, Present, and Future of Patterns; Chapter 8 - Concluding Remarks; Glossary; Notations; References; Index of Patterns; Index; Index of Names. Authors: Douglas Schmidt, University of California, Irvine; Michael Stal, Siemens AG, Corporate Technology; Hans Rohnert, Siemens AG, Germany; Frank Buschmann, Siemens AG, Corporate Technology. John Wiley & Sons, Ltd, Chichester · New York · Weinheim · Brisbane · Singapore · Toronto. Copyright © 2000 by John Wiley & Sons, Ltd, Baffins Lane, Chichester, West Sussex PO19 1UD, England. National 01243 779777, International (+44) 1243 779777. Visit our Home Page on http://www.wiley.co.uk or http://www.wiley.com All rights reserved. -

Lecture 5: Operator Overloading and Structuring Programs

Math 4997-3 Lecture 5: Operator overloading and structuring programs. https://www.cct.lsu.edu/~pdiehl/teaching/2019/4977/ This work is licensed under a Creative Commons “Attribution-NonCommercial-NoDerivatives 4.0 International” license. Outline: Reminder; Operator overloading; Structure of code; Header files; Class types; CMake; Summary. Reminder (Lecture 4), what you should know from last lecture: N-body simulations; struct; generic programming (templates). Operator overloading. Example vector: template<typename T> struct vector { T x; T y; T z; }; Addition of two vectors: vector<double> a; vector<double> b; std::cout << a + b << std::endl; Compilation error: error: no match for ‘operator+’ (operand types are ‘vector’ and ‘vector’). Operator overloading [1]: template<typename T> struct vector { T x; T y; T z; /* Overload the addition operator */ vector<T> operator+(const vector<T> rhs){ return vector<T>{ x + rhs.x, y + rhs.y, z + rhs.z }; } }; The following operators can be overloaded: 38 operators can be overloaded; 40 operators can be overloaded since C++20. [1] https://en.cppreference.com/w/cpp/language/operators D’oh! error: no match for ‘operator<<’ (operand types are ‘std::ostream {aka std::basic_ostream}’ and ‘vector’) std::cout << a + b << std::endl; Can we compile now? template<typename T> struct -

Ns-3 Design Overview

ns-3 Design Overview. ns-3 project, http://www.nsnam.org/ September 17, 2006. Introduction: This ns-3 design document is intended to document the technical goals, software architecture, implementation choices, and interfaces of the simulator. It provides a place to record design agreements or archive design decisions for future reference. It is expected that this document can evolve or fork to become an “ns-3 Developers Guide” at some point in the future. This document is written in LaTeX and is to be maintained in revision control on the ns-3 code server. Changes to the document should be discussed on the project mailing list. Contents: 1 Functional Overview; 1.1 Goals; 1.2 User experience; 1.2.1 Installation; 1.2.2 User interface; 1.2.3 Scenario definition; 1.2.4 Capabilities; 1.3 Documentation; 1.4 Requirements from Collaborators; 1.4.1 VINI; 1.4.2 Emulab; 1.4.3 Network Simulation Cradle; 1.4.4 Large scale simulations; 2 Technical Overview; 2.1 Basics -

C Programming Standards and Guidelines (NOAA/NESDIS/STAR Training Document TD-11.2)

NOAA NESDIS CENTER for SATELLITE APPLICATIONS and RESEARCH. TRAINING DOCUMENT TD-11.2: C PROGRAMMING STANDARDS and GUIDELINES, Version 3.0. Hardcopy Uncontrolled. NOAA/NESDIS/STAR TRAINING DOCUMENT TD-12.1.3, Version: 2.0, Date: September 30, 2007. TITLE: TD-11.2: C PROGRAMMING STANDARDS AND GUIDELINES VERSION 3.0. AUTHORS: Alward Siyyid (formerly Raytheon Information Solutions, version 1), Ken Jensen (Raytheon Information Solutions), Peter Keehn (PSGS), Shanna Sampson (PSGS). VERSION HISTORY SUMMARY (Version: Description; Revised Sections; Date): 1.0: New Work Instruction (WI) adapted from Raytheon SOI 506 by Alward Siyyid (Raytheon Information Solutions); New; 05/05/2006. 1.1: Document revision by Ken Jensen (Raytheon Information Solutions), applied STAR standard style to entire document; All; 06/02/2006. 2.0: Revision by Shanna Sampson (Perot), Peter Keehn (Perot), and Ken Jensen (Raytheon Information Solutions), changed from WI-12.1.2 to Training Document TD-12.1.3 for version 2 of the STAR Enterprise Product Lifecycle (EPL), numerous revisions in response to peer review comments; All; 09/30/2007. 3.0: Renamed TD-11.2 and revised by Ken Jensen (RIS) for version 3; 1, 2; 10/1/2009. TABLE OF CONTENTS: LIST OF ACRONYMS; 1. INTRODUCTION; 1.1. Objective; 1.2. Background; 1.3. C Versions; 1.4. -

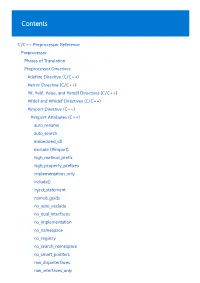

C/C++ Preprocessor Reference

Contents C/C++ Preprocessor Reference Preprocessor Phases of Translation Preprocessor Directives #define Directive (C/C++) #error Directive (C/C++) #if, #elif, #else, and #endif Directives (C/C++) #ifdef and #ifndef Directives (C/C++) #import Directive (C++) #import Attributes (C++) auto_rename auto_search embedded_idl exclude (#import) high_method_prefix high_property_prefixes implementation_only include() inject_statement named_guids no_auto_exclude no_dual_interfaces no_implementation no_namespace no_registry no_search_namespace no_smart_pointers raw_dispinterfaces raw_interfaces_only raw_method_prefix raw_native_types raw_property_prefixes rename (#import) rename_namespace rename_search_namespace tlbid #include Directive (C/C++) #line Directive (C/C++) Null Directive #undef Directive (C/C++) #using Directive (C++) Preprocessor Operators Stringizing Operator (#) Charizing Operator (#@) Token-Pasting Operator (##) Macros (C/C++) Macros and C++ Variadic Macros Predefined Macros Grammar Summary (C/C++) Definitions for the Grammar Summary Conventions Preprocessor Grammar Pragma Directives and the __Pragma Keyword alloc_text auto_inline bss_seg check_stack code_seg comment (C/C++) component conform const_seg data_seg deprecated (C/C++) detect_mismatch execution_character_set fenv_access float_control fp_contract function (C/C++) hdrstop include_alias init_seg inline_depth inline_recursion intrinsic loop make_public managed, unmanaged message omp once optimize pack pointers_to_members pop_macro push_macro region, endregion runtime_checks