Anonymity and Unobservability

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

PVC Technical Specifications V.1.0

Pryvate™ Ltd. Functional & Technical Specifications. PVC Technical Specifications V.1.0, April 10, 2018. © PRYVATE™ 2018. PRYVATE™ is a suite of security products from Criptyque Ltd., registered in the Cayman Islands. PRYVATE™ is a brand wholly owned by Criptyque Ltd. Table of contents: 1 General Information: 1.1 Scope; 1.2 Current Platform Summary. 2 Functional Tech Specifications: 2.1 Encrypted Voice Calls (VoIP); 2.2 Off Net Calling; 2.3 Secure Conferencing; 2.4 Encrypted Video Calls; 2.5 Encrypted Instant Message (IM); 2.6 Notification of Screenshots; 2.7 Encrypted Email; 2.8 Secure File Transfer & Storage; 2.9 Pin-Encrypted Mobile Protection; 2.10 Multiple Account Management; 2.11 Secure managed conversations; 2.12 Anti-Blocking. 3 Hybridization: 3.1 Voice / Video / Messaging; 3.2 File Storage / Archival; 3.3 Pryvate Crypto Wallet (3.3.1 Two-Wallet Solution; 3.3.2 Three Methods; 3.3.3 Enterprise Multi - by Pryvate; 3.3.4 Risks of Cryptocurrency Wallets); 3.4 Decentralized Email; 3.5 Pryvate Dashboard. 4 Performance Requirements: 4.1 System Maintenance; 4.2 Failure Contingencies; 4.3 Customization and Flexibility; 4.4 Equipment; 4.5 Software; 4.6 Interface / UI. 5 Conclusion. 6 Appendix.
Acronyms and definitions: SCP = Secure Communications Platform. Crypto = Cryptocurrency. IPFS = InterPlanetary File System. ZRTP = a cryptographic key-agreement protocol that negotiates the encryption keys between two endpoints in a Voice over Internet Protocol (VoIP) call ("Z" is a reference to its inventor, Zimmermann; "RTP" stands for Real-time Transport Protocol). Diffie-Hellman = a method of securely exchanging cryptographic keys over a public channel; it was one of the first public-key protocols, originally conceptualized by Ralph Merkle and named after Whitfield Diffie and Martin Hellman. -
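The Diffie-Hellman definition above can be made concrete with a minimal sketch. Nothing here comes from the Pryvate specification: the modulus `P`, the base `G`, and the helper name `dh_keypair` are illustrative assumptions, and the 32-bit modulus is far too small for real use.

```python
import secrets

# Toy parameters chosen only for readability (assumption, not from the spec).
# Real deployments use standardized groups of 2048 bits or more, or elliptic curves.
P = 4294967291  # the largest prime below 2**32
G = 5           # public base

def dh_keypair(p=P, g=G):
    """Return (private, public) where public = g**private mod p."""
    private = secrets.randbelow(p - 3) + 2  # uniform in [2, p - 2]
    public = pow(g, private, p)
    return private, public

# Each party keeps its private value and sends only the public value.
alice_priv, alice_pub = dh_keypair()
bob_priv, bob_pub = dh_keypair()

# Both sides raise the other's public value to their own private exponent
# and arrive at the same shared secret without ever transmitting it.
alice_shared = pow(bob_pub, alice_priv, P)
bob_shared = pow(alice_pub, bob_priv, P)
assert alice_shared == bob_shared
```

An eavesdropper on the public channel sees only `P`, `G`, and the two public values; protocols such as ZRTP additionally authenticate the exchange, which this sketch leaves out.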

Everyone's Guide to Bypassing Internet Censorship

EVERYONE’S GUIDE TO BY-PASSING INTERNET CENSORSHIP FOR CITIZENS WORLDWIDE. A Civisec Project, The Citizen Lab, The University of Toronto, September 2007. Cover illustration by Jane Gowan. Contents: Glossary; Introduction; Choosing Circumvention (User self-assessment, Provider self-assessment); Technology (Web-based Circumvention Systems, Tunneling Software, Anonymous Communications Systems); Tricks of the trade; Things to remember; Further reading. Circumvention Technologies: Circumvention technologies are any tools, software, or methods used to bypass Internet filtering. These can range from complex computer programs to relatively simple manual steps, such as accessing a banned website stored on a search engine’s cache, instead of trying to access it directly. Circumvention Providers: Circumvention providers install software on a computer in a non-filtered location and make connections to this computer available to those who access the Internet from a censored location. Circumvention providers can range from large commercial organizations offering circumvention services for a fee to individuals providing circumvention services for free. Circumvention Users: Circumvention users are individuals who use circumvention technologies to bypass Internet content filtering. Internet censorship, or content filtering, has become a major global problem. Whereas once it was assumed that states could not control Internet communications, according to research by the OpenNet Initiative (http://opennet.net) more than 25 countries now engage in Internet censorship practices. Those with the most pervasive filtering policies have been found to routinely block access to human rights organizations, news, blogs, and web services that challenge the status quo or are deemed threatening or undesirable. -

Threat Modeling and Circumvention of Internet Censorship by David Fifield

Threat modeling and circumvention of Internet censorship, by David Fifield. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate Division of the University of California, Berkeley. Committee in charge: Professor J.D. Tygar, Chair; Professor Deirdre Mulligan; Professor Vern Paxson. Fall 2017. Abstract: Research on Internet censorship is hampered by poor models of censor behavior. Censor models guide the development of circumvention systems, so it is important to get them right. A censor model should be understood not just as a set of capabilities (such as the ability to monitor network traffic) but as a set of priorities constrained by resource limitations. My research addresses the twin themes of modeling and circumvention. With a grounding in empirical research, I build up an abstract model of the circumvention problem and examine how to adapt it to concrete censorship challenges. I describe the results of experiments on censors that probe their strengths and weaknesses; specifically, on the subject of active probing to discover proxy servers, and on delays in their reaction to changes in circumvention. I present two circumvention designs: domain fronting, which derives its resistance to blocking from the censor's reluctance to block other useful services; and Snowflake, based on quickly changing peer-to-peer proxy servers. I hope to change the perception that the circumvention problem is a cat-and-mouse game that affords only incremental and temporary advancements. -

Blockchain: an Enabler for Power Market Operations Exploring Potential Uses of Distributed Ledger Technology in the Evolving Georgian Power Market

BLOCKCHAIN: AN ENABLER FOR POWER MARKET OPERATIONS. EXPLORING POTENTIAL USES OF DISTRIBUTED LEDGER TECHNOLOGY IN THE EVOLVING GEORGIAN POWER MARKET. USAID GOVERNING FOR GROWTH (G4G) IN GEORGIA. 30 May 2019. This publication was produced for review by the United States Agency for International Development. It was prepared by Deloitte Consulting LLP. The author’s views expressed in this publication do not necessarily reflect the views of the United States Agency for International Development or the United States Government. Contract number: AID-114-C-14-00007. Deloitte Consulting LLP. USAID | Georgia. USAID Contracting Officer’s Representative: Phillip Greene. Author(s): Sri Sekar, James Callihan, Avtandili Todua. Activity area: 4420. Language: English. Data. Reviewed by: Giorgi Giorgobiani, Andrea Lora. Project Component: Energy Trade Policy Improvement Component. Practice Area: Electricity Trading Mechanism (ETM). Key Words: Blockchain, -

A Framework for Identifying Host-Based Artifacts in Dark Web Investigations

Dakota State University Beadle Scholar Masters Theses & Doctoral Dissertations Fall 11-2020 A Framework for Identifying Host-based Artifacts in Dark Web Investigations Arica Kulm Dakota State University Follow this and additional works at: https://scholar.dsu.edu/theses Part of the Databases and Information Systems Commons, Information Security Commons, and the Systems Architecture Commons Recommended Citation Kulm, Arica, "A Framework for Identifying Host-based Artifacts in Dark Web Investigations" (2020). Masters Theses & Doctoral Dissertations. 357. https://scholar.dsu.edu/theses/357 This Dissertation is brought to you for free and open access by Beadle Scholar. It has been accepted for inclusion in Masters Theses & Doctoral Dissertations by an authorized administrator of Beadle Scholar. For more information, please contact [email protected]. A FRAMEWORK FOR IDENTIFYING HOST-BASED ARTIFACTS IN DARK WEB INVESTIGATIONS A dissertation submitted to Dakota State University in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Cyber Defense November 2020 By Arica Kulm Dissertation Committee: Dr. Ashley Podhradsky Dr. Kevin Streff Dr. Omar El-Gayar Cynthia Hetherington Trevor Jones ii DISSERTATION APPROVAL FORM This dissertation is approved as a credible and independent investigation by a candidate for the Doctor of Philosophy in Cyber Defense degree and is acceptable for meeting the dissertation requirements for this degree. Acceptance of this dissertation does not imply that the conclusions reached by the candidate are necessarily the conclusions of the major department or university. Student Name: Arica Kulm Dissertation Title: A Framework for Identifying Host-based Artifacts in Dark Web Investigations Dissertation Chair: Date: 11/12/20 Committee member: Date: 11/12/2020 Committee member: Date: Committee member: Date: Committee member: Date: iii ACKNOWLEDGMENT First, I would like to thank Dr. -

Polish Journal for American Studies Yearbook of the Polish Association for American Studies

Polish Journal for American Studies: Yearbook of the Polish Association for American Studies, Vol. 14 (Spring 2020). Institute of English Studies, University of Warsaw. Warsaw 2020. MANAGING EDITOR: Marek Paryż. EDITORIAL BOARD: Justyna Fruzińska, Izabella Kimak, Mirosław Miernik, Łukasz Muniowski, Jacek Partyka, Paweł Stachura. ADVISORY BOARD: Andrzej Dakowski, Jerzy Durczak, Joanna Durczak, Andrew S. Gross, Andrea O’Reilly Herrera, Jerzy Kutnik, John R. Leo, Zbigniew Lewicki, Eliud Martínez, Elżbieta Oleksy, Agata Preis-Smith, Tadeusz Rachwał, Agnieszka Salska, Tadeusz Sławek, Marek Wilczyński. REVIEWERS: Ewa Antoszek, Edyta Frelik, Elżbieta Klimek-Dominiak, Zofia Kolbuszewska, Tadeusz Pióro, Elżbieta Rokosz-Piejko, Małgorzata Rutkowska, Stefan Schubert, Joanna Ziarkowska. TYPESETTING AND COVER DESIGN: Miłosz Mierzyński. COVER IMAGE: Jerzy Durczak, “Vegas Options” from the series “Las Vegas.” By permission. www.flickr/photos/jurek_durczak/ ISSN 1733-9154, eISSN 2544-8781. Publisher: Polish Association for American Studies, Al. Niepodległości 22, 02-653 Warsaw, paas.org.pl. Print run: 110 copies. The printed version is the primary version of the journal. Printed by Sowa – Druk na życzenie, phone: +48 22 431 81 40; www.sowadruk.pl. Table of Contents. ARTICLES: Justyna Włodarczyk, Beyond Bizarre: Nature, Culture and the Spectacular Failure of B.F. Skinner’s Pigeon-Guided Missiles; Małgorzata Olsza, Feminist (and/as) Alternative Media Practices in Women’s Underground Comix in the 1970s; Arkadiusz Misztal, Dream Time, Modality, and Counterfactual Imagination in Thomas Pynchon’s Mason & Dixon; Ewelina Bańka, Walking with the Invisible: The Politics of Border Crossing in Luis Alberto Urrea’s The Devil’s Highway: A True Story -

Tor: the Second-Generation Onion Router (2014 DRAFT V1)

Tor: The Second-Generation Onion Router (2014 DRAFT v1). Roger Dingledine, The Free Haven Project, [email protected]; Nick Mathewson, The Free Haven Project, [email protected]; Steven Murdoch, Computer Laboratory, University of Cambridge, [email protected]; Paul Syverson, Naval Research Lab, [email protected]. Abstract: We present Tor, a circuit-based low-latency anonymous communication service. This Onion Routing system addresses limitations in the earlier design by adding perfect forward secrecy, congestion control, directory servers, integrity checking, configurable exit policies, anticensorship features, guard nodes, application- and user-selectable stream isolation, and a practical design for location-hidden services via rendezvous points. Tor is deployed on the real-world Internet, requires no special privileges or kernel modifications, requires little synchronization or coordination between nodes, and provides a reasonable tradeoff between anonymity, usability, and efficiency. Perfect forward secrecy: In the original Onion Routing design, a single hostile node could record traffic and later compromise successive nodes in the circuit and force them to decrypt it. Rather than using a single multiply encrypted data structure (an onion) to lay each circuit, Tor now uses an incremental or telescoping path-building design, where the initiator negotiates session keys with each successive hop in the circuit. Once these keys are deleted, subsequently compromised nodes cannot decrypt old traffic. As a side benefit, onion replay detection is no longer necessary, and the process of building circuits is more reliable, since the initiator knows when a hop fails and can then try extending to a new node. -
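The telescoping design described in this excerpt, with one session key per hop and keys discarded afterwards, can be sketched as a toy model. This is not Tor's actual cell format or cryptography: the `Relay` class, the SHA-256 counter keystream, and the `negotiate` stand-in for a per-hop key agreement are all assumptions made for illustration.

```python
import hashlib
import secrets

def keystream(key: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256 over key || counter (illustrative, not Tor's cipher)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])

def xor_layer(key: bytes, data: bytes) -> bytes:
    """Add or remove one layer; XOR with the same keystream is its own inverse."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))

class Relay:
    """Hypothetical relay holding one short-lived session key."""
    def __init__(self, name: str):
        self.name = name
        self.session_key = None

    def negotiate(self) -> bytes:
        # Stand-in for a per-hop key agreement; a real handshake never
        # transmits the key itself.
        self.session_key = secrets.token_bytes(32)
        return self.session_key

    def peel(self, cell: bytes) -> bytes:
        return xor_layer(self.session_key, cell)

    def forget_key(self) -> None:
        # After deletion, a later compromise of this relay reveals nothing
        # about recorded traffic.
        self.session_key = None

def build_circuit(relays):
    """Extend the circuit one hop at a time, collecting one key per hop."""
    return [relay.negotiate() for relay in relays]

def wrap(keys, payload: bytes) -> bytes:
    """Apply the exit relay's layer first so the entry relay's layer is outermost."""
    for key in reversed(keys):
        payload = xor_layer(key, payload)
    return payload

relays = [Relay("entry"), Relay("middle"), Relay("exit")]
keys = build_circuit(relays)
message = b"GET / HTTP/1.0"
cell = wrap(keys, message)
for relay in relays:          # each hop removes exactly one layer
    cell = relay.peel(cell)
assert cell == message
for relay in relays:
    relay.forget_key()
```

Because the layers here are plain XOR, the order of peeling does not matter mathematically; the ordering is kept only to mirror the hop-by-hop structure the excerpt describes.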

Peer to Peer Resources

Peer To Peer Resources By Marcus P. Zillman, M.S., A.M.H.A. Executive Director – Virtual Private Library [email protected] This January 2009 column, Peer To Peer Resources, is a comprehensive list of peer to peer resources, sources and sites available over the Internet and the World Wide Web, including peer to peer, file sharing, and grid and matrix search engines. The list below is taken from the peer to peer research section of my Subject Tracer™ Information Blog titled Deep Web Research and is constantly updated with Subject Tracer™ bots at the following URL: http://www.DeepWebResearch.info/ These resources and sources will help you to discover the many pathways available to you through the Internet for obtaining and locating peer to peer sources in today's rapidly changing data procurement society. This is a MUST information keeper for those seeking the latest and greatest peer to peer resources! Peer To Peer Resources: ALPINE Network - SourceForge: Project http://sourceforge.net/projects/alpine/ An Efficient Scheme for Query Processing on Peer-to-Peer Networks http://aeolusres.homestead.com/files/index.html angrycoffee.com http://www.AngryCoffee.com/ Azureus - Vuze Java Bittorrent Client http://azureus.sourceforge.net/ 1 January 2009 Zillman Column – Peer To Peer Resources http://www.zillmancolumns.com/ [email protected] © 2009 Marcus P. Zillman, M.S., A.M.H.A. BadBlue http://badblue.com/ Between Rhizomes and Trees: P2P Information Systems by Bryn Loban http://firstmonday.org/htbin/cgiwrap/bin/ojs/index.php/fm/article/view/1182 -

Analysing the MUTE Anonymous File-Sharing System Using the Pi-Calculus

Analysing the MUTE Anonymous File-Sharing System Using the Pi-calculus. Tom Chothia, CWI, Kruislaan 413, 1098 SJ, Amsterdam, The Netherlands. Abstract. This paper gives details of a formal analysis of the MUTE system for anonymous file-sharing. We build pi-calculus models of a node that is innocent of sharing files, a node that is guilty of file-sharing and of the network environment. We then test to see if an attacker can distinguish between a connection to a guilty node and a connection to an innocent node. A weak bi-simulation between every guilty network and an innocent network would be required to show possible innocence. We find that such a bi-simulation cannot exist. The point at which the bi-simulation fails leads directly to a previously undiscovered attack on MUTE. We describe a fix for the MUTE system that involves using authentication keys as the nodes’ pseudo identities and give details of its addition to the MUTE system. 1 Introduction. MUTE is one of the most popular anonymous peer-to-peer file-sharing systems. Peers, or nodes, using MUTE will connect to a small number of other, known nodes; only the direct neighbours of a node know its IP address. Communication with remote nodes is provided by sending messages hop-to-hop across this overlay network. Routing messages in this way allows MUTE to trade efficient routing for anonymity. There is no way to find the IP address of a remote node, and direct neighbours can achieve a level of anonymity by claiming that they are just forwarding requests and files for other nodes. -

Rhyming Dictionary

Merriam-Webster's Rhyming Dictionary. Merriam-Webster, Incorporated, Springfield, Massachusetts. A GENUINE MERRIAM-WEBSTER: The name Webster alone is no guarantee of excellence. It is used by a number of publishers and may serve mainly to mislead an unwary buyer. Merriam-Webster™ is the name you should look for when you consider the purchase of dictionaries or other fine reference books. It carries the reputation of a company that has been publishing since 1831 and is your assurance of quality and authority. Copyright © 2002 by Merriam-Webster, Incorporated. Library of Congress Cataloging-in-Publication Data: Merriam-Webster's rhyming dictionary. p. cm. ISBN 0-87779-632-7. 1. English language-Rhyme-Dictionaries. I. Title: Rhyming dictionary. II. Merriam-Webster, Inc. PE1519 .M47 2002 423'.1-dc21 2001052192. All rights reserved. No part of this book covered by the copyrights hereon may be reproduced or copied in any form or by any means (graphic, electronic, or mechanical, including photocopying, taping, or information storage and retrieval systems) without written permission of the publisher. Printed and bound in the United States of America. 234RRD/H05040302. Explanatory Notes: MERRIAM-WEBSTER's RHYMING DICTIONARY is a listing of words grouped according to the way they rhyme. The words are drawn from Merriam-Webster's Collegiate Dictionary. Though many uncommon words can be found here, many highly technical or obscure words have been omitted, as have words whose only meanings are vulgar or offensive. Rhyming sound: Words in this book are gathered into entries on the basis of their rhyming sound. The rhyming sound is the last part of the word, from the vowel sound in the last stressed syllable to the end of the word. -

Air Force Institute of Technology

A TAXONOMY FOR AND ANALYSIS OF ANONYMOUS COMMUNICATIONS NETWORKS. Dissertation. Douglas Kelly, GG-14. AFIT/DCS/ENG/09-08. Air Force Institute of Technology, Wright-Patterson Air Force Base, Ohio. APPROVED FOR PUBLIC RELEASE; DISTRIBUTION UNLIMITED. The views expressed in this dissertation are those of the author and do not reflect the official policy or position of the United States Air Force, Department of Defense, or the U.S. Government. Presented to the Faculty, Graduate School of Engineering and Management, Air Force Institute of Technology, Air University, Air Education and Training Command, in partial fulfillment of the requirements for the degree of Doctor of Philosophy. Douglas J. Kelly, BS, MS, MBA. March 2009. Approved: Dr. Richard A. Raines (Chairman), signed 16 Mar 09; Dr. Barry E. Mullins (Member), signed 16 Mar 09; Dr. Rusty O. Baldwin (Member), signed 16 Mar 09; Dr. Michael R. Grimaila (Member), signed 16 Mar 09. Accepted: Dr. M. U. Thomas, Dean, Graduate School of Engineering and Management, signed 18 Mar 09. Abstract: Any entity operating in cyberspace is susceptible to debilitating attacks. With cyber attacks intended to gather intelligence and disrupt communications rapidly replacing the threat of conventional and nuclear attacks, a new age of warfare is at hand. -

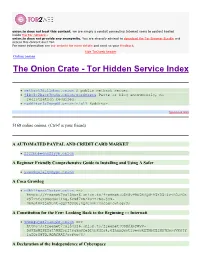

The Onion Crate - Tor Hidden Service Index Protected Onions Add New

onion.to does not host this content; we are simply a conduit connecting Internet users to content hosted inside the Tor network.. onion.to does not provide any anonymity. You are strongly advised to download the Tor Browser Bundle and access this content over Tor. For more information see our website for more details and send us your feedback. hide Tor2web header Online onions The Onion Crate - Tor Hidden Service Index Protected onions Add new nethack3dzllmbmo.onion A public nethack server. j4ko5c2kacr3pu6x.onion/wordpress Paste or blog anonymously, no registration required. redditor3a2spgd6.onion/r/all Redditor. Sponsored links 5168 online onions. (Ctrl-f is your friend) A AUTOMATED PAYPAL AND CREDIT CARD MARKET 2222bbbeonn2zyyb.onion A Beginner Friendly Comprehensive Guide to Installing and Using A Safer yuxv6qujajqvmypv.onion A Coca Growlog rdkhliwzee2hetev.onion ==> https://freenet7cul5qsz6.onion.to/freenet:USK@yP9U5NBQd~h5X55i4vjB0JFOX P97TAtJTOSgquP11Ag,6cN87XSAkuYzFSq-jyN- 3bmJlMPjje5uAt~gQz7SOsU,AQACAAE/cocagrowlog/3/ A Constitution for the Few: Looking Back to the Beginning ::: Internati 5hmkgujuz24lnq2z.onion ==> https://freenet7cul5qsz6.onion.to/freenet:USK@kpFWyV- 5d9ZmWZPEIatjWHEsrftyq5m0fe5IybK3fg4,6IhxxQwot1yeowkHTNbGZiNz7HpsqVKOjY 1aZQrH8TQ,AQACAAE/acftw/0/ A Declaration of the Independence of Cyberspace ufbvplpvnr3tzakk.onion ==> https://freenet7cul5qsz6.onion.to/freenet:CHK@9NuTb9oavt6KdyrF7~lG1J3CS g8KVez0hggrfmPA0Cw,WJ~w18hKJlkdsgM~Q2LW5wDX8LgKo3U8iqnSnCAzGG0,AAIC-- 8/Declaration-Final%5b1%5d.html A Dumps Market