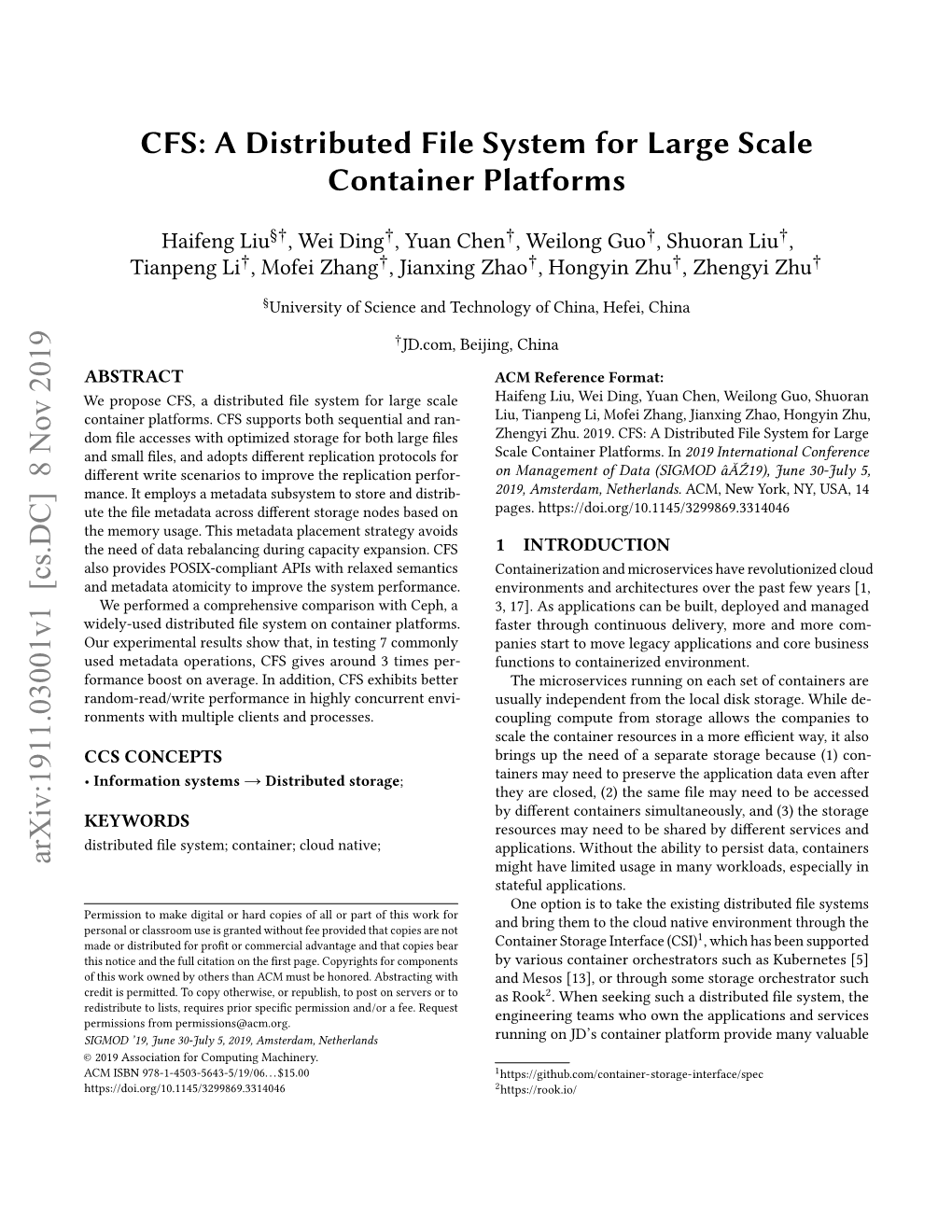

CFS: a Distributed File System for Large Scale Container Platforms

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

ACID Compliant Distributed Key-Value Store

ACID Compliant Distributed Key-Value Store. Lakshmi Narasimhan Seshan, Rajesh Jalisatgi, Vijaeendra Simha G A. Abstract: Built a fault-tolerant and strongly-consistent key/value storage service using the existing RAFT implementation. Add ACID transaction capability to the service using a 2PC variant. Build on the raftexample[1] (available as part of etcd) to add atomic transactions. This supports sharding of keys and concurrent transactions on sharded KV Store. By Implementing a transactional distributed KV store, we gain working knowledge of different protocols and complexities to make it work together. Keywords: ACID, Key-Value, 2PC, sharding, 2PL Transaction. I. INTRODUCTION Distributed KV stores have become a norm with the advent of microservices architecture and the NoSql revolution. Initially KV Store discarded ACID properties [...] thought that would be easier to implement. All components run http and grpc endpoints. Http endpoints are for debugging/configuration. Grpc Endpoints are used for communication between components. Replica Manager (RM): RM is the service discovery part of the system. RM is initiated with each of the Replica Servers available in the system. Users have to instantiate the RM with the number of shards that the user wants the key to be split into. Currently we cannot dynamically change while RM is running. New Replica Servers cannot be added or removed from once RM is up and running. However Replica Servers can go down and come back and RM can detect this. Each Shard Leader updates the information to RM, while the DTM leader updates its information to the RM.

A Searchable-By-Content File System

A Searchable-by-Content File System. Srinath Sridhar, Jeffrey Stylos and Noam Zeilberger. May 2, 2005. Abstract: Indexed searching of desktop documents has recently become popularized by applications from Google, AOL, Yahoo!, MSN and others. However, each of these is an application separate from the file system. In our project, we explore the performance tradeoffs and other issues encountered when implementing file indexing inside the file system. We developed a novel virtual-directory interface to allow application and user access to the index. In addition, we found that by deferring indexing slightly, we could coalesce file indexing tasks to reduce total work while still getting good performance. 1 Introduction. Searching has always been one of the fundamental problems of computer science, and from their beginnings computer systems were designed to support and when possible automate these searches. This support ranges from the simple-minded (e.g., text editors that allow searching for keywords) to the extremely powerful (e.g., relational database query languages). But for the mundane task of performing searches on users’ files, available tools still leave much to be desired. For the most part, searches are limited to queries on a directory namespace: Unix shell wildcard patterns are useful for matching against small numbers of files, GNU locate for finding files in the directory hierarchy. Searches on file data are much more difficult. While from a logical point of view, grep combined with the Unix pipe mechanisms provides a nearly universal solution to content-based searches, realistically it can be used only for searching within small numbers of (small-sized) files, because of its inherent linear complexity.

A Semantic File System for Integrated Product Data Management

Citation for published version: Eck, O & Schaefer, D 2011, 'A Semantic File System for Integrated Product Data Management', Advanced Engineering Informatics, vol. 25, no. 2, pp. 177-184. https://doi.org/10.1016/j.aei.2010.08.005 DOI: 10.1016/j.aei.2010.08.005. Publication date: 2011. Document Version: Publisher's PDF, also known as Version of record. Link to publication: University of Bath. Alternative formats: If you require this document in an alternative format, please contact: [email protected] General rights: Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights. Take down policy: If you believe that this document breaches copyright please contact us providing details, and we will remove access to the work immediately and investigate your claim. Download date: 02. Oct. 2021. Advanced Engineering Informatics 25 (2011) 177–184. Contents lists available at ScienceDirect. Advanced Engineering Informatics journal homepage: www.elsevier.com/locate/aei. A semantic file system for integrated product data management. Oliver Eck (a), Dirk Schaefer (b). (a) Department of Computer Science, HTWG Konstanz, Germany. (b) Systems Realization Laboratory, Woodruff School of Mechanical Engineering, Georgia Institute of Technology, Atlanta, GA, USA. Article history: Received 31 October 2009; received in revised form 10 June 2010; accepted 17 August 2010. Abstract: A mechatronic system is a synergistic integration of mechanical, electrical, electronic and software technologies into electromechanical systems. Unfortunately, mechanical, electrical, and software data are often handled in separate Product Data Management (PDM) systems with no automated sharing of data between them or links between their data.

gRPC - A Solution for RPCs by Google, Distributed Systems Seminar at Charles University in Prague, Nov 2016, Jan Tattermusch - gRPC Software Engineer

gRPC - A solution for RPCs by Google. Distributed Systems Seminar at Charles University in Prague, Nov 2016. Jan Tattermusch - gRPC Software Engineer. About me: ● Software Engineer at Google (since 2013) ● Working on gRPC since Q4 2014 ● Graduated from Charles University (2010). Contacts: ● jtattermusch on GitHub ● Feedback to [email protected] @grpcio. Motivation: gRPC. Google has an internal RPC system, called Stubby ● All production applications use RPCs ● Over 10^10 RPCs per second in total ● 4 generations over 13 years (since 2003) ● APIs for C++, Java, Python, Go. What's missing: ● Not suitable for external use (tight coupling with internal tools & infrastructure) ● Limited language & platform support ● Proprietary protocol and security ● No mobile support. What's gRPC: ● HTTP/2 based RPC framework ● Secure, Performant, Multiplatform, Open. Multiplatform: ● Idiomatic APIs in popular languages (C++, Go, Java, C#, Node.js, Ruby, PHP, Python) ● Supports mobile devices (Android Java, iOS Obj-C) ● Linux, Windows, Mac OS X ● (web browser support in development). OpenSource: ● developed fully in open on GitHub (https://github.com/grpc/). Use Cases: Build distributed services (microservices) ● In public/private cloud ● Google's own services. Client-server communication ● Mobile ● Web ● Also: Desktop, embedded devices, IoT. Access APIs (Google, OSS). Key Features: ● Streaming, Bidirectional streaming ● Built-in security and authentication ○ SSL/TLS, OAuth, JWT access ● Layering on top of HTTP/2

Database Software Market: The Long-Awaited Shake-up

Equity Research: Technology, Media, & Communications | Enterprise and Cloud Infrastructure. March 22, 2019. Industry Report. Database Software Market: The Long-Awaited Shake-up. Jason Ader +1 617 235 7519 [email protected]; Billy Fitzsimmons +1 312 364 5112 [email protected]; Naji +1 212 245 6508 [email protected]. Please refer to important disclosures on pages 70 and 71. Analyst certification is on page 70. William Blair or an affiliate does and seeks to do business with companies covered in its research reports. As a result, investors should be aware that the firm may have a conflict of interest that could affect the objectivity of this report. This report is not intended to provide personal investment advice. The opinions and recommendations herein do not take into account individual client circumstances, objectives, or needs and are not intended as recommendations of particular securities, financial instruments, or strategies to particular clients. The recipient of this report must make its own independent decisions regarding any securities or financial instruments mentioned herein. William Blair. Contents: Key Findings; Introduction; Database Market History; Market Definitions

Implementing and Testing CockroachDB on Kubernetes

Implementing and testing CockroachDB on Kubernetes. Albert Kasperi Iho. Supervisors: Ignacio Coterillo Coz, Ricardo Brito Da Rocha. Abstract: This report will describe implementing CockroachDB on Kubernetes, a summer student project done in 2019. The report addresses the implementation, monitoring, testing and results of the project. In the implementation and monitoring parts, a closer look to the full pipeline will be provided, with the introduction of all the frameworks used and the biggest problems that were faced during the project. After that, the report describes the testing process of CockroachDB, what tools were used in the testing and why. The results of testing will be presented after testing with the conclusion of results. Keywords: CockroachDB; Kubernetes; benchmark. Contents: 1 Project specification; 2 Implementation (2.1 Kubernetes, 2.2 CockroachDB, 2.3 Setting up Kubernetes in CERN environment); 3 Monitoring (3.1 Prometheus, 3.2 Prometheus Operator, 3.3 InfluxDB, 3.4 Grafana, 3.5 Scraping and forwarding metrics from the Kubernetes cluster); 4 Testing CockroachDB on Kubernetes (4.1 SQLAlchemy, 4.2 Pgbench, 4.3 CockroachDB workload, 4.4 Benchmarking CockroachDB); 5 Test results (5.1 SQLAlchemy, 5.2 Pgbench, 5.3 CockroachDB workload, 5.4 Conclusion); 6 Acknowledgements. 1 Project specification: The core goal of the project was taking a look into implementing a database framework on top of Kubernetes, the challenges in implementation and automation possibilities. Final project pipeline included a CockroachDB cluster running in Kubernetes with Prometheus monitoring both of them.

Semantic File Retrieval in File Systems Using Virtual Directories

Semantic File Retrieval in File Systems using Virtual Directories. Prashanth Mohan, Raghuraman, Venkateswaran S and Dr. Arul Siromoney. {prashmohan, raaghum2222, wenkat.s}@gmail.com and [email protected] Abstract: Hard Disk capacity is no longer a problem. However, increasing disk capacity has brought with it a new problem, the problem of locating files. Retrieving a document from a myriad of files and directories is no easy task. Industry solutions are being created to address this shortcoming. We propose to create an extendable UNIX based File System which will integrate searching as a basic function of the file system. The File System will provide Virtual Directories which list the results of a query. The contents of the Virtual Directory are formed at runtime. Although the Virtual Directory is used mainly to facilitate the searching of files, it can also be used by plugins to interpret other queries. Index Terms: Semantic File System, Virtual Directory, Meta Data. [...] ‘/home/user/docs/univ/project’ directory lists the file. The ‘Database File System’ [2], encounters this problem by listing recursively all the files within each directory. Thereby a funneling of the files is done by moving through sub-directories. The objective of our project (henceforth referred to as SemFS) is to give the user the choice between a traditional hierarchical mode of access and a query based mechanism which will be presented using virtual directories [1], [5]. These virtual directories do not exist on the disk as separate files but will be created in memory at runtime, as per the directory name.

Privacy Engineering for Social Networks

UCAM-CL-TR-825. Technical Report, ISSN 1476-2986, Number 825. Computer Laboratory. Privacy engineering for social networks. Jonathan Anderson. December 2012. 15 JJ Thomson Avenue, Cambridge CB3 0FD, United Kingdom, phone +44 1223 763500, http://www.cl.cam.ac.uk/ © 2012 Jonathan Anderson. This technical report is based on a dissertation submitted July 2012 by the author for the degree of Doctor of Philosophy to the University of Cambridge, Trinity College. Technical reports published by the University of Cambridge Computer Laboratory are freely available via the Internet: http://www.cl.cam.ac.uk/techreports/ In this dissertation, I enumerate several privacy problems in online social networks (OSNs) and describe a system called Footlights that addresses them. Footlights is a platform for distributed social applications that allows users to control the sharing of private information. It is designed to compete with the performance of today’s centralised OSNs, but it does not trust centralised infrastructure to enforce security properties. Based on several socio-technical scenarios, I extract concrete technical problems to be solved and show how the existing research literature does not solve them. Addressing these problems fully would fundamentally change users’ interactions with OSNs, providing real control over online sharing. I also demonstrate that today’s OSNs do not provide this control: both user data and the social graph are vulnerable to practical privacy attacks. Footlights’ storage substrate provides private, scalable, sharable storage using untrusted servers. Under realistic assumptions, the direct cost of operating this storage system is less than one US dollar per user-year.

Modern Infrastructure For Dummies®, Dell EMC #GetModern Special Edition

Modern Infrastructure Dell EMC #GetModern Special Edition by Lawrence C. Miller These materials are © 2017 John Wiley & Sons, Ltd. Any dissemination, distribution, or unauthorized use is strictly prohibited. Modern Infrastructure For Dummies®, Dell EMC #GetModern Special Edition Published by: John Wiley & Sons Singapore Pte Ltd., 1 Fusionopolis Walk, #07-01 Solaris South Tower, Singapore 138628, www.wiley.com © 2017 by John Wiley & Sons Singapore Pte Ltd. Registered Office John Wiley & Sons Singapore Pte Ltd., 1 Fusionopolis Walk, #07-01 Solaris South Tower, Singapore 138628 All rights reserved No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except as permitted by the Copyright Act of Singapore 1987, without the prior written permission of the Publisher. For information about how to apply for permission to reuse the copyright material in this book, please see our website http://www.wiley.com/go/permissions. Trademarks: Wiley, For Dummies, the Dummies Man logo, The Dummies Way, Dummies.com, Making Everything Easier, and related trade dress are trademarks or registered trademarks of John Wiley & Sons, Inc. and/or its affiliates in the United States and other countries, and may not be used without written permission. Dell EMC and the Dell EMC logo are registered trademarks of Dell EMC. All other trademarks are the property of their respective owners. John Wiley & Sons Singapore Pte Ltd., is not associated with any product or vendor mentioned in this book. LIMIT OF LIABILITY/DISCLAIMER OF WARRANTY: WHILE THE PUBLISHER AND AUTHOR HAVE USED THEIR BEST EFFORTS IN PREPARING THIS BOOK, THEY MAKE NO REPRESENTATIONS OR WARRANTIES WITH RESPECT TO THE ACCURACY OR COMPLETENESS OF THE CONTENTS OF THIS BOOK AND SPECIFICALLY DISCLAIM ANY IMPLIED WARRANTIES OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE.

Orion File System: File-Level Host-Based Virtualization

Orion File System: File-level Host-based Virtualization. Amruta Joshi, Faraz Shaikh, Sapna Todwal (each: Pune Institute of Computer Technology, Dhankavadi, Pune 411043, India, 020-2437-1101, [email protected]). Abstract: The aim of Orion is to implement a solution that provides file-level host-based virtualization that provides for better aggregation of content/information based on semantics and properties. File-system organization today very closely mirrors storage paradigms rather than user-access paradigms and semantic grouping. All file-system hierarchies are containers that are expressed based on their physical presence (a separate drive letter on Windows, or a particular mount point based on the volume in Unix). We have implemented a solution that will allow users to organize their files [...] The automatic indexing of files and directories is called "semantic" because user programmable transducers use information about these semantics of files to extract the properties for indexing. The extracted properties are then stored in a relational database so that queries can be run against them. Experimental results from our semantic file system implementation ORION show that semantic file systems present a more effective storage abstraction than the traditional tree structured file systems for information sharing, storage and retrieval.

The Sile Model — a Semantic File System Infrastructure for the Desktop

The Sile Model: A Semantic File System Infrastructure for the Desktop. Bernhard Schandl and Bernhard Haslhofer, University of Vienna, Department of Distributed and Multimedia Systems, {bernhard.schandl,bernhard.haslhofer}@univie.ac.at Abstract. With the increasing storage capacity of personal computing devices, the problems of information overload and information fragmentation become apparent on users’ desktops. For the Web, semantic technologies aim at solving this problem by adding a machine-interpretable information layer on top of existing resources, and it has been shown that the application of these technologies to desktop environments is helpful for end users. Certain characteristics of the Semantic Web architecture that are commonly accepted in the Web context, however, are not desirable for desktops; e.g., incomplete information, broken links, or disruption of content and annotations. To overcome these limitations, we propose the sile model, an intermediate data model that combines attributes of the Semantic Web and file systems. This model is intended to be the conceptual foundation of the Semantic Desktop, and to serve as underlying infrastructure on which applications and further services, e.g., virtual file systems, can be built. In this paper, we present the sile model, discuss Semantic Web vocabularies that can be used in the context of this model to annotate desktop data, and analyze the performance of typical operations on a virtual file system implementation that is based on this model. 1 Introduction. A large amount of information is stored on personal desktops. We use our personal computing devices, both mobile and stationary, to communicate, to write documents, to organize multimedia content, to search for and retrieve information, and much more.

How Financial Service Companies Can Successfully Migrate Critical Applications to the Cloud

How Financial Service Companies Can Successfully Migrate Critical Applications to the Cloud. Chapter 1: Why every bank should aspire to be the Google of FinServe. Chapter 2: Reducing latency and other delays that are detrimental to FSIs. Chapter 3: A look to the future of transaction services in the face of modernization. Copyright © 2021 RTInsights. All rights reserved. All other trademarks are the property of their respective companies. The information contained in this publication has been obtained from sources believed to be reliable. NACG LLC and RTInsights disclaim all warranties as to the accuracy, completeness, or adequacy of such information and shall have no liability for errors, omissions or inadequacies in such information. The information expressed herein is subject to change without notice. Chapter 1: Why every bank should aspire to be the Google of FinServe. Introduction: Fintech companies are disrupting the staid world of traditional banking. Financial services institutions (FSIs) are trying to keep pace, be competitive, and become relevant by modernizing operations by leveraging the same technologies as the aggressive fintech startups. Such efforts include a move to cloud and the increased use of data to derive real-time insights for decision-making and automation. While businesses across most industries are making similar moves, FSIs have different requirements than most companies and thus must address special challenges. For example, keeping pace with changing customer desires and market conditions is driving the need for more flexible and highly scalable options when it comes to developing, deploying, maintaining, and updating applications.