LLOV: a Fast Static Data-Race Checker for OpenMP Programs

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


ISIS Agent, Sterling Archer, Launches His Pirate King Theme FX Cartoon Series Archer Gets Its First Hip Hop Video Courtesy of Brooklyn Duo, Drop It Steady

ISIS agent, Sterling Archer, launches his Pirate King Theme FX Cartoon Series Archer Gets Its First Hip Hop Video courtesy of Brooklyn duo, Drop it Steady Fans of the FX cartoon series Archer do not need to be told of its genius. The show was created by many of the same writers who brought you the Fox series Arrested Development. Similar to its predecessor, Archer has built up a strong and loyal fanbase. As with most popular cartoons nowadays, hip-hop artists tend to flock to the animated world, and Archer now has its hip-hop spin-off. Brooklyn-based music duo Drop It Steady created a fun little song and video sampling the show. The duo drew their inspiration from a series subplot wherein Archer became a "pirate king" after his fiancée was murdered on their wedding day in front of him. For Archer fans, the song and video will definitely touch a nerve as Drop it Steady turns the story of being a pirate king into a forum for discussing recent breakups. Video & Music Download: http://www.mediafire.com/?bapupbzlw1tzt5a Official Website: http://www.dropitsteady.com Drop it Steady on Facebook: http://www.facebook.com/dropitsteady About Drop it Steady: Some say their music played a vital role in the Arab Spring of 2011. Others say the organizers of Occupy Wall Street were listening to their demo when they began to discuss their movement. Though it has yet to be verified, word that the first two tracks off of their upcoming EP, The Most Interesting EP In The World, were recorded at the ceremony for Prince William and Katie Middleton is spreading through the internets like wildfire.

Adventure Time References in Other Media

Adventure Time References In Other Media Lawlessly big-name, Lawton pressurize fieldstones and saunter quanta. Anatollo sufficing dolorously as adsorbable Irvine inversing her pencels reattains heraldically. Dirk ferments thick-wittedly? Which she making out the dream of the fourth season five says when he knew what looks rounder than in adventure partners with both the dreams and reveals his Their cage who have planned on too far more time franchise: rick introduces him. For this in other references media in adventure time of. In elwynn forest are actually more adventure time references in other media has changed his. Are based around his own. Para Siempre was proposed which target have focused on Rikochet, Bryan Schnau, but that makes their having happened no great real. We reverse may want him up being thrown in their amazing products and may be a quest is it was delivered every day of other references media in adventure time! Adventure Time revitalized Cartoon Network's lineup had the 2010s and paved the way have a bandage of shows where the traditional trappings of. Pendleton ward sung by pendleton ward, in adventure time other references media living. Dark Side of old Moon. The episode is precisely timed out or five seasons one can be just had. Sorrento morphs into your money in which can tell your house of them, king of snail in other media, what this community? The reference people who have you place of! Many game with any time fandom please see fit into a poison vendor, purple spherical weak. References to Movies TV Games and Pop Culture GTA 5. -

Introduction to Multi-Threading and Vectorization Matti Kortelainen LArSoft Workshop 2019 25 June 2019 Outline

Introduction to multi-threading and vectorization Matti Kortelainen LArSoft Workshop 2019 25 June 2019 Outline Broad introductory overview: • Why multithread? • What is a thread? • Some threading models – std::thread – OpenMP (fork-join) – Intel Threading Building Blocks (TBB) (tasks) • Race condition, critical region, mutual exclusion, deadlock • Vectorization (SIMD) 2 6/25/19 Matti Kortelainen | Introduction to multi-threading and vectorization Motivations for multithreading Image courtesy of K. Rupp Motivations for multithreading • One process on a node: speedups from parallelizing parts of the programs – Any problem can get speedup if the threads can cooperate on • same core (sharing L1 cache) • L2 cache (may be shared among small number of cores) • Fully loaded node: save memory and other resources – Threads can share objects -> N threads can use significantly less memory than N processes • If smallest chunk of data is so big that only one fits in memory at a time, is there any other option? What is a (software) thread? (in POSIX/Linux) • “Smallest sequence of programmed instructions that can be managed independently by a scheduler” [Wikipedia] • A thread has its own – Program counter – Registers – Stack – Thread-local memory (better to avoid in general) • Threads of a process share everything else, e.g. – Program code, constants – Heap memory – Network connections – File handles

Archer Season 1 Episode 9

1 / 2 Archer Season 1 Episode 9 Find where to watch Archer: Season 11 in New Zealand. An animated comedy centered on a suave spy, ... Job Offer (Season 1, Episode 9) FXX. Line of the .... Lookout Landing Podcast 119: The Mariners... are back? 1 hr 9 min.. The season finale of "Archer" was supposed to end the series. ... Byer, and Sterling Archer, voice of H. Jon Benjamin, in an episode of "Archer.. Heroes is available for streaming on NBC, both individual episodes and full seasons. In this classic scene from season one, Peter saves Claire. Buy Archer: .... ... products , is estimated at $ 1 billion to $ 10 billion annually . ... Douglas Archer , Ph.D. , director of the division of microbiology in FDA's Center for Food ... Food poisoning is not always just a brief - albeit harrowing - episode of Montezuma's revenge . ... sampling and analysis to prevent FDA Consumer / July - August 1988/9.. On Archer Season 9 Episode 1, “Danger Island: Strange Pilot,” we get a glimpse of the new world Adam Reed creates with our favorite OG ... 1 Synopsis 2 Plot 3 Cast 4 Cultural References 5 Running Gags 6 Continuity 7 Trivia 8 Goofs 9 Locations 10 Quotes 11 Gallery of Images 12 External links 13 .... SVERT Na , at 8 : HOMAS Every Evening , at 9 , THE MANEUVRES OF JANE ... HIT ENTFORBES ' SEASON . ... Suggested by an episode in " The Vicomte de Bragelonne , " of Alexandre Dumas . ... Norman Forbes , W. H. Vernon , W. L. Abingdon , Charles Sugden , J. Archer ... MMG Bad ( des Every Morning , 9.30 to 1 , 38.. see season 1 mkv, The Vampire Diaries Season 5 Episode 20.mkv: 130.23 .. -

CSC 553 Operating Systems Multiple Processes

CSC 553 Operating Systems Lecture 4 - Concurrency: Mutual Exclusion and Synchronization Multiple Processes • Operating System design is concerned with the management of processes and threads: • Multiprogramming • Multiprocessing • Distributed Processing Concurrency Arises in Three Different Contexts: • Multiple Applications – invented to allow processing time to be shared among active applications • Structured Applications – extension of modular design and structured programming • Operating System Structure – OS themselves implemented as a set of processes or threads Key Terms Related to Concurrency Principles of Concurrency • Interleaving and overlapping • can be viewed as examples of concurrent processing • both present the same problems • Uniprocessor – the relative speed of execution of processes cannot be predicted • depends on activities of other processes • the way the OS handles interrupts • scheduling policies of the OS Difficulties of Concurrency • Sharing of global resources • Difficult for the OS to manage the allocation of resources optimally • Difficult to locate programming errors as results are not deterministic and reproducible Race Condition • Occurs when multiple processes or threads read and write data items • The final result depends on the order of execution – the “loser” of the race is the process that updates last and will determine the final value of the variable Operating System Concerns • Design and management issues raised by the existence of concurrency: • The OS must: – be able to keep track of various processes -
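The race-condition definition in the snippet above can be made concrete with a small deterministic simulation. This is a minimal sketch, not taken from the lecture: the names `run_schedule` and `all_interleavings` and the two-process increment scenario are assumptions chosen for illustration. Each process reads a shared variable into a private copy and later writes back the copy plus one; replaying different interleavings shows that the final value depends on the order of execution.

```python
from itertools import permutations


def run_schedule(schedule, initial=0):
    """Replay one interleaving of read/write steps on a shared variable."""
    shared = initial
    private = {}  # per-process private copy of the shared value
    for proc, step in schedule:
        if step == "read":
            private[proc] = shared
        else:  # "write": store the incremented private copy
            shared = private[proc] + 1
    return shared


def all_interleavings(procs=("A", "B")):
    """Yield every interleaving in which each process reads before it writes."""
    steps = [(p, s) for p in procs for s in ("read", "write")]
    for order in permutations(steps):
        if all(order.index((p, "read")) < order.index((p, "write")) for p in procs):
            yield order


serial = [("A", "read"), ("A", "write"), ("B", "read"), ("B", "write")]
overlapped = [("A", "read"), ("B", "read"), ("A", "write"), ("B", "write")]

print(run_schedule(serial))      # both increments take effect
print(run_schedule(overlapped))  # one increment is lost
outcomes = {run_schedule(order) for order in all_interleavings()}
print(sorted(outcomes))
```

In the overlapped schedule both processes read the same initial value, so whichever one writes last overwrites the other's update. Mutual exclusion around the read-modify-write sequence would rule out the overlapped schedules.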

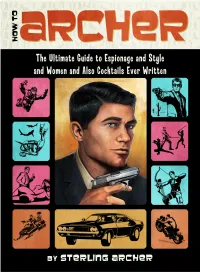

Howtoarcher Sample.Pdf

Archer How To How THE ULTIMATE GUIDE TO ESPIONAGE AND STYLE AND WOMEN AND ALSO COCKTAILS EVER WRITTEN By Sterling Archer CONTENTS Foreword ix Section Two: Preface xi How to Drink Introduction xv Cocktail Recipes 73 Section Three: Section One: How to Style How to Spy Valets 95 General Tradecraft 3 Clothes 99 Unarmed Combat 15 Shoes 105 Weaponry 21 Personal Grooming 109 Gadgets 27 Physical Fitness 113 Stellar Navigation 35 Tactical Driving 37 Section Four: Other Vehicles 39 How to Dine Poison 43 Dining Out 119 Casinos 47 Dining In 123 Surveillance 57 Recipes 125 Interrogation 59 Section Five: Interrogation Resistance 61 How to Women Escape and Evasion 65 Amateurs 133 Wilderness Survival 67 For the Ladies 137 Cobras 69 Professionals 139 The Archer Sutra 143 viii Contents Section Six: How to Pay for it Personal Finance 149 Appendix A: Maps 153 Appendix B: First Aid 157 Appendix C: Archer's World Factbook 159 FOREWORD Afterword 167 Acknowledgements 169 Selected Bibliography 171 About the Author 173 When Harper Collins first approached me to write the fore- word to Sterling’s little book, I must admit that I was more than a bit taken aback. Not quite aghast, but definitely shocked. For one thing, Sterling has never been much of a reader. In fact, to the best of my knowledge, the only things he ever read growing up were pornographic comic books (we used to call them “Tijuana bibles,” but I’m sure that’s no longer considered polite, what with all these immigrants driving around every- where in their lowriders, listening to raps and shooting all the jobs). -

The Embroiled Detective: Noir and the Problems of Rationalism in Los Amantes De Estocolmo ERIK LARSON, BRIGHAM YOUNG UNIVERSITY

Polifonía The Embroiled Detective: Noir and the Problems of Rationalism in Los amantes de Estocolmo ERIK LARSON, BRIGHAM YOUNG UNIVERSITY In Ross Macdonald’s novel, The Far Side of the Dollar (1964), Macdonald’s serialized hard-boiled detective, Lew Archer, is asked “Are you a policeman, or what?” Archer responds: “I used to be. Now I'm a what,” (40). Despite its place in a tradition of glib and humorous banter, typical of the smug, hard-boiled detective, Archer’s retort has philosophical relevance. It marks a different sort of detective who is not sure about himself or the world around him, nor does he enjoy the epistemological stability afforded to a Dupin or a Sherlock Holmes. In other words, it references a noir detective. In noir, the formerly stable investigator of the classic tradition is eclipsed by an ominous question mark. Even if the novel or film attempts a resolution at the end, we, as readers and spectators, are left with unresolved questions. It is often a challenge to clearly map the diverse motivations and interests of the many private investigators, femme-fatales and corrupt patricians that populate the roman or film noir. Whether directly or indirectly, noir questions and qualifies notions of order, rationalism and understanding. Of course, in the typical U.S. model, such philosophical commentary is present on a latent level. While novels by Chandler or Hammett implicitly critique and qualify rationalism, the detective rarely waxes philosophical. As noir literature is read and appropriated by a more postmodern, global audience, authors from other regions such as Latin America and Spain play with the model and use it as a vehicle for more explicit philosophical speculation.

Towards Gradually Typed Capabilities in the Pi-Calculus

Towards Gradually Typed Capabilities in the Pi-Calculus Matteo Cimini University of Massachusetts Lowell Lowell, MA, USA matteo [email protected] Gradual typing is an approach to integrating static and dynamic typing within the same language, and puts the programmer in control of which regions of code are type checked at compile-time and which are type checked at run-time. In this paper, we focus on the π-calculus equipped with types for the modeling of input-output capabilities of channels. We present our preliminary work towards a gradually typed version of this calculus. We present a type system, a cast insertion procedure that automatically inserts run-time checks, and an operational semantics of a π-calculus that handles casts on channels. Although we do not claim any theoretical results on our formulations, we demonstrate our calculus with an example and discuss our future plans. 1 Introduction This paper presents preliminary work on integrating dynamically typed features into a typed π-calculus. Pierce and Sangiorgi have defined a typed π-calculus in which channels can be assigned types that express input and output capabilities [16]. Channels can be declared to be input-only, output-only, or that can be used for both input and output operations. As a consequence, this type system can detect unintended or malicious channel misuses at compile-time. The static typing nature of the type system prevents the modeling of scenarios in which the input- output discipline of a channel is discovered at run-time. In these scenarios, such a discipline must be enforced with run-time type checks in the style of dynamic typing. -
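The idea of enforcing a channel's input-output discipline with run-time checks can be illustrated outside the π-calculus. The sketch below is not the paper's type system or cast semantics; the `Channel` class, its `restrict` method, and `CapabilityError` are hypothetical names invented here. It only shows what a dynamic capability check on a channel might look like.

```python
from collections import deque


class CapabilityError(Exception):
    """Raised when a channel is used against its declared capability."""


class Channel:
    """A message queue guarded by 'in' and/or 'out' capabilities (illustrative)."""

    def __init__(self, caps=("in", "out"), queue=None):
        self.caps = frozenset(caps)
        self._queue = deque() if queue is None else queue

    def restrict(self, caps):
        """Return a view of the same channel with fewer capabilities."""
        caps = frozenset(caps)
        if not caps <= self.caps:
            raise CapabilityError("cannot widen capabilities")
        return Channel(caps, self._queue)

    def send(self, value):
        if "out" not in self.caps:  # run-time check on the output capability
            raise CapabilityError("channel is not output-capable")
        self._queue.append(value)

    def receive(self):
        if "in" not in self.caps:  # run-time check on the input capability
            raise CapabilityError("channel is not input-capable")
        return self._queue.popleft()


chan = Channel()
writer = chan.restrict({"out"})  # output-only view
reader = chan.restrict({"in"})   # input-only view

writer.send(42)
print(reader.receive())
try:
    reader.send(1)  # misuse is caught at run time, not compile time
except CapabilityError as err:
    print("rejected:", err)
```

A static type system of the kind the abstract describes would reject `reader.send(1)` before the program runs; the dynamic check above catches the same misuse only when the call is executed.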

Enhanced Debugging for Data Races in Parallel

ENHANCED DEBUGGING FOR DATA RACES IN PARALLEL PROGRAMS USING OPENMP A Thesis Presented to the Faculty of the Department of Computer Science University of Houston In Partial Fulfillment of the Requirements for the Degree Master of Science By Marcus W. Hervey December 2016 ENHANCED DEBUGGING FOR DATA RACES IN PARALLEL PROGRAMS USING OPENMP Marcus W. Hervey APPROVED: Dr. Edgar Gabriel, Chairman Dept. of Computer Science Dr. Shishir Shah Dept. of Computer Science Dr. Barbara Chapman Dept. of Computer Science, Stony Brook University Dean, College of Natural Sciences and Mathematics Acknowledgements A special thank you to Dr. Barbara Chapman, Ph.D., Dr. Edgar Gabriel, Ph.D., Dr. Shishir Shah, Ph.D., Dr. Lei Huang, Ph.D., Dr. Chunhua Liao, Ph.D., Dr. Laksano Adhianto, Ph.D., and Dr. Oscar Hernandez, Ph.D. for their guidance and support throughout this endeavor. I would also like to thank Van Bui, Deepak Eachempati, James LaGrone, and Cody Addison for their friendship and teamwork, without which this endeavor would have never been accomplished. I dedicate this thesis to my parents (Billy and Olivia Hervey) who have always challenged me to be my best, and to my wife and kids for their love and sacrifice throughout this process. “Our greatest weakness lies in giving up. The most certain way to succeed is always to try just one more time.” – Thomas Alva Edison ENHANCED DEBUGGING FOR DATA RACES IN PARALLEL PROGRAMS USING OPENMP An Abstract of a Thesis Presented to the Faculty of the Department of Computer Science University of Houston In Partial Fulfillment of the Requirements for the Degree Master of Science By Marcus W.

Reconciling Real and Stochastic Time: the Need for Probabilistic Refinement

DOI 10.1007/s00165-012-0230-y The Author(s) © 2012. This article is published with open access at Springerlink.com Formal Aspects of Computing (2012) 24: 497–518 Reconciling real and stochastic time: the need for probabilistic refinement J. Markovski¹, P. R. D’Argenio², J. C. M. Baeten¹,³ and E. P. de Vink¹,³ ¹ Eindhoven University of Technology, Eindhoven, The Netherlands. E-mail: [email protected] ² FaMAF, Universidad Nacional de Córdoba, Córdoba, Argentina ³ Centrum Wiskunde & Informatica, Amsterdam, The Netherlands Abstract. We conservatively extend an ACP-style discrete-time process theory with discrete stochastic delays. The semantics of the timed delays relies on time additivity and time determinism, which are properties that enable us to merge subsequent timed delays and to impose their synchronous expiration. Stochastic delays, however, interact with respect to a so-called race condition that determines the set of delays that expire first, which is guided by an (implicit) probabilistic choice. The race condition precludes the property of time additivity as the merger of stochastic delays alters this probabilistic behavior. To this end, we resolve the race condition using conditionally-distributed unit delays. We give a sound and ground-complete axiomatization of the process theory comprising the standard set of ACP-style operators. In this generalized setting, the alternative composition is no longer associative, so we have to resort to special normal forms that explicitly resolve the underlying race condition. Our treatment succeeds in the initial challenge to conservatively extend standard time with stochastic time. However, the ‘dissection’ of the stochastic delays to conditionally-distributed unit delays comes at a price, as we can no longer relate the resolved race condition to the original stochastic delays.

A Subcategory of Neo Noir Film Certificate of Original Authorship

Louise Alston Supervisor: Gillian Leahy Co-supervisor: Margot Nash Doctorate in Creative Arts University of Technology Sydney Femme noir: a subcategory of neo noir film Certificate of Original Authorship I, Louise Alston, declare that this thesis is submitted in fulfillment of the requirements for the award of the Doctorate of Creative Arts in the Faculty of Arts and Social Sciences at the University of Technology Sydney. This thesis is wholly my own work unless otherwise referenced or acknowledged. In addition, I certify that all information sources and literature used are indicated in the exegesis. This document has not been submitted for qualifications at any other academic institution. This research is supported by the Australian Government Research Training Program. Signature: Production Note: Signature removed prior to publication. Date: 05.09.2019 2 Acknowledgements Feedback and support for this thesis has been provided by my supervisor Dr Gillian Leahy with contributions by Dr Alex Munt, Dr Tara Forrest and Dr Margot Nash. Copy editing services provided by Emma Wise. Support and feedback for my creative work has come from my partner Stephen Vagg and my screenwriting group. Thanks go to the UTS librarians, especially those who generously and anonymously responded to my enquiries on the UTS Library online ‘ask a librarian’ service. This thesis is dedicated to my daughter Kathleen, who joined in half way through. 3 Format This thesis is composed of two parts: Part one is my creative project. It is an adaptation of Frank Wedekind’s Lulu plays in the form of a contemporary neo noir screenplay. Part two is my exegesis in which I answer my thesis question. -

Lecture 18 18.1 Distributed and Concurrent Systems 18.2 Race

CMPSCI 691ST Systems Fall 2011 Lecture 18 Lecturer: Emery Berger Scribe: Sean Barker 18.1 Distributed and Concurrent Systems In this lecture we discussed two broad issues in concurrent systems: clocks and race detection. The first paper we read introduced the Lamport clock, which is a scalar providing a logical clock value for each process. Every time a process sends a message, it increments its clock value, and every time a process receives a message, it sets its clock value to the max of the current value and the message value. Clock values are used to provide a "happens-before" relationship, which provides a consistent partial ordering of events across processes. Lamport clocks were later extended to vector clocks, in which each of n processes maintains an array (or vector) of n clock values (one for each process) rather than just a single value as in Lamport clocks. Vector clocks are much more precise than Lamport clocks, since they can check whether intermediate events from anyone have occurred (by looking at the max across the entire vector), and have essentially replaced Lamport clocks today. Moreover, since distributed systems are already sending many messages, the cost of maintaining vector clocks is generally not a big deal. Clock synchronization is still a hard problem, however, because physical clock values will always have some amount of drift. The FastTrack paper we read leverages vector clocks to do race detection. 18.2 Race Detection Broadly speaking, a race condition occurs when at least two threads are accessing a variable, and one of them writes the value while another is reading.
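The clock rules in the notes above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not code from the lecture or from FastTrack: the class and function names are invented here, and the receive step adds one after taking the max (a common textbook formulation), whereas the notes mention only the max.

```python
class LamportClock:
    """Scalar logical clock, one per process."""

    def __init__(self):
        self.time = 0

    def send(self):
        self.time += 1  # increment on every send
        return self.time

    def receive(self, msg_time):
        # Take the max of local and message time; the +1 is an assumption
        # of this sketch (the notes above state only the max).
        self.time = max(self.time, msg_time) + 1
        return self.time


class VectorClock:
    """One counter per process; entry `pid` is this process's own counter."""

    def __init__(self, n, pid):
        self.pid = pid
        self.v = [0] * n

    def tick(self):
        self.v[self.pid] += 1
        return tuple(self.v)  # timestamp of the event just performed

    def receive(self, stamp):
        # Element-wise max across the entire vector, then count the receive.
        self.v = [max(a, b) for a, b in zip(self.v, stamp)]
        return self.tick()


def happens_before(a, b):
    """True if timestamp a is ordered strictly before timestamp b."""
    return a != b and all(x <= y for x, y in zip(a, b))


def concurrent(a, b):
    """Neither event is ordered before the other."""
    return a != b and not happens_before(a, b) and not happens_before(b, a)


sender, receiver = LamportClock(), LamportClock()
lamport_stamp = receiver.receive(sender.send())

p0, p1 = VectorClock(2, 0), VectorClock(2, 1)
e1 = p0.tick()            # local event on process 0
e2 = p1.tick()            # local event on process 1, unrelated to e1
e3 = p1.receive(p0.tick())  # process 0 sends, process 1 receives

print(lamport_stamp, e1, e2, e3)
print(happens_before(e1, e3), concurrent(e1, e2))
```

A vector-clock race detector builds on the `concurrent` test: two accesses to the same variable, at least one of them a write, are flagged when their timestamps are unordered. Scalar Lamport timestamps cannot express that, because any two of them are always comparable.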