Multithreading

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

IEEE Paper Template in A4

Vasantha.N.S. et al, International Journal of Computer Science and Mobile Computing, Vol.6 Issue.6, June- 2017, pg. 302-306 Available Online at www.ijcsmc.com International Journal of Computer Science and Mobile Computing A Monthly Journal of Computer Science and Information Technology ISSN 2320–088X IMPACT FACTOR: 6.017 IJCSMC, Vol. 6, Issue. 6, June 2017, pg.302 – 306 Enhancing Performance in Multiple Execution Unit Architecture using Tomasulo Algorithm Vasantha.N.S.1, Meghana Kulkarni2 ¹Department of VLSI and Embedded Systems, Centre for PG Studies, VTU Belagavi, India ²Associate Professor Department of VLSI and Embedded Systems, Centre for PG Studies, VTU Belagavi, India 1 [email protected] ; 2 [email protected] Abstract— Tomasulo’s algorithm is a computer architecture hardware algorithm for dynamic scheduling of instructions that allows out-of-order execution, designed to efficiently utilize multiple execution units. It was developed by Robert Tomasulo at IBM. The major innovations of Tomasulo’s algorithm include register renaming in hardware. It also uses the concept of reservation stations for all execution units. A common data bus (CDB) on which computed values broadcast to all reservation stations that may need them is also present. The algorithm allows for improved parallel execution of instructions that would otherwise stall under the use of other earlier algorithms such as scoreboarding. Keywords— Reservation Station, Register renaming, common data bus, multiple execution unit, register file I. INTRODUCTION The instructions in any program may be executed in any of the 2 ways namely sequential order and the other is the data flow order. The sequential order is the one in which the instructions are executed one after the other but in reality this flow is very rare in programs. -

Computer Science 246 Computer Architecture Spring 2010 Harvard University

Computer Science 246 Computer Architecture Spring 2010 Harvard University Instructor: Prof. David Brooks [email protected] Dynamic Branch Prediction, Speculation, and Multiple Issue Computer Science 246 David Brooks Lecture Outline • Tomasulo’s Algorithm Review (3.1-3.3) • Pointer-Based Renaming (MIPS R10000) • Dynamic Branch Prediction (3.4) • Other Front-end Optimizations (3.5) – Branch Target Buffers/Return Address Stack Computer Science 246 David Brooks Tomasulo Review • Reservation Stations – Distribute RAW hazard detection – Renaming eliminates WAW hazards – Buffering values in Reservation Stations removes WARs – Tag match in CDB requires many associative compares • Common Data Bus – Achilles heal of Tomasulo – Multiple writebacks (multiple CDBs) expensive • Load/Store reordering – Load address compared with store address in store buffer Computer Science 246 David Brooks Tomasulo Organization From Mem FP Op FP Registers Queue Load Buffers Load1 Load2 Load3 Load4 Load5 Store Load6 Buffers Add1 Add2 Mult1 Add3 Mult2 Reservation To Mem Stations FP adders FP multipliers Common Data Bus (CDB) Tomasulo Review 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 LD F0, 0(R1) Iss M1 M2 M3 M4 M5 M6 M7 M8 Wb MUL F4, F0, F2 Iss Iss Iss Iss Iss Iss Iss Iss Iss Ex Ex Ex Ex Wb SD 0(R1), F0 Iss Iss Iss Iss Iss Iss Iss Iss Iss Iss Iss Iss Iss M1 M2 M3 Wb SUBI R1, R1, 8 Iss Ex Wb BNEZ R1, Loop Iss Ex Wb LD F0, 0(R1) Iss Iss Iss Iss M Wb MUL F4, F0, F2 Iss Iss Iss Iss Iss Ex Ex Ex Ex Wb SD 0(R1), F0 Iss Iss Iss Iss Iss Iss Iss Iss Iss M1 M2 -

Chapter 1: Introduction What Is an Operating System?

Chapter 1: Introduction What is an Operating System? Mainframe Systems Desktop Systems Multiprocessor Systems Distributed Systems Clustered System Real -Time Systems Handheld Systems Computing Environments Operating System Concepts 1.1 Silberschatz, Galvin and Gagne 2002 What is an Operating System? A program that acts as an intermediary between a user of a computer and the computer hardware. Operating system goals: ) Execute user programs and make solving user problems easier. ) Make the computer system convenient to use. Use the computer hardware in an efficient manner. Operating System Concepts 1.2 Silberschatz, Galvin and Gagne 2002 1 Computer System Components 1. Hardware – provides basic computing resources (CPU, memory, I/O devices). 2. Operating system – controls and coordinates the use of the hardware among the various application programs for the various users. 3. Applications programs – define the ways in which the system resources are used to solve the computing problems of the users (compilers, database systems, video games, business programs). 4. Users (people, machines, other computers). Operating System Concepts 1.3 Silberschatz, Galvin and Gagne 2002 Abstract View of System Components Operating System Concepts 1.4 Silberschatz, Galvin and Gagne 2002 2 Operating System Definitions Resource allocator – manages and allocates resources. Control program – controls the execution of user programs and operations of I/O devices . Kernel – the one program running at all times (all else being application programs). -

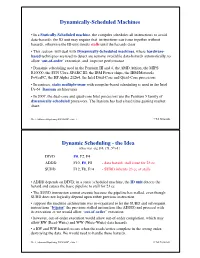

Dynamic Scheduling

Dynamically-Scheduled Machines ! • In a Statically-Scheduled machine, the compiler schedules all instructions to avoid data-hazards: the ID unit may require that instructions can issue together without hazards, otherwise the ID unit inserts stalls until the hazards clear! • This section will deal with Dynamically-Scheduled machines, where hardware- based techniques are used to detect are remove avoidable data-hazards automatically, to allow ‘out-of-order’ execution, and improve performance! • Dynamic scheduling used in the Pentium III and 4, the AMD Athlon, the MIPS R10000, the SUN Ultra-SPARC III; the IBM Power chips, the IBM/Motorola PowerPC, the HP Alpha 21264, the Intel Dual-Core and Quad-Core processors! • In contrast, static multiple-issue with compiler-based scheduling is used in the Intel IA-64 Itanium architectures! • In 2007, the dual-core and quad-core Intel processors use the Pentium 5 family of dynamically-scheduled processors. The Itanium has had a hard time gaining market share.! Ch. 6, Advanced Pipelining-DYNAMIC, slide 1! © Ted Szymanski! Dynamic Scheduling - the Idea ! (class text - pg 168, 171, 5th ed.)" !DIVD ! !F0, F2, F4! ADDD ! !F10, F0, F8 !- data hazard, stall issue for 23 cc! SUBD ! !F12, F8, F14 !- SUBD inherits 23 cc of stalls! • ADDD depends on DIVD; in a static scheduled machine, the ID unit detects the hazard and causes the basic pipeline to stall for 23 cc! • The SUBD instruction cannot execute because the pipeline has stalled, even though SUBD does not logically depend upon either previous instruction! • suppose the machine architecture was re-organized to let the SUBD and subsequent instructions “bypass” the previous stalled instruction (the ADDD) and proceed with its execution -> we would allow “out-of-order” execution! • however, out-of-order execution would allow out-of-order completion, which may allow RW (Read-Write) and WW (Write-Write) data hazards ! • a RW and WW hazard occurs when the reads/writes complete in the wrong order, destroying the data. -

PIPELINING and ASSOCIATED TIMING ISSUES Introduction: While

PIPELINING AND ASSOCIATED TIMING ISSUES Introduction: While studying sequential circuits, we studied about Latches and Flip Flops. While Latches formed the heart of a Flip Flop, we have explored the use of Flip Flops in applications like counters, shift registers, sequence detectors, sequence generators and design of Finite State machines. Another important application of latches and flip flops is in pipelining combinational/algebraic operation. To understand what is pipelining consider the following example. Let us take a simple calculation which has three operations to be performed viz. 1. add a and b to get (a+b), 2. get magnitude of (a+b) and 3. evaluate log |(a + b)|. Each operation would consume a finite period of time. Let us assume that each operation consumes 40 nsec., 35 nsec. and 60 nsec. respectively. The process can be represented pictorially as in Fig. 1. Consider a situation when we need to carry this out for a set of 100 such pairs. In a normal course when we do it one by one it would take a total of 100 * 135 = 13,500 nsec. We can however reduce this time by the realization that the whole process is a sequential process. Let the values to be evaluated be a1 to a100 and the corresponding values to be added be b1 to b100. Since the operations are sequential, we can first evaluate (a1 + b1) while the value |(a1 + b1)| is being evaluated the unit evaluating the sum is dormant and we can use it to evaluate (a2 + b2) giving us both |(a1 + b1)| and (a2 + b2) at the end of another evaluation period. -

2.5 Classification of Parallel Computers

52 // Architectures 2.5 Classification of Parallel Computers 2.5 Classification of Parallel Computers 2.5.1 Granularity In parallel computing, granularity means the amount of computation in relation to communication or synchronisation Periods of computation are typically separated from periods of communication by synchronization events. • fine level (same operations with different data) ◦ vector processors ◦ instruction level parallelism ◦ fine-grain parallelism: – Relatively small amounts of computational work are done between communication events – Low computation to communication ratio – Facilitates load balancing 53 // Architectures 2.5 Classification of Parallel Computers – Implies high communication overhead and less opportunity for per- formance enhancement – If granularity is too fine it is possible that the overhead required for communications and synchronization between tasks takes longer than the computation. • operation level (different operations simultaneously) • problem level (independent subtasks) ◦ coarse-grain parallelism: – Relatively large amounts of computational work are done between communication/synchronization events – High computation to communication ratio – Implies more opportunity for performance increase – Harder to load balance efficiently 54 // Architectures 2.5 Classification of Parallel Computers 2.5.2 Hardware: Pipelining (was used in supercomputers, e.g. Cray-1) In N elements in pipeline and for 8 element L clock cycles =) for calculation it would take L + N cycles; without pipeline L ∗ N cycles Example of good code for pipelineing: §doi =1 ,k ¤ z ( i ) =x ( i ) +y ( i ) end do ¦ 55 // Architectures 2.5 Classification of Parallel Computers Vector processors, fast vector operations (operations on arrays). Previous example good also for vector processor (vector addition) , but, e.g. recursion – hard to optimise for vector processors Example: IntelMMX – simple vector processor. -

Microprocessor Architecture

EECE416 Microcomputer Fundamentals Microprocessor Architecture Dr. Charles Kim Howard University 1 Computer Architecture Computer System CPU (with PC, Register, SR) + Memory 2 Computer Architecture •ALU (Arithmetic Logic Unit) •Binary Full Adder 3 Microprocessor Bus 4 Architecture by CPU+MEM organization Princeton (or von Neumann) Architecture MEM contains both Instruction and Data Harvard Architecture Data MEM and Instruction MEM Higher Performance Better for DSP Higher MEM Bandwidth 5 Princeton Architecture 1.Step (A): The address for the instruction to be next executed is applied (Step (B): The controller "decodes" the instruction 3.Step (C): Following completion of the instruction, the controller provides the address, to the memory unit, at which the data result generated by the operation will be stored. 6 Harvard Architecture 7 Internal Memory (“register”) External memory access is Very slow For quicker retrieval and storage Internal registers 8 Architecture by Instructions and their Executions CISC (Complex Instruction Set Computer) Variety of instructions for complex tasks Instructions of varying length RISC (Reduced Instruction Set Computer) Fewer and simpler instructions High performance microprocessors Pipelined instruction execution (several instructions are executed in parallel) 9 CISC Architecture of prior to mid-1980’s IBM390, Motorola 680x0, Intel80x86 Basic Fetch-Execute sequence to support a large number of complex instructions Complex decoding procedures Complex control unit One instruction achieves a complex task 10 -

United States Patent (19) 11 Patent Number: 5,680,565 Glew Et Al

USOO568.0565A United States Patent (19) 11 Patent Number: 5,680,565 Glew et al. 45 Date of Patent: Oct. 21, 1997 (54) METHOD AND APPARATUS FOR Diefendorff, "Organization of the Motorola 88110 Super PERFORMING PAGE TABLE WALKS INA scalar RISC Microprocessor.” IEEE Micro, Apr. 1996, pp. MCROPROCESSOR CAPABLE OF 40-63. PROCESSING SPECULATIVE Yeager, Kenneth C. "The MIPS R10000 Superscalar Micro INSTRUCTIONS processor.” IEEE Micro, Apr. 1996, pp. 28-40 Apr. 1996. 75 Inventors: Andy Glew, Hillsboro; Glenn Hinton; Smotherman et al. "Instruction Scheduling for the Motorola Haitham Akkary, both of Portland, all 88.110" Microarchitecture 1993 International Symposium, of Oreg. pp. 257-262. Circello et al., “The Motorola 68060 Microprocessor.” 73) Assignee: Intel Corporation, Santa Clara, Calif. COMPCON IEEE Comp. Soc. Int'l Conf., Spring 1993, pp. 73-78. 21 Appl. No.: 176,363 "Superscalar Microprocessor Design" by Mike Johnson, Advanced Micro Devices, Prentice Hall, 1991. 22 Filed: Dec. 30, 1993 Popescu, et al., "The Metaflow Architecture.” IEEE Micro, (51) Int. C. G06F 12/12 pp. 10-13 and 63–73, Jun. 1991. 52 U.S. Cl. ........................... 395/415: 395/800; 395/383 Primary Examiner-David K. Moore 58) Field of Search .................................. 395/400, 375, Assistant Examiner-Kevin Verbrugge 395/800, 414, 415, 421.03 Attorney, Agent, or Firm-Blakely, Sokoloff, Taylor & 56 References Cited Zafiman U.S. PATENT DOCUMENTS 57 ABSTRACT 5,136,697 8/1992 Johnson .................................. 395/586 A page table walk is performed in response to a data 5,226,126 7/1993 McFarland et al. 395/.394 translation lookaside buffer miss based on a speculative 5,230,068 7/1993 Van Dyke et al. -

Instruction Pipelining (1 of 7)

Chapter 5 A Closer Look at Instruction Set Architectures Objectives • Understand the factors involved in instruction set architecture design. • Gain familiarity with memory addressing modes. • Understand the concepts of instruction- level pipelining and its affect upon execution performance. 5.1 Introduction • This chapter builds upon the ideas in Chapter 4. • We present a detailed look at different instruction formats, operand types, and memory access methods. • We will see the interrelation between machine organization and instruction formats. • This leads to a deeper understanding of computer architecture in general. 5.2 Instruction Formats (1 of 31) • Instruction sets are differentiated by the following: – Number of bits per instruction. – Stack-based or register-based. – Number of explicit operands per instruction. – Operand location. – Types of operations. – Type and size of operands. 5.2 Instruction Formats (2 of 31) • Instruction set architectures are measured according to: – Main memory space occupied by a program. – Instruction complexity. – Instruction length (in bits). – Total number of instructions in the instruction set. 5.2 Instruction Formats (3 of 31) • In designing an instruction set, consideration is given to: – Instruction length. • Whether short, long, or variable. – Number of operands. – Number of addressable registers. – Memory organization. • Whether byte- or word addressable. – Addressing modes. • Choose any or all: direct, indirect or indexed. 5.2 Instruction Formats (4 of 31) • Byte ordering, or endianness, is another major architectural consideration. • If we have a two-byte integer, the integer may be stored so that the least significant byte is followed by the most significant byte or vice versa. – In little endian machines, the least significant byte is followed by the most significant byte. -

Introduction to Multi-Threading and Vectorization Matti Kortelainen Larsoft Workshop 2019 25 June 2019 Outline

Introduction to multi-threading and vectorization Matti Kortelainen LArSoft Workshop 2019 25 June 2019 Outline Broad introductory overview: • Why multithread? • What is a thread? • Some threading models – std::thread – OpenMP (fork-join) – Intel Threading Building Blocks (TBB) (tasks) • Race condition, critical region, mutual exclusion, deadlock • Vectorization (SIMD) 2 6/25/19 Matti Kortelainen | Introduction to multi-threading and vectorization Motivations for multithreading Image courtesy of K. Rupp 3 6/25/19 Matti Kortelainen | Introduction to multi-threading and vectorization Motivations for multithreading • One process on a node: speedups from parallelizing parts of the programs – Any problem can get speedup if the threads can cooperate on • same core (sharing L1 cache) • L2 cache (may be shared among small number of cores) • Fully loaded node: save memory and other resources – Threads can share objects -> N threads can use significantly less memory than N processes • If smallest chunk of data is so big that only one fits in memory at a time, is there any other option? 4 6/25/19 Matti Kortelainen | Introduction to multi-threading and vectorization What is a (software) thread? (in POSIX/Linux) • “Smallest sequence of programmed instructions that can be managed independently by a scheduler” [Wikipedia] • A thread has its own – Program counter – Registers – Stack – Thread-local memory (better to avoid in general) • Threads of a process share everything else, e.g. – Program code, constants – Heap memory – Network connections – File handles -

Parallel Programming

Parallel Programming Parallel Programming Parallel Computing Hardware Shared memory: multiple cpus are attached to the BUS all processors share the same primary memory the same memory address on different CPU’s refer to the same memory location CPU-to-memory connection becomes a bottleneck: shared memory computers cannot scale very well Parallel Programming Parallel Computing Hardware Distributed memory: each processor has its own private memory computational tasks can only operate on local data infinite available memory through adding nodes requires more difficult programming Parallel Programming OpenMP versus MPI OpenMP (Open Multi-Processing): easy to use; loop-level parallelism non-loop-level parallelism is more difficult limited to shared memory computers cannot handle very large problems MPI(Message Passing Interface): require low-level programming; more difficult programming scalable cost/size can handle very large problems Parallel Programming MPI Distributed memory: Each processor can access only the instructions/data stored in its own memory. The machine has an interconnection network that supports passing messages between processors. A user specifies a number of concurrent processes when program begins. Every process executes the same program, though theflow of execution may depend on the processors unique ID number (e.g. “if (my id == 0) then ”). ··· Each process performs computations on its local variables, then communicates with other processes (repeat), to eventually achieve the computed result. In this model, processors pass messages both to send/receive information, and to synchronize with one another. Parallel Programming Introduction to MPI Communicators and Groups: MPI uses objects called communicators and groups to define which collection of processes may communicate with each other. -

Real-Time Performance During CUDA™ a Demonstration and Analysis of Redhawk™ CUDA RT Optimizations

A Concurrent Real-Time White Paper 2881 Gateway Drive Pompano Beach, FL 33069 (954) 974-1700 www.concurrent-rt.com Real-Time Performance During CUDA™ A Demonstration and Analysis of RedHawk™ CUDA RT Optimizations By: Concurrent Real-Time Linux® Development Team November 2010 Overview There are many challenges to creating a real-time Linux distribution that provides guaranteed low process-dispatch latencies and minimal process run-time jitter. Concurrent Real Time’s RedHawk Linux distribution meets and exceeds these challenges, providing a hard real-time environment on many qualified hardware configurations, even in the presence of a heavy system load. However, there are additional challenges faced when guaranteeing real-time performance of processes while CUDA applications are simultaneously running on the system. The proprietary CUDA driver supplied by NVIDIA® frequently makes demands upon kernel resources that can dramatically impact real-time performance. This paper discusses a demonstration application developed by Concurrent to illustrate that RedHawk Linux kernel optimizations allow hard real-time performance guarantees to be preserved even while demanding CUDA applications are running. The test results will show how RedHawk performance compares to CentOS performance running the same application. The design and implementation details of the demonstration application are also discussed in this paper. Demonstration This demonstration features two selectable real-time test modes: 1. Jitter Mode: measure and graph the run-time jitter of a real-time process 2. PDL Mode: measure and graph the process-dispatch latency of a real-time process While the demonstration is running, it is possible to switch between these different modes at any time.