Motivation Real-Time Rendering Offline 3D Graphics Rendering New

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Realizm Data Sheet200.Indd

The Ultimate in Professional 3D Graphics Processing

Welcome to a new kind of Realizm… where precision, speed, and your creativity are combined in ways you’ve only dreamed. 3Dlabs® puts the power of the industry’s most advanced visual processing right at your fingertips with Wildcat® Realizm™ 200. 3Dlabs’ AGP 8x-based graphics solution delivers all the performance, image fidelity, and features you’d expect from a professional graphics accelerator. So, whether you’re working on realistic animations, intricate CAD renderings, or complex scientific visualizations – if you can imagine it, you can make it real with Wildcat Realizm.

Remove the boundaries to your creativity. With Wildcat Realizm 200’s no-compromise performance plus the industry’s largest memory resources, you’ll have more time to devote to your creativity.

Remove the boundaries to your productivity. Wildcat Realizm graphics accelerators offer the highest levels of image precision. You get quality and performance in one advanced technology solution.

Unmatched VPU Performance
• The most advanced Visual Processing Unit (VPU) available today offering unparalleled levels of performance, programmability, accuracy, and fidelity
• Optimized floating-point precision across the entire pipeline

Extreme Geometry Performance
• Manipulate the most complex models easily in real-time
• Wildcat Realizm’s VPU features full floating-point pipelines from input vertices to displayed pixels to offer you unparalleled levels of performance, programmability, accuracy, and fidelity

With over 40 years of combined engineering talent, 3Dlabs is the only graphics hardware developer 100% dedicated to building solutions designed specifically for graphics professionals.

The Most Memory Available on Any AGP Graphics Card – 512 MB

Image Quality
• Genuine real-time image manipulation and rendering using advanced

The Advanced Benefits of Wildcat Realizm 200 . -

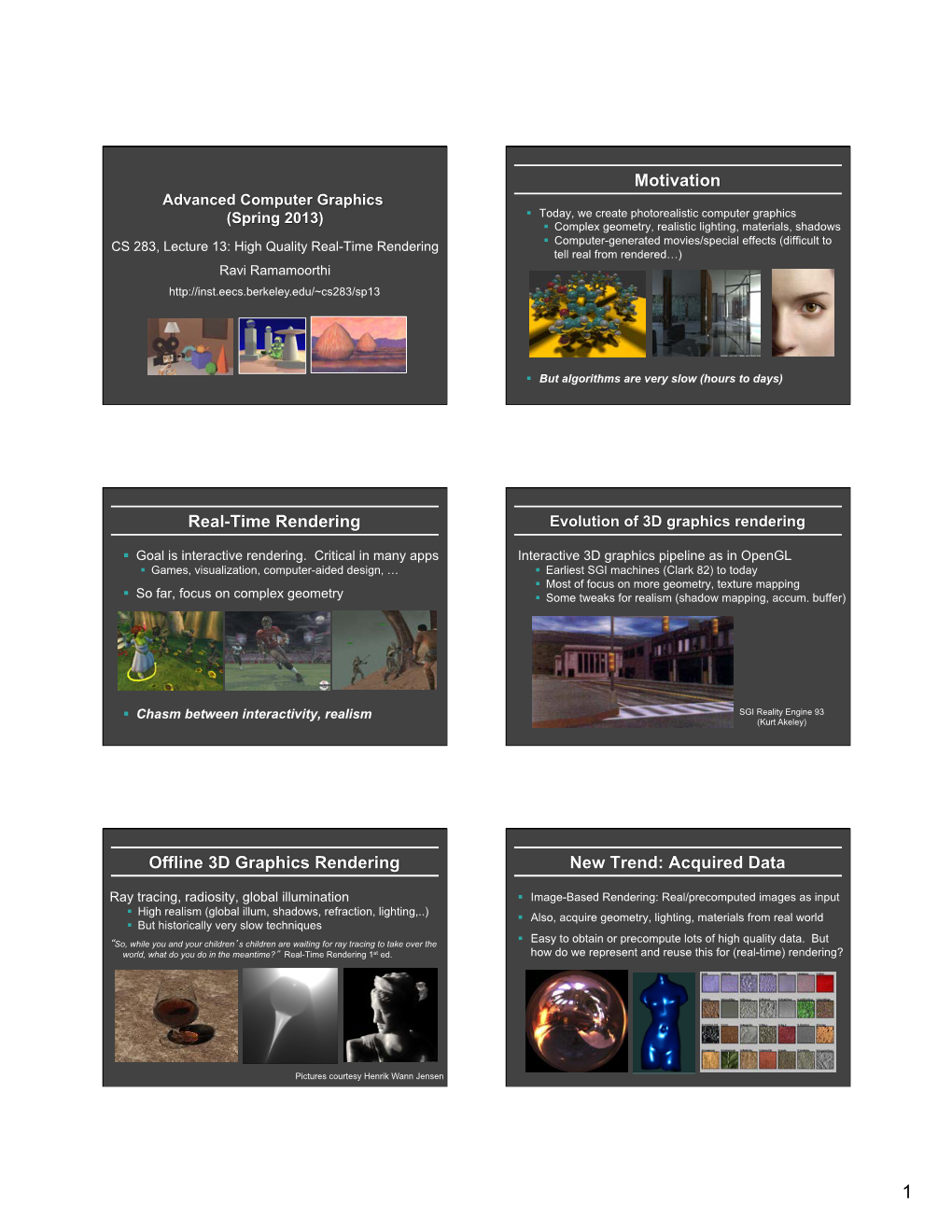

Advanced Computer Graphics to Do Motivation Real-Time Rendering

Advanced Computer Graphics
CSE 190 [Winter 2016], Lecture 12
Ravi Ramamoorthi
http://www.cs.ucsd.edu/~ravir

To Do
§ Assignment 2 due Feb 19
§ Should already be well on way.
§ Contact us for difficulties etc.

Motivation
§ Today, create photorealistic computer graphics
§ Complex geometry, lighting, materials, shadows
§ Computer-generated movies/special effects (difficult or impossible to tell real from rendered…)
§ CSE 168 images from rendering competition (2011)
§ But algorithms are very slow (hours to days)

Real-Time Rendering
§ Goal: interactive rendering. Critical in many apps
§ Games, visualization, computer-aided design, …
§ Until 10-15 years ago, focus on complex geometry
§ Chasm between interactivity, realism

Evolution of 3D graphics rendering
Interactive 3D graphics pipeline as in OpenGL
§ Earliest SGI machines (Clark 82) to today
§ Most of focus on more geometry, texture mapping
§ Some tweaks for realism (shadow mapping, accum. buffer)
SGI Reality Engine 93 (Kurt Akeley)

Offline 3D Graphics Rendering
Ray tracing, radiosity, photon mapping
§ High realism (global illum, shadows, refraction, lighting,..)
§ But historically very slow techniques
“So, while you and your children’s children are waiting for ray tracing to take over the world, what do you do in the meantime?” Real-Time Rendering
Pictures courtesy Henrik Wann Jensen

New Trend: Acquired Data
§ Image-Based Rendering: Real/precomputed images as input

15 years ago
§ High quality rendering: ray tracing, global illumination
§ Little change in CSE 168 syllabus, from 2003 to -

Sun Opengl 1.3 for Solaris Implementation and Performance Guide

Sun™ OpenGL 1.3 for Solaris™ Implementation and Performance Guide Sun Microsystems, Inc. www.sun.com Part No. 817-2997-11 November 2003, Revision A Submit comments about this document at: http://www.sun.com/hwdocs/feedback Copyright 2003 Sun Microsystems, Inc., 4150 Network Circle, Santa Clara, California 95054, U.S.A. All rights reserved. Sun Microsystems, Inc. has intellectual property rights relating to technology that is described in this document. In particular, and without limitation, these intellectual property rights may include one or more of the U.S. patents listed at http://www.sun.com/patents and one or more additional patents or pending patent applications in the U.S. and in other countries. This document and the product to which it pertains are distributed under licenses restricting their use, copying, distribution, and decompilation. No part of the product or of this document may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Third-party software, including font technology, is copyrighted and licensed from Sun suppliers. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and in other countries, exclusively licensed through X/Open Company, Ltd. Sun, Sun Microsystems, the Sun logo, SunSoft, SunDocs, SunExpress, and Solaris are trademarks, registered trademarks, or service marks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. -

From a Programmable Pipeline to an Efficient Stream Processor

Computation on GPUs: From a Programmable Pipeline to an Efficient Stream Processor

João Luiz Dihl Comba¹, Carlos A. Dietrich¹, Christian A. Pagot¹, Carlos E. Scheidegger¹

Abstract: The recent development of graphics hardware is presenting a change in the implementation of the graphics pipeline, from a fixed set of functions, to user-developed special programs to be executed on a per-vertex or per-fragment basis. This programmability allows the efficient implementation of different algorithms directly on the graphics hardware. In this tutorial we will present the main techniques that are involved in implementing algorithms in this fashion. We use several test cases based on recently published papers. By reviewing and analyzing their contribution, we explain the reasoning behind the development of the algorithms, establishing a common ground that allows readers to create their own novel algorithms.

Keywords: Programmable Graphics Hardware, GPU, Graphics Pipeline

¹UFRGS, Instituto de Informática, Caixa Postal 15064, 91501-970 Porto Alegre/RS, Brasil. E-mail: {comba, cadietrich, capagot, carlossch}@inf.ufrgs.br

This work was partially funded by CAPES, CNPq, and FAPERGS. -

Evolution of the Programmable Graphics Pipeline

Evolution of the Programmable Graphics Pipeline
Lecture 2
Original Slides by: Suresh Venkatasubramanian
Updates by Joseph Kider

Course Roadmap
► Graphics Pipeline (GLSL)
► GPGPU (GLSL)
  Briefly
► GPU Computing (CUDA, OpenCL)
► Choose your own adventure
  Student Presentation
  Final Project
► Goal: Prepare you for your presentation and project

Lecture Outline
► A historical perspective on the graphics pipeline
  Dimensions of innovation.
  Where we are today
  Fixed-function vs programmable pipelines
► A closer look at the fixed function pipeline
  Walk thru the sequence of operations
  Reinterpret these as stream operations
► We can program the fixed-function pipeline!
  Some examples
► What constitutes data and memory, and how access affects program design.

The evolution of the pipeline

Elements of the graphics pipeline:
1. A scene description: vertices, triangles, colors, lighting
2. Transformations that map the scene to a camera viewpoint
3. “Effects”: texturing, shadow mapping, lighting calculations
4. Rasterizing: converting geometry into pixels
5. Pixel processing: depth tests, stencil tests, and other per-pixel operations.

Parameters controlling design of the pipeline:
1. Where is the boundary between CPU and GPU?
2. What transfer method is used?
3. What resources are provided at each step?
4. What units can access which GPU memory elements?

Generation I: 3dfx Voodoo (1996)
• One of the first true 3D game cards
• Worked by supplementing standard 2D video

Aside: Mario Kart 64
► High fragment load / low vertex load -
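The pipeline elements listed in the slides above can be read as a chain of stream stages, each consuming the output of the previous one. The following is a minimal Python sketch of that idea, not code from the lecture: it draws isolated points instead of triangles, skips the "effects" stage, and assumes a camera at the origin looking down +z. The function names (`transform`, `rasterize`, `depth_test`, `render`) and the `focal` parameter are hypothetical.

```python
import math

def transform(vertices, focal=1.0):
    """Element 2: perspective-project camera-space points (x, y, z, color)."""
    for x, y, z, color in vertices:
        if z <= 0:          # behind the camera: discard (crude clipping)
            continue
        yield focal * x / z, focal * y / z, z, color

def rasterize(points, width, height):
    """Element 4 (simplified): map each point in [-1, 1]^2 to one pixel."""
    for sx, sy, z, color in points:
        px = math.floor((sx + 1.0) * 0.5 * width)
        py = math.floor((1.0 - sy) * 0.5 * height)   # flip y: row 0 is the top
        if 0 <= px < width and 0 <= py < height:
            yield px, py, z, color

def depth_test(fragments, width, height):
    """Element 5: keep only the nearest fragment per pixel (a z-buffer)."""
    depth = [[math.inf] * width for _ in range(height)]
    frame = [[None] * width for _ in range(height)]
    for px, py, z, color in fragments:
        if z < depth[py][px]:
            depth[py][px] = z
            frame[py][px] = color
    return frame

def render(vertices, width, height):
    """Chain the stages: scene description -> transform -> rasterize -> depth test."""
    return depth_test(rasterize(transform(vertices), width, height), width, height)
```

Calling `render` with two points on the same line of sight leaves only the nearer one in the frame, which is the behaviour a depth test is meant to provide.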

Evolution of the Programmable Graphics Pipeline Graphics Pipeline

Evolution of the Programmable Graphics Pipeline
Patrick Cozzi
University of Pennsylvania
CIS 565 - Spring 2011

Administrivia
Tip: google “cis 565”
Slides posted before each class
Tentative assignment dates on website
1st assignment handed out today
  Write concisely
  Due start of class, one week from today
Google group in progress
FYI. GDC Early Registration - 01/24

Survey Results
15/23 – graphics experience
Most students have usable video cards
Lerk – don’t be scared
I want to be a Toys R Us kid too

Survey Results
Class interests
  Pure architecture
  Game rendering
  Physical simulations
  Animation
  Vision algorithms
  Image/video processing
  …

Course Roadmap
Graphics Pipeline (GLSL)
GPGPU (GLSL)
  Briefly
GPU Computing (CUDA, OpenCL)
Choose your own adventure
  Student Presentation
  Final Project
Goal: Prepare you for your presentation and project

Agenda
Why program the GPU?
Graphics Review
Evolution of the Programmable Graphics Pipeline
  Understand the past

Why Program the GPU?
Graph from: http://developer.download.nvidia.com/compute/cuda/3_2_prod/toolkit/docs/CUDA_C_Programming_Guide.pdf

Why Program the GPU?
Compute
  Intel Core i7 – 4 cores – 100 GFLOP
  NVIDIA GTX280 – 240 cores – 1 TFLOP
Memory Bandwidth
  System Memory – 60 GB/s
  NVIDIA GT200 – 150 GB/s
Install Base
  Over 200 million NVIDIA G80s shipped
Numbers from Programming Massively Parallel Processors.

NVIDIA GPU Evolution
Slide from David Luebke: http://s08.idav.ucdavis.edu/luebke-nvidia-gpu-architecture.pdf

Graphics Review
Modeling
Rendering
Animation

Graphics Review: Modeling
Modeling
  Polygons vs Triangles
    How do you store a triangle mesh?
  Implicit Surfaces
  Height maps
  …

Triangles
Image courtesy of A K Peters, Ltd. -

Projective Texture Mapping with Full Panorama

EUROGRAPHICS 2002 / G. Drettakis and H.-P. Seidel (Guest Editors), Volume 21 (2002), Number 3

Projective Texture Mapping with Full Panorama

Dongho Kim and James K. Hahn
Department of Computer Science, The George Washington University, Washington, DC, USA

Abstract
Projective texture mapping is used to project a texture map onto scene geometry. It has been used in many applications, since it eliminates the assignment of fixed texture coordinates and provides a good method of representing synthetic images or photographs in image-based rendering. But conventional projective texture mapping has limitations in the field of view and the degree of navigation because only simple rectangular texture maps can be used. In this work, we propose the concept of panoramic projective texture mapping (PPTM). It projects a cubic or cylindrical panorama onto the scene geometry. With this scheme, any polygonal geometry can receive the projection of a panoramic texture map, without using fixed texture coordinates or modeling many projective texture mappings. For fast real-time rendering, a hardware-based rendering method is also presented. Applications of PPTM include a panorama viewer similar to QuicktimeVR and navigation in the panoramic scene, which can be created by image-based modeling techniques.

Categories and Subject Descriptors (according to ACM CCS): I.3.3 [Computer Graphics]: Viewing Algorithms; I.3.7 [Computer Graphics]: Color, Shading, Shadowing, and Texture

1. Introduction
Texture mapping has been used for a long time in computer graphics imagery, because it provides much visual detail without complex models [7].

… texture mapping. Image-based rendering (IBR) draws more applications of projective texture mapping. When photographs or synthetic images are used for IBR, those images contain scene information, which is projected onto -
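To illustrate how a cubic panorama can be projected onto geometry without stored texture coordinates, the sketch below derives the lookup directly from the direction between a projection center and a surface point. It is a minimal Python illustration and not the paper's method: the face selection follows the major-axis convention used for OpenGL cube maps, which is an assumption here, and the names `cube_face_uv` and `panorama_lookup` are hypothetical.

```python
def cube_face_uv(dx, dy, dz):
    """Map a direction to (face, u, v), with u and v in [0, 1].

    Uses the OpenGL cube-map major-axis convention (an assumption;
    the paper may lay out its panorama faces differently).
    """
    ax, ay, az = abs(dx), abs(dy), abs(dz)
    if ax == 0 and ay == 0 and az == 0:
        raise ValueError("direction must be non-zero")
    if ax >= ay and ax >= az:            # x is the major axis
        face = "+x" if dx > 0 else "-x"
        sc, tc, ma = (-dz if dx > 0 else dz), -dy, ax
    elif ay >= az:                       # y is the major axis
        face = "+y" if dy > 0 else "-y"
        sc, tc, ma = dx, (dz if dy > 0 else -dz), ay
    else:                                # z is the major axis
        face = "+z" if dz > 0 else "-z"
        sc, tc, ma = (dx if dz > 0 else -dx), -dy, az
    return face, 0.5 * (sc / ma + 1.0), 0.5 * (tc / ma + 1.0)

def panorama_lookup(center, point):
    """Texture lookup for a surface point seen from the projection center."""
    direction = tuple(p - c for p, c in zip(point, center))
    return cube_face_uv(*direction)
```

Because the lookup depends only on the direction from the projection center, any polygon in the scene can receive the panorama; moving the center changes the projection without touching the geometry.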

Opengl Shading Language Course Chapter 1 – Introduction to GLSL By

OpenGL Shading Language Course
Chapter 1 – Introduction to GLSL
By Jacobo Rodriguez Villar [email protected]

TyphoonLabs’ GLSL Course 1/29

CHAPTER 1: INTRODUCTION

INDEX
An Introduction to Programmable Hardware
Brief History of the OpenGL Programmable Hardware Pipeline
Fixed Function vs. Programmable Function
Programmable Function Scheme
Fixed Function Scheme
Shader 'Input' Data
Uniform Variables
Vertex Attributes
Varying Variables
Shader 'Output' Data
Vertex Shader
Fragment Shader
Simple GLSL Shader Example
Shader Designer IDE
User Interface
Toolbar
Menu
State Properties
Light States
Vertex States
Fragment States
Code Window
Uniform Variables Manager

An Introduction to Programmable Hardware

Brief history of the OpenGL Programmable Hardware Pipeline

2000
Card(s) on the market: GeForce 2, Rage 128, WildCat, and Oxygen GVX1
These cards did not use any programmability within their pipeline. There were no vertex and pixel shaders or even texture shaders. The only programmable part was the register combiners. Multi-texturing and additive blending were used to create clever effects and unique materials.

2001
Card(s) on the market: GeForce 3, Radeon 8500
With GeForce 3, NVIDIA introduced programmability into the vertex processing pipeline, allowing developers to write simple 'fixed-length' vertex programs using pseudo-assembler style code. Pixel processing was also improved with the texture shader, allowing more control over textures. ATI added similar functionality including some VS and FS extensions (EXT_vertex_shader and ATI_fragment_shader). Developers could now interpolate, modulate, replace, and decal between texture units, as well as extrapolate or combine with constant colors. -

Rendering from Compressed Textures

Rendering from Compressed Textures

Andrew C. Beers, Maneesh Agrawala, and Navin Chaddha†
Computer Science Department, Stanford University
†Computer Systems Laboratory, Stanford University

Abstract
We present a simple method for rendering directly from compressed textures in hardware and software rendering systems. Textures are compressed using a vector quantization (VQ) method. The advantage of VQ over other compression techniques is that textures can be decompressed quickly during rendering. The drawback of using lossy compression schemes such as VQ for textures is that such methods introduce errors into the textures. We discuss techniques for controlling these losses. We also describe an extension to the basic VQ technique for compressing mipmaps. We have observed compression rates of up to 35 : 1, with minimal loss in visual quality and a small impact on rendering time.

One way to alleviate these memory limitations is to store compressed representations of textures in memory. A modified renderer could then render directly from this compressed representation. In this paper we examine the issues involved in rendering from compressed texture maps and propose a scheme for compressing textures using vector quantization (VQ)[4]. We also describe an extension of our basic VQ technique for compressing mipmaps. We show that by using VQ compression, we can achieve compression rates of up to 35 : 1 with little loss in the visual quality of the rendered scene. We observe a 2 to 20 percent impact on rendering time using a software renderer that renders from the compressed format. However, the technique is so simple that incorporating it into hard- -
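The reason VQ textures decompress quickly is that reading a texel reduces to two table lookups: one into the index map and one into the codebook. The sketch below illustrates this in Python for a grayscale texture stored as a flat row-major list. It is an illustration under assumptions, not the paper's implementation: the codebook is supplied by hand rather than trained, the texture dimensions are assumed to be multiples of the block size, and the names `encode`, `nearest_code`, and `decode_texel` are hypothetical.

```python
def nearest_code(block_pixels, codebook):
    """Index of the codeword with the smallest squared error to the block."""
    def error(i):
        return sum((a - b) ** 2 for a, b in zip(block_pixels, codebook[i]))
    return min(range(len(codebook)), key=error)

def encode(texture, width, height, block, codebook):
    """Replace each block x block tile by the index of its nearest codeword.

    Assumes width and height are multiples of block.
    """
    indices = []
    for by in range(0, height, block):
        for bx in range(0, width, block):
            pixels = [texture[(by + dy) * width + (bx + dx)]
                      for dy in range(block) for dx in range(block)]
            indices.append(nearest_code(pixels, codebook))
    return indices

def decode_texel(indices, codebook, blocks_per_row, block, x, y):
    """Fetch texel (x, y): one lookup in the index map, one in the codebook."""
    code = codebook[indices[(y // block) * blocks_per_row + (x // block)]]
    return code[(y % block) * block + (x % block)]
```

Encoding is the expensive, lossy step and can be done offline; the renderer only ever needs `decode_texel`, which is why the cost at render time stays small.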

Research on Improving Methods for Visualizing Common Elements in Video Game Applications ビデオゲームアプリケーショ

Research on Improving Methods for Visualizing Common Elements in Video Game Applications
ビデオゲームアプリケーションにおける共通的な要素の視覚化手法の改良に関する研究

June 2013
Graduate School of Global Information and Telecommunication Studies, Waseda University
Title of the project: Research on Image Processing II
Candidate’s name: Sven Dierk Michael Forstmann

Acknowledgements
Foremost, I would like to express my sincere thanks to my advisor Prof. Jun Ohya for his continuous support of my Ph.D study and research. His guidance helped me in all the time of my research and with writing of this thesis. I would further like to thank the members of my PhD committee, Prof. Shigekazu Sakai, Prof. Takashi Kawai, and Prof. Shigeo Morishima for their valuable comments and suggestions. For handling the formalities and always being helpful when I had questions, I would like to thank the staff of the Waseda GITS Office, especially Yumiko Kishimoto san. For their financial support, I would like to thank the DAAD (Deutscher Akademischer Austauschdienst), the Japanese Government for supporting me with the Monbukagakusho Scholarship and ITO EN Ltd, for supporting me with the Honjo International Scholarship. For their courtesy of allowing me the use of some of their screenshots, I would like to thank John Olick, Bernd Beyreuther, Prof. Ladislav Kavan and Dr. Cyril Crassin. Special thanks are given to my friends Dr. Gleb Novichkov, Prof. Artus Krohn-Grimberghe, Yutaka Kanou, and Dr. Gernot Grund for their encouragement and insightful comments. Last, I would like to thank my family: my parents Dierk and Friederike Forstmann, and my brother Hanjo Forstmann for their support and encouragement throughout the thesis. -

Modern Software Rendering

Production Systems and Information Engineering, Volume 6 (2013), pp. 57-68

MODERN SOFTWARE RENDERING

PETER MILEFF
University of Miskolc, Hungary, Department of Information Technology
[email protected]

JUDIT DUDRA
Bay Zoltán Nonprofit Ltd., Hungary, Department of Structural Integrity
[email protected]

[Received January 2012 and accepted September 2012]

Abstract. The computer visualization process, under continuous changes has reached a major milestone. Necessary modifications are required in the currently applied physical devices and technologies because the possibilities are nearly exhausted in the field of the programming model. This paper presents an overview of new performance improvement methods that today CPUs can provide utilizing their modern instruction sets and of how these methods can be applied in the specific field of computer graphics, called software rendering. Furthermore the paper focuses on GPGPU based parallel computing as a new technology for computer graphics where a TBR based software rasterization method is investigated in terms of performance and efficiency.

Keywords: software rendering, real-time graphics, SIMD, GPGPU, performance optimization

1. Introduction
Computer graphics is an integral part of our life. Often unnoticed, it is almost everywhere in the world today. The area has evolved over many years in the past few decades, where an important milestone was the appearance of graphic processors. Because the graphical computations have different needs than the CPU requirements, a demand has appeared early for a fast and uniformly programmable graphical processor. This evolution has opened many new opportunities for developers, such as developing real-time, high quality computer simulations and analysis. The appearance of the first graphics accelerators radically changed everything and created a new basis for computer rendering. -

Performance Comparison on Rendering Methods for Voxel Data

Performance comparison on rendering methods for voxel data

Oskari Nousiainen
School of Science
Thesis submitted for examination for the degree of Master of Science in Technology.
Espoo July 24, 2019
Supervisor: Prof. Tapio Takala
Advisor: Dip.Ins. Jukka Larja

Aalto University, P.O. BOX 11000, 00076 AALTO, www.aalto.fi
Abstract of the master’s thesis
Degree programme: Master’s Programme in Computer, Communication and Information Sciences
Major: Computer Science
Code of major: SCI3042
Number of pages: 50
Language: English

Abstract
There are multiple ways in which 3-dimensional objects can be presented and rendered to screen. Voxels are a representation of a scene or object as cubes of equal dimensions, which are either full or empty and contain information about their enclosing space. They can be rendered to an image by generating surface geometry and passing that to the rendering pipeline, or by using ray casting to render the voxels directly. In this paper, these methods are compared to each other by using different octree structures for voxel storage. These methods are compared in different generated scenes to determine which factors affect their relative performance, as measured in the real time it takes to draw a single frame. These tests show that ray casting performs better when the resolution of the voxel model is high, but rasterisation is faster for low resolution voxel models. Rasterisation scales better with screen resolution, and on higher screen resolutions most of the tested voxel resolutions were faster to render with rasterisation.