On-Demand ETL for Real-Time Analytics

Recommended publications

Improving High Contention OLTP Performance Via Transaction

Improving High Contention OLTP Performance via Transaction Scheduling

Guna Prasaad, Alvin Cheung, Dan Suciu. University of Washington. {guna, akcheung, suciu}@cs.washington.edu

Abstract: Research in transaction processing has made significant progress in improving the performance of multi-core in-memory transactional systems. However, the focus has mainly been on low-contention workloads. Modern transactional systems perform poorly on workloads with transactions accessing a few highly contended data items. We observe that most transactional workloads, including those with high contention, can be divided into clusters of data conflict-free transactions and a small set of residuals. In this paper, we introduce a new concurrency control protocol called Strife that leverages the above observation. Strife executes transactions in batches, where each batch is partitioned into clusters of conflict-free transactions and a small set of residual transactions.

Excerpt: Validation-based protocols [5, 26] are known to be well-suited for workloads with low data contention or conflicts, i.e., when data items are being accessed by transactions concurrently, with at least one access being a write. Since conflicting accesses are rare, it is unlikely that the value of a data item is updated by another transaction during its execution, and hence validation mostly succeeds. On the other hand, for workloads where data contention is high, locking-based concurrency control protocols are generally preferred as they pessimistically block other transactions that require access to the same data item instead of incurring the overhead of repeatedly aborting and restarting the transaction like in OCC. When the workload is known to be partitionable, partitioned concurrency control [12] is preferred as it eliminates

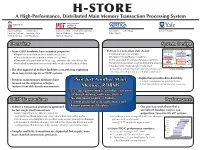

A High-Performance, Distributed Main Memory Transaction Processing System

H-Store: A High-Performance, Distributed Main Memory Transaction Processing System

Robert Kallman, Hideaki Kimura, Jonathan Natkins, Andrew Pavlo, Alexander Rasin, Stanley Zdonik (Brown University, {rkallman, hkimura, jnatkins, pavlo, alexr, sbz}@cs.brown.edu); Evan P. C. Jones, Samuel Madden, Michael Stonebraker, Yang Zhang (Massachusetts Institute of Technology, {evanj, madden, stonebraker, yang}@csail.mit.edu); John Hugg (Vertica Inc.); Daniel J. Abadi (Yale University)

Abstract: Our previous work has shown that architectural and application shifts have resulted in modern OLTP databases increasingly falling short of optimal performance [10]. In particular, the availability of multiple-cores, the abundance of main memory, the lack of user stalls, and the dominant use of stored procedures are factors that portend a clean-slate redesign of RDBMSs. This previous work showed that such a redesign has the potential to outperform legacy OLTP databases by a significant factor. These results, however, were obtained using a bare-bones prototype that was developed just to demonstrate the potential of such a system.

Excerpt: responsibilities across multiple shared-nothing machines. Although some RDBMS platforms now provide support for this paradigm in their execution framework, research shows that building a new OLTP system that is optimized from its inception for a distributed environment is advantageous over retrofitting an existing RDBMS [2]. Using a disk-oriented RDBMS is another key bottleneck in OLTP databases. All but the very largest OLTP applications are able to fit their entire data set into the memory of a modern shared-nothing cluster of server machines. Therefore, disk-oriented storage and indexing structures are un-

arXiv:1612.05566v1 [cs.DB] 16 Dec 2016

Building Efficient Query Engines in a High-Level Language

Amir Shaikhha, Yannis Klonatos, Christoph Koch. École Polytechnique Fédérale de Lausanne.

Abstraction without regret refers to the vision of using high-level programming languages for systems development without experiencing a negative impact on performance. A database system designed according to this vision offers both increased productivity and high performance, instead of sacrificing the former for the latter as is the case with existing, monolithic implementations that are hard to maintain and extend. In this article, we realize this vision in the domain of analytical query processing. We present LegoBase, a query engine written in the high-level programming language Scala. The key technique to regain efficiency is to apply generative programming: LegoBase performs source-to-source compilation and optimizes the entire query engine by converting the high-level Scala code to specialized, low-level C code. We show how generative programming allows to easily implement a wide spectrum of optimizations, such as introducing data partitioning or switching from a row to a column data layout, which are difficult to achieve with existing low-level query compilers that handle only queries. We demonstrate that sufficiently powerful abstractions are essential for dealing with the complexity of the optimization effort, shielding developers from compiler internals and decoupling individual optimizations from each other. We evaluate our approach with the TPC-H benchmark and show that: (a) With all optimizations enabled, our architecture significantly outperforms a commercial in-memory database as well as an existing query compiler.

Jennie Duggan (Née Rogers)

Jennie Duggan (née Rogers)

MIT CSAIL, 32 Vassar Street, Room G904B, Cambridge, MA 02139. Voice: omitted for web. Web: http://people.csail.mit.edu/jennie/

Interests: Large-scale data processing, cloud computing, core database internals, scientific data management, database performance, big data

Education:

- Brown University, December 2012. Ph.D., Computer Science. Thesis: "Query Performance Prediction for Analytical Workloads". Advisor: Uğur Çetintemel
- Brown University, May 2009. Sci.M., Computer Science. Thesis: "Towards a Generic Compression Advisor". Advisor: Uğur Çetintemel
- Rensselaer Polytechnic Institute, May 2003. B.S., Computer Science. Minor: Brain & Brain Behavior

Research Experience: MIT CSAIL, January 2013–Present. Postdoctoral Associate

Data placement for elastic array processing (SciDB):

- Researched the efficient reorganization of data for scalable array analytics
- Designed a control loop for incrementally scaling out a scientific database cluster
- Proposed partitioning schemes to maximize the preservation of array space for spatial querying

Data placement for elastic distributed main memory databases (H-Store):

- Investigated the management of execution skew for large-scale transactional workloads
- Created algorithms for determining when and how to reprovision hardware resources for consistent query throughput
- Built a two-level caching scheme that caters to hot spots in a transactional workload

Massively parallelized join optimization (SciDB):

- Proposed a two-phase join for arrays independently optimizing

Scheduling OLTP Transactions Via Learned Abort Prediction

Scheduling OLTP Transactions via Learned Abort Prediction

Yangjun Sheng (Carnegie Mellon University, Pittsburgh, PA), Anthony Tomasic (Carnegie Mellon University, Pittsburgh, PA), Tieying Zhang (Alibaba Database Group), Andrew Pavlo (Carnegie Mellon University, Pittsburgh, PA)

Abstract: Current main memory database system architectures are still challenged by high contention workloads and this challenge will continue to grow as the number of cores in processors continues to increase [23]. These systems schedule transactions randomly across cores to maximize concurrency and to produce a uniform load across cores. Scheduling never considers potential conflicts. Performance could be improved if scheduling balanced between concurrency to maximize throughput and scheduling transactions linearly to avoid conflicts. In this paper, we present the design of several intelligent transaction scheduling algorithms that consider both potential transaction conflicts and concurrency. To incorporate reasoning about transaction conflicts, we develop a supervised machine learning model that estimates the probability of conflict. This model is incorporated into several scheduling algorithms. In addition, we integrate an unsupervised machine learning algorithm into an intelligent schedul-

CCS Concepts: • Information systems → Database transaction processing; • Computing methodologies → Machine learning;

Keywords: machine learning scheduling

ACM Reference Format: Yangjun Sheng, Anthony Tomasic, Tieying Zhang, and Andrew Pavlo. 2019. Scheduling OLTP Transactions via Learned Abort Prediction. In International Workshop on Exploiting Artificial Intelligence Techniques for Data Management (aiDM’19), July 5, 2019, Amsterdam, Netherlands. ACM, New York, NY, USA, 8 pages. https://doi.org/10.1145/3329859.3329871

1 Introduction: Transaction aborts are one of the main sources of performance loss in main memory online transaction processing (OLTP) database management systems (DBMS) [23].

Handling Highly Contended OLTP Workloads Using Fast Dynamic

Research 6: Transaction Processing and Query Optimization. SIGMOD ’20, June 14–19, 2020, Portland, OR, USA

Handling Highly Contended OLTP Workloads Using Fast Dynamic Partitioning

Guna Prasaad (University of Washington), Alvin Cheung (University of California, Berkeley), Dan Suciu (University of Washington)

Abstract: Research on transaction processing has made significant progress towards improving performance of main memory multicore OLTP systems under low contention. However, these systems struggle on workloads with lots of conflicts. Partitioned databases (and variants) perform well on high contention workloads that are statically partitionable, but time-varying workloads often make them impractical. Towards addressing this, we propose Strife, a novel transaction processing scheme that clusters transactions together dynamically and executes most of them without any concurrency control. Strife executes transactions in batches, where each batch is partitioned into disjoint clusters without any cross-cluster conflicts and a small set of residuals.

1 Introduction: As modern OLTP servers today ship with an increasing number of cores, users expect transaction processing performance to scale accordingly. In practice, however, achieving scalability is challenging, with the bottleneck often being the concurrency control needed to ensure a serializable execution of the transactions. Consequently, a new class of high performance concurrency control protocols [8, 18, 19, 37, 39, 44] have been developed and are shown to scale to even thousands of cores [39, 43]. Yet, the performance of these protocols degrades rapidly on workloads with high contention [1, 43]. One promising approach to execute workloads with high contention is to

Chiller: Contention-Centric Transaction Execution and Data Partitioning For

Research 6: Transaction Processing and Query Optimization. SIGMOD ’20, June 14–19, 2020, Portland, OR, USA

Chiller: Contention-centric Transaction Execution and Data Partitioning for Modern Networks

Erfan Zamanian (Brown University), Julian Shun (MIT CSAIL), Carsten Binnig (TU Darmstadt), Tim Kraska (MIT CSAIL)

Abstract: Distributed transactions on high-overhead TCP/IP-based networks were conventionally considered to be prohibitively expensive and thus were avoided at all costs. To that end, the primary goal of almost any existing partitioning scheme is to minimize the number of cross-partition transactions. However, with the new generation of fast RDMA-enabled networks, this assumption is no longer valid. In fact, recent work has shown that distributed databases can scale even when the majority of transactions are cross-partition. In this paper, we first make the case that the new bottleneck which hinders truly scalable transaction processing in modern RDMA-enabled databases is data contention, and that optimizing for data contention leads to different partition-

Reference format (fragment): SIGMOD International Conference on Management of Data (SIGMOD’20), June 14–19, 2020, Portland, OR, USA. ACM, New York, NY, USA, 16 pages. https://doi.org/10.1145/3318464.3389724

1 Introduction: The common wisdom is to avoid distributed transactions at almost all costs as they represent the dominating bottleneck in distributed database systems. As a result, many partitioning schemes have been proposed with the goal of minimizing the number of cross-partition transactions [8, 28, 29, 34, 37, 44]. Yet, a recent result [43] has shown that with the advances of high-bandwidth RDMA-enabled networks, neither the message overhead nor the network bandwidth are limiting factors anymore, significantly mitigating the scalability issues of traditional systems.

Cross-Engine Query Execution in Federated Database Systems Ankush M. Gupta

Cross-Engine Query Execution in Federated Database Systems

by Ankush M. Gupta. S.B., Massachusetts Institute of Technology (2016)

Submitted to the Department of Electrical Engineering and Computer Science in partial fulfillment of the requirements for the degree of Master of Engineering in Electrical Engineering and Computer Science at the Massachusetts Institute of Technology, June 2016.

© Ankush M. Gupta, MMXVI. All rights reserved. The author hereby grants to MIT permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in whole or in part in any medium now known or hereafter created.

Author: Department of Electrical Engineering and Computer Science, May 18, 2016. Certified by: Michael Stonebraker, Adjunct Professor, Thesis Supervisor. Accepted by: Christopher J. Terman, Chairman, Department Committee on Graduate Theses.

Submitted to the Department of Electrical Engineering and Computer Science on May 18, 2016, in partial fulfillment of the requirements for the degree of Master of Engineering in Electrical Engineering and Computer Science.

Abstract: Duggan et al. [5] have created a reference implementation of the BigDAWG system: a new architecture for future Big Data applications, guided by the philosophy that "one size does not fit all". Such applications not only call for large-scale analytics, but also for real-time streaming support, smaller analytics at interactive speeds, data visualization, and cross-storage-system queries. The importance and effectiveness of such a system has been demonstrated in a hospital application using data from an intensive care unit (ICU). In this report, we implement and evaluate a concrete version of a cross-system Query Executor and its interface with a cross-system Query Planner.

Uses Data Partitioning to Distribute Execution » on Shared

H-STORE: A High-Performance, Distributed Main Memory Transaction Processing System

Robert Kallman, Andrew Pavlo, Evan P. C. Jones, Michael Stonebraker, Ryan Betts, John Hugg, Daniel J. Abadi, Jonathan Natkins, Alexander Rasin, Samuel Madden, Yang Zhang, Bobbi Heath, Hideaki Kimura, Stanley Zdonik, Michael McCanna

Overview

» Many OLTP databases have common properties:

- Repetitive execution of short-lived transactions.
- Entire database fits in main memory of a cluster.
- Data naturally partitions on keys (e.g., customer ids, warehouse ids)
- Individual transaction invocations only touch a small subset of data.
- Applications invoke pre-defined stored procedures consisting of structured control code intermixed with parameterized SQL commands.

» The development of H-Store facilitates research into exploiting these non-trivial aspects of OLTP systems.

System Design

» H-Store is a new clean-slate design:

- Main-memory row storage.
- Designed for multi-core machines.
- One execution thread per database partition.
- Distributed operation on shared-nothing clusters.

» Replication

[Figure: System Architecture, split into Deployment Time and Runtime. Labeled components include: OLTP Application, Schema Information, Stored Procedures, Sample Workload, Deployment Framework, Database Designer, Query Planner/Optimizer, Compiled Stored Procedures, Query Plans, Physical Layout, System Catalogs, Database Cluster, H-Store API, Transaction Initiator, Messaging Fabric, Transaction Manager, Stored Procedure Executor, Query Execution Engine, Main Memory Storage Manager, Other Cluster Execution Nodes.]

P-Store: an Elastic Database System with Predictive Provisioning

Industry 1: Adaptive Query Processing. SIGMOD’18, June 10-15, 2018, Houston, TX, USA

P-Store: An Elastic Database System with Predictive Provisioning

Rebecca Taft∗, Nosayba El-Sayed†, Yu Lu, Marco Serafini, Ashraf Aboulnaga, Michael Stonebraker, Ricardo Mayerhofer, Francisco Andrade. Affiliations listed: MIT; University of Illinois Urbana-Champaign; Qatar Computing Research Institute - HBKU; B2W Digital.

Abstract: OLTP database systems are a critical part of the operation of many enterprises. Such systems are often configured statically with sufficient capacity for peak load. For many OLTP applications, however, the maximum load is an order of magnitude larger than the minimum, and load varies in a repeating daily pattern. It is thus prudent to allocate computing resources dynamically to match demand. One can allocate resources reactively after a load increase is detected, but this places additional burden on the already-overloaded system to reconfigure. A predictive allocation, in advance of load increases, is clearly preferable. We present P-Store, the first elastic OLTP DBMS to use prediction, and apply it to the workload of B2W Digital (B2W), a large online retailer.

[Figure 1: Load on one of B2W’s databases over three days. Load peaks during daytime hours and dips at night. Axes: Load (requests/min) against Time (min).]

1 Introduction: Large, modern OLTP applications increasingly store their data in

Faster: a Concurrent Key-Value Store with In-Place Updates

Faster: A Concurrent Key-Value Store with In-Place Updates

Badrish Chandramouli†, Guna Prasaad‡∗, Donald Kossmann†, Justin Levandoski†, James Hunter†, Mike Barnett†. †Microsoft Research, ‡University of Washington. {donaldk, justin.levandoski, jahunter, mbarnett}@microsoft.com

Abstract: Over the last decade, there has been a tremendous growth in data-intensive applications and services in the cloud. Data is created on a variety of edge sources, e.g., devices, browsers, and servers, and processed by cloud applications to gain insights or take decisions. Applications and services either work on collected data, or monitor and process data in real time. These applications are typically update intensive and involve a large amount of state beyond what can fit in main memory. However, they display significant temporal locality in their access pattern. This paper presents Faster, a new key-value store for point read, blind update, and read-modify-write operations. Faster combines a highly cache-optimized concurrent hash index with a hybrid log: a concurrent log-structured record store that spans main memory and storage, while supporting fast

1.1 Challenges and Existing Solutions: State management is an important problem for all these applications and services. It exhibits several unique characteristics:

- Large State: The amount of state accessed by some applications can be very large, far exceeding the capacity of main memory. For example, a targeted search ads provider may maintain per-user, per-ad and clickthrough-rate statistics for billions of users. Also, it is often cheaper to retain state that is infrequently accessed on secondary storage [18], even when it fits in memory.
- Update Intensity: While reads and inserts are common, there are applications with significant update traffic. For example, a monitoring application receiving millions of CPU readings every second from sensors and devices may need to update a per-device aggregate for each reading.