Lady Gaga Sings


Recommended publications

Touchstones of Popular Culture Among Contemporary College Students in the United States

Minnesota State University Moorhead RED: a Repository of Digital Collections Dissertations, Theses, and Projects Graduate Studies Spring 5-17-2019 Touchstones of Popular Culture Among Contemporary College Students in the United States Margaret Thoemke [email protected] Follow this and additional works at: https://red.mnstate.edu/thesis Part of the Higher Education and Teaching Commons Recommended Citation Thoemke, Margaret, "Touchstones of Popular Culture Among Contemporary College Students in the United States" (2019). Dissertations, Theses, and Projects. 167. https://red.mnstate.edu/thesis/167 This Thesis (699 registration) is brought to you for free and open access by the Graduate Studies at RED: a Repository of Digital Collections. It has been accepted for inclusion in Dissertations, Theses, and Projects by an authorized administrator of RED: a Repository of Digital Collections. For more information, please contact [email protected]. Touchstones of Popular Culture Among Contemporary College Students in the United States A Thesis Presented to The Graduate Faculty of Minnesota State University Moorhead By Margaret Elizabeth Thoemke In Partial Fulfillment of the Requirements for the Degree of Master of Arts in Teaching English as a Second Language May 2019 Moorhead, Minnesota iii Copyright 2019 Margaret Elizabeth Thoemke iv Dedication I would like to dedicate this thesis to my three most favorite people in the world. To my mother, Heather Flaherty, for always supporting me and guiding me to where I am today. To my husband, Jake Thoemke, for pushing me to be the best I can be and reminding me that I’m okay. Lastly, to my son, Liam, who is my biggest fan and my reason to be the best person I can be. -

In the High Court of New Zealand Wellington Registry

IN THE HIGH COURT OF NEW ZEALAND WELLINGTON REGISTRY I TE KŌTI MATUA O AOTEAROA TE WHANGANUI-Ā-TARA ROHE CIV-2014-485-11220 [2017] NZHC 2603 UNDER The Copyright Act 1994 EIGHT MILE STYLE, LLC BETWEEN First Plaintiff MARTIN AFFILIATED, LLC Second Plaintiff NEW ZEALAND NATIONAL PARTY AND First Defendant GREG HAMILTON Second Defendant STAN 3 LIMITED AND First Third Party SALE STREET STUDIOS LIMITED Second Third Party Continued Hearing: 1–8 May 2017 and 11–12 May 2017 Appearances: G C Williams, A M Simpson and C M Young for plaintiffs G F Arthur, G M Richards and P T Kiely for defendants A J Holmes for second third party T P Mullins and C I Hadlee for third and fourth third parties L M Kelly for fifth third party R K P Stewart for fourth party No appearance for fifth party Judgment: 25 October 2017 JUDGMENT OF CULL J EIGHT MILE STYLE v NEW ZEALAND NATIONAL PARTY [2017] NZHC 2603 [25 October 2017] AND AMCOS NEW ZEALAND LIMITED Third Third Party AUSTRALASIAN MECHANICAL COPYRIGHT OWNERS SOCIETY LIMITED Fourth Third Party BEATBOX MUSIC PTY LIMITED Fifth Third Party AND LABRADOR ENTERTAINMENT INC Fourth Party AND MICHAEL ALAN COHEN Fifth Party INDEX The musical works ............................................................................................................................. [8] Lose Yourself .................................................................................................................................. [9] Eminem Esque ............................................................................................................................. -

Eminem Interview Download

Eminem interview download LINK TO DOWNLOAD UPDATE 9/14 - PART 4 OUT NOW. Eminem sat down with Sway for an exclusive interview for his tenth studio album, Kamikaze. Stream/download Kamikaze HERE.. Part 4. Download eminem-interview mp3 – Lost In London (Hosted By DJ Exclusive) of Eminem - renuzap.podarokideal.ru Eminem X-Posed: The Interview Song Download- Listen Eminem X-Posed: The Interview MP3 song online free. Play Eminem X-Posed: The Interview album song MP3 by Eminem and download Eminem X-Posed: The Interview song on renuzap.podarokideal.ru 19 rows · Eminem Interview Title: date: source: Eminem, Back Issues (Cover Story) Interview: . 09/05/ · Lil Wayne has officially launched his own radio show on Apple’s Beats 1 channel. On Friday’s (May 8) episode of Young Money Radio, Tunechi and Eminem Author: VIBE Staff. 07/12/ · EMINEM: It was about having the right to stand up to oppression. I mean, that’s exactly what the people in the military and the people who have given their lives for this country have fought for—for everybody to have a voice and to protest injustices and speak out against shit that’s wrong. Eminem interview with BBC Radio 1 () Eminem interview with MTV () NY Rock interview with Eminem - "It's lonely at the top" () Spin Magazine interview with Eminem - "Chocolate on the inside" () Brian McCollum interview with Eminem - "Fame leaves sour aftertaste" () Eminem Interview with Music - "Oh Yes, It's Shady's Night. Eminem will host a three-hour-long special, “Music To Be Quarantined By”, Apr 28th Eminem StockX Collab To Benefit COVID Solidarity Response Fund. -

Focus 2020 Pioneering Women Composers of the 20Th Century

Focus 2020 Trailblazers Pioneering Women Composers of the 20th Century The Juilliard School presents 36th Annual Focus Festival Focus 2020 Trailblazers: Pioneering Women Composers of the 20th Century Joel Sachs, Director Odaline de la Martinez and Joel Sachs, Co-curators TABLE OF CONTENTS 1 Introduction to Focus 2020 3 For the Benefit of Women Composers 4 The 19th-Century Precursors 6 Acknowledgments 7 Program I Friday, January 24, 7:30pm 18 Program II Monday, January 27, 7:30pm 25 Program III Tuesday, January 28 Preconcert Roundtable, 6:30pm; Concert, 7:30pm 34 Program IV Wednesday, January 29, 7:30pm 44 Program V Thursday, January 30, 7:30pm 56 Program VI Friday, January 31, 7:30pm 67 Focus 2020 Staff These performances are supported in part by the Muriel Gluck Production Fund. Please make certain that all electronic devices are turned off during the performance. The taking of photographs and use of recording equipment are not permitted in the auditorium. Introduction to Focus 2020 by Joel Sachs The seed for this year’s Focus Festival was planted in December 2018 at a Juilliard doctoral recital by the Chilean violist Sergio Muñoz Leiva. I was especially struck by the sonata of Rebecca Clarke, an Anglo-American composer of the early 20th century who has been known largely by that one piece, now a staple of the viola repertory. Thinking about the challenges she faced in establishing her credibility as a professional composer, my mind went to a group of women in that period, roughly 1885 to 1930, who struggled to be accepted as professional composers rather than as professional performers writing as a secondary activity or as amateur composers. -

Leonard Bernstein's MASS

Season 2014-2015 The Philadelphia Orchestra Thursday, April 30, at 8:00 Friday, May 1, at 8:00 Saturday, May 2, at 8:00 Sunday, May 3, at 2:00 Leonard Bernstein’s MASS: A Theatre Piece for Singers, Players, and Dancers* Conducted by Yannick Nézet-Séguin Texts from the liturgy of the Roman Mass Additional texts by Stephen Schwartz and Leonard Bernstein For a list of performing and creative artists please turn to page 30. *First complete Philadelphia Orchestra performances This program runs approximately 1 hour, 50 minutes, and will be performed without an intermission. These performances are made possible in part by the generous support of the William Penn Foundation and the Andrew W. Mellon Foundation. Additional support has been provided by the Presser Foundation. I. Devotions before Mass 1. Antiphon: Kyrie eleison 2. Hymn and Psalm: “A Simple Song” 3. Responsory: Alleluia II. First Introit (Rondo) 1. Prefatory Prayers 2. Thrice-Triple Canon: Dominus vobiscum III. Second Introit 1. In nomine Patris 2. Prayer for the Congregation (Chorale: “Almighty Father”) 3. Epiphany IV. Confession 1. Confiteor 2. Trope: “I Don’t Know” 3. Trope: “Easy” V. Meditation No. 1 VI. Gloria 1. Gloria tibi 2. Gloria in excelsis 3. Trope: “Half of the People” 4. Trope: “Thank You” VII. Meditation No. 2 VIII. Epistle: “The Word of the Lord” IX. Gospel-Sermon: “God Said” X. Credo 1. Credo in unum Deum 2. Trope: “Non Credo” 3. Trope: “Hurry” 4. Trope: “World without End” 5. Trope: “I Believe in God” XI. Meditation No. 3 (De profundis, part 1) XII. -

5 Ferndale Studios at Epicenter of Detroit's Music Recording Industry

5 Ferndale studios at epicenter of Detroit’s music recording industry http://www.freep.com/article/20140119/ENT04/301190085/fern... 10:10 AM, January 19, 2014 By Brian McCollum, Detroit Free Press Pop Music Writer Photo: Vintage King Audio technician Jeff Spatafora looks over a refurbished Neve 8078 audio mixer at its warehouse in Ferndale. Vintage King specializes in providing vintage equipment to studios across the country. / Jarrad Henderson/Detroit Free Press Nestled along a half-mile stretch in Ferndale, among the rugged industrial shops and gray warehouses, is the bustling epicenter of Detroit’s music recording scene. Most of the thousands who drive every day through this area by 9 Mile and Hilton probably don’t even realize it’s there: a Jan. -

Due to Overwhelming Demand Jay-Z and Eminem's Historic

DUE TO OVERWHELMING DEMAND JAY-Z AND EMINEM’S HISTORIC “HOME AND HOME” TOUR ADDS ADDITIONAL SHOWS IN DETROIT AND NEW YORK TICKETS GO ON SALE FRIDAY, JUNE 25TH Tickets to EMINEM and JAY-Z’s historic “Home And Home” tour went on sale today to presale partners with overwhelming fan demand. In response to the tremendous demand, and in preparation for tomorrow’s public on-sale, another show has been added in Detroit at Comerica Park on Friday, September 3rd and in New York at Yankee Stadium on Tuesday, September 14th. This is the only time these two superstars will perform together. Tickets for all four shows, produced by Live Nation, go on sale to the public on Friday, June 25 at 10:00 am local time at LiveNation.com. JAY-Z AND EMINEM’S “HOME AND HOME” TOUR DATES INCLUDE: September 2 Detroit, MI Comerica Park September 3 Detroit, MI Comerica Park September 13 New York, NY Yankee Stadium September 14 New York, NY Yankee Stadium. The same day that tickets go on sale, EMINEM and JAY-Z take over late night television in support of the tour when they perform together on the roof of the Ed Sullivan Theater building on LATE SHOW with DAVID LETTERMAN, taped Monday, June 21 to be broadcast Friday, June 25 (11:35 PM-12:37 AM, ET/PT) on the CBS Television Network. Since 1996, 10-time Grammy award-winner, Shawn “JAY-Z” Carter has dominated the rap industry and set trends for a generation. Through his own label, Roc Nation, JAY-Z released The Blueprint 3, his 11th number one album, putting him ahead of Elvis for the most number one albums by any solo artist. -

'Elton!' to Launch on SIRIUS XM Radio

'Elton!' to Launch on SIRIUS XM Radio --Limited-run music channel coincides with Elton John and Leon Russell's "The Union" release --"Elton!" to feature exclusive interview with Elton John and Leon Russell conducted by director Cameron Crowe -- Listeners to hear seven days of music spanning Elton John's career --Robert Downey Jr. and Andy Roddick to guest DJ on the channel NEW YORK, Oct 14, 2010 /PRNewswire via COMTEX News Network/ -- SIRIUS XM Radio (Nasdaq: SIRI) announced today that it will launch "Elton!," a seven-day long, commercial-free music channel featuring music, interviews and special guest DJ sessions celebrating the release of Elton John and Leon Russell's new album, The Union. (Logo: http://photos.prnewswire.com/prnh/20101014/NY82093LOGO ) (Logo: http://www.newscom.com/cgi-bin/prnh/20101014/NY82093LOGO ) The limited-run channel will launch on Friday, October 15 at 3:00 pm ET and will run until Friday, October 22 at 3:00 am ET on SIRIUS channel 33 and XM channel 27. "Elton!" will feature music spanning Elton John's prolific and award-winning career as well as music from Leon Russell's storied music past. Listeners will hear an interview with Elton John and Leon Russell conducted by director Cameron Crowe, during which Elton will talk at length about The Union, scheduled to be released on Decca Records on October 19, as well as various highlights from his 41-year career. "Elton!" will also feature interviews with T Bone Burnett, who produced The Union, and singer, songwriter and long-time Elton John collaborator, Bernie Taupin. -

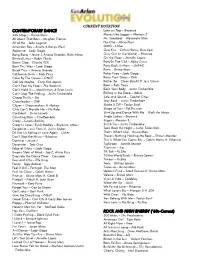

Front of House Master Song List

CURRENT ROTATION CONTEMPORARY DANCE Love on Top – Beyoncé 24K Magic – Bruno Mars Moves Like Jagger – Maroon 5 All About That Bass – Meghan Trainor Mr. Saxobeat – Alexandra Stan All of Me – John Legend No One – Alicia Keys American Boy – Estelle & Kanye West OMG – Usher Applause – Lady Gaga One Kiss – Calvin Harris, Dua Lipa Bang Bang – Jessie J, Ariana Grande, Nicki Minaj Only Girl (in the World) – Rihanna Blurred Lines – Robin Thicke On the Floor – Jennifer Lopez Boom Clap – Charli XCX Party In The USA – Miley Cyrus Born This Way – Lady Gaga Party Rock Anthem – LMFAO Break Free – Ariana Grande Perm – Bruno Mars California Gurls – Katy Perry Poker Face – Lady Gaga Cake By The Ocean – DNCE Raise Your Glass – Pink Call Me Maybe – Carly Rae Jepsen Rather Be – Clean Bandit ft. Jess Glynne Can’t Feel My Face – The Weeknd Roar – Katy Perry Can’t Hold Us – Macklemore & Ryan Lewis Rock Your Body – Justin Timberlake Can’t Stop The Feeling – Justin Timberlake Rolling in the Deep – Adele Cheap Thrills – Sia Safe and Sound – Capital Cities Cheerleader – OMI SexyBack – Justin Timberlake Closer – Chainsmokers ft. Halsey Shake It Off – Taylor Swift Club Can’t Handle Me – Flo Rida Shape of You – Ed Sheeran Confident – Demi Lovato Shut Up and Dance With Me – Walk the Moon Counting Stars – OneRepublic Single Ladies – Beyoncé Crazy – Gnarls Barkley Sugar – Maroon 5 Crazy In Love / Funk Medley – Beyoncé, others Suit & Tie – Justin Timberlake Despacito – Luis Fonsi ft. Justin Bieber Take Back the Night – Justin Timberlake DJ Got Us Falling in Love Again – Usher That’s What I Like – Bruno Mars Don’t Stop the Music – Rihanna There’s Nothing Holding Me Back – Shawn Mendes Domino – Jessie J This Is What You Came For – Calvin Harris ft. -

Eminem

Eminem performing live at the DJ Hero Party in Los Angeles, June 1, 2009. Background information: Birth name: Marshall Bruce Mathers III. Born: October 17, 1972, Saint Joseph, Missouri, U.S. Origin: Warren, Michigan, U.S. Genres: Hip hop. Occupations: Rapper, record producer, actor, songwriter. Years active: 1995–present. Labels: Interscope, Aftermath. Associated acts: Dr. Dre, D12, Royce da 5'9", 50 Cent, Obie Trice. Website: www.eminem.com. Marshall Bruce Mathers III (born October 17, 1972),[1] better known by his stage name Eminem, is an American rapper, record producer, and actor. Eminem quickly gained popularity in 1999 with his major-label debut album, The Slim Shady LP, which won a Grammy Award for Best Rap Album. The following album, The Marshall Mathers LP, became the fastest-selling solo album in United States history.[2] It brought Eminem increased popularity, including his own record label, Shady Records, and brought his group project, D12, to mainstream recognition. The Marshall Mathers LP and his third album, The Eminem Show, also won Grammy Awards, making Eminem the first artist to win Best Rap Album for three consecutive LPs. He then won the award again in 2010 for his album Relapse and in 2011 for his album Recovery, giving him a total of 13 Grammys in his career. In 2003, he won the Academy Award for Best Original Song for "Lose Yourself" from the film 8 Mile, in which he also played the lead. "Lose Yourself" would go on to become the longest running No. 1 hip hop single.[3] Eminem then went on hiatus after touring in 2005. -

News Release

For Immediate Release NEWS RELEASE TENTH ANNIVERSARY MUSICARES MAP FUND® BENEFIT CONCERT TO HONOR GRAMMY®-WINNING SINGER/SONGWRITER OZZY OSBOURNE AND OWNER/CEO OF THE VILLAGE STUDIOS JEFF GREENBERG ON MAY 12 TO RAISE FUNDS FOR MUSICARES®' ADDICTION RECOVERY SERVICES Mix Master Mike Will DJ In Memory Of DJ AM Performance By Osbourne With Special Guest Slash On Guitar SANTA MONICA, Calif. (March 3, 2014) — The 10th anniversary MusiCares MAP Fund® benefit concert will honor Black Sabbath's GRAMMY®-winning singer/songwriter Ozzy Osbourne, and owner/CEO of the Village studios Jeff Greenberg. Taking place at Club Nokia in Los Angeles on May 12, Osbourne will be honored with the Stevie Ray Vaughan Award for his dedication and support of the MusiCares MAP Fund. He is being recognized for his commitment to helping other addicts with the addiction recovery process. Greenberg will be the recipient of MusiCares®' From the Heart Award for his unconditional friendship and dedication to the mission and goals of the organization. All proceeds will benefit the MusiCares MAP Fund, which provides members of the music community access to addiction recovery treatment regardless of their financial situation. Continuing to celebrate the memory of DJ AM, the evening's DJ will be Mix Master Mike. There will also be a performance by Osbourne and his touring band with special guest Slash on guitar. Additional performers will be announced shortly. "Ten years ago MusiCares merged with the Musicians' Assistance Program, originally founded by Buddy Arnold, to create the MusiCares MAP Fund," said Neil Portnow, President/CEO of The Recording Academy® and MusiCares. -

Album the Eminem Show

Album The Eminem Show Curtains Up (Skit) White America Business Cleanin Out My Closet Square Dance The Kiss (skit) Soldier Say Goodbye Hollywood Drips Without Me Paul Rosenberg (Skit) Sing For The Moment Superman Hailie's Song Steve Berman When The Music Stops Say What You Say Till I Collapse My Dad's Gone Crazy Curtain's Close Curtains Up (Skit) - still not avalaible White America Intro America hahaha We love you How many people are proud to be citizens of this beautiful country of ours? The stripes and the stars for the rights of men who have died for the protect? The women and men who have broke their necks for the freedem of speech the United States Government has sworn to uphold Yo, I want everyone to listen to the words of this song Or so we're told... Verse #1 I never woulda dreamed in a million years id see so many mutha fuckin people who feel like me Who share the same views And the same exact beliefs Its like a fuckin army marchin in back of me So many lives i touched So much anger aimed at no perticular direction Just sprays and sprays Straight through your radio wavs It plays and plays Till it stays stuck in your head For days and days who woulda thought standin in this mirror Bleachin my hair wit some Peroxide Reachin for a T shirt to wear That i would catipult to the fore-front of rap like this How can i predict my words And have an impact like this I musta struck a chord wit somebody up in the office Cuz congress keeps tellin me I aint causin nuttin but problems And now they sayin im in trouble wit the government Im lovin