A Note on a Matrix Inequality for Generalized Means

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

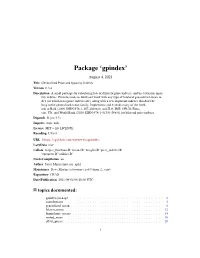

Gpindex: Generalized Price and Quantity Indexes

Package ‘gpindex’, August 4, 2021

Title: Generalized Price and Quantity Indexes
Version: 0.3.4
Description: A small package for calculating lots of different price indexes, and by extension quantity indexes. Provides tools to build and work with any type of bilateral generalized-mean index (of which most price indexes are), along with a few important indexes that don't belong to the generalized-mean family. Implements and extends many of the methods in Balk (2008, ISBN:978-1-107-40496-0) and ILO, IMF, OECD, Eurostat, UN, and World Bank (2020, ISBN:978-1-51354-298-0) for bilateral price indexes.
Depends: R (>= 3.5)
Imports: stats, utils
License: MIT + file LICENSE
Encoding: UTF-8
URL: https://github.com/marberts/gpindex
LazyData: true
Collate: 'helper_functions.R' 'means.R' 'weights.R' 'price_indexes.R' 'operators.R' 'utilities.R'
NeedsCompilation: no
Author: Steve Martin [aut, cre, cph]
Maintainer: Steve Martin <[email protected]>
Repository: CRAN
Date/Publication: 2021-08-04 06:10:06 UTC

R topics documented: gpindex-package, contributions, generalized_mean, lehmer_mean, logarithmic_means, nested_mean, offset_prices, operators, outliers, price_data, price_index, transform_weights, Index.

Linear Discriminant Analysis Using a Generalized Mean of Class Covariances and Its Application to Speech Recognition

IEICE TRANS. INF. & SYST., VOL.E91–D, NO.3 MARCH 2008, p. 478. PAPER, Special Section on Robust Speech Processing in Realistic Environments. Linear Discriminant Analysis Using a Generalized Mean of Class Covariances and Its Application to Speech Recognition. Makoto SAKAI, Norihide KITAOKA, Members, and Seiichi NAKAGAWA, Fellow.

SUMMARY: To precisely model the time dependency of features is one of the important issues for speech recognition. Segmental unit input HMM with a dimensionality reduction method has been widely used to address this issue. Linear discriminant analysis (LDA) and heteroscedastic extensions, e.g., heteroscedastic linear discriminant analysis (HLDA) or heteroscedastic discriminant analysis (HDA), are popular approaches to reduce dimensionality. However, it is difficult to find one particular criterion suitable for any kind of data set in carrying out dimensionality reduction while preserving discriminative information. In this paper, we propose a new framework which we call power linear discriminant analysis (PLDA). PLDA can be used to describe various criteria including LDA, HLDA, and HDA with one control parameter. In addition, we provide an efficient selection method using a control parameter without training HMMs nor testing recognition performance on a development data set.

[...] deal with unequal covariances because the maximum likelihood estimation was used to estimate parameters for different Gaussians with unequal covariances [9]. Heteroscedastic discriminant analysis (HDA) was proposed as another objective function which employed individual weighted contributions of the classes [10]. The effectiveness of these methods for some data sets has been experimentally demonstrated. However, it is difficult to find one particular criterion suitable for any kind of data set. In this paper we show that these three methods have a strong mutual relationship, and provide a new [...]

Unsupervised Machine Learning for Pathological Radar Clutter Clustering: the P-Mean-Shift Algorithm Yann Cabanes, Frédéric Barbaresco, Marc Arnaudon, Jérémie Bigot

To cite this version: Yann Cabanes, Frédéric Barbaresco, Marc Arnaudon, Jérémie Bigot. Unsupervised Machine Learning for Pathological Radar Clutter Clustering: the P-Mean-Shift Algorithm. C&ESAR 2019, Nov 2019, Rennes, France. hal-02875430

HAL Id: hal-02875430, https://hal.archives-ouvertes.fr/hal-02875430. Submitted on 19 Jun 2020.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Unsupervised Machine Learning for Pathological Radar Clutter Clustering: the P-Mean-Shift Algorithm. Yann Cabanes¹,², Frédéric Barbaresco¹, Marc Arnaudon², and Jérémie Bigot². ¹ Thales LAS, Advanced Radar Concepts, Limours, FRANCE, [email protected]; [email protected]. ² Institut de Mathématiques de Bordeaux, Bordeaux, FRANCE, [email protected]; [email protected]

Abstract. This paper deals with unsupervised radar clutter clustering to characterize pathological clutter based on their Doppler fluctuations. Operationally, being able to recognize pathological clutter environments may help to tune radar parameters to regulate the false alarm rate. This request will be more important for new generation radars that will be more mobile and should process data on the move.

Hydrologic and Mass-Movement Hazards Near Mccarthy Wrangell-St

Hydrologic and Mass-Movement Hazards near McCarthy, Wrangell-St. Elias National Park and Preserve, Alaska. By Stanley H. Jones and Roy L. Glass. U.S. GEOLOGICAL SURVEY Water-Resources Investigations Report 93-4078. Prepared in cooperation with the NATIONAL PARK SERVICE. Anchorage, Alaska, 1993.

U.S. DEPARTMENT OF THE INTERIOR, BRUCE BABBITT, Secretary. U.S. GEOLOGICAL SURVEY, ROBERT M. HIRSCH, Acting Director.

For additional information write to: District Chief, U.S. Geological Survey, 4230 University Drive, Suite 201, Anchorage, Alaska 99508-4664. Copies of this report may be purchased from: U.S. Geological Survey, Earth Science Information Center, Open-File Reports Section, Box 25286, MS 517, Denver Federal Center, Denver, Colorado 80225.

CONTENTS: Abstract; Introduction (Purpose and scope; Acknowledgments); Hydrology and climate; Geology and geologic hazards (Bedrock; Unconsolidated materials: Alluvial and glacial deposits, Moraines, Landslides, Talus).

Generalized Mean Field Approximation For

Generalized mean field approximation for parallel dynamics of the Ising model. Hamed Mahmoudi and David Saad, Non-linearity and Complexity Research Group, Aston University, Birmingham B4 7ET, United Kingdom.

The dynamics of non-equilibrium Ising model with parallel updates is investigated using a generalized mean field approximation that incorporates multiple two-site correlations at any two time steps, which can be obtained recursively. The proposed method shows significant improvement in predicting local system properties compared to other mean field approximation techniques, particularly in systems with symmetric interactions. Results are also evaluated against those obtained from Monte Carlo simulations. The method is also employed to obtain parameter values for the kinetic inverse Ising modeling problem, where couplings and local fields values of a fully connected spin system are inferred from data. PACS numbers: 64.60.-i, 68.43.De, 75.10.Nr, 24.10.Ht

I. INTRODUCTION

Statistical physics of infinite-range Ising models has been extensively studied during the last four decades [1–3]. Various methods such as the replica and cavity methods have been devised to study the macroscopic properties of Ising systems with long range random interactions at equilibrium. Other mean field approximations that have been successfully used to study the infinite-range Ising model are naive mean field and the TAP approximation [2, 4]; both are also applicable to a broad range of problems [5, 6]. While equilibrium properties of Ising systems are well understood, analysing their dynamics is still challenging and is not completely solved. The root of the problem is the multi-time statistical dependencies between time steps manifested in the corresponding correlation and response functions.

Unsupervised Machine Learning for Pathological Radar Clutter Clustering: the P-Mean-Shift Algorithm

Unsupervised Machine Learning for Pathological Radar Clutter Clustering: the P-Mean-Shift Algorithm. Yann Cabanes¹,², Frédéric Barbaresco¹, Marc Arnaudon², and Jérémie Bigot². ¹ Thales LAS, Advanced Radar Concepts, Limours, FRANCE, [email protected]; [email protected]. ² Institut de Mathématiques de Bordeaux, Bordeaux, FRANCE, [email protected]; [email protected]

Abstract. This paper deals with unsupervised radar clutter clustering to characterize pathological clutter based on their Doppler fluctuations. Operationally, being able to recognize pathological clutter environments may help to tune radar parameters to regulate the false alarm rate. This request will be more important for new generation radars that will be more mobile and should process data on the move. We first introduce the radar data structure and explain how it can be coded by Toeplitz covariance matrices. We then introduce the manifold of Toeplitz covariance matrices and the associated metric coming from information geometry. We have adapted the classical k-means algorithm to the Riemannian manifold of Toeplitz covariance matrices in [1], [2]; the mean-shift algorithm is presented in [3], [4]. We present here a new clustering algorithm based on the p-mean definition in a Riemannian manifold and the mean-shift algorithm.

Keywords: radar clutter · machine learning · unsupervised classification · p-mean-shift · autocorrelation matrix · Burg algorithm · reflection coefficients · Kähler metric · Tangent Principal Components Analysis · Capon spectra.

1 Introduction

Radar installation on a new geographical site is long and costly. We would like to shorten the time of deployment by recognizing automatically pathological clutters with past known diagnosed cases.

The Generalized Mean Generalized Mean Inequalities

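Generalized Means, a.cyclohexane.molecule

The Generalized Mean

Consider the generalized mean for non-negative $x_i$

$$M(p; x_1, \ldots, x_n) := \left( \frac{1}{n} \sum_{i=1}^{n} x_i^p \right)^{1/p}$$

whose name becomes self-explanatory when we consider the six cases

$$\begin{aligned}
M(-1; x_1, \ldots, x_n) &= \left( \frac{1}{n} \sum_{i=1}^{n} x_i^{-1} \right)^{-1} = \frac{n}{x_1^{-1} + \cdots + x_n^{-1}} && \text{harmonic mean} \\
M(0; x_1, \ldots, x_n) &= \lim_{p \to 0} \left( \frac{1}{n} \sum_{i=1}^{n} x_i^p \right)^{1/p} = (x_1 \cdots x_n)^{1/n} && \text{geometric mean} \\
M(1; x_1, \ldots, x_n) &= \frac{1}{n} \sum_{i=1}^{n} x_i = \frac{x_1 + \cdots + x_n}{n} && \text{arithmetic mean} \\
M(2; x_1, \ldots, x_n) &= \left( \frac{1}{n} \sum_{i=1}^{n} x_i^2 \right)^{1/2} = \sqrt{\frac{x_1^2 + \cdots + x_n^2}{n}} && \text{quadratic mean} \\
M(-\infty; x_1, \ldots, x_n) &= \lim_{p \to -\infty} \left( \frac{1}{n} \sum_{i=1}^{n} x_i^p \right)^{1/p} = \min\{x_1, \ldots, x_n\} && \text{minimum} \\
M(\infty; x_1, \ldots, x_n) &= \lim_{p \to \infty} \left( \frac{1}{n} \sum_{i=1}^{n} x_i^p \right)^{1/p} = \max\{x_1, \ldots, x_n\} && \text{maximum}
\end{aligned}$$

The three cases involving limits are somewhat more difficult to evaluate. In these cases, the calculations are simplified by first considering the natural logarithm of the limit and hence applying L'Hôpital's rule.

$$\log L_0 = \lim_{p \to 0} \frac{\log\big((x_1^p + \cdots + x_n^p)/n\big)}{p} = \lim_{p \to 0} \frac{x_1^p \log x_1 + \cdots + x_n^p \log x_n}{x_1^p + \cdots + x_n^p} = \frac{\log (x_1 \cdots x_n)}{n}$$

$$\log L_\infty = \lim_{p \to \infty} \frac{1}{p} \log \frac{x_1^p + \cdots + x_n^p}{n} = \log x_k + \lim_{p \to \infty} \frac{1}{p} \log \left[ \frac{1}{n} \left( \left(\frac{x_1}{x_k}\right)^p + \cdots + 1 + \cdots + \left(\frac{x_n}{x_k}\right)^p \right) \right] = \log x_k$$

$$L_{-\infty} = M\!\left(\infty; \frac{1}{x_1}, \ldots, \frac{1}{x_n}\right)^{-1} = \max\left\{\frac{1}{x_1}, \ldots, \frac{1}{x_n}\right\}^{-1} = \min\{x_1, \ldots, x_n\}$$

The first case is simple.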
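As a rough illustration of the six cases listed in this excerpt, the following Python sketch evaluates the generalized mean for a given exponent p. The function name `power_mean` is hypothetical and does not come from the excerpt, and the sketch assumes strictly positive inputs so that the cases with p ≤ 0 are well defined; the three limiting cases are handled by their closed forms rather than by taking a numerical limit.

```python
import math


def power_mean(xs, p):
    """Generalized (power) mean M(p; x_1, ..., x_n) of positive numbers.

    Hypothetical helper for illustration only; not numerically hardened
    (very large |p| can overflow in floating point).
    """
    xs = list(xs)
    if not xs:
        raise ValueError("need at least one value")
    if any(x <= 0 for x in xs):
        # Assumption of this sketch: strictly positive inputs.
        raise ValueError("this sketch assumes strictly positive values")
    n = len(xs)
    if p == 0:
        # Limit p -> 0: geometric mean, computed through logarithms.
        return math.exp(sum(math.log(x) for x in xs) / n)
    if p == math.inf:
        # Limit p -> +infinity: maximum.
        return max(xs)
    if p == -math.inf:
        # Limit p -> -infinity: minimum.
        return min(xs)
    # General case: ((1/n) * sum of x_i^p)^(1/p).
    return (sum(x ** p for x in xs) / n) ** (1.0 / p)


if __name__ == "__main__":
    data = [1.0, 2.0, 4.0]
    for p in (-math.inf, -1, 0, 1, 2, math.inf):
        print(p, power_mean(data, p))
```

Running the loop on a small data set makes the ordering of the six cases visible: the value grows from the minimum through the harmonic, geometric, arithmetic and quadratic means up to the maximum.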

Information to Users

INFORMATION TO USERS

This manuscript has been reproduced from the microfilm master. UMI films the text directly from the original or copy submitted. Thus, some thesis and dissertation copies are in typewriter face, while others may be from any type of computer printer. The quality of this reproduction is dependent upon the quality of the copy submitted. Broken or indistinct print, colored or poor quality illustrations and photographs, print bleedthrough, substandard margins, and improper alignment can adversely affect reproduction. In the unlikely event that the author did not send UMI a complete manuscript and there are missing pages, these will be noted. Also, if unauthorized copyright material had to be removed, a note will indicate the deletion. Oversize materials (e.g., maps, drawings, charts) are reproduced by sectioning the original, beginning at the upper left-hand corner and continuing from left to right in equal sections with small overlaps. Each original is also photographed in one exposure and is included in reduced form at the back of the book. Photographs included in the original manuscript have been reproduced xerographically in this copy. Higher quality 6” x 9” black and white photographic prints are available for any photographs or illustrations appearing in this copy for an additional charge. Contact UMI directly to order. UMI, A Bell & Howell Information Company, 300 North Zeeb Road, Ann Arbor MI 48106-1346 USA, 313/761-4700, 800/521-0600.

A CENTRAL LIMIT THEOREM FOR COMPLEX-VALUED PROBABILITIES. DISSERTATION Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University. By Natalia A.

Abstract the Question an Observation

Abstract. There are many notions of means; the arithmetic mean and geometric mean are quite familiar to many. There are also a few generalizations of these concepts, including the mean developed by V. M. Tikhomirov. However, these means fail to capture the general nature of means. A new definition is presented and it is shown that the Tikhomirov mean is a form of this mean.

The Question. While a mean is a powerful mathematical structure, it is not well defined. One common definition of a mean is a measure of center or a middle. V. M. Tikhomirov defines a generalized mean as follows. Given a continuous strictly monotone function $\phi$ and its inverse $\psi$, the mean of a sequence of values $\{x_1, x_2, \ldots, x_n\}$ is

$$M_n(x_1, x_2, \ldots, x_n) = \psi\!\left( \frac{\phi(x_1) + \phi(x_2) + \cdots + \phi(x_n)}{n} \right).$$

As Tikhomirov explains, the arithmetic mean, the geometric mean, the harmonic mean, and the root-mean-square are all forms of this generalized mean¹. However, this definition does not make the essence of the operation clear.

An Observation. To try to understand what is happening when we take the mean of a collection of values, consider the arithmetic mean. To calculate the mean of a sequence $X = \{x_1, x_2, \ldots, x_n\}$ we add all the values of the sequence together, and divide by the number of elements in the sequence:

$$\bar{X} = \frac{1}{n} \sum_{i=1}^{n} x_i.$$

What property does this value have? One property is that if we sum the elements of $X$, we end up with the same result that we would get if we replaced each element of $X$ with $\bar{X}$.
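The Tikhomirov definition quoted in this excerpt can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `quasi_arithmetic_mean` and the particular choices of ϕ and ψ below are not taken from the excerpt; they are the standard pairs that recover the four means it names, and the caller is trusted to pass a ψ that really is the inverse of ϕ on the data's range.

```python
import math


def quasi_arithmetic_mean(xs, phi, psi):
    """Return psi(mean of phi(x_i)), following the quoted definition.

    Hypothetical helper: phi must be continuous and strictly monotone on
    the range of xs, and psi must be its inverse; neither is checked here.
    """
    xs = list(xs)
    if not xs:
        raise ValueError("need at least one value")
    return psi(sum(phi(x) for x in xs) / len(xs))


if __name__ == "__main__":
    data = [1.0, 2.0, 4.0]
    # phi(x) = x, psi(y) = y: arithmetic mean
    print(quasi_arithmetic_mean(data, lambda x: x, lambda y: y))
    # phi = log, psi = exp: geometric mean (positive data only)
    print(quasi_arithmetic_mean(data, math.log, math.exp))
    # phi(x) = 1/x, psi(y) = 1/y: harmonic mean (nonzero data only)
    print(quasi_arithmetic_mean(data, lambda x: 1 / x, lambda y: 1 / y))
    # phi(x) = x^2, psi = sqrt: root-mean-square (non-negative data only)
    print(quasi_arithmetic_mean(data, lambda x: x * x, math.sqrt))
```

Passing ϕ and ψ as separate arguments keeps the sketch close to the wording of the definition; computing the inverse automatically would require extra assumptions about ϕ.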

Divergence Geometry

2015 Workshop on High-Dimensional Statistical Analysis, Dec. 11 (Friday) to 15 (Tuesday), Humanities and Social Sciences Center, Academia Sinica, Taiwan. Information Geometry and Spontaneous Data Learning. Shinto Eguchi, Institute of Statistical Mathematics, Japan. This talk is based on a joint work with Osamu Komori and Atsumi Ohara, University of Fukui.

Outline: Short review for Information geometry. Kolmogorov-Nagumo mean. -path in a function space. Generalized mean and variance. –divergence geometry. U-divergence geometry. Minimum U-divergence and density estimation.

A short review of IG: Nonparametric space. Space of statistics. Information geometry is discussed on the product space.

Bartlett's identity: Parametric model. Bartlett's first identity. Bartlett's second identity.

Metric and connections: Information metric. Mixture connection. Exponential connection. Rao (1945), Dawid (1975), Amari (1982).

Geodesic curves and surfaces in IG: m-geodesic curve, e-geodesic curve, m-geodesic surface, e-geodesic surface.

Kullback-Leibler (K-L) divergence: 1. KL divergence is the expected log-likelihood ratio. 2. Maximum likelihood is minimum KL divergence, Akaike (1974). 3. KL divergence induces the m-connection and e-connection, Eguchi (1983).

Pythagoras theorem: Amari-Nagaoka (2001).

Exponential model: Mean parameter. Amari (1982). Degenerated Bartlett identity.

Minimum KL leaf: Exponential model. Mean equal space.

Pythagoras foliation.

log + exp: Bartlett identities, KL-divergence, e-connection, e-geodesic, m-connection, m-geodesic, Pythagoras identity, exponential model, Pythagoras foliation, mean equal space.

Path geometry: { m-geodesic, e-geodesic, … }

Kolmogorov-Nagumo mean: K-N mean is for positive numbers.

K-N mean in Y: Def.

©2018 Mengmeng Zhu ALL RIGHTS RESERVED

SOFTWARE RELIABILITY MODELING AND ITS APPLICATIONS CONSIDERING FAULT DEPENDENCY AND ENVIRONMENTAL FACTORS, by MENGMENG ZHU. A dissertation submitted to the School of Graduate Studies, Rutgers, The State University of New Jersey, in partial fulfillment of the requirements for the degree of Doctor of Philosophy, Graduate Program in Industrial and Systems Engineering. Written under the direction of Hoang Pham. New Brunswick, New Jersey, OCTOBER 2018.

ABSTRACT OF THE DISSERTATION. Dissertation Director: Hoang Pham.

The increasing dependence of our modern society on software systems has driven the development of software product to be more competitive and time-consuming. At the same time, large-scale software development is still considered as a complex, effort consuming, and expensive activity, given the influence of the transitions in software development, which are the adoption of software product lines, software development globalization, and the adoption of software ecosystems. Hence, the consequence of software failures becomes costly, and even dangerous. Therefore, in this dissertation, we have not only integrated software practitioners’ opinions from a wide variety of industries, but also developed software reliability models by addressing different practical problems observed in software development practices. We first revisit 32 environmental factors affecting software reliability in single-release software development and compare with the findings 15 years ago [27, 28]. Later, we investigate the environmental factors affecting software reliability in multi-release software development and compare the impact of environmental factors between the development of multi-release and single-release software to provide a comprehensive analysis for software development practices.

Bu-1133-M Deriving Generalized Means As Least

DERIVING GENERALIZED MEANS AS LEAST SQUARES AND MAXIMUM LIKELIHOOD ESTIMATES. Roger L. Berger, Statistics Department, Box 8203, North Carolina State University, Raleigh, NC 27695-8203, and George Casella¹, Biometrics Unit, 337 Warren Hall, Cornell University, Ithaca, NY 14853-7801. BU-1133-M, September 1991.

ABSTRACT: Functions called generalized means are of interest in statistics because they are simple to compute, have intuitive appeal, and can serve as reasonable parameter estimates. The well-known arithmetic, geometric, and harmonic means are all examples of generalized means. We show how generalized means can be derived in a unified way, as least squares estimates for a transformed data set. We also investigate models that have generalized means as their maximum likelihood estimates.

Key words: Exponential family, arithmetic mean, geometric mean, harmonic mean.

¹ Research supported by National Science Foundation Grant No. DMS91-00839 and National Security Agency Grant No. 90F-073. Part of this work was performed at the Cornell Workshop on Conditional Inference, sponsored by the Army Mathematical Sciences Institute and the Cornell Statistics Center, Ithaca, NY, June 2-14, 1991.

1. INTRODUCTION

Abramowitz and Stegun (1965) define a generalized mean to be a function of n positive variables of the form (1.1) which is known as the geometric mean. Two other well-known special cases are the arithmetic mean (λ = 1) and the harmonic mean (λ = -1). The form of (1.1) leads us to inquire about the conditions that would yield (1.1) as a measure of center (a mean), or the models that would yield (1.1) as an estimate of a parameter.