Coding Write-in Responses in a Census

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Millbach (Muhlbach) Cemetery PA SR 419 & Church Road Next To

Lebanon County, PA Millbach (Muhlbach) Cemetery PA SR 419 & Church Road next to St. Paul's UCC Church in Millbach. Findagrave Millbach: has the Meyers as Moyers (as seen on the stones). Millbach cemetery has lots of Moyer including recent burials. Henry Meyer, the ancestor immigrant might be buried here. 15 Lebanon County, PA Millbach (Muhlbach) Cemetery Other relatives at Millbach our database Meyer, Henry (1815‐1881) buried in Millbach, his wife is in Schaefferstown. Henry is a grandson of John Moyer and Catharine Schaeffer. Meyer, John (1745‐1812) son of immigrant Henry Meyer And Schaeffer, Catharine (1747‐1825). Meyer, Johannes (1719‐1786) son of John Meyer. No stone on findagrave. 16 Lebanon County, PA Henry Meyer, the immigrant given in another place. Little is known about this Meyer while in this country, and still less about his history before he came across. From The Meyer Family Tree compiled by John D. Meyer, Tyrone, Many genealogies are lost in the Atlantic Ocean. Maj. John Meyer, PA, October 1937 Rebersburg, Pa., states, he often heard his uncle Philip Meyer The original Meyer from Germany was born in the Palatinate (grandson of the Meyer from Germany) s say that two brothers Germany was one of three brothers who came to America about came with our ancestor across from the old country, and that one 1723 or 24 to the Muhlbach then in Lancaster County Pa new of them returned to his home because he did not like this country, Lebanon County, about a mile south of the present town of while the other went to the Carolinas in quest of a warmer Sheridan and seven miles south east of Myerstown, Pa. -

First Name Americanization Patterns Among Twentieth-Century Jewish Immigrants to the United States

City University of New York (CUNY) CUNY Academic Works All Dissertations, Theses, and Capstone Projects 2-2017 From Rochel to Rose and Mendel to Max: First Name Americanization Patterns Among Twentieth-Century Jewish Immigrants to the United States Jason H. Greenberg The Graduate Center, City University of New York How does access to this work benefit you? Let us know! More information about this work at: https://academicworks.cuny.edu/gc_etds/1820 Discover additional works at: https://academicworks.cuny.edu This work is made publicly available by the City University of New York (CUNY). Contact: [email protected] FROM ROCHEL TO ROSE AND MENDEL TO MAX: FIRST NAME AMERICANIZATION PATTERNS AMONG TWENTIETH-CENTURY JEWISH IMMIGRANTS TO THE UNITED STATES by Jason Greenberg A dissertation submitted to the Graduate Faculty in Linguistics in partial fulfillment of the requirements for the degree of Master of Arts in Linguistics, The City University of New York 2017 © 2017 Jason Greenberg All Rights Reserved From Rochel to Rose and Mendel to Max: First Name Americanization Patterns Among Twentieth-Century Jewish Immigrants to the United States: A Case Study by Jason Greenberg This manuscript has been read and accepted for the Graduate Faculty in Linguistics in satisfaction of the thesis requirement for the degree of Master of Arts in Linguistics. Date: ____ Cecelia Cutler, Chair of Examining Committee. Date: ____ Gita Martohardjono, Executive Officer. THE CITY UNIVERSITY OF NEW YORK ABSTRACT From Rochel to Rose and Mendel to Max: First Name Americanization Patterns Among Twentieth-Century Jewish Immigrants to the United States: A Case Study by Jason Greenberg Advisor: Cecelia Cutler There has been a dearth of investigation into the distribution of and the alterations among Jewish given names.

Why Many Dutch Surnames Look So Archaic: the Exceptional Orthographic Position of Names Leendert Brouwer Netherlands

Why Many Dutch Surnames Look So Archaic: The Exceptional Orthographic Position of Names. Leendert Brouwer, Netherlands.

Abstract: In the 19th century, several scholars in the Netherlands worked on a standardization of spelling of words. Only just after World War II, a uniform spelling was codified. Nobody, however, seemed to care about, or even to think of, an adaptation of the spelling of surnames. In the first decades of the 19th century, due to Napoleon’s enforcement of implementing his Civil Code, all inhabitants were registered with a surname, which from then on never formally changed. This means that the final stage of surname development took place in a period which may be considered as orthographically unstable. For this reason, many names still have many variations nowadays. This often leads to insecurity about the spelling of names. Even someone with an uncomplicated name, such as Brouwer, will often be asked, if the name is written with -au- or with -ou-, although this name is identical with the word ‘brouwer’ (= brewer) and 29,000 individuals bear the name Brouwer with -ou-, while only 40 people bear the name De Brauwer (Brauwer without an article doesn’t even exist). But we easily accept this kind of inconvenience, because people do not expect names to be treated as words. A surname has a special meaning, particularly when it shows an antique aura.

My name is Brouwer. That should be an easy name to spell in the Netherlands, because my name is written exactly as the noun ‘brouwer’, denoting the trade of my forefather, who must have been a brewer: a craftsman who produces beer.

Eugene Meyer Papers [Finding Aid]. Library of Congress

Eugene Meyer Papers A Finding Aid to the Collection in the Library of Congress Manuscript Division, Library of Congress Washington, D.C. 2014 Revised 2015 November Contact information: http://hdl.loc.gov/loc.mss/mss.contact Additional search options available at: http://hdl.loc.gov/loc.mss/eadmss.ms013146 LC Online Catalog record: http://lccn.loc.gov/mm82052019 Prepared by C. L. Craig, Paul Ledvina, and David Mathisen with the assistance of Leonard Hawley and Susie Moody Collection Summary Title: Eugene Meyer Papers Span Dates: 1864-1970 Bulk Dates: (bulk 1890-1959) ID No.: MSS52019 Creator: Meyer, Eugene, 1875-1959 Extent: 78,500 items ; 267 containers plus 2 oversize ; 107.6 linear feet ; 1 microfilm reel Language: Collection material in English Location: Manuscript Division, Library of Congress, Washington, D.C. Summary: Investment banker, financier, public official, and newspaperman. Correspondence, memoranda, minutes, diaries, oral history interviews, speeches, writings, congressional testimony, press statements, financial papers, family papers, biographical material, printed material, scrapbooks, photographs, and other papers relating to Meyer's life and career. Selected Search Terms The following terms have been used to index the description of this collection in the Library's online catalog. They are grouped by name of person or organization, by subject or location, and by occupation and listed alphabetically therein. People Baruch, Bernard M. (Bernard Mannes), 1870-1965--Correspondence. Bixby, Fred H.--Correspondence. Blythe, Samuel G. (Samuel George), 1868-1947--Correspondence. Friendly, Alfred--Correspondence. Friendly, Alfred. Graham, Philip L., 1915-1963. Hyman, Sidney. Kahn family. Meyer family. Meyer, Eugene, 1875-1959. Weill family. Wiggins, James Russell, 1903-2000--Correspondence. -

Surname Changes Jongklaas → Jonklaas Kelaar → Kelaart

Surname Changes Jongklaas → Jonklaas Kelaar → Kelaart Ferdinandus → Ferdinando or → Ferdinand or → Ferdinands Lawrence Joseph → Joseph Lawrence De Silva → D’Silva → Silva (There are also distinct branches of these same names) Von Drieberg → Anjou → Von Drieberg → Drieberg Parber → Barber Meijer → Meyer and could also be linked to the family Meier One of the things I have learnt is beware of name/surname changes so as not to discount somebody that you think may not be of your line, these occur in instances like: 1. Better pronunciation/ less errors in spelling, The name Jongklaas with a G changed to Jonklaas probably due to pronunciation reasons in the country of Ceylon, while in Holland the surname can still be found in its original form another instance is Misso. 2. Assumed names (running away from trouble/family name was disgraced) Anjou-Von Drieberg, Shultz-Van Ranzow 3. Anglicized Parbe-Barber, Smits-Smith, Johannes Henricus-John Henry 4. To create less confusion with another family Kellar and the name Kelaart which at one stage was Kelaar. On my latest discoveries I have found that Joost Kelaar the originator of the Kelaart Line to be actually Joost Kesselaer which goes back to my first reason of Surname Changes for better pronunciation. It is interesting to note that the first name is more of Dutch Origin while the original surname is of German Origin. Perhaps he came from Holland while the past generations came from Germany or near the Border, by looking at your options you can start to look in the right areas. 5. Developments of a name over a period of time De arpes, de Arpa, Del Harpe, de la Harpe 6.

Names List 2015.Indd

National Days of Remembrance NAMES LIST OF STEIN, Viktor—From Hungary VICTIMS OF THE HOLOCAUST HOUILLER, Marcel—Perished at Natzweiler This list contains the names of 5,000 Jewish and SEELIG, Karl—Perished at Dachau non-Jewish individuals who were murdered by Nazi Germany and its collaborators between 1939 BAUER, Ferenc—From Hungary and 1945. Each name is followed by the victim’s LENKONISZ, Lola—Perished at Bergen-Belsen country of origin or place of death. IRMSCHER, Paul—Perished at Neuengamme Unfortunately, there is no single list of those known to have perished during the Holocaust. The list GRZYWACZ, Jan—Perished at Bergen-Belsen provided here is a very small sample of names taken from archival documents at the United States BUGAJSKI, Abraham-Hersh—From Poland Holocaust Memorial Museum. BRAUNER, Ber—From Poland Approximately 650 names can be read in an hour. NATKIEWICZ, Josef—Perished at Buchenwald PROSTAK, Benjamin—From Romania LESER, Julius—Perished at Dachau GEOFFROY, Henri—Perished at Natzweiler FRIJDA, Joseph—Perished at Bergen-Belsen HERZ, Lipotne—From Slovakia GROSZ, Jeno—From Hungary GUTMAN, Rivka—From Romania VAN DE VATHORST, Antonius—Perished at Neuengamme AUERBACH, Mordechai—From Romania WINNINGER, Sisl—From Romania PRINZ, Beatrix—Perished at Bergen-Belsen BEROCVCZ, Estella—Perished at Bergen-Belsen WAJNBERG, Jakob—Perished at Buchenwald FRUHLING, Sandor—From Hungary SOMMERLATTE, Berta—From Germany BOERSMA, Marinus—Perished at Neuengamme DANKEWICZ, Dovid—From Poland ushmm.org National Days of Remembrance WEISZ, Frida—From -

Claims Resolution Tribunal

CLAIMS RESOLUTION TRIBUNAL In re Holocaust Victim Assets Litigation Case No. CV96-4849 Certified Denial to Claimant [REDACTED] Claimed Account Owner: Louis Meyer Claim Numbers: 224442/SB; 300658/SB This Certified Denial is to the claims of [REDACTED] (the "Claimant") to a Swiss bank account potentially owned by the Claimant's relative, Louis (Levi) Meyer (Meier, Maier, Mayer) (the "Claimed Account Owner"). All denials are published, but where a claimant has requested confidentiality, as in this case, the names of the claimant, any relatives of the claimant other than the account owner, and the bank have been redacted. Information Provided by the Claimant The Claimant did not state where Louis Meyer, who was Jewish, actually resided, but the CRT notes that what information the Claimant does provide is consistent with Louis Meyer having resided in Gambach, Germany. The CRT's Investigation The CRT matched the name of Louis (Levi) Meyer (Meier, Maier, Mayer) to the names of all account owners in the Account History Database and identified accounts belonging to individuals whose names match, or are substantially similar to, the name of the Claimed Account Owner. In doing so, the CRT used advanced name matching systems and computer programs, and considered variations of names, including name variations provided by Yad Vashem, The Holocaust Martyrs and Heroes Remembrance Authority, in Jerusalem, Israel, to ensure that all possible name matches were identified. However, a close review of the relevant bank records indicated that the information contained therein was inconsistent with the information the Claimant provided regarding the Claimed Account Owner. Accordingly, the CRT was unable to conclude that any of these accounts belonged to the Claimed Account Owner.

Adult's Names

The names of the Holocaust victims that appear on this list were taken from Pages of Testimony submitted to Yad Vashem (Hall of Names).

| Family name | First name | Father's name | Age | Place of residence | Place of death | Date of death |
|---|---|---|---|---|---|---|
| ABRAMOVICZ | BENTZION | MOSHE | 46 | POLAND | AUSCHWITZ | 1943 |
| ABRAMSON | SARA | MOSHE | 23 | POLAND | LIVANI, LATVIA | 1941 |
| ADLER | PNINA | YESHAIAHU | 42 | CZECHOSLOVAKIA | AUSCHWITZ | 1942 |
| ADAM | FEIGA | REUVEN | 25 | POLAND | WARSZAWA, POLAND | 1942 |
| AUERBACH | RIKA | ELIEZER | 65 | POLAND | LODZ, POLAND | 1942 |
| AUGUSTOWSKI | SHABTAI | JAKOB | 39 | POLAND | JANOW, POLAND | 1941 |
| AWROBLANSKI | CHAJM | YAAKOV | 47 | POLAND | TREBLINKA | 1942 |
| ALTMAN | BERNHARD | ISRAEL | 47 | ROMANIA | KOPAYGOROD, UKRAINE (USSR) | 22/02/42 |
| AMSTERDAMSKI | MENACHEM | ELIAHU | 19 | LITHUANIA | KAUFERING, GERMANY | 23/04/45 |
| ANGEL | ISAAC | YOSSEF | 64 | GREECE | POLAND | 1940 |
| ANDERMAN | CHAIM | BENIAMIN | 60 | POLAND | BUCZACZ, POLAND | 1942 |
| APELBOJM | SENDL | FISHEL | 37 | POLAND | TREBLINKA | 1941 |
| AKSELRAD | ETI | AVRAHAM | 19 | POLAND | JAGIELNICA, POLAND | 1942 |
| OSLERNER | CHANA | SHMUEL | 41 | POLAND | TREBLINKA | 1941 |
| ARANOWICZ | MOSHE | TZVI | 64 | POLAND | LODZ, POLAND | 1942 |
| AARONSON | MICHELINE | ALBERT | 23 | FRANCE | AUSCHWITZ | 27/03/44 |
| OBSTBAUM | SARA | YAKOB | 30 | POLAND | WARSZAWA, POLAND | 1942 |
| OGUREK | MOSE | ARIE | 55 | POLAND | WARSZAWA, POLAND | 1940 |
| OZEROWICZ | LIBA | MOSHE | 41 | POLAND | ZDZIECIOL, POLAND | 21/07/41 |
| OJCER | HERSCH | AVRAHAM | 30 | POLAND | LODZ, POLAND | 1942 |
| OSTFELD | AVRAHAM | MERL | 51 | ROMANIA | BERSHAD, UKRAINE (USSR) | |

Schedule of Attorneys Suspended for Violation of Judiciary Law §468-A Last Names Beginning with Letters L-Z and Partial

Schedule of Attorneys Suspended for Violation of Judiciary Law §468-a Last Names Beginning With Letters L-Z and partial L-Q Name Last registered address Oath Date Dept. LADDEN KARLIN & PIMSLER INC. 02/02/1998 1 ANDREW 205 LEXINGTON AVE 6TH FL NEW YORK, NY 10016 LAFFIN BROWN WOOD IVEY MITCHELL & PETTY 09/21/1982 1 ROBERT W. 555 CALIFORNIA STREET SUITE 5060 SAN FRANCISCO, CA 94104 LAHIFF, JR. CITICORP 12/01/1980 1 THOMAS M. LEGAL AFFAIRS DEPT 425 PARK AVE 3RD FL ZONE 4 NEW YORK, NY 10043 LALLY 380 POWELL DRIVE 09/25/1989 1 ELIZABETH NUNZIATO BAY VILLAGE, OH 44140 LAM BERNARD M LAM 06/24/1982 4 BERNARD MINGWAI 2 MOTT STREET SUITE 701 NEW YORK, NY 10013 LAMATINA LOUIS J LAMATINA ESQ 03/02/1983 2 LOUIS JAMES 488 MADISON AVENUE 6TH FLOOR NEW YORK, NY 10022 LAMB NIXON, HARGRAVE, DEVANS & DOYLE 08/07/1995 1 DAVID 437 MADISON AVENUE NEW YORK, NY 10022 LAMB FOULSTON CONLEE SCHMIDT & EMERSON LLP 08/10/1992 1 JANE DETERDING 300 N MAIN SUITE 300 WICHITA, KS 67202 LAMERE FERGUS INCORPORATED 05/07/1984 1 ELIZABETH JANE 1325 AVENUE OF THE AMERICAS SUITE 2302 NEW YORK, NY 10019 LANDY CHATHAM COMMUNICATIONS INC 10/28/1970 1 RICHARD I. 407 LINCOLN RD. SUITE 10E MIAMI BEACH, FL 33139 LANNON 343 E 74TH STREET 03/17/1975 1 JOSEPH EDWARD APT 16B NEW YORK, NY 10021 Name Last registered address Oath Date Dept. LAPORTE, III DOORWAY COMMUNICATION SERVICES 03/23/1987 1 CLOYD 3615 38TH ST., NW #409 WASHINGTON, DC 20016 LAPORTE RICHARD G LAPORTE 12/23/1971 1 RICHARD G. -

TOP 50 GERMAN SURNAMES from Mueller (Miller) to Keller (Cellar)

HAVE GERMAN WILL TRAVEL AHNENTAFEL GERMAN GENEALOGY FROM A TO Z TOP 50 GERMAN SURNAMES From Mueller (miller) to Keller (cellar) This Top 50 list ranks the most common family names in Germany. The list was produced by surveying German surnames found in German telephone books. We have supplemented that list with the English meaning for each German family name. Please note that the English meaning is a translation of the German name and may or may not be a surname actually used in English-speaking countries. For example, the most common German surname is Muller, which means "miller." Miller is a fairly common name in the English-speaking world, and is also a family name derived from an occupation. However, the German last name Keller means "cellar" or "basement." Neither of those words is used as a family name in English, but Keller belongs to a class of names derived from where someone worked, in this case, perhaps a Rathskeller (a cellar pub or restaurant) or a Weinkeller (wine cellar). The German surname is also a fairly common family name in the U.S., including the famous Helen Keller (1880-1968, born in Alabama). The German word Kellner (waiter) is also related to Keller. However, the name Keller may have other possible origins. Names and their sources are tricky things. This list is not meant as a guide to name origins. It is only intended to be an enjoyable way to discover the possible meanings of various German names. Most-Common German Last Names 1. Muller (Mueller, Moller) = miller 26.

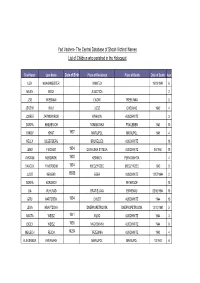

The Central Database of Shoah Victims' Names List of Children Who

Yad Vashem- The Central Database of Shoah Victims' Names List of Children who perished in the Holocaust First Name Last Name Date of Birth Place of Residence Place of Death Date of Death Age LIZA KHAKHMEISTER VINNITZA 19/09/1941 6 MILEN BECK SUBOTICA 2 JZIO WIESMAN LWOW TREBLINKA 8 JERZYK WILK LODZ CHELMNO 1942 4 JOSELE ZARNOWIECKI KRAKOW AUSCHWITZ 3 SONYA KHILKEVICH ROMANOVKA FRAILEBEN 1941 10 YAKOV KHAIT 1937 MARIUPOL MARIUPOL 1941 4 NELLY SILBERBERG BRUXELLES AUSCHWITZ 10 JENO FISCHER 1934 DUNAJSKA STREDA AUSCHWITZ 06/1944 10 AVRAAM NERDINSKI 1938 KISHINEV PERVOMAYSK 4 YAACOV YAVERBOIM 1934 MIEDZYRZEC MIEDZYRZEC 1942 9 JUDIT KEMENY 15555 EGER AUSCHWITZ 12/07/1944 2 SONYA KOROBOV PETERGOF 10 LIA MUHLRAD BRATISLAVA BIRKENAU 05/10/1944 10 GITU HARTSTEIN 1934 CHUST AUSCHWITZ 1944 10 LENA KRAVTZOVA DNEPROPETROVSK DNEPROPETROVSK 13/10/1941 5 AGOTA WEISZ 1941 IKLOD AUSCHWITZ 1944 3 IBOLY WEISZ 1936 NAGYBANYA AUSCHWITZ 1944 8 MEILECH REICH 14254 TRZEBINIA AUSCHWITZ 1943 4 ALEKSANDR AVERBAKH MARIUPOL MARIUPOL 10/1941 6 Yad Vashem- The Central Database of Shoah Victims' Names List of Children who perished in the Holocaust First Name Last Name Date of Birth Place of Residence Place of Death Date of Death Age SALOMON LOTERSPIL 1941 LUBLIN LUBLIN 2 MISHA GORLACHOV 1932 RYASNA RYASNA 1941 9 YEVGENI PUPKIN NOVY OSKOL NOVY OSKOL 4 REVEKKA KRUK 1934 ODESSA ODESSA 1941 7 VOVA ZAYONCHIK 1936 KIEV BABI YAR 29/09/1941 5 MAYA LABOVSKAYA 1931 KIYEV BABI YAR 1941 10 MILOS PLATOVSKY 1932 PROBULOW 1942 10 VILIAM HOENIG 15/12/1939 TREBISOV SACHSENHAUSEN 12/11/1944