An Analysis of Bernard Herrmann's Scores for Jane Eyre (1943) and The Ghost and Mrs

Recommended publications

Of Gods and Monsters: Signification in Franz Waxman's Film Score Bride of Frankenstein

This is a repository copy of Of Gods and Monsters: Signification in Franz Waxman’s film score Bride of Frankenstein. White Rose Research Online URL for this paper: http://eprints.whiterose.ac.uk/118268/ Version: Accepted Version Article: McClelland, C (Cover date: 2014) Of Gods and Monsters: Signification in Franz Waxman’s film score Bride of Frankenstein. Journal of Film Music, 7 (1). pp. 5-19. ISSN 1087-7142 https://doi.org/10.1558/jfm.27224 © Copyright the International Film Music Society, published by Equinox Publishing Ltd 2017, This is an author produced version of a paper published in the Journal of Film Music. Uploaded in accordance with the publisher's self-archiving policy. Reuse Items deposited in White Rose Research Online are protected by copyright, with all rights reserved unless indicated otherwise. They may be downloaded and/or printed for private study, or other acts as permitted by national copyright laws. The publisher or other rights holders may allow further reproduction and re-use of the full text version. This is indicated by the licence information on the White Rose Research Online record for the item. Takedown If you consider content in White Rose Research Online to be in breach of UK law, please notify us by emailing [email protected] including the URL of the record and the reason for the withdrawal request. [email protected] https://eprints.whiterose.ac.uk/ Paper for the Journal of Film Music Of Gods and Monsters: Signification in Franz Waxman’s film score Bride of Frankenstein Universal’s horror classic Bride of Frankenstein (1935) directed by James Whale is iconic not just because of its enduring images and acting, but also because of the high quality of its score by Franz Waxman. -

A Divine Round Trip: the Literary and Christological Function of the Descent/Ascent Leitmotif in the Gospel of John

University of Denver Digital Commons @ DU Electronic Theses and Dissertations Graduate Studies 1-1-2014 A Divine Round Trip: The Literary and Christological Function of the Descent/Ascent Leitmotif in the Gospel of John Susan Elizabeth Humble University of Denver Follow this and additional works at: https://digitalcommons.du.edu/etd Part of the Biblical Studies Commons Recommended Citation Humble, Susan Elizabeth, "A Divine Round Trip: The Literary and Christological Function of the Descent/Ascent Leitmotif in the Gospel of John" (2014). Electronic Theses and Dissertations. 978. https://digitalcommons.du.edu/etd/978 This Dissertation is brought to you for free and open access by the Graduate Studies at Digital Commons @ DU. It has been accepted for inclusion in Electronic Theses and Dissertations by an authorized administrator of Digital Commons @ DU. For more information, please contact [email protected],[email protected]. A Divine Round Trip: The Literary and Christological Function of the Descent/Ascent Leitmotif in the Gospel of John A Dissertation Presented to the Faculty of the University of Denver and the Iliff School of Theology Joint PhD Program University of Denver In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy by Susan Elizabeth Humble November 2014 Advisor: Gregory Robbins ©Copyright by Susan Elizabeth Humble 2014 All Rights Reserved Author: Susan Elizabeth Humble Title: A Divine Round Trip: The Literary and Christological Function of the Descent/Ascent Leitmotif in the Gospel of John Advisor: Gregory Robbins Degree Date: November 2014 ABSTRACT The thesis of this dissertation is that the Descent/Ascent Leitmotif, which includes the language of not only descending and ascending, but also going, coming, and being sent, performs a significant literary and christological function in the Gospel of John. -

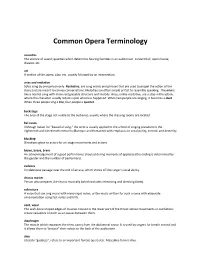

Common Opera Terminology

Common Opera Terminology acoustics The science of sound; qualities which determine hearing facilities in an auditorium, concert hall, opera house, theater, etc. act A section of the opera, play, etc. usually followed by an intermission. arias and recitative Solos sung by one person only. Recitatives are sung words and phrases that are used to propel the action of the story and are meant to convey conversations. Melodies are often simple or fast to resemble speaking. The aria is like a normal song with more recognizable structure and melody. Arias, unlike recitative, are a stop in the action, where the character usually reflects upon what has happened. When two people are singing, it becomes a duet. When three people sing, it is a trio; with four people, a quartet. backstage The area of the stage not visible to the audience, usually where the dressing rooms are located. bel canto Although Italian for “beautiful song,” the term is usually applied to the school of singing prevalent in the eighteenth and nineteenth centuries (Baroque and Romantic) with emphasis on vocal purity, control, and dexterity. blocking Directions given to actors for on-stage movements and actions. bravo, brava, bravi An acknowledgement of a good performance shouted during moments of applause (the ending is determined by the gender and the number of performers). cadenza An elaborate passage near the end of an aria, which shows off the singer’s vocal ability. chorus master Person who prepares the chorus musically (which includes rehearsing and directing them). coloratura A voice that can sing music with many rapid notes, or the music written for such a voice with elaborate ornamentation using fast notes and trills. -

The Application of Bel Canto Concepts and Principles to Trumpet Pedagogy and Performance

Louisiana State University LSU Digital Commons LSU Historical Dissertations and Theses Graduate School 1980 The Application of Bel Canto Concepts and Principles to Trumpet Pedagogy and Performance. Malcolm Eugene Beauchamp Louisiana State University and Agricultural & Mechanical College Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_disstheses Recommended Citation Beauchamp, Malcolm Eugene, "The Application of Bel Canto Concepts and Principles to Trumpet Pedagogy and Performance." (1980). LSU Historical Dissertations and Theses. 3474. https://digitalcommons.lsu.edu/gradschool_disstheses/3474 This Dissertation is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Historical Dissertations and Theses by an authorized administrator of LSU Digital Commons. For more information, please contact [email protected]. INFORMATION TO USERS This was produced from a copy of a document sent to us for microfilming. While the most advanced technological means to photograph and reproduce this document have been used, the quality is heavily dependent upon the quality of the material submitted. The following explanation of techniques is provided to help you understand markings or notations which may appear on this reproduction. 1. The sign or “target” for pages apparently lacking from the document photographed is “Missing Page(s)”. If it was possible to obtain the missing page(s) or section, they are spliced into the film along with adjacent pages. This may have necessitated cutting through an image and duplicating adjacent pages to assure you of complete continuity. 2. When an image on the film is obliterated with a round black mark it is an indication that the film inspector noticed either blurred copy because of movement during exposure, or duplicate copy. -

A Computational Lens Into How Music Characterizes Genre in Film Abstract

A computational lens into how music characterizes genre in film Benjamin Ma1Y*, Timothy Greer1Y*, Dillon Knox1Y*, Shrikanth Narayanan1,2 1 Department of Computer Science, University of Southern California, Los Angeles, CA, USA 2 Department of Electrical and Computer Engineering, University of Southern California, Los Angeles, CA, USA YThese authors contributed equally to this work. * [email protected], [email protected], [email protected] Abstract Film music varies tremendously across genre in order to bring about different responses in an audience. For instance, composers may evoke passion in a romantic scene with lush string passages or inspire fear throughout horror films with inharmonious drones. This study investigates such phenomena through a quantitative evaluation of music that is associated with different film genres. We construct supervised neural network models with various pooling mechanisms to predict a film's genre from its soundtrack. We use these models to compare handcrafted music information retrieval (MIR) features against VGGish audio embedding features, finding similar performance with the top-performing architectures. We examine the best-performing MIR feature model through permutation feature importance (PFI), determining that mel-frequency cepstral coefficient (MFCC) and tonal features are most indicative of musical differences between genres. We investigate the interaction between musical and visual features with a cross-modal analysis, and do not find compelling evidence that music characteristic of a certain genre implies low-level visual features associated with that genre. Furthermore, we provide software code to replicate this study at https://github.com/usc-sail/mica-music-in-media. This work adds to our understanding of music's use in multi-modal contexts and offers the potential for future inquiry into human affective experiences. -

A European Singspiel

Columbus State University CSU ePress Theses and Dissertations Student Publications 2012 Die Zauberflöte: A European Singspiel Zachary Bryant Columbus State University, [email protected] Follow this and additional works at: https://csuepress.columbusstate.edu/theses_dissertations Part of the Music Commons Recommended Citation Bryant, Zachary, "Die Zauberflöte: A European Singspiel" (2012). Theses and Dissertations. 116. https://csuepress.columbusstate.edu/theses_dissertations/116 This Thesis is brought to you for free and open access by the Student Publications at CSU ePress. It has been accepted for inclusion in Theses and Dissertations by an authorized administrator of CSU ePress. Die Zauberflöte: A European Singspiel by Zachary Bryant A Thesis Submitted in Partial Fulfillment of Requirements of the CSU Honors Program for Honors in the Bachelor of Arts in Music College of the Arts Columbus State University Through modern-day globalization, the cultures of the world are shared on a daily basis and are integrated into the lives of nearly every person. This reality seems to go unnoticed by most, but the fact remains that many individuals and their societies have formed a cultural identity from the combination of many foreign influences. Such a multicultural identity can be seen particularly in music. Composers, artists, and performers alike frequently seek to incorporate separate elements of style in their own identity. One of the earliest examples of this tradition is the German Singspiel. -

That World of Somewhere in Between: the History of Cabaret and the Cabaret Songs of Richard Pearson Thomas, Volume I

That World of Somewhere In Between: The History of Cabaret and the Cabaret Songs of Richard Pearson Thomas, Volume I D.M.A. DOCUMENT Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Musical Arts in the Graduate School of The Ohio State University By Rebecca Mullins Graduate Program in Music The Ohio State University 2013 D.M.A. Document Committee: Dr. Scott McCoy, advisor Dr. Graeme Boone Professor Loretta Robinson Copyright by Rebecca Mullins 2013 Abstract Cabaret songs have become a delightful and popular addition to the art song recital, yet there is no concise definition in the lexicon of classical music to explain precisely what cabaret songs are; indeed, they exist, as composer Richard Pearson Thomas says, “in that world that’s somewhere in between” other genres. So what exactly makes a cabaret song a cabaret song? This document will explore the topic first by tracing historical antecedents to and the evolution of artistic cabaret from its inception in Paris at the end of the 19th century, subsequent flourish throughout Europe, and progression into the United States. This document then aims to provide a stylistic analysis to the first volume of the cabaret songs of American composer Richard Pearson Thomas. ii Dedication This document is dedicated to the person who has been most greatly impacted by its writing, however unknowingly—my son Jack. I hope you grow up to be as proud of your mom as she is of you, and remember that the things in life most worth having are the things for which we must work the hardest. -

ATINER's Conference Paper Series LNG2015-1680

ATINER CONFERENCE PAPER SERIES No: LNG2014-1176 Athens Institute for Education and Research ATINER ATINER's Conference Paper Series LNG2015-1680 Transformed Precedent Phrases in the Headlines of Online Media Texts. Paratextual Aspect Darya Mironova Associate Professor Chelyabinsk State University Russia An Introduction to ATINER's Conference Paper Series ATINER started to publish this conference papers series in 2012. It includes only the papers submitted for publication after they were presented at one of the conferences organized by our Institute every year. This paper has been peer reviewed by at least two academic members of ATINER. Dr. Gregory T. Papanikos President Athens Institute for Education and Research This paper should be cited as follows: Mironova, D. (2015). "Transformed Precedent Phrases in the Headlines of Online Media Texts. Paratextual Aspect", Athens: ATINER'S Conference Paper Series, No: LNG2015-1680. Athens Institute for Education and Research 8 Valaoritou Street, Kolonaki, 10671 Athens, Greece Tel: + 30 210 3634210 Fax: + 30 210 3634209 Email: [email protected] URL: www.atiner.gr URL Conference Papers Series: www.atiner.gr/papers.htm Printed in Athens, Greece by the Athens Institute for Education and Research. All rights reserved. Reproduction is allowed for non-commercial purposes if the source is fully acknowledged. ISSN: 2241-2891 04/11/2015 Transformed Precedent Phrases in the Headlines of Online Media Texts. Paratextual Aspect Darya Mironova Associate Professor Chelyabinsk State University Russia Abstract The paper studies transformed precedent phrases in the headlines of American and British online media texts with the aim to explore paratextual peculiarities of their functioning. -

Microkorg Owner's Manual

Precautions. Location: Using the unit in the following locations can result in a malfunction. • In direct sunlight • Locations of extreme temperature or humidity • Excessively dusty or dirty locations • Locations of excessive vibration • Close to magnetic fields. Power supply: Please connect the designated AC adapter to an AC outlet of the correct voltage. Do not connect it to an AC outlet of voltage other than that for which your unit is intended. Data handling: Unexpected malfunctions can result in the loss of memory contents. Please be sure to save important data on an external data filer (storage device). Korg cannot accept any responsibility for any loss or damage which you may incur as a result of data loss. Printing conventions in this manual: Knobs and keys printed in BOLD TYPE. Knobs and keys on the panel of the microKORG are printed in BOLD TYPE. THE FCC REGULATION WARNING (for U.S.A.): This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference in a residential installation. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instructions, may cause harmful interference to radio communications. However, there is no guarantee that interference will not occur in a particular installation. If this equipment does cause harmful interference to radio or television reception, which can be determined by turning the equipment off and on, the user is encouraged to -

Animals, Mimesis, and the Origin of Language

Animals, Mimesis, and the Origin of Language Kári Driscoll One imitates only if one fails, when one fails. Gilles Deleuze, Félix Guattari, A Thousand Plateaus Prelude: Forest Murmurs Reclining under a linden tree in the forest in Act II of Richard Wagner’s opera Siegfried, the titular hero is struck by the sound of a bird singing above him. “Du holdes Vöglein! / Dich hört’ ich noch nie,” he exclaims.1 Having just been musing on the identity of his mother, whom he never knew, Siegfried interprets the interruption as a message of some sort: “Verstünd’ ich sein süßes Stammeln! / Gewiß sagt’ es mir ’was, / vielleicht von der lieben Mutter?” He cannot understand the bird’s song, but it simply must be saying something to him; if only he could learn its language somehow. (Er sinnt nach. Sein Blick fällt auf ein Rohrgebüsch unweit der Linde.) Hei! ich versuch’s, sing’ ihm nach: auf dem Rohr tön’ ich ihm ähnlich! Entrath’ ich der Worte, achte der Weise, sing’ ich so seine Sprache, versteh’ ich wohl auch, was es spricht. (Er hat sich mit dem Schwerte ein Rohr abgeschnitten, und schnitzt sich eine Pfeife draus.)2 Siegfried’s reasoning here presupposes a direct correlation between imitation (μίμησις) and speech (λόγος): simply replicating the sound of this utterance will automatically provide access to the meaning of that utterance. According to such a mimetic model of language, the signifier and the signified are one and the same. Readers familiar with the opera will already know the outcome of Siegfried’s attempt to imitate the forest bird, but for the moment suffice it 1 Richard Wagner: “Zweiter Tag: Siegfried.” In: Gesammelte Schriften und Dichtungen. -

Alfred Music Submission Guidelines for Concert Band

Alfred Music Submission Guidelines For Concert Band At Alfred Music, we believe that everyone should have the opportunity and necessary information to submit music to be considered for publication. We encourage composers of all backgrounds and levels of experience to create and submit music for review. In addition to full-time composers, teachers are very often some of the best writers of educationally focused music—finding the balance between art and education is a challenge, but one that we encourage you to try. The framework that follows is designed to aid in the creation of music that fosters the education and growth of instrumental music students from all over the world. Please view these as guidelines rather than rules, as musical context is also very important in defining grade levels. Music that is submitted must be in the public domain or an original work. The instrumentation must align with our offered series, and works must be intended for the educational market. Our catalogs are not without limits—with that in mind, please do not be discouraged if your submission is not accepted for publication. Publication decisions are multifaceted; if a piece does not move forward for publication that is not always an indication of the quality of the writing. Also, with the number of submissions we receive, it is impossible to provide specific feedback about why a piece may not have been accepted. We sincerely appreciate your understanding and want you to know that every submitted piece will be reviewed and responded to. Thank you for considering Alfred Music as a home for your music! Please begin the submission process by reviewing our most recent releases to ensure that your creative work is targeted to our audience and in alignment with our guidelines. -

Eureka Entertainment to Release a LETTER to THREE WIVES, The

Dual Format Cat. No.: EKA70187; Certificate: U; Director: Joseph L. MANKIEWICZ; Dual Format Barcode: 5060000701876; Run Time: 103 min.; Year: 1949; Dual Format SRP: £19.99; OAR: 1.37:1; Country: USA; Release Date: 29 June 2015; Picture: Black & White; Language: English; Genre: Drama / Romance; Subtitles: English (Optional). A stunning romantic drama from writer-director Joseph L. Mankiewicz (All About Eve, The Barefoot Contessa), A Letter to Three Wives explores the domestic travails of three couples and the woman that brings them to the brink of crisis. The letter of the title is written with a poisonous pen: the three women (portrayed by Jeanne Crain, Linda Darnell, and Ann Sothern) receive a note stating that one of their husbands has run off with a woman named Addie Ross – which husband in particular, however, remains unmentioned, though each husband had their own affinity for Ross. And so amid the women’s mounting anxiety commences a series of flashbacks, each telling the story of how the three individual marriages had come in their own way to be so strained at the present... A spectacular success at the time of its release, A Letter to Three Wives was nominated for the Best Picture Oscar, and earned Mankiewicz the Academy Awards for both Best Director and Best Screenplay. The Masters of Cinema Series is proud to present A Letter to Three Wives in a special Dual Format edition that includes the film on Blu-ray for the first time in the UK. “Despite its emotional intensity, the film is comic, effervescently so, and its magical ending lends