Classifying Leitmotifs in Recordings of Operas by Richard Wagner

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Let Me Be Your Guide: 21st Century Choir Music in Flanders

Let me be your guide: 21st century choir music in Flanders Flanders has a rich tradition of choir music. But what about the 21st century? Meet the composers in this who's who. Alain De Ley Alain De Ley (° 1961) gained his first musical experience as a singer of the Antwerp Cathedral Choir, while he was still at school at the Sint-Jan Berchmans College in Antwerp. He got a taste for it and a few years later studied flute with Remy De Roeck and piano with Patrick De Hooghe, Freddy Van der Koelen, Hedwig Vanvaerenbergh and Urbain Boodts. In 1979 he continued his studies at the Royal Music Conservatory in Antwerp. Only later did he take private composition lessons with Alain Craens. Alain De Ley prefers to write music for choir and smaller ensembles. As the artistic director of the Flemish Radio Choir, he is familiar with the possibilities and limitations of a singing voice. Since 2003 Alain De Ley has been composer in residence for Ensemble Polyfoon, which premiered a great number of his compositions and recorded a CD dedicated to his music, conducted by Lieven Deroo. He also received various commissions from choirs like Musa Horti, Amarylca and Kalliope, and from the Flanders Festival. Alain De Ley’s music is mostly melodic, narrative, descriptive and reflective. Occasionally Alain De Ley combines the classical writing style with pop music. This is how the song Liquid Waltz was created in 2003 for choir, solo voice and pop group, sung by K’s Choice lead singer Sarah Bettens. He also regularly writes music for projects in which various art forms form a whole. -

The Individual Inventor Motif in the Age of the Patent Troll

THE INDIVIDUAL INVENTOR MOTIF IN THE AGE OF THE PATENT TROLL Christopher A. Cotropia* 12 YALE J.L. & TECH. 52 (2009) ABSTRACT The individual inventor motif has been part of American patent law since its inception. The question is whether the recent patent troll hunt has damaged the individual inventor's image and, in turn, caused Congress, the United States Patent and Trademark Office (USPTO), and the courts to become less concerned with patent law's impact on the small inventor. This Article explores whether there has been a change in attitude by looking at various sources from legislative, administrative, and judicial actors in the patent system, such as congressional statements and testimony in discussions of the recent proposed patent reform legislation, the USPTO's two recently proposed sets of patent rules and responses to comments on those rules, and recent Supreme Court patent decisions. These sources indicate that the rhetoric of the motif has remained unchanged, but its substantive impact is essentially nil. The motif has done little to stave off the increasingly anti-individual-inventor changes in substantive patent law. This investigation also provides a broader insight into the various governmental institutions' roles in patent law by illustrating how different institutions have responded, or not responded, to the use of the individual inventor motif in legal and policy arguments. * Professor of Law, Intellectual Property Institute, University of Richmond Law School. Thanks to Kevin Collins, Jim Gibson, Jack Preis, Arti Rai, Josh Sarnoff, and the participants at the Patents and Entrepreneurship in Business and Information Technologies symposium hosted at George Washington University Law School for their comments and suggestions on an earlier draft. -

Complete Catalog of the Motivic Material In

Complete Catalog of the Motivic Material in Star Wars Leitmotif Criteria 1) Distinctiveness: Musical idea has a clear and unique melody, without being wholly derived from, subsidiary section within, or attached to, another motif. 2) Recurrence: Musical idea is intentionally repeated in more than three discrete cues (including cut or replaced cues). 3) Variation: Musical idea’s repetitions are not exact. 4) Intentionality: Musical idea’s repetitions are compositionally intentional, and do not require undue analytical detective work to notice. Principal Leitmotif Criteria (Indicated in boldface) 5) Abundance: Musical idea occurs in more than one film, and with more than ten iterations overall. 6) Meaningfulness: Musical idea attaches to an important subject or symbol, and accrues additional meaning through repetition in different contexts. 7) Development: Musical idea is not only varied, but subjected to compositionally significant development and transformation across its iterations. Incidental Motifs: Not all themes are created equal. Materials that are repeated across distinct cues but that do not meet criteria for proper leitmotifs are included within a category of Incidental Motifs. Most require additional explanation, which is provided in third column of table. Leit-harmonies, leit-timbres, and themes for self-contained/non-repeating set-pieces are not included in this category, no matter how memorable or iconic. Naming and Listing Conventions Motifs are listed in order of first clear statement in chronologically oldest film, according to latest release [Amazon.com streaming versions used]. For anthology films, abbreviations are used, R for Rogue One and S for Solo. Appearances in cut cues indicated by parentheses. Hyperlinks lead to recordings of clear or characteristic usages of a given theme. -

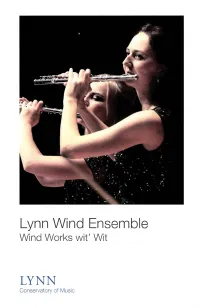

2015-2016 Lynn University Wind Ensemble - Wind Works wit' Wit

Lynn Wind Ensemble Wind Works wit' Wit LYNN Conservatory of Music Wind Ensemble Roster FLUTE T'anna Tercero Jared Harrison Hugo Valverde Villalobos Scott Kemsley Robert Williams Alla Sorokoletova TRUMPET OBOE Zachary Brown Paul Chinen Kevin Karabell Walker Harnden Mark Poljak Trevor Mansell Alexander Ramazanov John Weisberg Luke Schwalbach Natalie Smith CLARINET Tsukasa Cherkaoui TROMBONE Jacqueline Gillette Mariana Cisneros Cameron Hewes Halgrimur Hauksson Christine Pascual-Fernandez Zongxi Li Shaquille Southwell Emily Nichols Isabel Thompson Amalie Wyrick-Flax EUPHONIUM Brian Logan BASSOON Ryan Ruark Sebastian Castellanos Michael Pittman TUBA Sodienye Finebone ALTO SAX Joseph Guimaraes Matthew Amedio Dannel Espinoza PERCUSSION Isaac Fernandez Hernandez TENOR SAX Tyler Flynt Kyle Mechmet Juanmanuel Lopez Bernadette Manalo BARITONE SAX Michael Sawzin DOUBLE BASS August Berger FRENCH HORN Mileidy Gonzalez PIANO Shaun Murray Alfonso Hernandez Please silence or turn off all electronic devices, including cell phones, beepers, and watch alarms. Unauthorized recording or photography is strictly prohibited. Lynn Wind Ensemble Kenneth Amis, music director and conductor 7:30 pm, Friday, January 15, 2016 Keith C. and Elaine Johnson Wold Performing Arts Center Onze Variations sur un theme de Haydn Jean Françaix Introduzione - Thema (1912-1997) Variation 1: Pochissimo piu vivo Variation 2: Moderato Variation 3: Allegro Variation 4: Adagio Variation 5: Mouvement de valse viennoise Variation 6: Andante Variation 7: Vivace Variation 8: Mouvement de valse Variation 9: Moderato Variation 10: Molto tranquillo Variation 11: Allegro giocoso Circus Polka Igor Stravinsky (1882-1971) Hommage a Stravinsky Ole Schmidt I. (1928-2010) II. III. Spiel, Op.39 Ernst Toch I. -

A Divine Round Trip: the Literary and Christological Function of the Descent/Ascent Leitmotif in the Gospel of John

University of Denver Digital Commons @ DU Electronic Theses and Dissertations Graduate Studies 1-1-2014 A Divine Round Trip: The Literary and Christological Function of the Descent/Ascent Leitmotif in the Gospel of John Susan Elizabeth Humble University of Denver Follow this and additional works at: https://digitalcommons.du.edu/etd Part of the Biblical Studies Commons Recommended Citation Humble, Susan Elizabeth, "A Divine Round Trip: The Literary and Christological Function of the Descent/ Ascent Leitmotif in the Gospel of John" (2014). Electronic Theses and Dissertations. 978. https://digitalcommons.du.edu/etd/978 This Dissertation is brought to you for free and open access by the Graduate Studies at Digital Commons @ DU. It has been accepted for inclusion in Electronic Theses and Dissertations by an authorized administrator of Digital Commons @ DU. For more information, please contact [email protected],[email protected]. A Divine Round Trip: The Literary and Christological Function of the Descent/Ascent Leitmotif in the Gospel of John _________ A Dissertation Presented to the Faculty of the University of Denver and the Iliff School of Theology Joint PhD Program University of Denver ________ In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy _________ by Susan Elizabeth Humble November 2014 Advisor: Gregory Robbins ©Copyright by Susan Elizabeth Humble 2014 All Rights Reserved Author: Susan Elizabeth Humble Title: A Divine Round Trip: The Literary and Christological Function of the Descent/Ascent Leitmotif in the Gospel of John Advisor: Gregory Robbins Degree Date: November 2014 ABSTRACT The thesis of this dissertation is that the Descent/Ascent Leitmotif, which includes the language of not only descending and ascending, but also going, coming, and being sent, performs a significant literary and christological function in the Gospel of John. -

DANSES CONCERTANTES by IGOR STRAVINSKY: an ARRANGEMENT for TWO PIANOS, FOUR HANDS by KEVIN PURRONE, B.M., M.M

DANSES CONCERTANTES BY IGOR STRAVINSKY: AN ARRANGEMENT FOR TWO PIANOS, FOUR HANDS by KEVIN PURRONE, B.M., M.M. A DISSERTATION IN FINE ARTS Submitted to the Graduate Faculty of Texas Tech University in Partial Fulfillment of the Requirements for the Degree of DOCTOR OF PHILOSOPHY Approved Accepted August, 1994 ACKNOWLEDGMENTS I would like to extend my appreciation to Dr. William Westney, not only for the excellent advice he offered during the course of this project, but also for the fine example he set as an artist, scholar and teacher during my years at Texas Tech University. The others on my dissertation committee (Dr. Wayne Hobbs, director of the School of Music, Dr. Kenneth Davis, Dr. Richard Weaver and Dr. Daniel Nathan) were all very helpful in inspiring me to complete this work. Ms. Barbi Dickensheet at the graduate school gave me much positive assistance in the final preparation and layout of the text. My father, Mr. Savino Purrone, as well as my family, were always very supportive. European American Music granted me permission to reprint my arrangement; this was essential, and I am thankful for their help and especially for Ms. Caroline Kane's assistance in this matter. Many other individuals assisted me, sometimes without knowing it. To all I express my heartfelt thanks and appreciation. TABLE OF CONTENTS ACKNOWLEDGMENTS ii CHAPTER I. INTRODUCTION 1 II. GENERAL PRINCIPLES 3 Doubled Notes 3 Articulations 4 Melodic Material 4 Equal Roles 4 Free Redistribution of Parts 5 Practical Considerations 5 Homogeneity of Rhythm 5 Dynamics 6 Tutti Gestures 6 Homogeneity of Texture 6 Forte-Piano Chords 7 Movement III: Variation I 7 Conclusion 8 BIBLIOGRAPHY 9 APPENDIX A. -

A Computational Lens Into How Music Characterizes Genre in Film

A computational lens into how music characterizes genre in film Benjamin Ma1Y*, Timothy Greer1Y*, Dillon Knox1Y*, Shrikanth Narayanan1,2 1 Department of Computer Science, University of Southern California, Los Angeles, CA, USA 2 Department of Electrical and Computer Engineering, University of Southern California, Los Angeles, CA, USA YThese authors contributed equally to this work. * [email protected], [email protected], [email protected] Abstract Film music varies tremendously across genre in order to bring about different responses in an audience. For instance, composers may evoke passion in a romantic scene with lush string passages or inspire fear throughout horror films with inharmonious drones. This study investigates such phenomena through a quantitative evaluation of music that is associated with different film genres. We construct supervised neural network models with various pooling mechanisms to predict a film's genre from its soundtrack. We use these models to compare handcrafted music information retrieval (MIR) features against VGGish audio embedding features, finding similar performance with the top-performing architectures. We examine the best-performing MIR feature model through permutation feature importance (PFI), determining that mel-frequency cepstral coefficient (MFCC) and tonal features are most indicative of musical differences between genres. We investigate the interaction between musical and visual features with a cross-modal analysis, and do not find compelling evidence that music characteristic of a certain genre implies low-level visual features associated with that genre. Furthermore, we provide software code to replicate this study at https://github.com/usc-sail/mica-music-in-media. This work adds to our understanding of music's use in multi-modal contexts and offers the potential for future inquiry into human affective experiences. -

A Thematic Approach to Emerging Narrative Structure Charlie Hargood David E

A Thematic Approach to Emerging Narrative Structure Charlie Hargood, David E. Millard, Mark J. Weal. Learning Societies Lab, School of Electronics and Computer Science, University of Southampton. +44 (0)23 8059 7208, +44 (0)23 8059 5567, +44 (0)23 8059 9400. [email protected] [email protected] [email protected] ABSTRACT In this paper we look at the possibility of using a thematic model of narrative to find emergent structure in tagged collections. We propose that a thematic underpinning could provide the narrative direction which can often be a problem with stories from existing narrative generation methods, and present a thematic model of narrative built of narrative atoms and their features, motifs and themes. We explore the feasibility of our approach by examining how collaborative tags in online collections match these properties, and find that while tags match across the model the majority are higher level (matching broader themes and motifs ... information representation that are well established as an engaging way of representing an experience. Narrative generation is a field that seeks to explore alternative representations of narrative, and investigate the possibility of automatically generating custom stories from information collections. There are a wide variety of different techniques for narrative generation ranging from structured narrative grammars to emergent narratives. However the narratives generated can seem flat, lacking engagement and direction. In our work we are exploring a thematic approach to solving some of the problems with narrative generation. -

STRAVINSKY's NEO-CLASSICISM and HIS WRITING for the VIOLIN in SUITE ITALIENNE and DUO CONCERTANT by ©2016 Olivia Needham Subm

STRAVINSKY’S NEO-CLASSICISM AND HIS WRITING FOR THE VIOLIN IN SUITE ITALIENNE AND DUO CONCERTANT By ©2016 Olivia Needham Submitted to the graduate degree program in School of Music and the Graduate Faculty of the University of Kansas in partial fulfillment of the requirements for the degree of Doctor of Musical Arts. Chairperson: Paul Laird; Véronique Mathieu; Bryan Haaheim; Philip Kramp; Jerel Hilding. Date Defended: 04/15/2016 The Dissertation Committee for Olivia Needham certifies that this is the approved version of the following dissertation: STRAVINSKY’S NEO-CLASSICISM AND HIS WRITING FOR THE VIOLIN IN SUITE ITALIENNE AND DUO CONCERTANT Chairperson: Paul Laird Date Approved: 04/15/2016 ABSTRACT This document is about Stravinsky and his violin writing during his neoclassical period, 1920-1951. Stravinsky is one of the most important neo-classical composers of the twentieth century. The purpose of this document is to examine how Stravinsky upholds his neoclassical aesthetic in his violin writing through his two pieces, Suite italienne and Duo Concertant. In these works, Stravinsky’s use of neoclassicism is revealed in two opposite ways. In Suite Italienne, Stravinsky based the composition upon actual music from the eighteenth century. In Duo Concertant, Stravinsky followed the stylistic features of the eighteenth century without parodying actual music from that era. Important types of violin writing are described in these two works by Stravinsky, which are then compared with examples of eighteenth-century violin writing. Igor Stravinsky (1882-1971) was born in Oranienbaum (now Lomonosov) in Russia near St. -

The Virtual Worlds of Japanese Cyberpunk

arts Article New Spaces for Old Motifs? The Virtual Worlds of Japanese Cyberpunk Denis Taillandier College of International Relations, Ritsumeikan University, Kyoto 603-8577, Japan; aelfi[email protected] Received: 3 July 2018; Accepted: 2 October 2018; Published: 5 October 2018 Abstract: North-American cyberpunk’s recurrent use of high-tech Japan as “the default setting for the future,” has generated a Japonism reframed in technological terms. While the renewed representations of techno-Orientalism have received scholarly attention, little has been said about literary Japanese science fiction. This paper attempts to discuss the transnational construction of Japanese cyberpunk through Masaki Gorō’s Venus City (Vīnasu Shiti, 1992) and Tobi Hirotaka’s Angels of the Forsaken Garden series (Haien no tenshi, 2002–). Elaborating on Tatsumi’s concept of synchronicity, it focuses on the intertextual dynamics that underlie the shaping of those texts to shed light on Japanese cyberpunk’s (dis)connections to techno-Orientalism as well as on the relationships between literary works, virtual worlds and reality. Keywords: Japanese science fiction; cyberpunk; techno-Orientalism; Masaki Gorō; Tobi Hirotaka; virtual worlds; intertextuality 1. Introduction: Cyberpunk and Techno-Orientalism While the inversion is not a very original one, looking into Japanese cyberpunk in a transnational context first calls for a brief dive into cyberpunk Japan. Anglo-American pioneers of the genre, quite evidently William Gibson, but also Pat Cadigan or Bruce Sterling, have extensively used high-tech, hyper-consumerist Japan as a motif or a setting for their works, so that Japan became in the mid 1980s the very exemplification of the future, or to borrow Gibson’s (2001, p. -

The Critical Reception of Tippett's Operas

Between Englishness and Modernism: The Critical Reception of Tippett’s Operas Ivan Hewett (Royal College of Music) [email protected] The relationship between that particular form of national identity and consciousness we term ‘Englishness’ and modernism has been much discussed. It is my contention in this essay that the critical reception of the operas of Michael Tippett sheds an interesting and revealing light on the relationship at a moment when it was particularly fraught, in the decades following the Second World War. Before approaching that topic, one has to deal first with the thorny and many-sided question as to how and to what extent those operas really do manifest a quality of Englishness. This is more than a parochial question of whether Tippett is a composer whose special significance for listeners and opera-goers in the UK is rooted in qualities that only we on these islands can perceive. It would hardly be judged a triumph for Tippett if that question were answered in the affirmative. On the contrary, it would be perceived as a limiting factor, perhaps the very factor that prevents Tippett from exporting overseas as successfully as his great rival and antipode, Benjamin Britten. But even without that hard evidence of Tippett’s limited appeal, the very idea that his Englishness forms a major part of his significance could in itself be a disabling feature of the music. We are squeamish these days about granting a substantive aesthetic value to music on the grounds of its national qualities, if the composer in question is not safely locked away in the relatively remote past. -

ELEMENTS of FICTION – NARRATOR / NARRATIVE VOICE Fundamental Literary Terms That Identify Components of Narratives “Fiction

Dr. Hallett ELEMENTS OF FICTION – NARRATOR / NARRATIVE VOICE Fundamental Literary Terms that Identify Components of Narratives “Fiction” is defined as any imaginative re-creation of life in prose narrative form. All fiction is a falsehood of sorts because it relates events that never actually happened to people (characters) who never existed, at least not in the manner portrayed in the stories. However, fiction writers aim at creating “legitimate untruths,” since they seek to demonstrate meaningful insights into the human condition. Therefore, fiction is “untrue” in the absolute sense, but true in the universal sense. Critical Thinking – analysis of any work of literature – requires a thorough investigation of the “who, where, when, what, why, etc.” of the work. Narrator / Narrative Voice Guiding Question: Who is telling the story? …What is the … Narrative Point of View is the perspective from which the events in the story are observed and recounted. To determine the point of view, identify who is telling the story, that is, the viewer through whose eyes the readers see the action (the narrator). Consider these aspects: A. Pronoun p-o-v: First (I, We)/Second (You)/Third Person narrator (He, She, It, They) B. Narrator’s degree of Omniscience [Full, Limited, Partial, None]* C. Narrator’s degree of Objectivity [Complete, None, Some (Editorial?), Ironic]* D. Narrator’s “Un/Reliability” * The Third Person (therefore, apparently Objective) Totally Omniscient (fly-on-the-wall) Narrator is the classic narrative point of view through which a disembodied narrative voice (not that of a participant in the events) knows everything (omniscient) and recounts the events, introduces the characters, and reports dialogue, thoughts, and all details.