Two New Datasets for Origami Classification and Difficulty Estimation

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

-

It's Modern. It's Modular. It's the MOD* Dispensing System

It’s Modern. It’s Modular. It’s the MOD* Dispensing System. The promise is simple: deliver a customized washroom experience to everyone, everywhere. Nurses caring for patients, employees in office buildings, factory workers on break and fans cheering for their team– all have exactly what they need, when they need it. You design the system you need. The innovative MOD* Hard Roll Towel System has internal dispensing modules that plug and play to give you the flexibility to build a dispenser customized to meet your requirements. So as your needs change, your dispensers can change with them. NG-Series: Loaded with Great for office buildings and Innovation. high traffic facilities. Features And Towels. two delivery options: power The MOD* System delivers assist and hanging pre-cut sheet. The MOD* System combines touchless one of the highest capacity towels around and includes E-Series: technology with the bacteria-reducing a stub roll feature that Electronic motion-activated benefits of drying with paper towels. reduces waste and cost. dispensing with an unexposed This promotes better hand and surface hygiene and supports The Healthy So whether it’s 1,150 feet of sheet option that’s ideal for ® Workplace Project*, a patent-pending SCOTT Hard Roll Towels or healthcare facilities. ® program that educates and encourages 700 feet of KLEENEX Hard M-Series: employees to HYGIENIFY!* wash, wipe, Roll Towels, that means Controlled manual delivery sanitize to help break the chain of germ fewer change outs and offers the hygiene of touchless transmission. -

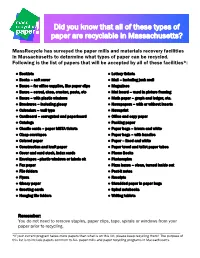

Did You Know That All of These Types of Paper Are Recyclable In

Did you know that all of these types of paper are recyclable in Massachusetts? MassRecycle has surveyed the paper mills and materials recovery facilities in Massachusetts to determine what types of paper can be recycled. Following is the list of papers that will be accepted by all of these facilities*: • Booklets • Lottery tickets • Books – soft cover • Mail – including junk mail • Boxes – for office supplies, like paper clips • Magazines • Boxes – cereal, shoe, cracker, pasta, etc • Mat board – used in picture framing • Boxes – with plastic windows • Math paper – graph and ledger, etc. • Brochures – including glossy • Newspapers – with or without inserts • Calendars – wall type • Newsprint • Cardboard – corrugated and paperboard • Office and copy paper • Catalogs • Packing paper • Charlie cards – paper MBTA tickets • Paper bags – brown and white • Clasp envelopes • Paper bags – with handles • Colored paper • Paper – lined and white • Construction and kraft paper • Paper towel and toilet paper tubes • Cover and card stock, index cards • Phone Books • Envelopes –plastic windows or labels ok • Photocopies • Fax paper • Pizza boxes – clean, turned inside out • File folders • Post-it notes • Flyers • Receipts • Glossy paper • Shredded paper in paper bags • Greeting cards • Spiral notebooks • Hanging file folders • Writing tablets Remember: You do not need to remove staples, paper clips, tape, spirals or windows from your paper prior to recycling. _______________________________________________________________________________ *If your current program takes more papers than what is on this list, please keep recycling them! The purpose of this list is to include papers common to ALL paper mills and paper recycling programs in Massachusetts. . -

Travel Postcard

Travel Postcard Unit: Full Steam Ahead: Robert Fulton & the Age of Steam Boating Destination(s) Albany Institute of History & Art 518-463-4478 125 Washington Avenue Albany, NY 12210 www.albanyinstitute.org County Albany Grades 3rd Grade,4th Grade,5th Grade Author Lynn Willigan, Darby Seward, Tracy Grosner,Erika Sanger Summary This activity can be adapted for use with many topics although it was developed in conjunction with Full Steam Ahead: Robert Fulton and the Age of Steamboating at the Albany Institute of History & Art. Students, teachers, and museum staff discussed steamboats, New York society, and travel on the Hudson River before students created postcards. Question Who might have been interested in using a steamboat instead of a sailing ship? Why? How/why can a relatively simple illustration, like a postcard, increase interest in taking such a trip? Content Understanding(s) Use of collage and painting techniques Concept Understanding(s) Ways form, color, and detail can be used to illustrate objects and their functions Ways to use language and art communicate why and how people travel on the Hudson River Vocabulary Form - Any three-dimensional object. A form can be measured from top to bottom (height), side to side (width), and front to back (depth). Form is also a general term that means the structure or design of a work. Color - The perceived character of a surface according to the wavelength of light reflected from it. Color has three dimensions: Hue (indicated by a name such as red or yellow), Value (lightness or darkness), and Intensity ( purity or saturation). Illustration -- a design or picture that explains text or shows what happens in a story in a book, magazine, or other print or electronic medium. -

Paper Towel Strength and Absorbency

Paper Towel Strength and Absorbency Summary Think about the last time you spilled a drink. How did you clean it up? Most likely you grabbed the nearest roll of paper towels. Why didn’t you use a roll of toilet paper or why didn’t you grab a wad of notebook or computer paper? What is it about a paper towel that makes it so useful to clean up spilled liquids? It has to do with how the paper towels are made. Paper towels are made from the same types of plant fibers that other types of paper are made from. The difference between paper towels and other types of paper comes when the paper fibers are mixed with a special type of resin to make them strong when they are wet. That’s why paper towels don’t tear as easily as notebook paper or tissue paper when they are wet. Once the sheets are made they have shapes pressed into them to make them look quilted. These shapes form air pockets to attract water. That is why paper towels are so absorbent. Different brands of paper towels have different methods for manufacturing their paper towels. In this activity you will get to test different brands of paper towels for their strength and absorbency. In this activity we will: 1. Observe what qualities about paper towels give them the best strength and ability to absorb water. 2. Test four different brands of paper towels to see which is the strongest and most absorbent. Materials - four or more different brands of paper towels - a jar of pennies - bowl of water - teaspoon - eye dropper or turkey baster - two assistants - record sheet and pencil (see below) Preparation 1. -

Paper, Paper, Paper…

Paper, paper, paper… Did you know that fully one-third of what we dispose of curbside each week in Arlington is paper and cardboard [6000 tons/year - 280 lbs per person/year]? And that we recycle only 50 percent of it?! What if instead each of us took a little bit of time to recycle the other 50 percent? What would be the impact? We would save the town $200,000 in incineration costs and we would reduce greenhouse gas emissions and save natural resources too. So there’s really no reason to delay. Start today! And just in case you didn't realize it a wide variety of paper materials that can be recycled, here is just a partial list: Booklets Lottery tickets Books: soft cover Mail (including Junk mail) Boxes: office supplies, like paper clips Magazines Boxes: cereal, shoe, cracker, pasta, etc. Mat board: used in picture framing Boxes: with plastic windows Math paper: graph and ledger, etc. Brochures: including glossy Newspapers: with or without inserts Calendars: wall type Newsprint Cardboard: corrugated and Office and copy paper paperboard Packing paper Catalogs Paper: lined, white, yellow Charlie cards: paper MBTA tickets Paper: fax Clasp envelopes Paper bags: brown, white, colored Colored paper Paper bags: with handles Construction and kraft paper Paper towel and toilet paper tubes Cover and card stock, index cards Pendaflex hanging file folders Envelopes: w/ plastic windows or Phone Books labels Photocopies File folders Post-it notes Flyers Receipts Glossy paper Shredded paper in paper bags Greeting cards Spiral notebooks Hanging file folders Writing tablets Note that paper clips & staples need not be removed. -

SLED Sexual Assault Evidence Collection Kit Instructions

SLED Sexual Assault Evidence Collection Kit Instructions This kit is designed to assist the examining physician and nurse or S.A.N.E. in the collection of evidentiary specimens from both adult and child victims for analysis by the SLED Forensic Laboratory. Sexual Assault is a legal matter, not a medical diagnosis. Conclusions or opinions should neither be conveyed to the victim nor written in the record. If you have questions concerning the use of this kit, please call the SLED DNA Casework Department at 896-7300 during business hours or at 737-9000 after 5 p.m. STEP 1 STATE OF SOUTH CAROLINA STATE OFFICE OF VICTIM ASSISTANCE (SOVA) FORMS Fill out all information requested on both the Medical Examination Release Form and the SAP Billing Statement and have victim (or parent/guardian, if applicable) and witness sign where indicated. STEP 2 SEXUAL ASSAULT EXAMINATION PROTOCOL FORM • Print protocol form from sled.sc.gov. • Fill out all information requested. • Make two copies and distribute to local law enforcement and SLED. Keep the original copy for hospital records. NOTE: Do not send SOVA a copy of this protocol -only the two forms listed in step 1. STEP 3 COLLECTION OF OUTER CLOTHING Please Note: 1. Wet or damp clothing should be air dried before packaging. 2. If victim changed clothes after the alleged assault, collect only the items in direct contact with the victim's genital area. Notify law enforcement officer so that clothing worn at the time of the assault can be collected by police. 3. Do not cut through any existing holes, rips or stains in the victim's clothing. -

1-2 CLEAN MASKS DAILY • Water Bottle (Your Child Can Bring a Clean Water Bottle Daily for WATER ONLY

• 1-2 CLEAN MASKS DAILY • Water Bottle (Your child can bring a clean water bottle daily for WATER ONLY. Due to CDC guidelines water fountains will be prohibited.) First Grade Supply List – 2020/2021 Each student should supply the following items: 1-2 CLEAN MASKS DAILY Water Bottle (Your child can bring a clean water bottle daily for WATER ONLY. Due to CDC guidelines water fountains will be prohibited.) 48 ct. pencils (Ticonderoga work the best!) 1 boxes of crayons 24 pack 6 glue sticks 2 pink erasers 2 boxes of Kleenex 2 large bottle of hand sanitizer 2 large containers of disinfectant wipes 2 packages of baby wipes 2 rolls of paper towel 1 pencil bag – NO BOXES – These are too big for the caddies 2 -70 count notebooks one green and one blue 2 black dry erase markers 1 each yellow, blue and green folder A healthy snack for each day In addition to the items we are asking first graders not bring the following items: No pencil sharpeners No folders with characters on them No fancy pencils wrapped in paper/plastic These don’t work well in the pencil sharpener No toys of any kind 2nd Grade Supply List 2020-2021 • 1-2 CLEAN MASKS DAILY • Water Bottle (Your child can bring a clean water bottle daily for WATER ONLY. Due to CDC guidelines water fountains will be prohibited.) • Crayons • Pencils • Erasers • Dry Erase Markers • 4 Folders • 2 Notebooks • Glue stick • Clorox Wipes • Tissues • Hand Sanitizer 2020-2021 School Year 3rd Grade Supply List: • 1-2 CLEAN MASKS DAILY • Water Bottle (Your child can bring a clean water bottle daily for WATER ONLY. -

Forensic Evidence Collection

Forensic Evidence Collection STEP 1-FOREIGN MATERIAL • Inside this bag is a paper “sheet”. Open bag and remove paper “sheet”. Place a hospital sheet on the floor of the exam room. Place the paper “sheet” on top of the hospital sheet. Have the patient undress on the paper “sheet”. This will collect any foreign material that can fall from the body in the process of undressing. Have paper bags available for the patient to deposit their clothes as they undress. If no paper bags are available, have a sheet/towel placed on the floor or on a stand so the patient can deposit clothing. Instruct the patient to place the clothing in such a manner as the clothing does not touch so as not to cross contaminate. Once the patient has undressed, have the patient don a gown and assist the patient to the exam bed/table. When collecting the paper “sheet”, fold the “sheet” in a bindle fold/druggist fold. This a fold where the edges fold upon themselves so as to keep any foreign material securely in the paper. Place the folded paper inside the “Step 1-Foreign Material” Collection bag. Seal the bag with evidence tape. Sign/date the evidence tape. Complete the form on the outside of the bag. Place step 1 collection in the “Sexual Assault Evidence Collection Kit” Envelope. • If the patient has changed clothes several times, had several bathes, or there is another reason as to why the patient would not have foreign material upon their body, then this step can be skipped. -

Small Appliances • Drink Holders

SMALL APPLIANCES • DRINK HOLDERS HOUSEWARES A In Section A: Small Appliances Drink Holders Kitchen Accessories Organizers Clean-Up Bathware Closet & Laundry Thermometers ROADPRO ROADPRO CORDLESS/ 12-VOLT RECHARGEABLE, BEVERAGE 12V BLENDER HEATER, IMMERSIBLE • Crushes ice or mixes • Plugs directly into your your favorite party drinks cigarette lighter socket for with a 10,000 rpm motor. use with glass or • Weighted base helps ceramic containers only. keeps blender secure. • Place heater coil CP • Includes 110-volt charger directly into your beverage. PRODUCTS for home & 12-volt charger for • 120 watts, 10-amp draw. vehicle recharging. • UL/CSA Approved MUG HOLDER AC Charger. • 156 watts,13-amp draw. 12401 12-Volt Beverage Heater 46864 Blender 12-Volt, Rechargeable, #RPBH-012 12 Open it to hold mugs, glasses, bottles and cups Black #RPSC-876 4 in cars, vans, RVs, boats. When not in use, fold it up, out of the way. Great-looking accessory is 9 9 easy to install. Size: 3 /16" wide x 3 /16" high; opens 4" out. One per card. ROADPRO 49635 Mug Holder, Brown #49635 20 12-VOLT COFFEE MAKER 5 CUP AND BASKET FILTER JR PRODUCTS MAC • Plugs directly into your cigarette lighter socket. CUP • See through water gauge and thermo-glass ‘MUGGERS’ carafe with stay cool handle. • Hot plate to keep ADJUSTABLE MUG HOLDERS HOLDERS your coffee warm. • Reusable basket type filter. Sturdy 3" diameter cup holders. Durable nylon. • 5.5' Power cord. • 130 watts, 11-amp draw. Adjustable design holds a wide variety of 48248 12-Volt Coffee Maker glasses, cups and mugs. Packaged each. -

堆肥 Compost Compostar

堆肥 COMPOST COMPOSTAR 廚餘食物 Food scraps Restos de comida 髒的紙盒 Dirty paper containers Recipientes sucios de papel 擦手紙和紙巾 Paper towels & tissues Toallas y pañuelos de papel 植物 Plants Plantas 可有機分解的產品 Compostable Must be labeled “compostable” or BPI products 物品必須標有 “compostable”(可分解) 或BPI Artículos compostables El artículo debe estar rotulado como “compostable” o BPI i SFRecycles.org [email protected] (415) 330−1300 印刷100 %再生紙 Printed on 100% post-consumer recycled paper Imprimido en papel 100% reciclado COMPOST Put these materials in your green composting bin: Dirty paper • Greasy pizza boxes and paper bags • Paper coffee filters and tea bags • Paper plates • Paper napkins, tissues, and paper towels • Paper take-out boxes and containers Food scraps • All fruits and vegetables (including pits and shells) • Coffee grounds and tea leaves • Dairy products (no liquids) • Eggshells and eggs • Leftovers and spoiled food • Cooked meat (including bones) • Seafood (including shellfish) Plants • All plant debris, including flowers, leaves, weeds, and branches • Tree trimmings (less than 6” in diameter and 4’ long) Other • Bags labeled “Compostable” or BPI • Cooking grease: Small amounts can be soaked up with a paper towel and composted. 
For large amounts, contact SF Greasecycle at (415) 725−1607 • Corks (no plastic) • Cotton balls, cotton swabs with paper stems • Hair, fur, and feathers (non-synthetic/colored) • Products clearly labeled “Compostable” • Vegetable wood crates (metal wire OK) • Waxed cardboard and paper • Wood: small pieces of clean wood/sawdust (no plywood/pressboard/painted/ stained/treated) • Wooden chopsticks, coffee stirrers, toothpicks 不收玻璃品 不收塑膠袋 NO GLASS NO PLASTIC BAGS NO SE ACEPTA VIDRIO NO SE ACEPTAN BOLSAS DE PLASTICO i For more information contact [email protected] or call (415) 330-1300 Printed on 100% post-consumer recycled paper 回收 RECYCLE RECICLAR 紙 Paper Papel 塑膠 Plastic Plástico LEAN LI C MP 的 IA 潔 金屬 清 Metal Metal 玻璃 Glass Vidrio Collect all clean plastic bags and wrap inside one bag. -

Pennypacker Express

The Pennypacker Express ___________________________________ __ ______________________________________ May—June 2020 MMXX ___________________________ ____________________________ NOTICE— As Montgomery County continues to help reduce the spread of the COVID-19 virus, all Montgomery County parks and historic sites are closed to the public until further notice. This edition of the Pennypacker Express will not contain information on any events. When we know when we will be open and be able to have events again, we will send out a notice as well as post information on our website. In the meantime, please enjoy these articles, check out our Facebook posts, and our on-line collection, available at—https://pennypackermills.pastperfectonline.com/ Did you know that the celebra- John Wanamaker and food giant H. J. Heinz. By tion of Mother’s Day began in 1908, the Philadelphia Inquirer reported that many in Philadelphia? Anna Jarvis the city were observing Mother’s Day. Interestingly, (1862-1948) is credited with Jarvis never married or became a mother. That did making this special day for mothers. Jarvis was a not deter her from her mission to honor mothers. Philadelphian who was determined to recognize her She and George Bailey, chairman of the World’s own mother with a national observance. She was not Sunday School Association, created a church led the first woman to propose this holiday and certainly celebration of Mother’s Day and in 1910, the Associ- not the first woman to engage Philadelphia society in ation endorsed the holiday. Sunday school attend- celebrating it. In 1870, Julia Ward Howe (1819-1910) ance increased as many mothers approved of the founded the Women’s Journal, a Boston publication themed lessons on appreciation and respect for par- that featured a Mother’s Day Proclamation. -

100% Recycled Paper Products

100% Recycled Paper Products 1150 Industry Road • PO Box 189 • Harrodsburg, Kentucky 40330-0189 • 800.723.0001 • wausaupaper.com EcoSoft™ 100% Recycled Paper Products GREEN SEAL StANDARDS FOR TOWEL AND TISSUE (GS-01) EcoSoft™ is a complete line of 100% recycled towel and tissue • 100% recycled (recovered after papermaking process) • Minimum 50% post-consumer wastepaper content in paper towel; products designed for the environmentally conscious consumer. 25% post-consumer wastepaper content in bath tissue; 15% in All EcoSoft™ products meet or exceed EPA guidelines for post- facial tissue (EPA guidelines) consumer waste content and the majority are Green Seal™-certified, • Wastepaper is not de-inked using solvents containing chlorine or any other chemicals or compounds harmful to the environment which means they satisfy procurement criteria for government- • Wastepaper is not bleached using chlorine or any of its derivatives funded organizations that are enforced by state and federal law. such as hypochlorite and chlorine dioxide EcoSoft™ is constructed with a perfect blend of pre- and post- • The papermaking process does not contain any papermaking additives or contaminants that are carcinogens, mutagens consumer wastepaper, which allows creation of recycled towel or reproductive toxins that are known to produce or release carcinogens, mutagens, or reproductive toxins and tissue products without sacrificing quality or performance. 
• The papermaking process is free from chlorophenolic biocides, fragrances, heavy metals, including but not limited to lead, chromium or selenium both in the elemental form or compound form and ozone-depleting compounds • Paper towel, bath and facial tissue products do not contain any added pigments, inks, dyes, or fragrances • Products must meet minimum sheets/feet per roll as laid out in the product specifications • Product is made in accordance with reasonable industry practice with respect to holes, tears, wrinkles, cleanliness, foreign materials, basis weight, tensile and dirt.