1. Introduction

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

An Axiomatic Approach to Physical Systems

An Axiomatic Approach to Physical Systems. January 2004. Summary: Mereology is generally considered as a formal theory of the part-whole relationship concerning material bodies, such as planets, pickles and protons. We argue that mereology can be considered more generally as axiomatising the concept of a physical system, such as a planet in a gravitation-potential, a pickle in heartburn and a proton in an electro-magnetic field. We design a theory of sets and physical systems by extending standard set-theory (ZFC) with mereological axioms. The resulting theory deductively extends both 'Mereology' of Tarski & Leśniewski as well as the well-known 'calculus of individuals' of Leonard & Goodman. We prove a number of theorems and demonstrate that our theory extends ZFC conservatively and hence equiconsistently. We also erect a model of our theory in ZFC. The lesson to be learned from this paper reads that not only is a marriage between standard set-theory and mereology logically respectable, leading to a rigorous vindication of how physicists talk about physical systems, but in addition that sets and physical systems interact at the formal level both quite smoothly and non-trivially. Contents: 0 Pre-Mereological Investigations; 0.0 Overview; 0.1 Motivation; 0.2 Heuristics; 0.3 Requirements; 0.4 Extant Mereological Theories; 1 Mereological Investigations; 1.0 The Language of Physical Systems; 1.1 The Domain of Mereological Discourse; 1.2 Mereological Axioms; 1.2.0 Plenitude vs. Parsimony; 1.2.1 Subsystem Axioms; 1.2.2 Composite Physical Systems

Functional Declarative Language Design and Predicate Calculus: A Practical Approach

Functional Declarative Language Design and Predicate Calculus: A Practical Approach. Raymond Boute, INTEC, Ghent University, Belgium. In programming language and software engineering, the main mathematical tool is de facto some form of predicate logic. Yet, as elsewhere in applied mathematics, it is used mostly far below its potential, due to its traditional formulation as just a topic in logic instead of a calculus for everyday practical use. The proposed alternative combines a language of utmost simplicity (four constructs only) that is devoid of the defects of common mathematical conventions, with a set of convenient calculation rules that is sufficiently comprehensive to make it practical for everyday use in most (if not all) domains of interest. Its main elements are a functional predicate calculus and concrete generic functionals. The first supports formal calculation with quantifiers with the same fluency as with derivatives and integrals in classical applied mathematics and engineering. The second achieves the same for calculating with functionals, including smooth transition between pointwise and point-free expression. The extensive collection of examples pertains mainly to software specification, language semantics and its mathematical basis, program calculation etc., but occasionally shows wider applicability throughout applied mathematics and engineering. Often it illustrates how formal reasoning guided by the shape of the expressions is an instrument for discovery and expanding intuition, or highlights design opportunities in declarative

What Is an Embedding? A Problem for Category-Theoretic Structuralism

What is an Embedding? A Problem for Category-theoretic Structuralism. Preprint. Staffan Angere, April 16, 2014. Published in: Preprint without journal information, 2014. Citation for published version (APA): Angere, S. (2014). What is an Embedding? : A Problem for Category-theoretic Structuralism. Unpublished. Abstract: This paper concerns the proper definition of embeddings in purely category-theoretical terms. It is argued that plain category theory cannot capture what, in the general case, constitutes an embedding of one structure in another.

Proposals for Mathematical Extensions for Event-B

Proposals for Mathematical Extensions for Event-B. J.-R. Abrial, M. Butler, M. Schmalz, S. Hallerstede, L. Voisin. 9 January 2009. 1 Introduction. In this document we propose an approach to support user-defined extension of the mathematical language and theory of Event-B. The proposal consists of considering three kinds of extension: (1) Extensions of set-theoretic expressions or predicates: example extensions of this kind consist of adding the transitive closure of relations or various ordered relations. (2) Extensions of the library of theorems for predicates and operators. (3) Extensions of the Set Theory itself through the definition of algebraic types such as lists or ordered trees using new set constructors. 2 Brief Overview of Mathematical Language Structure. A full definition of the mathematical language of Event-B may be found in [1]. Here we give a very brief overview of the structure of the mathematical language to help motivate the remaining sections. Event-B distinguishes predicates and expressions as separate syntactic categories. Predicates are defined in terms of the usual basic predicates (⊤, ⊥, A = B, x ∈ S, y ≤ z, etc.), predicate combinators (¬, ∧, ∨, etc.) and quantifiers (∀, ∃). Expressions are defined in terms of constants (0, ∅, etc.), (logical) variables (x, y, etc.) and operators (+, ∪, etc.). Basic predicates have expressions as arguments. For example, in the predicate E ∈ S, both E and S are expressions. Expression operators may have expressions as arguments. For example, the set union operator has two expressions as arguments, i.e., S ∪ T. Expression operators may also have predicates as arguments. For example, set comprehension is defined in terms of a predicate P, i.e., { x | P }.
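The split between predicates and expressions described in the excerpt above can be sketched as two families of syntax-tree nodes. This is only an illustrative model in Python: the class names (`Var`, `SetUnion`, `Comprehension`, `Member`, `And`) and the helpers `is_expr` and `is_pred` are hypothetical and are not taken from Event-B or any of its tools.

```python
from dataclasses import dataclass
from typing import Union

# Hypothetical node types; none of these names come from Event-B tooling.

# Expressions denote values (sets, numbers, ...).
@dataclass(frozen=True)
class Var:
    name: str

@dataclass(frozen=True)
class SetUnion:          # S ∪ T: an operator whose arguments are expressions
    left: "Expr"
    right: "Expr"

@dataclass(frozen=True)
class Comprehension:     # { x | P }: an expression with a predicate argument
    var: Var
    body: "Pred"

# Predicates denote truth values.
@dataclass(frozen=True)
class Member:            # E ∈ S: a basic predicate whose arguments are expressions
    elem: "Expr"
    of: "Expr"

@dataclass(frozen=True)
class And:               # a predicate combinator
    left: "Pred"
    right: "Pred"

Expr = Union[Var, SetUnion, Comprehension]
Pred = Union[Member, And]

def is_expr(node) -> bool:
    return isinstance(node, (Var, SetUnion, Comprehension))

def is_pred(node) -> bool:
    return isinstance(node, (Member, And))

# E ∈ S ∪ T is a predicate built from expressions.
membership = Member(Var("E"), SetUnion(Var("S"), Var("T")))
# { x | x ∈ S } is an expression built from a predicate.
comprehension = Comprehension(Var("x"), Member(Var("x"), Var("S")))

print(is_pred(membership), is_expr(membership))
print(is_expr(comprehension), is_pred(comprehension.body))
```

Keeping the two families as separate types means that a predicate can only appear where an expression is expected through a constructor that explicitly allows it, as set comprehension does here.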

DRUM–II: Efficient Model–Based Diagnosis of Technical Systems

DRUM–II: Efficient Model–based Diagnosis of Technical Systems. Dissertation approved by the Department of Electrical Engineering and Information Technology of the University of Hannover for the academic degree of Doktor-Ingenieur, by Dipl.-Inform. Peter Fröhlich, born 12 February 1970 in Würselen. 1998. First examiner: Prof. Dr. techn. Wolfgang Nejdl. Second examiner: Prof. Dr.-Ing. Claus-E. Liedtke. Date of doctoral examination: 23 April 1998. Abstract: Diagnosis is one of the central application areas of artificial intelligence. The computation of diagnoses for complex technical systems which consist of several thousand components and exist in many different configurations is a grand challenge. For such systems it is usually impossible to directly deduce diagnoses from observed symptoms using empirical knowledge. Instead, the model–based approach to diagnosis uses a model of the system to simulate the system behaviour given a set of faulty components. The diagnoses are obtained by comparing the simulation results with the observed behaviour of the system. Since the second half of the 1980s several model–based diagnosis systems have been developed. However, the flexibility of current systems is limited, because they are based on restricted diagnosis definitions and they lack support for reasoning tasks related to diagnosis like temporal prediction. Furthermore, current diagnosis engines are often not sufficiently efficient for the diagnosis of complex systems, especially because of their exponential memory requirements. In this thesis we describe the new model–based diagnosis system DRUM–II. This system achieves increased flexibility by embedding diagnosis in a general logical framework. It computes diagnoses efficiently by exploiting the structure of the system model, so that large systems with complex internal structure can be solved.

Cse541 LOGIC for Computer Science

cse541 LOGIC for Computer Science. Professor Anita Wasilewska. LECTURE 4. Chapter 4: GENERAL PROOF SYSTEMS. PART 1: Introduction - Intuitive definitions. PART 2: Formal Definition of a Proof System. PART 3: Formal Proofs and Simple Examples. PART 4: Consequence, Soundness and Completeness. PART 5: Decidable and Syntactically Decidable Proof Systems. PART 1: General Introduction. Proof Systems - Intuitive Definition. Proof systems are built to prove, that is, to construct formal proofs of statements formulated in a given language. The first component of any proof system is hence its formal language L. Proof systems are inference machines with statements called provable statements being their final products. Semantical Link. The starting points of the inference machine of a proof system S are called its axioms. We distinguish two kinds of axioms: logical axioms LA and specific axioms SA. Semantical link: we usually build a proof system for a given language and its semantics, i.e., for a logic defined semantically. We always choose as a set of logical axioms LA some subset of tautologies, under a given semantics. We will consider here only proof systems with finite sets of logical or specific axioms, i.e., we will examine only finitely axiomatizable proof systems. We can, and we often do, consider proof systems with languages without yet established semantics. In this case the logical axioms LA serve as a description of tautologies under a future semantics yet to be built. Logical axioms LA of a proof system S are hence not only tautologies under an
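The "inference machine" picture in the excerpt above can be made concrete with a toy proof checker. The sketch below is an assumption-laden illustration rather than the lecture's own definition: it fixes modus ponens as the only inference rule (the excerpt does not name any rule), encodes an implication as the tuple `("->", premise, conclusion)`, and uses a hypothetical function name `is_proof`.

```python
def is_proof(steps, axioms):
    """Check that every step is an axiom or follows from earlier steps.

    Assumption for this sketch: the only rule is modus ponens, i.e. from
    p and ("->", p, q) already derived, conclude q.
    """
    derived = []
    for formula in steps:
        by_modus_ponens = any(("->", p, formula) in derived for p in derived)
        if formula not in axioms and not by_modus_ponens:
            return False
        derived.append(formula)
    return True

# A finite set of axioms, as in a finitely axiomatizable proof system.
axioms = {"a", ("->", "a", "b"), ("->", "b", "c")}

valid = ["a", ("->", "a", "b"), "b", ("->", "b", "c"), "c"]
invalid = ["c"]  # "c" is neither an axiom nor derivable from earlier steps

print(is_proof(valid, axioms))
print(is_proof(invalid, axioms))
```

The final formula of an accepted sequence is what the excerpt calls a provable statement; the checker itself never consults a semantics, which matches the remark that a proof system can be set up before its semantics is established.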

Formal Methods Unifying Computing Science and Systems Theory

Formal Methods Unifying Computing Science and Systems Theory. Raymond Boute, INTEC, Universiteit Gent, B-9000 Gent, Belgium. ABSTRACT: Computing Science and Systems Theory can gain much from unified mathematical models and methodology, in particular formal reasoning (“letting the symbols do the work”). This is achieved by a wide-spectrum formalism. The language uses just four constructs, yet suffices to synthesize familiar notations (minus the defects) as well as new ones. It supports formal calculation rules convenient for hand calculation and amenable to automation. The basic framework has two main elements. First, a functional predicate calculus makes formal logic practical for engineers, allowing them to calculate with predicates and quantifiers as easily as with derivatives and integrals. Second, concrete generic functionals support smooth transition between pointwise and point-free formulations, facilitating calculation with functionals and exploiting formal ... The typical formal rules used are those for arithmetic (associativity, distributivity etc.) plus those from calculus. Exploiting formality and the calculational style are taken for granted throughout most of applied mathematics based on algebra and calculus (although, as shown later, common conventions still exhibit some serious defects). By contrast, logical reasoning in everyday practice by mathematicians and engineers is highly informal, and often involves what Taylor [20] calls syncopation, namely using symbols as mere abbreviations of natural language, for instance the quantifier symbols ∀ and ∃ just standing for “for all” and “there exists”, without calculation rules. The result is a severe style breach between “regular calculus”, usually done in an essentially formal way, and the logical justification of its rules, which even in the best analysis texts is done in words, with syncopation instead of calculation.

Development of Conjunctive Decomposition Tools

Development of Conjunctive Decomposition Tools. Igor Shubin, Kharkiv National University of Radio Electronics, Nauky Ave. 14, Kharkiv, 61166, Ukraine. Abstract: The development of conjunctive decomposition tools focused on building logical network models is the main topic of this article. The possibility of using such tools for the development of linguistic information processing systems determines the relevance of the chosen topic. The relationship between operations in relational algebra and in the algebra of finite predicates is described. With the help of relational statements about dependencies, the algebraological apparatus of decomposition of predicates was further developed. The method of binary decomposition of functional predicates is founded, which differs from the general method of Cartesian decomposition in that the number of values of the auxiliary variable is minimized. Keywords: Predicate Algebra, Logical Networks, Binary Decomposition, Cartesian Decomposition. 1. Introduction. Among the many formalisms applicable to one degree or another to the tasks of processing informal information, the most appropriate is the use of predicate algebra, systems of equations which are technically implemented in the form of a logical network. One of the advantages of this approach is its direct applicability to all the following types of problems, which is provided by the declaratives of the logical network as a method of solving systems of predicate equations. The notation of the predicate equations itself becomes possible due to the algebra

Functional Predicate Calculus and Generic Functionals in Software Engineering

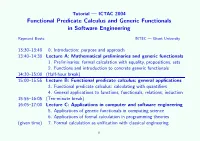

Tutorial, ICTAC 2004. Functional Predicate Calculus and Generic Functionals in Software Engineering. Raymond Boute, INTEC, Ghent University.
13:30–13:40 0. Introduction: purpose and approach
13:40–14:30 Lecture A: Mathematical preliminaries and generic functionals
13:40–14:10 1. Preliminaries: formal calculation with equality, propositions, sets
14:10–14:30 2. Functions and introduction to concrete generic functionals
14:30–15:00 Half-hour break
15:00–15:55 Lecture B: Functional predicate calculus; general applications
15:00–15:30 3. Functional predicate calculus: calculating with quantifiers
15:30–15:55 4. General applications to functions, functionals, relations, induction
15:55–16:05 Ten-minute break
16:05–17:00 Lecture C: Applications in computer and software engineering
16:05–16:40 5. Applications of generic functionals in computing science
16:40–17:00 6. Applications of formal calculation in programming theories
(given time) 7. Formal calculation as unification with classical engineering
Note: depending on the definitive program for tutorials, times indicated may shift.

Interactive Realizers. A New Approach to Program Extraction from Non Constructive Proofs

Interactive realizers. A new approach to program extraction from non constructive proofs. Stefano Berardi, Università di Torino; Ugo de’Liguoro, Università di Torino. We propose a realizability interpretation of a system for quantifier free arithmetic which is equivalent to the fragment of classical arithmetic without nested quantifiers, called here EM1-arithmetic. We interpret classical proofs as interactive learning strategies, namely as processes going through several stages of knowledge and learning by interacting with the “nature”, represented by the standard interpretation of closed atomic formulas, and with each other. We obtain in this way a program extraction method by proof interpretation, which is faithful w.r.t. proofs, in the sense that it is compositional and that it does not need any translation. Categories and Subject Descriptors: D.1.1 [Software]: Applicative (Functional) Programming; D.1.2 [Software]: Automatic Programming; F.1.2 [Theory of Computation]: Modes of Computation - Interactive and reactive computation; F.4.1 [Mathematical Logic]: Lambda calculus and related systems - Proof Theory; I.2.6 [Artificial Intelligence]: Learning - Induction. General Terms: Theory, Languages. Additional Key Words and Phrases: Realizability, Learning in the Limit, Constructive Interpretations of Classical Logic. 1. INTRODUCTION. It is well known that even from a non constructive proof of a Π⁰₂ statement ∀x∃yA(x, y) one can extract an algorithm to compute a non trivial term t(x) such that ∀xA(x, t(x)). Extraction techniques fall into two groups: either by cut elimination and proof normalization, or by proof interpretation. We investigate here a new approach to program extraction by proof interpretation, based on realizability and learning (see subsection 1.1 for references).

Philosophy 148 Lecture 1

Branden Fitelson, Philosophy 148 Lecture. UCB Philosophy, Logical Background, Cont’d, 01/24/08.
Philosophy 148: Announcements & Such
• Administrative Stuff
– See the course website for all administrative information (also, note that lecture notes are posted the morning prior to each class): http://socrates.berkeley.edu/~fitelson/148/
– Section times (II): Those of you who can’t make Tu @ 10–11, please fill out an index card. New times: Mon or Wed 9–10 or 10–11.
• Last Time: Review of Boolean (Truth-Functional) Sentential Logic
– Truth-Table definitions of connectives
– Semantical (Metatheoretic) notions
∗ Individual Sentences: Logical Truth, Logical Falsity, etc.
∗ Sets of Sentences: Entailment (⊨), Consistency, etc.
• Today: Finite Propositional Boolean Algebras, Review of Boolean (Truth-Functional) Predicate Logic, and a General Boolean Framework
Finite Propositional Boolean Algebras
• Sentences express propositions. We individuate propositions according to their logical content. If two sentences are logically equivalent, then they express the same proposition. [E.g., “A → B” and “∼A ∨ B”]
• A finite propositional Boolean algebra is a finite set of propositions which is closed under the (Boolean) logical operations.
• A set S is closed under a logical operation λ if applying λ to a member (or pair of members) of S always yields a member of S.
• Example: consider a sentential language L with three atomic letters “X”, “Y”, and “Z”. The set of propositions expressible using the logical connectives and these letters is a finite Boolean algebra of propositions.
• This Boolean algebra has 2³ = 8 atomic propositions or states (i.e., the rows of a 3-atomic sentence truth-table!).
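The counting in the last slide can be checked with a short enumeration. The sketch below is an illustration in Python, not part of the lecture: it represents a proposition as the set of truth-table rows on which a sentence is true, and the names `STATES`, `proposition`, `conditional` and `disjunction` are hypothetical. It uses the letters X and Y where the slide's equivalence example uses A and B.

```python
from itertools import product

LETTERS = ("X", "Y", "Z")

# One state per truth-table row: every assignment of truth values to the letters.
STATES = [dict(zip(LETTERS, values))
          for values in product((True, False), repeat=len(LETTERS))]

def proposition(sentence):
    """Identify a sentence with the set of states (row indices) where it is true."""
    return frozenset(i for i, row in enumerate(STATES) if sentence(row))

def conditional(row):
    # "X -> Y", written as a conditional expression
    return row["Y"] if row["X"] else True

def disjunction(row):
    # "~X v Y"
    return (not row["X"]) or row["Y"]

print(len(STATES))                                           # 8
print(proposition(conditional) == proposition(disjunction))  # True
print(2 ** len(STATES))                                      # 256
```

Because logically equivalent sentences pick out the same set of rows, they map to the same proposition. Every proposition is a set of states, so an algebra with 8 states contains 2⁸ = 256 propositions in total.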

Type Construction and the Logic of Concepts

Type Construction and the Logic of Concepts. James Pustejovsky, Computer Science Department, Volen Center for Complex Systems, Brandeis University, Waltham, MA 02254 USA. Abstract: I would like to pose a set of fundamental questions regarding the constraints we can place on the structure of our concepts, particularly as revealed through language. I will outline a methodology for the construction of ontological types based on the dual concerns of capturing linguistic generalizations and satisfying metaphysical considerations. I discuss what “kinds of things” there are, as reflected in the models of semantics we adopt for our linguistic theories. I argue that the flat and relatively homogeneous typing models coming out of classic Montague Grammar are grossly inadequate to the task of modelling and describing language and its meaning. I outline aspects of a semantic theory (Generative Lexicon) employing a ranking of types. I distinguish first between natural (simple) types and functional types, and then motivate the use of complex types (dot objects) to model objects with multiple and interdependent denotations. This approach will be called the Principle of Type Ordering. I will explore what the top lattice structures are within this model, and how these constructions relate to more classic issues in syntactic mapping from meaning. 1 Language and Category Formation. Since the early days of artificial intelligence, researchers have struggled to find a satisfactory definition for category or concept, one which both meets formal demands on soundness and completeness, and practical demands on relevance to real-world tasks of classification. One goal is usually sacrificed in the hope of achieving the other, where the results are muddled with good intentions but poor methodology.