Real-Time Ray Tracing with Polarization Parameters

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Polarization of Electron and Photon Beams

Revista Brasileira de Física, Vol. 11, No. 4, 1981. Polarization of Electron and Photon Beams. ?. R. S. GOMES, Instituto de Física, Universidade Federal Fluminense, Niterói, RJ. Received 17 February 1981. In this work the concepts of polarization of photons and relativistic electrons are introduced in a simplified form. Although there are important differences in the concepts of photon and electron polarization, it is shown that the same formalism may be used to describe them, which is very useful in the study of interactions concerned with polarizations of both photons and electrons, as in the photoelectric and Compton effects. Photons are considered, in this work, as a special case of relativistic spin 1 particles, with zero rest mass. 1. INTRODUCTION During a research program concerned with correlations between polarizations of photons and electrons in the relativistic photoelectric effect, it was noticed that there was no text bringing together the concepts and simplified descriptions of polarization of electron and photon beams. That was the reason for trying to write such a text.

Peter Shirley, NVIDIA

Introduction to DirectX Raytracing. Welcome & Introductions. Chris Wyman, NVIDIA; Shawn Hargreaves, Microsoft; Peter Shirley, NVIDIA; Colin Barré-Brisebois, SEED. Course slides, resources, information: intro-to-dxr.cwyman.org. Photography & Recording Encouraged. Image rendered with our course tutorials: Emerald Square scene from Open Research Content Archive.
Welcome
• Why have this course?
• Microsoft announced DirectX Raytracing at GDC
• My Twitter feed blew up
– With people (re)trying ray tracing
– How many times did you see this image on Twitter? (A simple scene with added ray traced lighting)
• Now: Widely used API w/ray tracing support
– Straightforward sharing of GPU resources
– Compelling demos suggest real use cases
• Not yet full path traced renderers
– Only fast enough for a few rays per pixel
– Research needed: reconstruct from low samples
– Left: Feasible sample count today in real-time. Right: Fully converged path tracing

'Call of Duty: Modern Warfare' to Support DirectX Raytracing on PC

‘Call of Duty: Modern Warfare’ to Support DirectX Raytracing on PC, Powered by NVIDIA GeForce RTX. One of the Most Anticipated Games of the Year to Leverage NVIDIA RTX Technology on PC. NVIDIA and Activision today announced that NVIDIA is the official PC partner for Call of Duty®: Modern Warfare®, the highly anticipated, all-new title scheduled for release on Oct. 25. NVIDIA is working side by side with developer Infinity Ward to bring real-time DirectX Raytracing (DXR) and NVIDIA® Adaptive Shading gaming technologies to the PC version of the new Modern Warfare. “Call of Duty: Modern Warfare is poised to reset the bar upon release this October,” said Matt Wuebbling, head of GeForce marketing at NVIDIA. “NVIDIA is proud to partner closely with this epic development and contribute toward the creation of this gripping experience. Our teams of engineers have been working closely with Infinity Ward to use NVIDIA RTX technologies to display the realistic effects and incredible immersion that Modern Warfare offers.” The most celebrated series in Call of Duty will make its return in a powerful experience reimagined from the ground up. Published by Activision and developed by Infinity Ward, the PC version of Call of Duty: Modern Warfare will release on Blizzard Battle.net. Modern Warfare features a unified narrative experience and progression across an epic, heart-racing, single-player story, an action-packed multiplayer playground, and new cooperative gameplay. “Our work with NVIDIA GPUs has helped us throughout the PC development of Modern Warfare,” said Dave Stohl, co-studio head at Infinity Ward. “We've seamlessly integrated the RTX features like ray tracing and adaptive shading into our rendering pipeline.

GPU Developments 2018

GPU Developments 2018. © Copyright Jon Peddie Research 2019. All rights reserved. Reproduction in whole or in part is prohibited without written permission from Jon Peddie Research. This report is the property of Jon Peddie Research (JPR) and made available to a restricted number of clients only upon these terms and conditions. Agreement not to copy or disclose. This report and all future reports or other materials provided by JPR pursuant to this subscription (collectively, “Reports”) are protected by: (i) federal copyright, pursuant to the Copyright Act of 1976; and (ii) the nondisclosure provisions set forth immediately following. License, exclusive use, and agreement not to disclose. Reports are the trade secret property exclusively of JPR and are made available to a restricted number of clients, for their exclusive use and only upon the following terms and conditions. JPR grants site-wide license to read and utilize the information in the Reports, exclusively to the initial subscriber to the Reports, its subsidiaries, divisions, and employees (collectively, “Subscriber”). The Reports shall, at all times, be treated by Subscriber as proprietary and confidential documents, for internal use only. Subscriber agrees that it will not reproduce for or share any of the material in the Reports (“Material”) with any entity or individual other than Subscriber (“Shared Third Party”) (collectively, “Share” or “Sharing”), without the advance written permission of JPR. Subscriber shall be liable for any breach of this agreement and shall be subject to cancellation of its subscription to Reports. Without limiting this liability, Subscriber shall be liable for any damages suffered by JPR as a result of any Sharing of any Material, without advance written permission of JPR.

Are We Done with Ray Tracing? State-Of-The-Art and Challenges in Game Ray Tracing

SIGGRAPH 2019 – Are We Done With Ray Tracing? State-of-the-Art and Challenges in Game Ray Tracing. Colin Barré-Brisebois, @ZigguratVertigo, SEED – Electronic Arts.
How did we get here?
▪ GDC 2018 – DXR Unveiled: “Ray tracing is the future and ever will be” [Electronic Arts, SEED] [Epic Games, NVIDIA, ILMxLAB]
▪ [SEED 2018] Real-Time Ray Tracing in Software and Hardware
▪ Real-Time Hybrid Ray Tracing in Unreal Engine 4 [https://docs.unrealengine.com/en-US/Engine/Rendering/RayTracing/index.html] [Courtesy of Epic Games, Goodbye Kansas, Deep Forest Films]
▪ Left: real-world footage. Right: rendered with Unity [Tatarchuk 2019, Courtesy of Unity Technologies]
▪ [Christophe Schied, NVIDIA Lightspeed Studios™]
And many more…
▪ Assetto Corsa
▪ Atomic Heart
▪ Call of Duty: Modern Warfare
▪ Cyberpunk 2077
▪ Enlisted
▪ Justice
▪ JX3
▪ Mech Warrior V: Mercenaries
▪ Project DH
▪ Stay in the Light
▪ Vampire: The Masquerade – Bloodlines 2
▪ Watch Dogs: Legion
▪ Wolfenstein: Youngblood
Just the beginning of real-time ray tracing making its way into game products. We’re in for a great ride, and the work is not done! This is super exciting! ☺

Ellipsometry

AALBORG UNIVERSITY, Institute of Physics and Nanotechnology, Pontoppidanstræde 103, 9220 Aalborg Øst, Telephone 96 35 92 15.
TITLE: Ellipsometry
PROJECT PERIOD: September 1st - December 21st 2004
THEME: Detection of Nanostructures
PROJECT GROUP: 116
GROUP MEMBERS: Jesper Jung, Jakob Bork, Tobias Holmgaard, Niels Anker Kortbek
SUPERVISOR: Kjeld Pedersen
NUMBERS PRINTED: 7
REPORT PAGE NUMBER: 70
SYNOPSIS: This project concerns measurement of the refractive index of various materials and measurement of the thickness of thin films on silicon substrates by use of ellipsometry. The ellipsometer used in the experiments is the SE 850 photometric rotating analyzer ellipsometer from Sentech. After an introduction to ellipsometry and a problem description, the subjects of polarization and essential ellipsometry theory are covered. The index of refraction for silicon, aluminum, copper and silver are modelled using the Drude-Lorentz harmonic oscillator model and afterwards measured by ellipsometry. The results based on the measurements show a tendency towards, but are not adequately close to, the table values. The materials are therefore modelled with a thin layer of oxide, and the refractive indexes are computed. This model yields good results for the refractive index of silicon and copper. For aluminum the result is improved whereas the result for silver is not. The thickness of a thin film of SiO2 on a substrate of silicon is measured by use of ellipsometry. The result is 22.9 nm which deviates from the provided information by 6.5 %. The thickness of two thick (multiple wavelengths) thin polymer films are measured. The polymer films have been spin coated on substrates of silicon and the uniformities of the surfaces are investigated.

Exploiting Hardware-Accelerated Ray Tracing for Monte Carlo Particle Transport with OpenMC

Exploiting Hardware-Accelerated Ray Tracing for Monte Carlo Particle Transport with OpenMC. Justin Lewis Salmon, Simon McIntosh Smith, Department of Computer Science, University of Bristol, Bristol, U.K. fjustin.salmon.2018, [email protected]
Abstract: OpenMC is a CPU-based Monte Carlo particle transport simulation code recently developed in the Computational Reactor Physics Group at MIT, and which is currently being evaluated by the UK Atomic Energy Authority for use on the ITER fusion reactor project. In this paper we present a novel port of OpenMC to run on the new ray tracing (RT) cores in NVIDIA’s latest Turing GPUs. We show here that the OpenMC GPU port yields up to 9.8x speedup on a single node over a 16-core CPU using the native constructive solid geometry, and up to 13x speedup using approximate triangle mesh geometry. Furthermore, since the expensive 3D geometric operations required during particle transport simulation can be formulated as a ray tracing problem, there is an opportunity to gain even higher performance on triangle meshes by exploiting the RT …
… HPC due to their proliferation in modern supercomputer designs such as Summit [6].
A. OpenMC: OpenMC is a Monte Carlo particle transport code focussed on neutron criticality simulations, recently developed in the Computational Reactor Physics Group at MIT [7]. OpenMC is written in modern C++, and has been developed using high code quality standards to ensure maintainability and consistency. This is in contrast to many older codes, which are often written in obsolete versions of Fortran, and have grown to become highly complex and difficult to maintain.

Results from the QUIET Q-Band Observing Season

Results from the QUIET Q-Band Observing Season. Robert Nicolas Dumoulin. Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Graduate School of Arts and Science, COLUMBIA UNIVERSITY, 2011. ©2011 Robert Nicolas Dumoulin. All Rights Reserved. Abstract: The Q/U Imaging ExperimenT (QUIET) is a ground-based telescope located in the high Atacama Desert in Chile, and is designed to measure the polarization of the Cosmic Microwave Background (CMB) in the Q and W frequency bands (43 and 95 GHz respectively) using coherent polarimeters. From 2008 October to 2010 December, data from more than 10,000 observing hours were collected, first with the Q-band receiver (2008 October to 2009 June) and then with the W-band receiver (until the end of the 2010 observing season). The QUIET data analysis effort uses two independent pipelines, one consisting of a maximum likelihood framework and the other consisting of a pseudo-Cℓ framework. Both pipelines employ blind analysis methods, and each provides analysis of the data using large suites of null tests specific to the pipeline. Analysis of the Q-band receiver data was completed in November of 2010, confirming the only previous detection of the first acoustic peak of the EE power spectrum and setting competitive limits on the scalar-to-tensor ratio, r. In this dissertation, the results from the Q-band observing season using the maximum likelihood pipeline will be presented. Contents: 1 The Cosmic Microwave Background; 1.1 The Origins and Features of the CMB

Multi-Hit Ray Tracing in DXR Christiaan Gribble SURVICE Engineering

CHAPTER 9. Multi-Hit Ray Tracing in DXR. Christiaan Gribble, SURVICE Engineering. © NVIDIA 2019. ABSTRACT: Multi-hit ray traversal is a class of ray traversal algorithm that finds one or more, and possibly all, primitives intersected by a ray, ordered by point of intersection. Multi-hit traversal generalizes traditional first-hit ray traversal and is useful in computer graphics and physics-based simulation. We present several possible multi-hit implementations using Microsoft DirectX Raytracing and explore the performance of these implementations in an example GPU ray tracer. 9.1 INTRODUCTION: Ray casting has been used to solve the visibility problem in computer graphics since its introduction to the field over 50 years ago. First-hit traversal returns information regarding the nearest primitive intersected by a ray, as shown on the left in Figure 9-1. When applied recursively, first-hit traversal can also be used to incorporate visual effects such as reflection, refraction, and other forms of indirect illumination. As a result, most ray tracing APIs are heavily optimized for first-hit performance. Figure 9-1. Three categories of ray traversal. First-hit traversal and any-hit traversal are well-known and often-used ray traversal algorithms in computer graphics applications for effects like visibility (left) and ambient occlusion (center). We explore multi-hit ray traversal, the third major category of ray traversal that returns the N closest primitives ordered by point of intersection (for N ≥ 1). Multi-hit ray traversal is useful in a number of computer graphics and physics-based simulation applications, including optical transparency (right).
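The multi-hit idea in this excerpt (return the N closest primitives ordered by point of intersection, with first-hit as the N = 1 case) can be sketched in a few lines. The Python below is only an illustration under loud assumptions: it brute-forces a list of spheres on the CPU instead of using DXR or an acceleration structure, and the names `ray_sphere_t` and `multi_hit` are hypothetical, not taken from the chapter.

```python
import heapq
import math


def ray_sphere_t(origin, direction, center, radius):
    """Nearest positive hit distance along a normalized ray, or None on a miss."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * x for d, x in zip(direction, oc))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # try the near root first
        if t > 1e-6:
            return t
    return None


def multi_hit(origin, direction, spheres, n):
    """Return up to n (distance, primitive_index) pairs, nearest first.

    Hypothetical brute-force stand-in for multi-hit traversal: every
    primitive is tested, then the n smallest distances are kept in order.
    """
    hits = []
    for index, (center, radius) in enumerate(spheres):
        t = ray_sphere_t(origin, direction, center, radius)
        if t is not None:
            hits.append((t, index))
    return heapq.nsmallest(n, hits)
```

With `n=1` this reduces to first-hit traversal; a large `n` returns every intersected primitive in order along the ray.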

Evaluating the Use of Proxy Geometry for RTX-Based Ray Traced Diffuse Global Illumination

Evaluating the Use of Proxy Geometry for RTX-based Ray Traced Diffuse Global Illumination. Master’s thesis in computer science and engineering. Simon Moos. Department of Computer Science and Engineering, Chalmers University of Technology, University of Gothenburg, Gothenburg, Sweden 2020. © Simon Moos, 2020. Supervisor: Erik Sintorn, Department of Computer Science and Engineering. Examiner: Ulf Assarsson, Department of Computer Science and Engineering. Advisor: Magnus Pettersson, RapidImages. Master’s Thesis 2020, Department of Computer Science and Engineering, Chalmers University of Technology and University of Gothenburg, SE-412 96 Gothenburg, Telephone +46 31 772 1000. Cover: Visualization of sphere set proxy geometry, with superimposed diffuse global illumination. Typeset in LaTeX. Abstract: Diffuse global illumination is vital for photorealistic rendering, but accurately evaluating it is computationally expensive and usually involves ray tracing. Ray tracing has often been considered prohibitively expensive for real-time rendering, but with the new RTX technology it can be done many times faster than what was previously possible. While ray tracing is now faster, ray traced diffuse global illumination is still relatively slow on GPUs, and we see the potential of improving performance on the application-level.

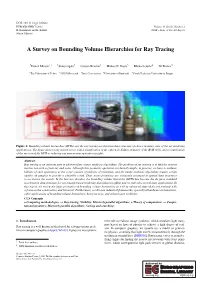

A Survey on Bounding Volume Hierarchies for Ray Tracing

DOI: 10.1111/cgf.142662. EUROGRAPHICS 2021, H. Rushmeier and K. Bühler (Guest Editors), Volume 40 (2021), Number 2, STAR – State of The Art Report. A Survey on Bounding Volume Hierarchies for Ray Tracing. Daniel Meister (The University of Tokyo), Shinji Ogaki (ZOZO Research), Carsten Benthin (Intel Corporation), Michael J. Doyle (Intel Corporation), Michael Guthe (University of Bayreuth), Jiří Bittner (Czech Technical University in Prague). Figure 1: Bounding volume hierarchies (BVHs) are the ray tracing acceleration data structure of choice in many state of the art rendering applications. The figure shows a ray-traced scene, with a visualization of the otherwise hidden structure of the BVH (left), and a visualization of the success of the BVH in reducing ray intersection operations (right). Abstract: Ray tracing is an inherent part of photorealistic image synthesis algorithms. The problem of ray tracing is to find the nearest intersection with a given ray and scene. Although this geometric operation is relatively simple, in practice, we have to evaluate billions of such operations as the scene consists of millions of primitives, and the image synthesis algorithms require a high number of samples to provide a plausible result. Thus, scene primitives are commonly arranged in spatial data structures to accelerate the search. In the last two decades, the bounding volume hierarchy (BVH) has become the de facto standard acceleration data structure for ray tracing-based rendering algorithms in offline and recently also in real-time applications. In this report, we review the basic principles of bounding volume hierarchies as well as advanced state of the art methods with a focus on the construction and traversal.
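A BVH accelerates the nearest-intersection search by testing a ray against bounding volumes before testing the primitives they enclose. As a minimal sketch of that building block, assuming axis-aligned bounding boxes, the standard slab test below checks whether a ray enters a box and over which parameter interval. The function name `ray_aabb` is hypothetical and the code is not taken from the survey; a real traversal would call such a test at every node and skip subtrees whose box is missed.

```python
import math


def ray_aabb(origin, direction, box_min, box_max):
    """Slab test: return (t_near, t_far) if the ray hits the box, else None.

    The ray is origin + t * direction with t >= 0. Each axis contributes an
    interval of t values inside that axis's slab; the ray hits the box only
    if the three intervals overlap.
    """
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this slab: it must already lie inside it.
            if o < lo or o > hi:
                return None
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return None  # intervals no longer overlap: the box is missed
    return t_near, t_far
```

A return value of `None` is what lets a traversal discard every primitive under that node without testing them individually.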

DirectX Raytracing

DirectX Raytracing. Matt Sandy, Program Manager, DirectX.
Agenda
• Why Raytracing?
• DXR Deep Dive
• Tools & Helpers
• Applied Raytracing (EA/SEED)
• Get Started
3D Graphics is a Lie
• Solving the Visibility Problem
Emergence of Exceptions
• Dynamic Shadows
• Environment Mapping
• Reflections
• Global Illumination
A Brief History of Pixels
• 1999 – Hardware T&L
• 2000 – Simple Programmable Shaders
• 2002 – Complex Programmable Shaders
• 2008 – Compute Shaders
• 2014 – Asynchronous Compute
• 2018 – DirectX Raytracing
Raytracing 101
1. Construct a 3D representation of a scene.
2. Trace rays into the scene from a point of interest (e.g. camera).
3. Accumulate data about ray intersections.
4. Optional: go to step 2.
5. Process the accumulated data to form an image.
Case Study: SSR
DXR 101
Raytracing Requirements
• Scene geometry representation
• Trace rays into scene and get intersections
• Determine and execute material shaders
Acceleration Structures
• Opaque buffers that represent a scene
• Constructed on the GPU
• Two-level hierarchy
Geometries
• Triangles
• Vertex Buffer (float16x3 or float32x3)
• Index Buffer (uint16 or uint32)
• Transformation
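The five "Raytracing 101" steps in this excerpt map onto a very small program. The sketch below is an assumption-laden illustration rather than anything from the slides: it runs on the CPU in plain Python instead of DXR, the scene is a single hard-coded sphere, the optional step 4 (tracing further rays) is skipped, and `hit_sphere` and `render` are hypothetical names.

```python
import math


def hit_sphere(origin, direction, center, radius):
    """True if a normalized ray hits the sphere somewhere in front of its origin."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * x for d, x in zip(direction, oc))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return False
    # If the far root is positive, part of the sphere lies ahead of the origin.
    return -b + math.sqrt(disc) > 0.0


def render(width, height):
    """Return an ASCII image: '#' where the camera ray hits the sphere."""
    # Step 1: construct a representation of the scene (one sphere).
    center, radius = (0.0, 0.0, 3.0), 1.0
    camera = (0.0, 0.0, 0.0)
    rows = []
    for j in range(height):
        hits = []
        for i in range(width):
            # Step 2: trace a ray from the camera through pixel (i, j).
            x = (i + 0.5) / width * 2.0 - 1.0
            y = 1.0 - (j + 0.5) / height * 2.0
            length = math.sqrt(x * x + y * y + 1.0)
            direction = (x / length, y / length, 1.0 / length)
            # Step 3: accumulate data about the intersection.
            hits.append(hit_sphere(camera, direction, center, radius))
        # Step 5: process the accumulated data to form the image.
        rows.append("".join("#" if h else "." for h in hits))
    return rows
```

Calling `print("\n".join(render(5, 5)))` marks only the center pixel, because the sphere covers a small part of this wide field of view; larger sizes show a disc.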