Chapter 1: Linearity: Basic Concepts and Examples



Recommended publications


Automatic Linearity Detection

Mathematical Institute Eprints Archive. Report no. [13/04]. Automatic linearity detection. Asgeir Birkisson (a), Tobin A. Driscoll (b). (a) Mathematical Institute, University of Oxford, 24-29 St Giles, Oxford, OX1 3LB, UK. [email protected]. Supported for this work by UK EPSRC Grant EP/E045847 and a Sloane Robinson Foundation Graduate Award associated with Lincoln College, Oxford. (b) Department of Mathematical Sciences, University of Delaware, Newark, DE 19716, USA. [email protected]. Supported for this work by UK EPSRC Grant EP/E045847. Given a function, or more generally an operator, the question "Is it linear?" seems simple to answer. In many applications of scientific computing it might be worth determining the answer to this question in an automated way; some functionality, such as operator exponentiation, is only defined for linear operators, and in other problems, time saving is available if it is known that the problem being solved is linear. Linearity detection is closely connected to sparsity detection of Hessians, so for large-scale applications, memory savings can be made if linearity information is known. However, implementing such an automated detection is not as straightforward as one might expect. This paper describes how automatic linearity detection can be implemented in combination with automatic differentiation, both for standard scientific computing software, and within the Chebfun software system. The key ingredients for the method are the observation that linear operators have constant derivatives, and the propagation of two logical vectors, ℓ and c, as computations are carried out.
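The idea of carrying logical flags through a computation can be illustrated with a small operator-overloading sketch. This is not the paper's algorithm or the Chebfun implementation: the class `Tracked`, the flags `is_const` and `const_deriv`, and the propagation rules are assumptions made for illustration, loosely motivated by the observation that linear operators have constant derivatives. Note that the `const_deriv` flag is also true for affine expressions such as `3*u + 1`.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Tracked:
    """A value plus two flags describing how it depends on the variable."""
    value: float
    is_const: bool     # True if the expression does not depend on the variable
    const_deriv: bool  # True if its derivative w.r.t. the variable is constant

    def __add__(self, other):
        other = lift(other)
        # A sum has a constant derivative when both terms do.
        return Tracked(self.value + other.value,
                       self.is_const and other.is_const,
                       self.const_deriv and other.const_deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = lift(other)
        # A product keeps a constant derivative only if one factor is
        # constant and the other has a constant derivative.
        keeps = ((self.is_const and other.const_deriv)
                 or (other.is_const and self.const_deriv))
        return Tracked(self.value * other.value,
                       self.is_const and other.is_const,
                       keeps)

    __rmul__ = __mul__


def lift(x):
    """Wrap a plain number as a constant; pass Tracked values through."""
    return x if isinstance(x, Tracked) else Tracked(float(x), True, True)


def variable(v):
    """The independent variable: not constant, derivative identically 1."""
    return Tracked(float(v), False, True)


def tracked_sin(x):
    """A nonlinear function keeps the flags only for constant arguments."""
    x = lift(x)
    return Tracked(math.sin(x.value), x.is_const, x.is_const)


u = variable(2.0)
affine = 3 * u + 1                 # const_deriv is True
square = u * u                     # const_deriv is False
mixed = tracked_sin(u) + u         # const_deriv is False
scaled = tracked_sin(3) * u        # constant times variable: True
```

The flags are decided purely from the structure of the expression, so a single evaluation at one point is enough; no sampling of the function is involved.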

Transformations, Polynomial Fitting, and Interaction Terms

FEEG6017 lecture: Transformations, polynomial fitting, and interaction terms [email protected]

The linearity assumption
• Regression models are both powerful and useful.
• But they assume that a predictor variable and an outcome variable are related linearly.
• This assumption can be wrong in a variety of ways.
• Some real data showing a non-linear connection between life expectancy and doctors per million population.
• The Y and X variables here are clearly related, but the correlation coefficient is close to zero.
• Linear regression would miss the relationship.

The independence assumption
• Simple regression models also assume that if a predictor variable affects the outcome variable, it does so in a way that is independent of all the other predictor variables.
• The assumed linear relationship between Y and X1 is supposed to hold no matter what the value of X2 may be.
• Suppose we're trying to predict happiness.
• For men, happiness increases with years of marriage. For women, happiness decreases with years of marriage.
• The relationship between happiness and time may be linear, but it would not be independent of sex.

Can regression models deal with these problems?
• Fortunately they can.
• We deal with non-linearity by transforming the predictor variables, or by fitting a polynomial relationship instead of a straight line.
• We deal with non-independence of predictors by including interaction terms in our models.

Dealing with non-linearity
• Transformation is the simplest method for dealing with this problem.
• We work not with the raw values of X, but with some arbitrary function that transforms the X values such that the relationship between Y and f(X) is now (closer to) linear.
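Two of the points above (a clear relationship with near-zero correlation, and a transformation f(X) that makes the relationship linear) can be reproduced on synthetic data. The following is a minimal sketch; the data sets and the helper `pearson` are invented for illustration and do not come from the lecture.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Synthetic case 1: Y depends on X through a logarithm.
x1 = [float(k) for k in range(1, 51)]
y1 = [2.0 + 3.0 * math.log(x) for x in x1]
r_raw = pearson(x1, y1)                         # below 1: raw relation is curved
r_log = pearson([math.log(x) for x in x1], y1)  # essentially 1 after f(X) = log X

# Synthetic case 2: a symmetric U-shape, clearly related but uncorrelated.
x2 = [float(k) for k in range(-5, 6)]
y2 = [x * x for x in x2]
r_u = pearson(x2, y2)                           # essentially 0
r_sq = pearson([x * x for x in x2], y2)         # essentially 1 with a squared term
```

In the second case a straight-line fit would report no relationship at all, while a model that includes the squared term captures it exactly.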

Glossary: a Dictionary for Linear Algebra

GLOSSARY: A DICTIONARY FOR LINEAR ALGEBRA

Adjacency matrix of a graph. Square matrix with $a_{ij} = 1$ when there is an edge from node $i$ to node $j$; otherwise $a_{ij} = 0$. $A = A^T$ for an undirected graph.

Affine transformation. $T(v) = Av + v_0$ = linear transformation plus shift.

Associative Law $(AB)C = A(BC)$. Parentheses can be removed to leave $ABC$.

Augmented matrix $[A \ b]$. $Ax = b$ is solvable when $b$ is in the column space of $A$; then $[A \ b]$ has the same rank as $A$. Elimination on $[A \ b]$ keeps equations correct.

Back substitution. Upper triangular systems are solved in reverse order $x_n$ to $x_1$.

Basis for $V$. Independent vectors $v_1, \ldots, v_d$ whose linear combinations give every $v$ in $V$. A vector space has many bases!

Big formula for $n$ by $n$ determinants. $\det(A)$ is a sum of $n!$ terms, one term for each permutation $P$ of the columns. That term is the product $a_{1\alpha} \cdots a_{n\omega}$ down the diagonal of the reordered matrix, times $\det(P) = \pm 1$.

Block matrix. A matrix can be partitioned into matrix blocks, by cuts between rows and/or between columns. Block multiplication of $AB$ is allowed if the block shapes permit (the columns of $A$ and rows of $B$ must be in matching blocks).

Cayley-Hamilton Theorem. $p(\lambda) = \det(A - \lambda I)$ has $p(A)$ = zero matrix.

Change of basis matrix $M$. The old basis vectors $v_j$ are combinations $\sum_i m_{ij} w_i$ of the new basis vectors. The coordinates of $c_1 v_1 + \cdots + c_n v_n = d_1 w_1 + \cdots + d_n w_n$ are related by $d = Mc$.
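The back substitution entry in the glossary above can be sketched in a few lines of Python. The function name `back_substitute` and the example system are illustrative choices, not part of the glossary.

```python
def back_substitute(U, b):
    """Solve U x = b for upper triangular U, working from x_n back to x_1."""
    n = len(b)
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        if U[i][i] == 0:
            raise ZeroDivisionError("zero pivot: U is singular")
        # Subtract the contribution of the unknowns already solved for.
        known = sum(U[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - known) / U[i][i]
    return x


U = [[2.0, 1.0, -1.0],
     [0.0, 3.0, 2.0],
     [0.0, 0.0, 4.0]]
b = [3.0, 12.0, 12.0]
x = back_substitute(U, b)  # [2.0, 2.0, 3.0]
```

The last equation fixes $x_3$, the second then fixes $x_2$, and the first fixes $x_1$, which is the reverse order named in the glossary entry.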

Linearity in Calibration: How to Test for Non-Linearity

Chemometrics in Spectroscopy. Linearity in Calibration: How to Test for Non-linearity. Previous methods for linearity testing discussed in this series contain certain shortcomings. In this installment, the authors describe a method they believe is superior to others. Howard Mark and Jerome Workman Jr.

In the previous installment of "Chemometrics in Spectroscopy" (1), we promised we would present a description of what we believe is the best way to test for linearity (or non-linearity, depending upon your point of view). In the first three installments of this column series (1–3) we examined the Durbin–Watson (DW) statistic along with other methods of testing for non-linearity. We found that while the Durbin–Watson statistic is a step in the right direction, it had shortcomings, including the fact that it could be fooled by data that had the right (or wrong!) characteristics. The method we present here is mathematically sound, more subject to statistical validity testing, based upon well-known mathematical principles, consists of much higher …

… instrumental methods have to produce a number, representing the final answer for that instrument's quantitative assessment of the concentration, and that is the test result from that instrument. This is a univariate concept to be sure, but the same concept that applies to all other analytical methods. Things may change in the future, but this is currently the way analytical results are reported and evaluated. So the question to be answered is, for any given method of analysis: Is the relationship between the instrument readings (test results) and the actual concentration linear? This method of determining non-linearity can be viewed from a number of different perspectives, and can be considered as coming from several sources.
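For context, the Durbin–Watson statistic mentioned in the excerpt is the sum of squared successive residual differences divided by the sum of squared residuals. The sketch below computes it for a straight-line fit to deliberately curved synthetic data. It illustrates the DW statistic only, not the authors' proposed method (which the excerpt does not describe); the data and helper names are invented for this example.

```python
def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for y = slope*x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return slope, my - slope * mx


def durbin_watson(residuals):
    """DW = sum_t (e_t - e_{t-1})^2 / sum_t e_t^2, residuals ordered by x."""
    num = sum((residuals[i] - residuals[i - 1]) ** 2
              for i in range(1, len(residuals)))
    den = sum(e * e for e in residuals)
    return num / den


# Synthetic "calibration" data with a purely quadratic response.
xs = [float(k) for k in range(10)]
ys = [x * x for x in xs]
slope, intercept = fit_line(xs, ys)
residuals = [y - (slope * x + intercept) for x, y in zip(xs, ys)]
dw = durbin_watson(residuals)  # about 0.45, far below 2
```

Values near 2 indicate no serial correlation in the residuals; a value well below 2, as here, reflects the smooth run of same-signed residuals that a straight line leaves behind on curved data.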

Student Difficulties with Linearity and Linear Functions and Teachers' Understanding of Student Difficulties

Student Difficulties with Linearity and Linear Functions and Teachers' Understanding of Student Difficulties, by Valentina Postelnicu. A Dissertation Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy. Approved April 2011 by the Graduate Supervisory Committee: Carole Greenes, Chair; Victor Pambuccian; Finbarr Sloane. ARIZONA STATE UNIVERSITY, May 2011. ABSTRACT. The focus of the study was to identify secondary school students' difficulties with aspects of linearity and linear functions, and to assess their teachers' understanding of the nature of the difficulties experienced by their students. A cross-sectional study with 1561 Grades 8-10 students enrolled in mathematics courses from Pre-Algebra to Algebra II, and their 26 mathematics teachers, was employed. All participants completed the Mini-Diagnostic Test (MDT) on aspects of linearity and linear functions, ranked the MDT problems by perceived difficulty, and commented on the nature of the difficulties. Interviews were conducted with 40 students and 20 teachers. A cluster analysis revealed the existence of two groups of students: Group 0, enrolled in courses below or at their grade level, and Group 1, enrolled in courses above their grade level. A factor analysis confirmed the importance of slope and the Cartesian connection for student understanding of linearity and linear functions. There was little variation in student performance on the MDT across grades. Student performance on the MDT increased with more advanced courses, mainly due to Group 1 student performance. The most difficult problems were those requiring identification of slope from the graph of a line. That difficulty persisted across grades, mathematics courses, and performance groups (Groups 0 and 1).

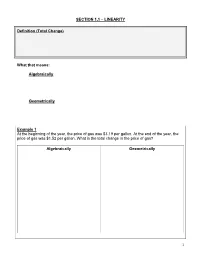

LINEARITY Definition

SECTION 1.1 – LINEARITY

Definition (Total Change). What that means: Algebraically; Geometrically.

Example 1. At the beginning of the year, the price of gas was $3.19 per gallon. At the end of the year, the price of gas was $1.52 per gallon. What is the total change in the price of gas? Algebraically; Geometrically.

Definition (Average Rate of Change). What that means: Algebraically; Geometrically.

Example 2. John collects marbles. After one year, he had 60 marbles and after 5 years, he had 140 marbles. What is the average rate of change of marbles with respect to time? Algebraically; Geometrically.

Definition (Linearly Related, Linear Model)

Example 3. On an average summer day in a large city, the pollution index at 8:00 A.M. is 20 parts per million, and it increases linearly by 15 parts per million each hour until 3:00 P.M. Let P be the amount of pollutants in the air x hours after 8:00 A.M.
a.) Write the linear model that expresses P in terms of x.
b.) What is the air pollution index at 1:00 P.M.?
c.) Graph the equation P for 0 ≤ x ≤ 7.

SECTION 1.2 – GEOMETRIC & ALGEBRAIC PROPERTIES OF A LINE

Definition (Slope). What that means: Algebraically; Geometrically.

Definition (Graph of an Equation)

Example 1. Graph the line containing the point P with slope m.
a.) P = (1, 1) & m = −3
b.) P = (2, 1) & m = 1/2
c.) P = (−1, −3) & m = 3/5
d.) P = (0, −2) & m = −2/3

Definition (Slope-Intercept Form)

Definition (Point-Slope Form)

Example 2. Find the equation of the line through the points P and Q.
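The three Section 1.1 examples above reduce to short calculations, sketched here in Python. The worksheet leaves the answers blank, so the working is supplied for illustration; it assumes that "after one year" and "after 5 years" mean t = 1 and t = 5, and that the linear model is P = 20 + 15x. All function and variable names are hypothetical.

```python
# Example 1: total change = final value minus initial value.
price_start, price_end = 3.19, 1.52
total_change = price_end - price_start  # about -1.67 dollars per gallon


# Example 2: average rate of change = change in output / change in input.
def average_rate_of_change(t0, y0, t1, y1):
    return (y1 - y0) / (t1 - t0)


marble_rate = average_rate_of_change(1, 60, 5, 140)  # 20.0 marbles per year


# Example 3: linear model with initial value 20 and slope 15,
# valid from 8:00 A.M. (x = 0) to 3:00 P.M. (x = 7).
def pollution_index(x):
    if not 0 <= x <= 7:
        raise ValueError("model only stated for 0 <= x <= 7")
    return 20 + 15 * x


index_at_1pm = pollution_index(5)  # 1:00 P.M. is 5 hours after 8:00 A.M.
```

The negative total change in Example 1 records a price drop, and the slope of 15 in Example 3 is exactly the average rate of change over any interval, which is what makes the model linear.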

The Gradient System. Understanding Gradients from an EM Perspective: (Gradient Linearity, Eddy Currents, Maxwell Terms & Peripheral Nerve Stimulation)

The Gradient System. Understanding Gradients from an EM Perspective: (Gradient Linearity, Eddy Currents, Maxwell Terms & Peripheral Nerve Stimulation). Franz Schmitt, Siemens Healthcare, Business Unit MR, Karl Schall Str. 6, 91054 Erlangen, Germany. Email: [email protected]. 1 Introduction. Since the introduction of magnetic resonance imaging (MRI) as a commercially available diagnostic tool in 1984, dramatic improvements have been achieved in all features defining image quality, such as resolution, signal to noise ratio (SNR), and speed. Initially, spin echo (SE)(1) images containing 128x128 pixels were measured in several minutes. Nowadays, the standard matrix size for musculoskeletal and neuro studies using SE based techniques is 512x512, with similar imaging times. Additionally, the introduction of echo planar imaging (EPI)(2,3) techniques has made it possible to acquire 128x128 images, as produced with earlier MRI scanners, in roughly 100 ms. This improvement in resolution and speed is only possible through improvements in the gradient hardware of recent MRI scanner generations. In 1984, typical values for the gradients were in the range of 1 - 2 mT/m at rise times of 1-2 ms. Over the last decade, amplitudes and rise times have changed by orders of magnitude. Present gradient technology allows the application of gradient pulses up to 50 mT/m (for whole-body applications) with rise times down to 100 µs. Using dedicated gradient coils, such as head-insert gradient coils, even higher amplitudes and shorter rise times are feasible. In this presentation we demonstrate what modern high-performance gradient systems look like and how components of an MR system have to correlate with one another to guarantee maximum performance.

Chapter 4. Linear Input/Output Systems

Chapter 4: Linear Input/Output Systems. This chapter provides an introduction to linear input/output systems, a class of models that is the focus of the remainder of the text. 4.1 Introduction. In Chapters ?? and ?? we considered construction and analysis of differential equation models for physical systems. We placed very few restrictions on these systems other than basic requirements of smoothness and well-posedness. In this chapter we specialize our results to the case of linear, time-invariant, input/output systems. This important class of systems is one for which a wealth of analysis and synthesis tools is available, and hence it has found great utility in a wide variety of applications. What is a linear system? Recall that a function $F : \mathbb{R}^p \to \mathbb{R}^q$ is said to be linear if it satisfies the following property:

$$F(\alpha x + \beta y) = \alpha F(x) + \beta F(y), \qquad x, y \in \mathbb{R}^p, \quad \alpha, \beta \in \mathbb{R}. \qquad (4.1)$$

This equation implies that the function applied to the sum of two vectors is the sum of the function applied to the individual vectors, and that the result of applying $F$ to a scaled vector is given by scaling the result of applying $F$ to the original vector. Input/output systems are described in a similar manner. Namely, we wish to capture the notion that if we apply two inputs $u_1$ and $u_2$ to a dynamical system and obtain outputs $y_1$ and $y_2$, then the result of applying the sum, $u_1 + u_2$, would give $y_1 + y_2$ as the output.
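Property (4.1) can be probed numerically by evaluating both sides at random points. The sketch below is an illustration only: the helper `looks_linear`, the tolerance, and the example maps are assumptions, and passing such a test is necessary for linearity but does not prove it.

```python
import random


def apply_matrix(A, x):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def looks_linear(F, p, trials=100, tol=1e-9, seed=0):
    """Check F(a*x + b*y) == a*F(x) + b*F(y) on random samples from R^p."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(-1, 1) for _ in range(p)]
        y = [rng.uniform(-1, 1) for _ in range(p)]
        a, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
        lhs = F([a * xi + b * yi for xi, yi in zip(x, y)])
        rhs = [a * u + b * v for u, v in zip(F(x), F(y))]
        if any(abs(l - r) > tol for l, r in zip(lhs, rhs)):
            return False
    return True


A = [[1.0, 2.0], [0.0, -1.0], [3.0, 0.5]]  # a map from R^2 to R^3


def matrix_map(x):
    return apply_matrix(A, x)


def affine_map(x):
    # Linear part plus a constant shift: fails (4.1).
    return [v + 1.0 for v in apply_matrix(A, x)]


matrix_is_linear = looks_linear(matrix_map, 2)  # True
affine_is_linear = looks_linear(affine_map, 2)  # False
```

The affine map fails because the shift contributes 1 on the left-hand side but $\alpha + \beta$ on the right, which differ unless $\alpha + \beta = 1$.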

Fundamental Concepts: Linearity and Homogeneity

Fundamental Concepts: Linearity and Homogeneity. This is probably the most abstract section of the course, and also the most important, since the procedures followed in solving PDEs will be simply a bewildering welter of magic tricks to you unless you learn the general principles behind them. We have already seen the tricks in use in a few examples; it is time to extract and formulate the principles. (These ideas will already be familiar if you have had a good linear algebra course.)

Linear equations and linear operators. I think that you already know how to recognize linear and nonlinear equations, so let's look at some examples before I give the official definition of "linear" and discuss its usefulness.

Algebraic equations. Linear: the system $x + 2y = 0$, $x - 3y = 1$. Nonlinear: $x^5 = 2x$.

Ordinary differential equations. Linear: $\dfrac{dy}{dt} + t^3 y = \cos 3t$. Nonlinear: $\dfrac{dy}{dt} = t^2 + e^y$.

Partial differential equations. Linear: $\dfrac{\partial u}{\partial t} = \dfrac{\partial^2 u}{\partial x^2}$. Nonlinear: $\dfrac{\partial u}{\partial t} = \left(\dfrac{\partial u}{\partial x}\right)^2$.

What distinguishes the linear equations from the nonlinear ones? The most visible feature of the linear equations is that they involve the unknown quantity (the dependent variable, in the differential cases) only to the first power. The unknown does not appear inside transcendental functions (such as sin and ln), or in a denominator, or squared, cubed, etc. This is how a linear equation is usually recognized by eye. Notice that there may be terms (like $\cos 3t$ in one example) which don't involve the unknown at all. Also, as the same example term shows, there's no rule against nonlinear functions of the independent variable.
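The "first power" rule of thumb can be checked against the superposition property for the two ODE examples, $dy/dt + t^3 y = \cos 3t$ and $dy/dt = t^2 + e^y$. In the sketch below, the operator names $L$ and $N$ are introduced only for this illustration; they collect the terms that involve the unknown $y$, so the two equations read $L[y] = \cos 3t$ and $N[y] = t^2$.

```latex
\begin{align*}
L[y] &:= \frac{dy}{dt} + t^3 y, \\
L[\alpha y_1 + \beta y_2]
  &= \frac{d}{dt}(\alpha y_1 + \beta y_2) + t^3(\alpha y_1 + \beta y_2) \\
  &= \alpha\Big(\frac{dy_1}{dt} + t^3 y_1\Big)
   + \beta\Big(\frac{dy_2}{dt} + t^3 y_2\Big)
   = \alpha L[y_1] + \beta L[y_2], \\[4pt]
N[y] &:= \frac{dy}{dt} - e^{y}, \\
N[2y] &= 2\frac{dy}{dt} - e^{2y}
  \;\neq\; 2\frac{dy}{dt} - 2e^{y} = 2N[y]
  \quad \text{unless } e^{y} = 2.
\end{align*}
```

The coefficient $t^3$ is a nonlinear function of the independent variable, yet it passes through the calculation untouched, whereas the exponential of the unknown breaks scaling immediately.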

2 Linear Systems

2 LINEAR SYSTEMS. We will discuss what we mean by a linear time-invariant system, and then consider several useful transforms. 2.1 Definition of a System. In short, a system is any process or entity that has one or more well-defined inputs and one or more well-defined outputs. Examples of systems include a simple physical object obeying Newtonian mechanics, and the US economy! Systems can be physical, or we may talk about a mathematical description of a system. The point of modeling is to capture in a mathematical representation the behavior of a physical system. As we will see, such representation lends itself to analysis and design, and certain restrictions such as linearity and time-invariance open a huge set of available tools. We often use a block diagram form to describe systems, and in particular their interconnections:

[Block diagrams: (1) a single system F with input u(t) and output y(t); (2) systems F and G connected in series, with input u(t) and output y(t).]

In the second case shown, y(t) = G[F[u(t)]]. Looking at structure now and starting with the most abstracted and general case, we may write a system as a function relating the input to the output; as written these are both functions of time: y(t) = F[u(t)]. The system captured in F can be a multiplication by some constant factor (an example of a static system) or a hopelessly complex set of differential equations (an example of a dynamic system). If we are talking about a dynamical system, then by definition the mapping from u(t) to y(t) is such that the current value of the output y(t) depends on the past history of u(t).
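The series connection y(t) = G[F[u(t)]] and the static/dynamic distinction can be sketched with systems modelled as functions on sampled signals. Representing u(t) as a list of samples is an assumption made here for simplicity (the text works in continuous time), and the names `gain`, `accumulator`, and `series` are hypothetical.

```python
def gain(k):
    """Static system: each output sample depends only on the current input."""
    return lambda u: [k * sample for sample in u]


def accumulator(u):
    """Dynamic system: each output sample is the running sum of past inputs."""
    out, total = [], 0.0
    for sample in u:
        total += sample
        out.append(total)
    return out


def series(F, G):
    """Series connection of two systems: y = G[F[u]]."""
    return lambda u: G(F(u))


u = [1.0, 2.0, 3.0]
y = series(gain(2.0), accumulator)(u)  # [2.0, 6.0, 12.0]
```

Changing the first input sample changes every later output of the accumulator, which is the dependence on past history that distinguishes a dynamic system from a static gain.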

Vector Space Concepts ECE 275A – Statistical Parameter Estimation

Vector Space Concepts. ECE 275A – Statistical Parameter Estimation. Ken Kreutz-Delgado, ECE Department, UC San Diego. Ken Kreutz-Delgado (UC San Diego) ECE 275A Fall 2012 1 / 24

Heuristic Concept of a Linear Vector Space. Many important physical, engineering, biological, sociological, economic, scientific quantities, which we call vectors, have the following conceptual properties.

There exists a natural or conventional "zero point" or "origin", the zero vector, $0$.

Vectors can be added in a symmetric commutative and associative manner to produce other vectors: $z = x + y = y + x$, where $x, y, z$ are vectors (commutativity); $x + y + z \triangleq x + (y + z) = (x + y) + z$, where $x, y, z$ are vectors (associativity).

Vectors can be scaled by the (symmetric) multiplication of scalars (scalar multiplication) to produce other vectors: $z = \alpha x = x \alpha$, where $x, z$ are vectors and $\alpha$ is a scalar (scalar multiplication of $x$ by $\alpha$).

The scalars can be members of any fixed field (such as the field of rational polynomials). We will work only with the fields of real and complex numbers.

Each vector $x$ has an additive inverse, $-x = (-1)x$: $x - x \triangleq x + (-1)x = x + (-x) = 0$.

Formal Concept of a Linear Vector Space. A Vector Space, $\mathcal{X}$, is a set of vectors, $x \in \mathcal{X}$, over a field, $\mathcal{F}$, of scalars. If the scalars are the field of real numbers, then we have a Real Vector Space. If the scalars are the field of complex numbers, then we have a Complex Vector Space. Any vector $x \in \mathcal{X}$ can be multiplied by an arbitrary scalar $\alpha$ to form $\alpha x = x \alpha \in \mathcal{X}$.
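The heuristic properties listed above can be spot-checked on a concrete example. The sketch below uses pairs of exact rationals as vectors; the helpers `add` and `scale` and the sample vectors are illustrative assumptions, and checking a few instances does not prove the axioms in general.

```python
from fractions import Fraction


def add(x, y):
    """Componentwise vector addition."""
    return tuple(a + b for a, b in zip(x, y))


def scale(alpha, x):
    """Multiplication of the vector x by the scalar alpha."""
    return tuple(alpha * a for a in x)


x = (Fraction(1), Fraction(-2, 3))
y = (Fraction(4, 5), Fraction(7))
z = (Fraction(-3, 2), Fraction(1, 9))
zero = (Fraction(0), Fraction(0))
alpha = Fraction(5, 7)

checks = {
    "zero vector": add(x, zero) == x,
    "commutativity": add(x, y) == add(y, x),
    "associativity": add(x, add(y, z)) == add(add(x, y), z),
    "additive inverse": add(x, scale(-1, x)) == zero,
    "scaling distributes": scale(alpha, add(x, y))
                           == add(scale(alpha, x), scale(alpha, y)),
}
```

Exact rationals are used instead of floats so that the equalities hold exactly rather than up to rounding error.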

11 Elements of the General Theory of the Linear ODE

11 Elements of the general theory of the linear ODE. In the last lecture we looked for a solution to the second order linear homogeneous ODE with constant coefficients in the form $y(t) = C_1 y_1(t) + C_2 y_2(t)$, where $C_1, C_2$ are arbitrary constants and $y_1(t)$ and $y_2(t)$ are some particular solutions to our problem; the only condition is that one of them is not a multiple of the other (this prohibits, e.g., using $y_1(t) = 0$, which, as we know, is a solution). Using the ansatz $y(t) = e^{\lambda t}$, we actually were able to produce these two solutions $y_1(t)$ and $y_2(t)$ (an ansatz is an educated guess). However, the questions remain: Did our assumption actually lead to a legitimate solution? And how do we know that all possible solutions to our problem can be represented by our formula? In this lecture I will try to answer these questions. Since no advantage is gained by restricting the attention to the case of constant coefficients, I will be talking here about arbitrary second order linear ODE. 11.1 General theory. Linear operators. Definition 1. The equation of the form

$$y'' + p(t)\,y' + q(t)\,y = f(t), \qquad (1)$$

where $p(t), q(t), f(t)$ are given functions, is called second order linear ODE. This ODE is homogeneous if $f(t) \equiv 0$ and nonhomogeneous in the opposite case. If $p(t), q(t)$ are constant, then this equation is called linear ODE with constant coefficients. For equation (1) the following theorem of uniqueness and existence of the solution to the IVP holds.
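The first question raised above, whether the exponential ansatz really yields a solution, can be checked numerically in the constant-coefficient homogeneous case. Substituting $y = e^{\lambda t}$ into $y'' + p y' + q y = 0$ gives $\lambda^2 + p\lambda + q = 0$. The sketch below covers only two distinct real roots; the function names and the example coefficients are assumptions chosen for illustration.

```python
import math


def characteristic_roots(p, q):
    """Roots of lambda^2 + p*lambda + q = 0, assuming two distinct real roots."""
    disc = p * p - 4 * q
    if disc <= 0:
        raise ValueError("this sketch only covers two distinct real roots")
    s = math.sqrt(disc)
    return (-p - s) / 2, (-p + s) / 2


def residual(p, q, c1, c2, t):
    """Evaluate y'' + p*y' + q*y for y = c1*exp(l1*t) + c2*exp(l2*t)."""
    l1, l2 = characteristic_roots(p, q)
    e1, e2 = math.exp(l1 * t), math.exp(l2 * t)
    y = c1 * e1 + c2 * e2
    dy = c1 * l1 * e1 + c2 * l2 * e2            # exact first derivative
    d2y = c1 * l1 ** 2 * e1 + c2 * l2 ** 2 * e2  # exact second derivative
    return d2y + p * dy + q * y


# Example: y'' - 3y' + 2y = 0 has characteristic roots 1 and 2.
roots = characteristic_roots(-3.0, 2.0)
r = residual(-3.0, 2.0, c1=2.0, c2=-5.0, t=0.7)  # zero up to rounding error
```

The residual vanishes for any choice of `c1` and `c2`, which is the superposition property of the homogeneous equation; whether every solution has this form is the second question, and it needs the existence and uniqueness theorem rather than a numerical check.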