Computational Analysis of World Music Corpora

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


SEM 62 Annual Meeting

SEM 62nd Annual Meeting, Denver, Colorado, October 26 – 29, 2017. Hosted by University of Denver, University of Colorado Boulder, and Colorado College. SEM 2017 Annual Meeting Table of Contents: Sponsors; Committees, Board, Staff, and Council; Welcome Messages; Exhibitors and Advertisers; General Information; Charles Seeger Lecture; Schedule at a Glance -

Chapter 2. Corpus-Based Musicology. 11

UCC Library and UCC researchers have made this item openly available. Please let us know how this has helped you. Thanks!
Title: A programmer's environment for music analysis
Author(s): Ó Maidín, Donncha Seán
Publication date: 1995
Original citation: Ó Maidín, D. S. 1995. A programmer's environment for music analysis. PhD Thesis, University College Cork.
Type of publication: Doctoral thesis
Link to publisher's version: http://library.ucc.ie/record=b1230585~S0 http://dx.doi.org/10.13140/2.1.3387.2969
Access to the full text of the published version may require a subscription.
Rights: © 1995, Donncha S. Ó Maidín http://creativecommons.org/licenses/by-nc-nd/3.0/
Embargo information: No embargo required
Item downloaded from: http://hdl.handle.net/10468/1629
Downloaded on: 2021-10-05T12:42:50Z
A Programmer’s Environment for Music Analysis by Donncha Seán Ó Maidín, M.Sc., B.Mus. Submitted in May 1995 to The National University of Ireland for the degree of Doctor of Philosophy and revised in September 1995. This thesis was registered in The Department of Music, Faculty of Arts, University College, Cork, and was completed under the internal supervision of Professor David Cox and under the external examination of Professor Anthony Pople of the University of Lancaster. Copyright Donncha Ó Maidín 1995.
scoreView.
Table of Contents: Acknowledgements. Chapter 1. Introduction. 1.1 Overview. 1.2 Contribution of this Study to the Field of Corpus-Based Musicology. 1.3 Goals. 1.3.1 Informational Completeness. 1.3.2 Informational Objectivity. 1.3.3 Multi-Level. -

Music Information Retrieval and Musicology: What Do the Two Have in Common?

Music Information Retrieval and Musicology: What do the two have in common? Frans Wiering, Information and Computing Sciences, Utrecht University. Musical Heritage, Oslo, 11 December 2010.
Topic: two disciplines (Music Information Retrieval, musicology); what is the relationship?; meaningful to musicology?; implications for digital editions; data-richness.
Preliminary descriptions: Music Information Retrieval (MIR): delivering the right (digital) music in answer to a user need; right: matching the user’s taste, expertise, emotional state, activity, and cultural, social, physical and musical environment. Musicology: understanding music in its context, e.g. personal, social, economic, historical, theoretical. MIR versus musicology: delivery vs. understanding; generic vs. specific.
Music: universe of music: > 25,000,000 unique items; individuals can recognise several 1000s of items. Different views of music: object, digital or otherwise; product; work of art; process in time; mental process; social phenomenon.
MIR as a discipline: emerged in 1960s (Kassler 1966), maturing since late 1990s. Definition (Downie 2004): a multidisciplinary research endeavor that strives to develop innovative content-based searching schemes, novel interfaces, and evolving networked delivery mechanisms in an effort to make the world’s vast store of music accessible to all. Contributing areas (Futrelle and Downie 2002): computer science, information retrieval; audio engineering, digital sound processing; musicology, music theory; library science; cognitive science, -

An Introduction to Music Studies Pdf, Epub, Ebook

AN INTRODUCTION TO MUSIC STUDIES PDF, EPUB, EBOOK Jim Samson,J. P. E. Harper-Scott | 310 pages | 31 Jan 2009 | CAMBRIDGE UNIVERSITY PRESS | 9780521603805 | English | Cambridge, United Kingdom An Introduction to Music Studies PDF Book To see what your friends thought of this book, please sign up. An analysis of sociomusicology, its issues; and the music and society in Hong Kong. Critical Entertainments: Music Old and New. Other Editions 6. The examination measures knowledge of facts and terminology, an understanding of concepts and forms related to music theory for example: pitch, dynamics, rhythm, melody , types of voices, instruments, and ensembles, characteristics, forms, and representative composers from the Middle Ages to the present, elements of contemporary and non-Western music, and the ability to apply this knowledge and understanding in audio excerpts from musical compositions. An Introduction to Music Studies by J. She has been described by the Harvard Gazette as "one of the world's most accomplished and admired music historians". The job market for tenure track professor positions is very competitive. You should have a passion for music and a strong interest in developing your understanding of music and ability to create it. D is the standard minimum credential for tenure track professor positions. Historical studies of music are for example concerned with a composer's life and works, the developments of styles and genres, e. Mus or a B. For other uses, see Musicology disambiguation. More Details Refresh and try again. Goodreads helps you keep track of books you want to read. These models were established not only in the field of physical anthropology , but also cultural anthropology. -

Iran 2019 Human Rights Report

IRAN 2019 HUMAN RIGHTS REPORT EXECUTIVE SUMMARY The Islamic Republic of Iran is an authoritarian theocratic republic with a Shia Islamic political system based on velayat-e faqih (guardianship of the jurist). Shia clergy, most notably the rahbar (supreme leader), and political leaders vetted by the clergy dominate key power structures. The supreme leader is the head of state. The members of the Assembly of Experts are nominally directly elected in popular elections. The assembly selects and may dismiss the supreme leader. The candidates for the Assembly of Experts, however, are vetted by the Guardian Council (see below) and are therefore selected indirectly by the supreme leader himself. Ayatollah Ali Khamenei has held the position since 1989. He has direct or indirect control over the legislative and executive branches of government through unelected councils under his authority. The supreme leader holds constitutional authority over the judiciary, government-run media, and other key institutions. While mechanisms for popular election exist for the president, who is head of government, and for the Islamic Consultative Assembly (parliament or majles), the unelected Guardian Council vets candidates, routinely disqualifying them based on political or other considerations, and controls the election process. The supreme leader appoints half of the 12-member Guardian Council, while the head of the judiciary (who is appointed by the supreme leader) appoints the other half. Parliamentary elections held in 2016 and presidential elections held in 2017 were not considered free and fair. The supreme leader holds ultimate authority over all security agencies. Several agencies share responsibility for law enforcement and maintaining order, including the Ministry of Intelligence and Security and law enforcement forces under the Interior Ministry, which report to the president, and the Islamic Revolutionary Guard Corps (IRGC), which reports directly to the supreme leader. -

Postdoctoral Research Fellowship in Computational Musicological Analysis

Postdoctoral Research Fellowship in Computational Musicological Analysis Job description A Postdoctoral Research Fellowship (SKO1352) in Computational Musicological Analysis is available at RITMO Centre for Interdisciplinary Studies in Rhythm, Time and Motion at the University of Oslo. RITMO is a Centre of Excellence funded by the Research Council of Norway. This interdisciplinary centre focuses on rhythm as a structuring mechanism for the temporal dimensions of human life. Methods from musicology, psychology, neuroscience, and informatics are combined to study rhythm as a fundamental property that shapes and underpins human cognition, behavior and cultural expressions. For more information, see www.uio.no/ritmo. All RITMO researchers are co-located and work in a unique interdisciplinary constellation, with world-leading competence in musicology, psychology and informatics. It is expected that all members of the center contribute to the general activities and collaborations within RITMO. The researchers have access to state-of-the-art facilities in sound/video recording, motion capture, eye tracking, physiological measurements, various types of brain imaging (EEG, fMRI), and rapid prototyping and robotics laboratories. More about the position The postdoctoral fellow will take part in the MIRAGE project, aimed at designing and implementing a comprehensive computational framework for music analysis. The objective is to generate rich and detailed descriptions of music, encompassing a large range of dimensions, including low-level features, mid-level structures and high-level concepts. The envisioned methodology consists of close interaction between automated music transcription and detailed musicological analysis of the transcriptions. Significant effort will be dedicated to the design of applications of value for musicology, music cognition and the general public. -

The Computational Attitude in Music Theory

The Computational Attitude in Music Theory Eamonn Bell Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Graduate School of Arts and Sciences COLUMBIA UNIVERSITY 2019 © 2019 Eamonn Bell All rights reserved ABSTRACT The Computational Attitude in Music Theory Eamonn Bell Music studies’s turn to computation during the twentieth century has engendered particular habits of thought about music, habits that remain in operation long after the music scholar has stepped away from the computer. The computational attitude is a way of thinking about music that is learned at the computer but can be applied away from it. It may be manifest in actual computer use, or in invocations of computationalism, a theory of mind whose influence on twentieth-century music theory is palpable. It may also be manifest in more informal discussions about music, which make liberal use of computational metaphors. In Chapter 1, I describe this attitude, the stakes for considering the computer as one of its instruments, and the kinds of historical sources and methodologies we might draw on to chart its ascendance. The remainder of this dissertation considers distinct and varied cases from the mid-twentieth century in which computers or computationalist musical ideas were used to pursue new musical objects, to quantify and classify musical scores as data, and to instantiate a generally music-structuralist mode of analysis. I present an account of the decades-long effort to prepare an exhaustive and accurate catalog of the all-interval twelve-tone series (Chapter 2). This problem was first posed in the 1920s but was not solved until 1959, when the composer Hanns Jelinek collaborated with the computer engineer Heinz Zemanek to jointly develop and run a computer program. -
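The cataloguing problem mentioned in this abstract rests on a simple test: a twelve-tone series is "all-interval" when the eleven intervals between successive pitch classes are all different. The sketch below only illustrates that test; it is not the program developed by Jelinek and Zemanek, and the function names and the example rows are assumptions chosen for illustration.

```python
def interval_sequence(row):
    """Ascending intervals (mod 12) between successive pitch classes."""
    return [(b - a) % 12 for a, b in zip(row, row[1:])]


def is_twelve_tone_row(row):
    """True if the row uses each pitch class 0..11 exactly once."""
    return sorted(row) == list(range(12))


def is_all_interval_series(row):
    """True if the row is a twelve-tone row whose 11 successive
    intervals are exactly the interval classes 1..11, each used once."""
    return (is_twelve_tone_row(row)
            and sorted(interval_sequence(row)) == list(range(1, 12)))


# Illustrative inputs (assumptions, not taken from the dissertation):
# a "wedge" row that fans outward from 0, and the chromatic scale.
wedge_row = [0, 11, 1, 10, 2, 9, 3, 8, 4, 7, 5, 6]
chromatic_row = list(range(12))

if __name__ == "__main__":
    print(interval_sequence(wedge_row))
    print(is_all_interval_series(wedge_row))
    print(is_all_interval_series(chromatic_row))
```

An exhaustive catalogue would wrap a test like this in a search over candidate rows; that search is left out here because the abstract does not describe how it was carried out.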

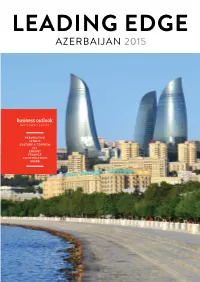

Azerbaijan Investment Guide 2015

PERSPECTIVE, SPORTS, CULTURE & TOURISM, ICT, ENERGY, FINANCE, CONSTRUCTION, GUIDE
Contents
HE Ilham Aliyev: HE Ilham Aliyev, President of Azerbaijan talks about the future for Azerbaijan’s economy, its sporting development and cultural tolerance.
Perspective: What is modern Azerbaijan? MICE tourism, economic diversification, international relations and building for the future.
Sports: Find out how Azerbaijan is entering the world of global sporting events to improve its international image, and boost tourism.
Culture & Tourism: Discover Azerbaijan’s hospitality, art, music, and tolerance for other cultures both in the capital Baku and beyond.
ICT: Rapid development of the sector will see Azerbaijan become one of the regional leaders in this vital area of the economy.
Energy: The Caspian powerhouse is entering stage two of its oil and gas development plans, with eyes firmly on the European market.
Finance: Diversifying the sector is key for the country’s development, see how PASHA Holdings are at the forefront of this move.
Construction: Building the monuments that will come to define Azerbaijan’s past, present and future in all its glory.
Guide: All you need to know about Baku and beyond in one place. Venture forth and explore the ‘Land of Fire’.
Associate Publishers: Nicole Howarth, John Maratheftis. Editor: Benjamin Hewison. Art Director: Jessica Doria. Project Director: Phil Smith. Project Coordinator: Anna Koerner. Contributing Writers: Mark Elliott, Carmen Valache, Nigar Orujova. Cover Image: © Ramil Aliyev / shutterstock.com
2nd floor, Berkeley Square House, London W1J 6BD, United Kingdom. T: +44207 887 6105 E: [email protected]
Leading Edge Azerbaijan 2015
Interview between Leading Edge and His Excellency Ilham Aliyev, President of the Republic of Azerbaijan
LE: Your Excellency, in October 2013 you received strong support from the people of Azerbaijan and were re-elect-
reserves that amount to over US $53 billion, which is a very favourable figure when compared to the rest of the world. -

Music Theory - Why Bother? on Music Theory in Relation to Music Information Processing

Music Theory - Why bother? On Music Theory in relation to Music Information Processing. Sven Ahlbäck, Doremir Music Research AB, Prof. Royal College of Music, Stockholm. This project has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 765068.
• My background
• What is this about?
• Why is this in the summer school?
There is no such thing as a conceptual vacuum.
Agenda
• No, this will not be a full course or crash course in Music Theory
• Aim: introduce some issues, discuss basic concepts and give some overview
• Today: Introduction to approaches in the field of music theory and the possible relevance for MIR
• Thursday: Pitch and Tonality - Sven Ahlbäck
• How to study a music culture: Indian Classical music, by Rafael Caro
• Friday: Rhythm & Structure - Sven Ahlbäck
Topics for the workshops in afternoon sessions are connected
• Harmony
• Melodic similarity
• Rhythm
An example manual learning task: Transcription using your built-in biological system: listen, sing, notate what we sing, notate what we hear. 200 300 200 300 200: intervals in cents (1/100 semitone) (“anhemitonic pentatonic scale”). How did it really sound? Examples: Equidistant pentatonic pitch set, court music, Uganda, Moçambique: 240 240 240 240. Studies of tuning & perception (Cooke 1970, Wachsmann 1971, Kubik 1960, Valkare 2016 etc.). What happened? Ex. Tongoli Fumbo Adhola Music: Fur ber Tongoli harp. So what’s the ground truth here? “There are only semitones and whole tones”. Our perception is influenced by music theoretical concepts. We hear what we know. How does this relate to MIR? If models we use model human cognition, and human cognition is related to concepts originating from music theory, it might not be out of the way to know a little about it? You might want to contribute to knowledge about music. -
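The interval figures quoted in this excerpt (200 300 200 300 200 versus steps of 240 cents) can be compared with a few lines of arithmetic. The following is a minimal sketch, not part of the original slides: the function names are hypothetical, and a fifth 240-cent step is assumed in order to close the octave, since the excerpt lists only four.

```python
import math


def cents_to_ratio(cents):
    """Frequency ratio for an interval in cents (1200 cents = one octave)."""
    return 2.0 ** (cents / 1200.0)


def ratio_to_cents(ratio):
    """Interval size in cents for a frequency ratio."""
    return 1200.0 * math.log2(ratio)


def scale_positions(steps):
    """Cumulative pitch positions in cents above the starting note."""
    positions = [0]
    for step in steps:
        positions.append(positions[-1] + step)
    return positions


def deviations(steps_a, steps_b):
    """Position-by-position difference in cents between two step patterns."""
    return [a - b for a, b in
            zip(scale_positions(steps_a), scale_positions(steps_b))]


# Step patterns from the excerpt; the fifth 240 is an assumption (see above).
anhemitonic = [200, 300, 200, 300, 200]
equidistant = [240, 240, 240, 240, 240]

if __name__ == "__main__":
    print(scale_positions(anhemitonic))
    print(scale_positions(equidistant))
    print(deviations(equidistant, anhemitonic))
```

Under these assumptions the two patterns never differ by more than 40 cents at any scale position, which gives a sense of how an equidistant tuning could be notated as whole tones and minor thirds by a listener who expects them.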

CT 11 Final:Layout 1

Iran Takes on the World. By Jamsheed K. Choksy. The Islamic Republic of Iran is today challenging the world. The Iranian leadership’s appetite for power is growing, for they have become thoroughly convinced that no outside power, the U.S. included, will derail their rise to regional and even global prominence. “Whether you like it or not,” the Iranian cleric and politician Ayatollah Ahmad Khatami, an influential figure and on-and-off mentor to Iranian President Mahmoud Ahmadinejad, publicly boasted to the U.S., “you have to regard Iran as a great power in the political sphere. The people of Iran have realized there is nothing you can do to us now or will be capable of doing [in the future]. So rather than using all your resources in failed attempts to oppose Iran, you should work with us.”1 Khatami’s statement, like many other recent pronouncements made by the Islamic Republic’s leaders, underscores why Iran has not complied with years of American demands for full transparency in its nuclear programs, for putting a halt to its sponsorship of terrorism and propagation of militant Islam or Islamism globally, and for cooperation in regional affairs. Simply put, the Islamic Republic’s ruling politicians no longer fear America; they believe the U.S. and its allies have lost the political will to preserve the current order. Tehran, therefore, no longer worries about the repercussions of pursuing an ever-more ambitious policy aimed at refashioning the international order and extending Iranian power and influence. Students of history know this is not the first time a rising Iran has sought to dominate its neighbors and the world beyond. -

Paradigms from Muslim Contexts

eCommons@AKU. Exploring Muslim Contexts, ISMC Series, 1-1-2009. Volume 1: The Challenge of Pluralism: Paradigms from Muslim Contexts. Abdou Filali-Ansary, Editor; Sikeena Karmali Ahmed, Editor. Follow this and additional works at: https://ecommons.aku.edu/uk_ismc_series_emc Part of the Islamic World and Near East History Commons, and the Public Affairs, Public Policy and Public Administration Commons. Recommended Citation: Filali-Ansary, A., Ahmed, S. (Eds.). (2009). Volume 1: The Challenge of Pluralism: Paradigms from Muslim Contexts Vol. 1, p. 128. Available at: https://ecommons.aku.edu/uk_ismc_series_emc/5 The Challenge of Pluralism. Exploring Muslim Contexts. Series Editor: Abdou Filali-Ansary. Books in the series include: Development Models in Muslim Contexts: Chinese, “Islamic” and Neo-Liberal Alternatives, edited by Robert Springborg; The Challenge of Pluralism: Paradigms from Muslim Contexts, edited by Abdou Filali-Ansary and Sikeena Karmali Ahmed; The Possibility of Pluralism, edited by A. C. Grayling. The Challenge of Pluralism: Paradigms from Muslim Contexts. Edited by Abdou Filali-Ansary and Sikeena Karmali Ahmed. Edinburgh University Press in association with The Aga Khan University Institute for the Study of Muslim Civilisations. The opinions expressed in this volume are those of the authors and do not necessarily reflect those of the Aga Khan University, Institute for the Study of Muslim Civilisations. © Editorial matter and organisation -

16Th Sound and Music Computing Conference SMC 2019 (28–31 May 2019, Malaga, Spain)

Applied Sciences, Meeting Report. 16th Sound and Music Computing Conference SMC 2019 (28–31 May 2019, Malaga, Spain). Lorenzo J. Tardón 1,*, Isabel Barbancho 1,*, Ana M. Barbancho 1, Alberto Peinado 2, Stefania Serafin 3 and Federico Avanzini 4. 1 ATIC Research Group, Universidad de Málaga, Andalucía Tech, E.T.S.I. Telecomunicación, 29071 Málaga, Spain; [email protected] 2 Universidad de Málaga, Andalucía Tech, E.T.S.I. Telecomunicación, 29071 Málaga, Spain; [email protected] 3 Multisensory Experience Lab., Aalborg University Copenhagen, 2450 Copenhagen SV, Denmark; [email protected] 4 LIM–Laboratorio di Informatica Musicale, Department of Computer Science, University of Milan, 20133 Milan, Italy; [email protected] * Correspondence: [email protected] (L.J.T.); [email protected] (I.B.) Received: 5 June 2019; Accepted: 9 June 2019; Published: 19 June 2019. Abstract: The 16th Sound and Music Computing Conference (SMC 2019) took place in Malaga, Spain, 28–31 May 2019 and it was organized by the Application of Information and Communication Technologies Research group (ATIC) of the University of Malaga (UMA). The SMC 2019 associated Summer School took place 25–28 May 2019. The First International Day of Women in Inclusive Engineering, Sound and Music Computing Research (WiSMC 2019) took place on 28 May 2019. The SMC 2019 topics of interest included a wide selection of topics related to acoustics, psychoacoustics, music, technology for music, audio analysis, musicology, sonification, music games, machine learning, serious games, immersive audio, sound synthesis, etc. 1. Summer School. 1.1. Arduino and Audio. David Cuartielles, Malmö University, Sweden. The Arduino and audio workshop looks at possible ways to create interactive sound production machines using Arduino boards.